Data volume refers to the sheer amount of data generated, collected, and stored by organizations. It plays a crucial role in analytics, enabling you to derive valuable insights and make informed decisions. As data generation accelerates, managing this volume becomes essential. Tools like FineDataLink, FineReport, and FineBI offer solutions to handle large data volumes efficiently. They provide real-time data integration, dynamic reporting, and self-service analytics. These capabilities help you navigate the challenges and seize the opportunities presented by increasing data volumes.

Defining Data Volume

What is Data Volume?

Data volume refers to the sheer amount of data that you generate, collect, and store within a system. It plays a crucial role in analytics, as it directly impacts the processing power and storage requirements needed for effective analysis. Understanding data volume helps you select the right tools that can scale with your needs and provide meaningful insights from large datasets.

Characteristics of Data Volume

Data volume is characterized by its size, which affects the storage, scalability, and performance of the systems handling such data. Large datasets may require optimized algorithms and storage solutions to ensure efficient processing. The volume of data can range from terabytes (TB) to petabytes (PB) and even exabytes (EB). To put this into perspective:

- A terabyte is 1,000 gigabytes (GB).

- A petabyte is 1,000 terabytes.

- An exabyte is 1,000 petabytes.

These measurements highlight the vastness of data that organizations deal with, especially in fields like genomics and physics, where analyzing enormous datasets is common.

Types of Data Volume

Data volume can be categorized into different types based on its source and nature. Some common types include:

- Structured Data: This type of data is organized and easily searchable, often stored in databases.

- Unstructured Data: This includes data that lacks a predefined format, such as emails, videos, and social media posts.

- Semi-structured Data: This type of data contains elements of both structured and unstructured data, like JSON or XML files.

Understanding these types helps you manage and analyze data more effectively, ensuring that you can derive valuable insights from various data sources.
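To make the distinction concrete, here is a minimal sketch of turning one semi-structured JSON document into structured rows that could be loaded into a database table. The order document and its field names (`order_id`, `customer`, `items`) are hypothetical and chosen only for illustration.

```python
import json

# Hypothetical semi-structured input: nested objects and a variable-length list.
raw = (
    '{"order_id": 17, "customer": {"name": "Ada", "country": "UK"}, '
    '"items": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]}'
)

def flatten_order(document: str) -> list[dict]:
    """Return one flat row per line item, repeating the order-level fields."""
    order = json.loads(document)
    return [
        {
            "order_id": order["order_id"],
            "customer_name": order["customer"]["name"],
            "sku": item["sku"],
            "qty": item["qty"],
        }
        for item in order["items"]
    ]

rows = flatten_order(raw)
```

Each resulting row has the same fixed set of columns, which is what makes structured data easy to query.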

Measuring Data Volume

Measuring data volume is essential for managing and analyzing data efficiently. It involves understanding the size of data sets and the tools required to handle them.

Units of Measurement

Data volume is measured using specific units that indicate the size of data sets. Common units include:

- Bytes: The basic unit of data measurement.

- Kilobytes (KB): 1,000 bytes.

- Megabytes (MB): 1,000 kilobytes.

- Gigabytes (GB): 1,000 megabytes.

- Terabytes (TB): 1,000 gigabytes.

- Petabytes (PB): 1,000 terabytes.

- Exabytes (EB): 1,000 petabytes.

These units help you quantify the amount of data you are dealing with, allowing for better planning and resource allocation.
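The conversions above are simple powers of 1,000, so you can sketch them in a few lines. The helper names `format_bytes` and `to_bytes` below are illustrative, and the code assumes the decimal (1,000-based) units listed above rather than binary (1,024-based) ones.

```python
# Decimal (SI) units, matching the 1,000-based list above.
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB"]

def format_bytes(num_bytes: int) -> str:
    """Express a byte count in the largest listed unit that fits."""
    if num_bytes < 0:
        raise ValueError("byte count must be non-negative")
    value = float(num_bytes)
    for unit in UNITS:
        # Stop at the first unit where the value drops below 1,000,
        # or at the last unit in the list.
        if value < 1000 or unit == UNITS[-1]:
            return f"{value:.2f} {unit}"
        value /= 1000

def to_bytes(amount: float, unit: str) -> int:
    """Convert an amount in the given unit back to bytes."""
    return int(amount * 1000 ** UNITS.index(unit))
```

For example, `to_bytes(1, "PB")` gives 10**15 bytes, and `format_bytes(10**18)` reports one exabyte.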

Tools for Measurement

Several tools can help you measure and manage data volume effectively. These tools provide insights into the size and growth of your data, enabling you to make informed decisions about storage and processing needs. Some popular tools include:

- Database Management Systems (DBMS): These systems help you organize and manage structured data efficiently.

- Data Analytics Platforms: Tools like FineDataLink, FineReport, and FineBI offer solutions for handling large data volumes, providing real-time data integration, dynamic reporting, and self-service analytics.

- Cloud Storage Solutions: These services offer scalable storage options, allowing you to store and access large volumes of data without the need for physical infrastructure.

By utilizing these tools, you can ensure that your data management strategies are aligned with your organization's needs, enabling you to harness the full potential of your data.
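Before you reach for a dedicated platform, you can get a rough first measurement with a short script. The sketch below sums file sizes under a directory using Python's standard library; the function name `directory_size_bytes` is illustrative, and the result reflects file contents only, not filesystem overhead or replication.

```python
import os

def directory_size_bytes(root: str) -> int:
    """Sum the sizes of all regular files under root, skipping symlinks."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total
```

Running this on a schedule and recording the result gives you a simple view of how quickly a dataset is growing.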

The Role of Data Volume in Analytics

Impact on Data Processing

Data volume plays a significant role in how you process data. As the amount of data increases, you must consider several factors to ensure efficient processing.

Data Storage Considerations

When dealing with large volumes of data, storage becomes a critical factor. You need to choose storage solutions that can scale with your data needs. Traditional storage methods may not suffice for big data volumes. Instead, you might explore cloud storage options or distributed databases. These solutions offer scalability and flexibility, allowing you to store vast amounts of data without physical constraints.

Data Processing Speed

The speed at which you process data directly affects your ability to derive timely insights. Large data volumes can slow down processing if not managed properly. To maintain speed, you can employ strategies like in-memory processing and data compression. These techniques help reduce the time it takes to analyze data. Additionally, using scalable architectures and efficient algorithms can further enhance processing speeds, ensuring that you can handle large datasets effectively.

Influence on Data Quality

Data volume also impacts the quality of your data. As you manage larger datasets, maintaining accuracy and precision becomes more challenging.

Accuracy and Precision

With more data, the potential for errors increases. You must ensure that your data remains accurate and precise. This involves implementing robust data validation processes. By doing so, you can minimize errors and maintain the integrity of your data. Accurate data leads to more reliable insights, which are crucial for informed decision-making.
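A validation step can be as simple as a function that checks each record against a few rules and reports what failed. The sketch below assumes hypothetical records with `id`, `amount`, and `email` fields; real rules depend on your own schema.

```python
def validate_record(record: dict) -> list[str]:
    """Return a list of validation errors; an empty list means the record passed."""
    errors = []

    if not isinstance(record.get("id"), int):
        errors.append("id must be an integer")

    amount = record.get("amount")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")

    email = record.get("email")
    if not isinstance(email, str) or "@" not in email:
        errors.append("email must contain '@'")

    return errors
```

Collecting every error, rather than stopping at the first, makes it easier to see which rules fail most often across a large dataset.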

Data Cleaning Challenges

As data volume grows, so do the challenges associated with data cleaning. You need to identify and rectify inconsistencies, duplicates, and errors within your datasets. This process can be time-consuming, but it's essential for maintaining data quality. Automating data cleaning tasks can help you manage these challenges more efficiently. By using advanced tools and techniques, you can streamline the cleaning process, ensuring that your data remains clean and ready for analysis.
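As one example of an automated cleaning task, the sketch below normalizes a key field and drops duplicates and blanks. It assumes hypothetical records keyed by an `email` field; the same pattern applies to any field you treat as an identifier.

```python
def clean_records(records: list[dict]) -> list[dict]:
    """Normalize emails, then drop records with a missing or already-seen email."""
    seen = set()
    cleaned = []
    for record in records:
        # Treat a missing or None email as empty, then normalize case and whitespace.
        email = (record.get("email") or "").strip().lower()
        if not email or email in seen:
            continue
        seen.add(email)
        cleaned.append({**record, "email": email})
    return cleaned
```

The first occurrence of each email is kept, so the order of the input determines which duplicate survives.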

Challenges of Managing Large Data Volume

Handling large data volumes presents several challenges. You must consider storage solutions and data security to manage your data effectively. Let's explore these aspects in detail.

Storage Solutions

Choosing the right storage solution is crucial when dealing with large data volumes. You have two primary options: cloud storage and on-premises storage.

Cloud Storage

Cloud storage offers scalability and flexibility. You can store vast amounts of data without worrying about physical infrastructure. This solution allows you to access your data from anywhere, making it ideal for businesses with remote teams. Cloud providers often offer pay-as-you-go models, which help you manage costs effectively. However, you must ensure that your cloud provider complies with data privacy regulations to protect your data.

On-Premises Storage

On-premises storage gives you complete control over your data. You can customize your storage infrastructure to meet your specific needs. This option is suitable for organizations with strict data security requirements. However, maintaining on-premises storage can be costly and requires significant resources. You must invest in hardware, software, and personnel to manage your storage infrastructure effectively.

Data Security Concerns

Data security is a top priority when managing large data volumes. You need to implement robust security measures to protect your data from unauthorized access and breaches.

Encryption Techniques

Encryption is a vital tool for securing your data. Encrypting data ensures that only users who hold the correct key can read it, even if the storage itself is compromised. You can use symmetric or asymmetric encryption to protect your data. Symmetric encryption uses a single key for both encryption and decryption, while asymmetric encryption uses a key pair: a public key to encrypt and a private key to decrypt. Symmetric encryption is generally faster, which suits large data volumes, while asymmetric encryption simplifies sharing keys between parties. Choose the technique that best suits your security needs.

Access Control Measures

Access control measures help you manage who can access your data. Implementing role-based access control (RBAC) allows you to assign permissions based on user roles. This approach ensures that users only have access to the data they need for their tasks. Regularly reviewing and updating access permissions is essential to maintain data security. Additionally, you can use multi-factor authentication (MFA) to add an extra layer of security to your data access processes.
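At its core, RBAC is a mapping from roles to permissions plus a check at the point of access. The sketch below uses hypothetical role and permission names; a production system would load these from a policy store and combine the check with authentication such as MFA.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read:reports"},
    "engineer": {"read:reports", "write:pipelines"},
    "admin": {"read:reports", "write:pipelines", "manage:users"},
}

def is_allowed(role: str, permission: str) -> bool:
    """Return True only if the role exists and grants the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Unknown roles get an empty permission set, so access is denied by default.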

By addressing these challenges, you can manage large data volumes effectively. Implementing the right storage solutions and security measures will help you protect your data and maximize its value.

Opportunities Presented by Large Data Volume

Enhanced Decision Making

Large data volumes offer significant opportunities for enhancing decision-making processes. By leveraging vast amounts of data, you can gain deeper insights and make more informed choices.

Predictive Analytics

Predictive analytics allows you to forecast future trends and behaviors by analyzing historical data. For instance, Netflix uses predictive analysis to determine what content their customers might enjoy. By examining viewing habits, Netflix can recommend shows and movies that align with user preferences. This approach not only enhances user experience but also increases engagement and retention. You can apply similar strategies in your organization to anticipate customer needs and optimize your offerings.

Real-time Analytics

Real-time analytics provides immediate insights into current data, enabling you to respond swiftly to changing conditions. Platforms like YouTube utilize real-time data to recommend content based on user interactions. By analyzing data as it is generated, you can make timely decisions that improve operational efficiency and customer satisfaction. Implementing real-time analytics in your business can help you stay ahead of the competition and adapt to market dynamics quickly.

Innovation and Insights

Large data volumes serve as a catalyst for innovation and the discovery of new insights. By analyzing extensive datasets, you can uncover patterns and trends that drive creativity and growth.

Identifying Trends

Identifying trends within large datasets allows you to understand market dynamics and consumer behavior. For example, businesses can analyze social media data to detect emerging trends and adjust their strategies accordingly. By staying attuned to these trends, you can position your organization to capitalize on new opportunities and remain relevant in a rapidly changing environment.

Discovering New Patterns

Discovering new patterns in data can lead to groundbreaking innovations. Netflix's recommendation system, which analyzes user habits to suggest content, exemplifies how data can drive innovation. By exploring data from various sources, you can uncover hidden patterns that inspire new products, services, or business models. Embracing data-driven innovation can set your organization apart and foster long-term success.

Strategies for Handling Data Volume

Data Reduction Techniques

Managing large data volumes requires effective reduction techniques. These methods help you streamline data, making it easier to store and analyze.

Data Compression

Data compression reduces the size of your data files. This technique allows you to save storage space and can improve processing speed when reading from disk or the network is the bottleneck. By compressing data, you can handle larger datasets without overwhelming your systems. Tools like gzip and bzip2 perform lossless compression, so the original data is restored exactly when you decompress it. The size reduction is often substantial for repetitive data such as logs or CSV files.
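You can try gzip compression directly from Python's standard `gzip` module. The sketch below compresses a deliberately repetitive, made-up CSV sample; how much real data shrinks depends on how repetitive it is.

```python
import gzip

def compress_text(text: str) -> bytes:
    """Encode text as UTF-8 and compress it with gzip."""
    return gzip.compress(text.encode("utf-8"))

def decompress_text(blob: bytes) -> str:
    """Reverse compress_text, restoring the original text exactly."""
    return gzip.decompress(blob).decode("utf-8")

# Made-up sample: a header plus 1,000 identical sensor readings.
sample = "timestamp,sensor,value\n" + "2024-01-01T00:00:00,s1,20.5\n" * 1000
packed = compress_text(sample)
```

Comparing `len(packed)` with `len(sample.encode("utf-8"))` shows the space saved, and `decompress_text(packed)` returns the original text unchanged.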

Data Aggregation

Data aggregation involves summarizing detailed data into a more compact form. This process helps you focus on key insights without getting bogged down by excessive details. For example, instead of analyzing every transaction, you can aggregate sales data by month or region. This approach simplifies analysis and highlights important trends. SQL queries with GROUP BY clauses let you perform these aggregations efficiently.
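The monthly and regional rollup described above can be sketched with an in-memory SQLite database from Python's standard library. The table name `sales`, its columns, and the sample transactions are hypothetical.

```python
import sqlite3

# Hypothetical transactions: (date, region, amount).
transactions = [
    ("2024-01-05", "North", 120.0),
    ("2024-01-20", "South", 80.0),
    ("2024-02-02", "North", 200.0),
    ("2024-02-15", "North", 50.0),
]

def monthly_sales(rows):
    """Aggregate individual transactions into totals per month and region."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (sold_on TEXT, region TEXT, amount REAL)")
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
        cursor = conn.execute(
            # substr(sold_on, 1, 7) keeps the 'YYYY-MM' part of the date.
            "SELECT substr(sold_on, 1, 7) AS month, region, SUM(amount) "
            "FROM sales GROUP BY month, region ORDER BY month, region"
        )
        return cursor.fetchall()
    finally:
        conn.close()

summary = monthly_sales(transactions)
```

Four transactions collapse into three summary rows here; on real data, millions of rows can reduce to a few hundred.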

Efficient Data Management Practices

Implementing efficient data management practices ensures that you can handle data volume effectively. These practices align your data strategy with corporate goals, enhancing overall performance.

Data Governance

Data governance establishes policies and procedures for managing data assets. It ensures data quality, security, and compliance. By implementing governance frameworks, you can maintain control over your data. This practice involves defining roles, responsibilities, and standards for data management. According to a survey, 49% of organizations believe their data strategy aligns well with corporate goals. This alignment underscores the importance of governance in achieving business objectives.

Data Lifecycle Management

Data lifecycle management (DLM) oversees data from creation to disposal. This practice helps you manage data efficiently throughout its lifecycle. DLM involves categorizing data based on its value and relevance. You can then apply appropriate storage, retention, and deletion policies. By managing data lifecycles, you ensure that your systems remain efficient and cost-effective. This approach also helps you comply with regulatory requirements, safeguarding your organization's reputation.
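A retention policy of this kind often reduces to a rule that maps a record's age to an action. The sketch below assumes two hypothetical thresholds (90 days of active use, then seven years in an archive); your own values should come from your regulatory and business requirements.

```python
from datetime import date, timedelta

# Hypothetical retention thresholds, for illustration only.
RETENTION = {
    "hot": timedelta(days=90),           # kept in primary storage
    "archive": timedelta(days=365 * 7),  # kept in cheaper archive storage
}

def lifecycle_action(created: date, today: date) -> str:
    """Decide what to do with a record based on its age."""
    age = today - created
    if age <= RETENTION["hot"]:
        return "keep"
    if age <= RETENTION["archive"]:
        return "archive"
    return "delete"
```

Passing `today` explicitly, rather than reading the clock inside the function, keeps the rule easy to test and audit.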

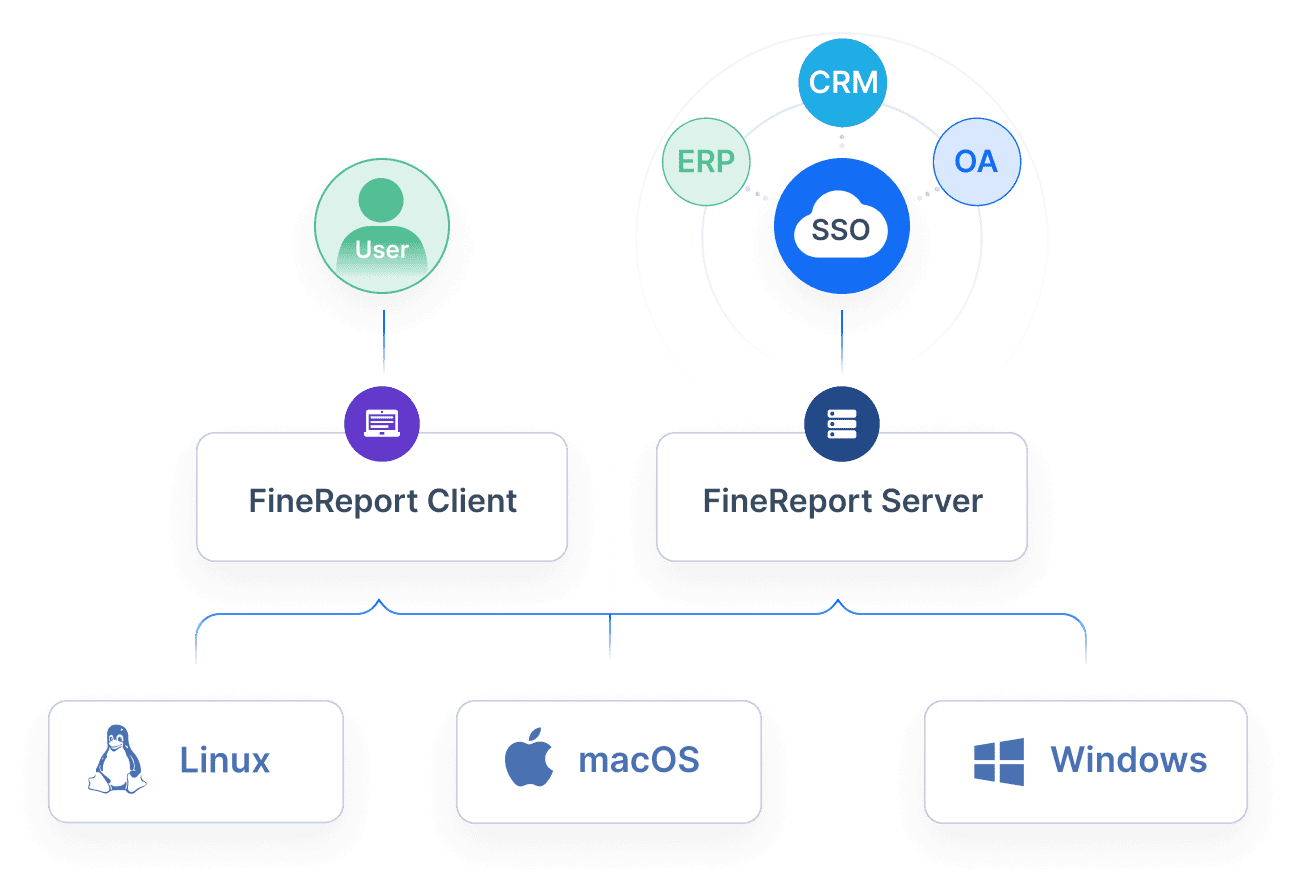

FanRuan's Solutions for Data Volume Challenges

In the realm of data analytics, managing large data volumes presents unique challenges. FanRuan offers innovative solutions to address these challenges effectively. By leveraging tools like FineDataLink, FineReport, and FineBI, you can streamline data integration, enhance reporting, and empower self-service analytics.

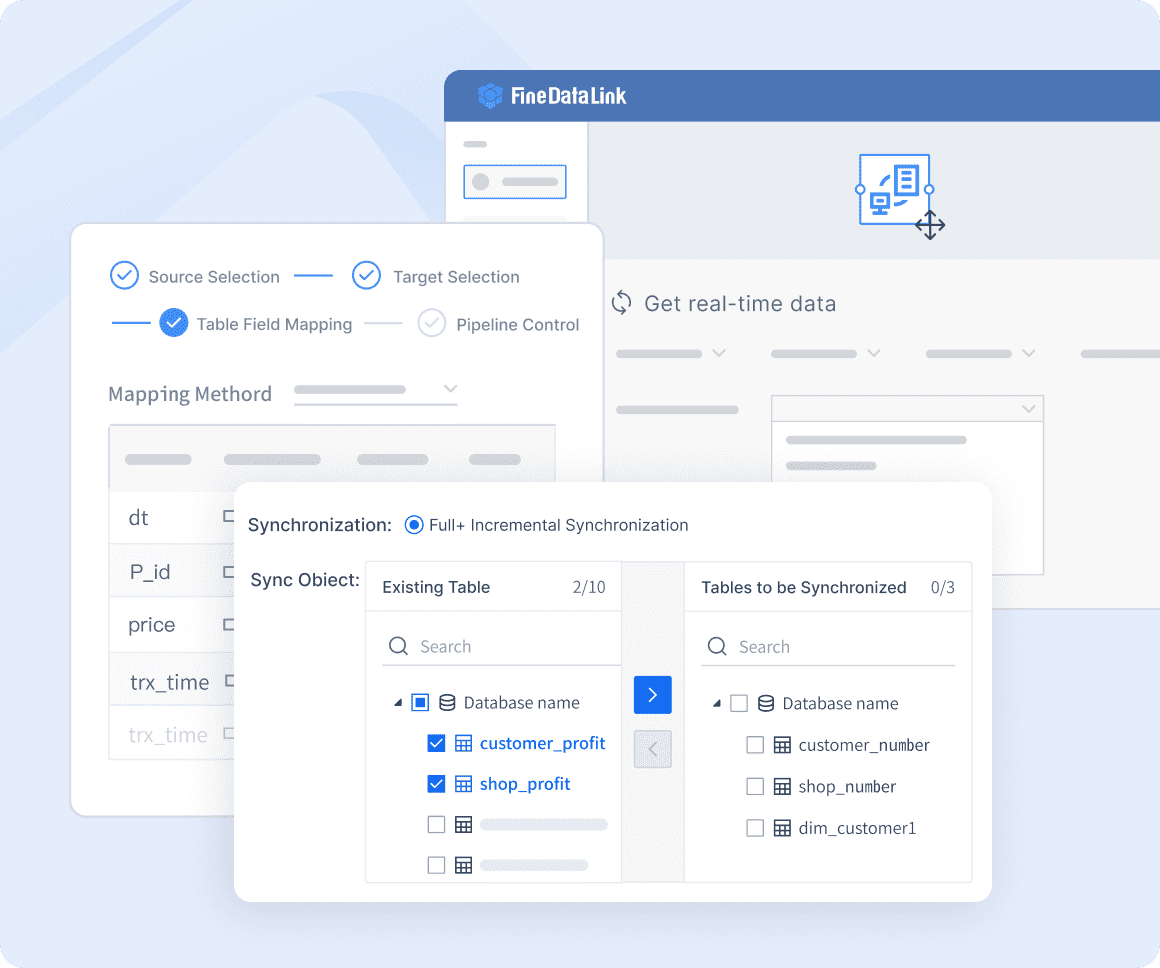

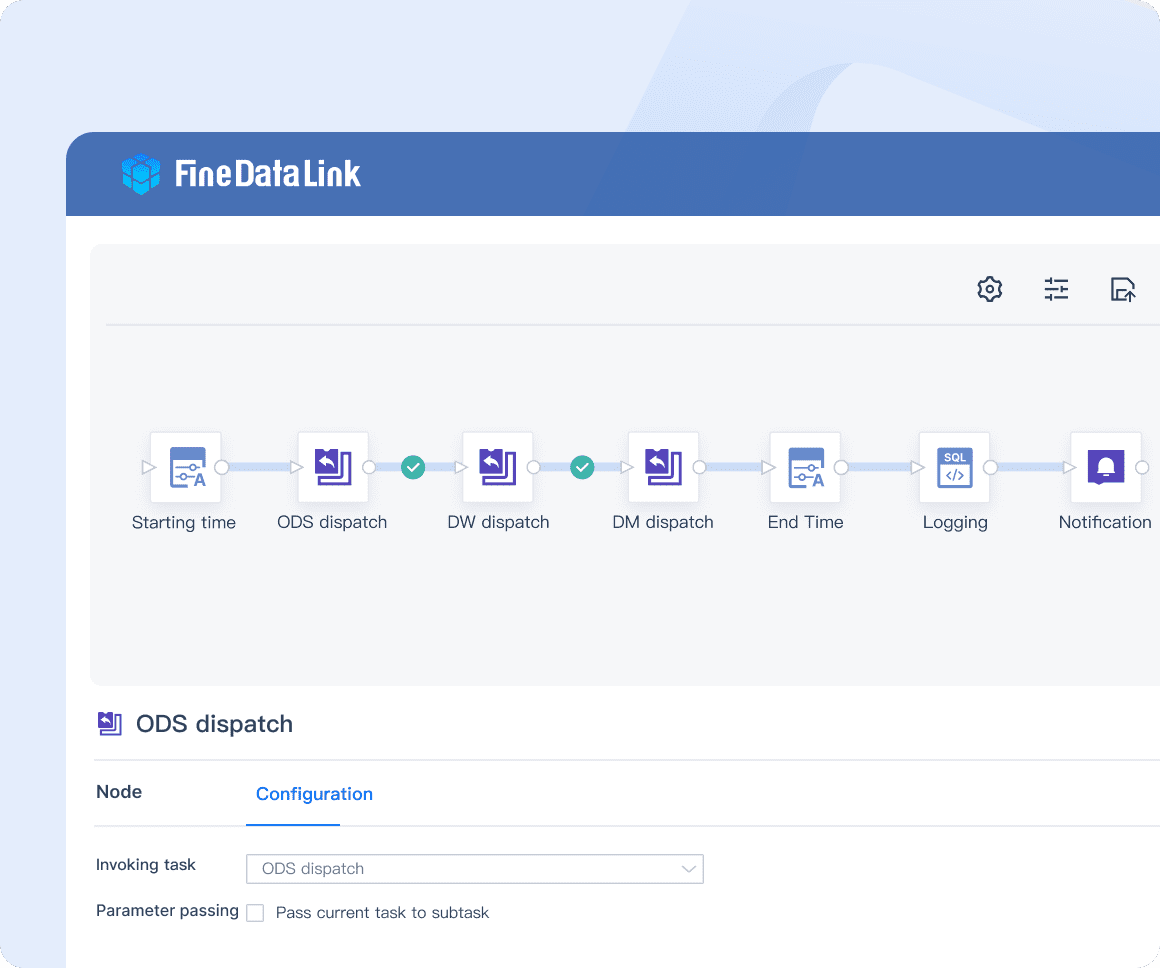

FineDataLink for Data Integration

FineDataLink stands out as a robust platform for data integration. It simplifies complex data tasks, ensuring seamless data flow across systems.

Real-Time Data Synchronization

With real-time data synchronization, FineDataLink ensures that your data remains current and accurate. This feature minimizes latency, allowing you to access up-to-date information instantly. By synchronizing data in real-time, you can make informed decisions quickly, enhancing operational efficiency.

ETL/ELT Capabilities

The ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) capabilities of FineDataLink enable efficient data processing. These functions allow you to transform raw data into actionable insights. By automating data workflows, you reduce manual intervention, saving time and resources.
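To illustrate the ETL pattern in general terms, here is a minimal sketch in plain Python. It does not use or represent FineDataLink's API; the CSV sample, the `payments` table, and the function names are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw input with untidy names and one unparseable amount.
RAW_CSV = "name,amount\n alice ,10\nBob,not-a-number\ncarol,5.5\n"

def extract(text: str) -> list[dict]:
    """Extract: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: tidy names and drop rows whose amount is not numeric."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        cleaned.append((row["name"].strip().title(), amount))
    return cleaned

def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO payments VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
```

In an ELT variant, you would load the raw rows first and run the transformation inside the target database instead.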

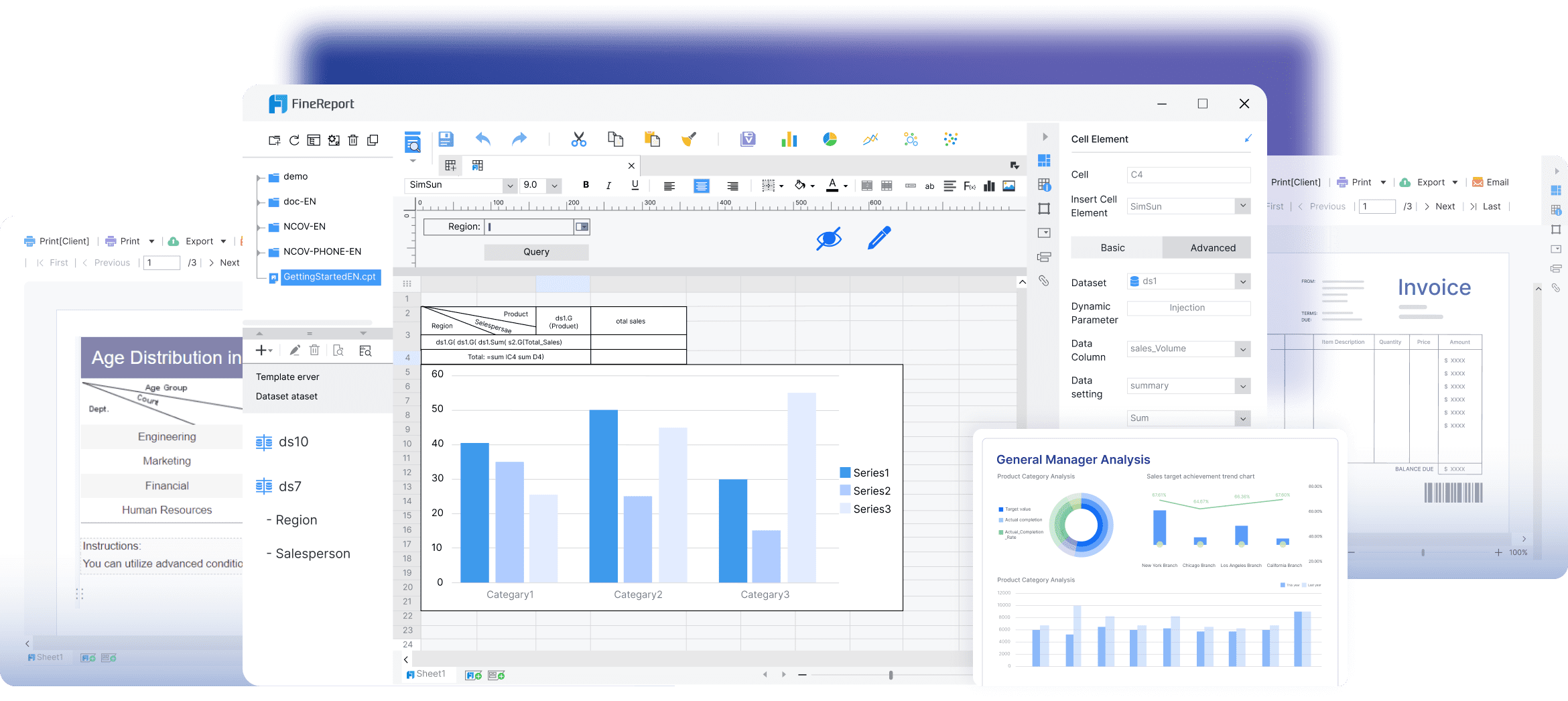

FineReport for Enhanced Reporting

FineReport offers a comprehensive solution for creating dynamic reports and dashboards. It empowers you to visualize data effectively, uncovering deeper insights.

Dynamic Data Integration

Dynamic data integration in FineReport allows you to connect to various data sources effortlessly. This feature ensures that your reports reflect the latest data, providing accurate insights. By integrating data dynamically, you maintain consistency across your reports, enhancing their reliability.

Interactive Analysis

Interactive analysis with FineReport enables you to explore data visually. You can engage with your data through intuitive dashboards, uncovering trends and patterns. This approach fosters a deeper understanding of your data, supporting strategic decision-making.

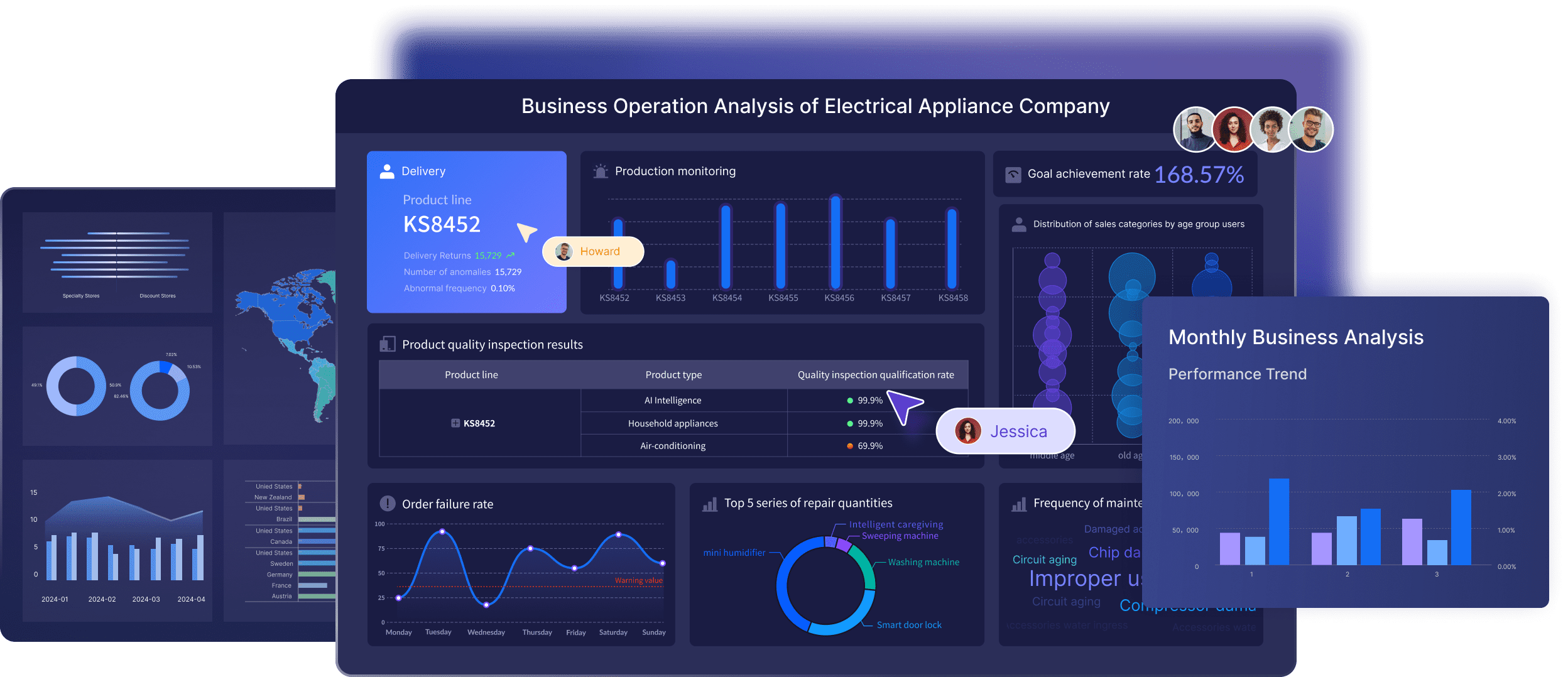

FineBI for Self-Service Analytics

FineBI empowers users with self-service analytics, democratizing data access across your organization. It transforms raw data into meaningful visualizations, facilitating informed decisions.

Visual Data Exploration

Visual data exploration in FineBI allows you to interact with your data seamlessly. By using drag-and-drop functionality, you can create compelling visualizations without extensive technical knowledge. This feature enhances data accessibility, enabling you to derive insights independently.

Role-Based Access Control

Role-based access control in FineBI ensures data security and integrity. By assigning permissions based on user roles, you manage data access effectively. This approach protects sensitive information while allowing users to access the data they need for their tasks.

By utilizing FanRuan's solutions, you can navigate the complexities of data volume management. These tools provide the scalability and efficiency needed to harness the full potential of your data, driving innovation and growth.

Future Trends in Data Volume and Analytics

Emerging Technologies

The landscape of data volume and analytics is rapidly evolving, driven by emerging technologies that promise to transform how you manage and analyze data.

AI and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are at the forefront of this transformation. These technologies enable you to process vast amounts of data with unprecedented speed and accuracy. AI algorithms can sift through terabytes and petabytes of data, identifying patterns and insights that would be impossible for humans to discern manually. This capability not only enhances decision-making but also opens new avenues for innovation. As AI and ML continue to advance, expect them to play an even more significant role in handling large data volumes.

IoT and Big Data

The Internet of Things (IoT) contributes significantly to the increase in data volume. With billions of connected devices generating data every second, you face the challenge of managing and analyzing this influx. IoT data, often unstructured, requires sophisticated techniques for storage and analysis. Big Data technologies provide the tools necessary to handle this complexity. By leveraging these technologies, you can gain real-time insights into operations, improve efficiency, and create new business opportunities. The integration of IoT and Big Data will continue to shape the future of data analytics.

Evolving Data Management Practices

As data volumes grow, your data management practices must evolve to keep pace with the changing landscape.

Automation in Data Handling

Automation plays a crucial role in managing large data volumes efficiently. By automating data handling processes, you reduce the need for manual intervention, which minimizes errors and increases efficiency. Automated systems can process and analyze data continuously, providing you with up-to-date insights. This capability is essential in a world where data is generated at an ever-increasing rate. Implementing automation in your data management strategy ensures that you can handle the growing volume of data effectively.

Advanced Data Visualization

Advanced data visualization techniques help you make sense of large datasets. By transforming complex data into intuitive visual formats, you can quickly identify trends and patterns. These visualizations enable you to communicate insights clearly and effectively, supporting informed decision-making. As data visualization tools become more sophisticated, they will offer even greater capabilities for exploring and understanding data. Embracing these tools will enhance your ability to derive value from large data volumes, driving strategic growth and innovation.

Understanding data volume is crucial in today's data-driven world. It determines the infrastructure and resources you need to handle big data effectively. By employing strategies like data reduction and efficient management practices, you can harness large data volumes for actionable insights. Tools like FineDataLink, FineReport, and FineBI offer robust solutions for managing these challenges. As data continues to grow, adapting your strategies will be essential. Investing in scalable infrastructure and advanced analytics will keep you competitive. Embrace these changes to unlock the full potential of your data.

FAQ

What is data volume?

Data volume refers to the amount of data you generate, collect, and store. It plays a crucial role in analytics, impacting processing power and storage needs.

How does data volume affect analytics?

Large data volumes provide detailed insights, enhancing decision-making. However, managing them requires efficient tools and strategies to ensure data quality and speed.

How do you measure data volume?

You measure data volume using units like bytes, kilobytes, megabytes, gigabytes, terabytes, petabytes, and exabytes. These units help quantify the size of your data sets.

What challenges do large data volumes create?

Large data volumes can lead to storage issues, slower processing speeds, and data quality challenges. You need robust solutions to manage these effectively.

What strategies help you handle large data volumes?

Strategies include data reduction techniques like compression and aggregation, scalable architecture, efficient algorithms, and real-time analysis. These methods streamline data handling.

How does thematic analysis help with large datasets?

Thematic analysis breaks down large datasets into manageable segments. This approach helps you extract domain knowledge efficiently, making data more accessible.

How do FineDataLink, FineReport, and FineBI help with data volume?

FineDataLink, FineReport, and FineBI offer solutions for data integration, dynamic reporting, and self-service analytics. They help you handle large data volumes by providing real-time insights and efficient data management.
