Data processing transforms raw data into meaningful information through a structured approach. This systematic conversion enables organizations to analyze, sort, and organize data effectively. With proper processing, you can uncover insights that drive strategic decisions and optimize operations.

By leveraging efficient data processing, industries can save resources, improve productivity, and enhance decision-making capabilities.

Key Takeaways

- Data processing changes raw data into useful information for better decisions.

- The six steps—collecting, preparing, inputting, processing, outputting, and storing—keep data correct and useful.

- Using real-time data processing helps businesses act quickly and improve services.

- Tools like FineDataLink make handling and processing big data easier for companies.

- New technologies like AI and machine learning will make data processing more powerful and create new opportunities for growth.

Understanding Data Processing

What is Data Processing

Data processing refers to the systematic approach of transforming raw data into meaningful and actionable information. This process involves a series of steps that ensure data is collected, organized, and analyzed effectively. By following a structured data processing lifecycle, you can extract valuable insights that drive better decision-making. Whether you are managing customer data or analyzing market trends, processing data accurately ensures reliability and relevance.

Importance of Data Processing in Modern Industries

In today’s fast-paced industries, data processing plays a pivotal role in enhancing efficiency and productivity. Businesses rely on real-time data to adapt strategies and stay competitive. For instance, 80% of market research studies now break geographical barriers, while 65% of professionals emphasize the importance of real-time data for strategic adjustments.

| Statistic | Description |
| --- | --- |
| 63% | Increase in productivity rates due to data-driven decision-making. |
| 80% | Operational efficiency improvement from incorporating business intelligence into analytics. |
| 500% | Acceleration of decision-making processes attributed to data analytics. |
| 81% | Companies believing data should be central to business decision-making. |

By implementing a robust data processing strategy, you can improve operational efficiency and accelerate decision-making processes. This approach not only saves time but also ensures your business remains agile in a competitive market.

Key Characteristics of Data Processing

Effective data processing exhibits several key characteristics that ensure its success:

- Accuracy: Ensures data is free from errors, providing reliable insights.

- Timeliness: Processes data quickly to deliver real-time or near-real-time results.

- Scalability: Handles large volumes of data without compromising performance.

- Automation: Reduces manual intervention, saving time and minimizing errors.

These characteristics make data processing a cornerstone of modern industries. By focusing on accuracy and automation, you can streamline operations and achieve better outcomes.

The Six Stages of Data Processing

Data Collection

The first stage of the data processing cycle is data collection. This step involves gathering raw data from various sources, such as surveys, interviews, sensors, or databases. Defining clear objectives during this stage ensures the data aligns with your business goals and provides the necessary information for informed decisions. Choosing the right method for collecting data, whether through structured surveys or observational techniques, is crucial for maintaining accuracy and relevance.

High-quality data collection relies on careful planning and validation processes. For example, randomization reduces bias, while regular checks maintain data integrity. These practices ensure the raw data is accurate and ready for further processing.

| Best Practice | Description |
| --- | --- |
| Careful Planning | Planning data collection procedures to mitigate errors. |
| Validation of Tools | Ensuring measurement tools are validated for accuracy. |
| Appropriate Sampling | Using suitable sampling techniques to enhance data quality. |
| Randomization | Employing randomization to reduce bias in data collection. |
| Effective Communication | Minimizing nonresponse through clear communication and incentives. |
| Regular Checks | Conducting regular checks to maintain data integrity. |
| Validation Processes | Implementing validation processes to ensure data accuracy. |
| Data Cleaning | Performing data cleaning to rectify errors during analysis. |

By following these best practices, you can maximize the value of the insights obtained from your data.

Data Preparation

Once the data is collected, the next stage involves data preparation. This step focuses on cleaning, sorting, and filtering raw data to ensure accuracy and consistency. Data cleaning is particularly important, as it removes errors, duplicates, and irrelevant information that could compromise the quality of your analysis.

During this stage, you organize the data into a structured format, making it easier to process. For example, you might standardize formats, fill in missing values, or remove outliers. These actions improve data quality and ensure the information is ready for transformation and analysis.
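The preparation steps above can be sketched in plain Python. This is a minimal illustration, not a production pipeline. It assumes records arrive as dictionaries with hypothetical `customer_id`, `country`, and `amount` fields. The sketch removes duplicates, standardizes country codes, and fills missing amounts with the median. It then drops outliers using a simple interquartile-range (IQR) rule. The 1.5 * IQR cutoff is a common convention, not a universal one.

```python
from statistics import median, quantiles

def prepare(records):
    """Clean a list of record dicts (hypothetical schema: customer_id, country, amount)."""
    # 1. Drop duplicate records (same customer_id), keeping the first occurrence.
    seen, unique = set(), []
    for rec in records:
        if rec["customer_id"] not in seen:
            seen.add(rec["customer_id"])
            unique.append(dict(rec))  # copy so the caller's data is not mutated

    # 2. Standardize formats: trim whitespace and upper-case country codes.
    for rec in unique:
        rec["country"] = rec["country"].strip().upper()

    # 3. Fill missing amounts with the median of the known amounts.
    fill = median(r["amount"] for r in unique if r["amount"] is not None)
    for rec in unique:
        if rec["amount"] is None:
            rec["amount"] = fill

    # 4. Remove outliers that fall outside 1.5 * IQR of the quartiles.
    q1, _, q3 = quantiles([r["amount"] for r in unique], n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [r for r in unique if low <= r["amount"] <= high]

raw = [
    {"customer_id": 1, "country": " us ", "amount": 20.0},
    {"customer_id": 1, "country": "US", "amount": 20.0},    # duplicate
    {"customer_id": 2, "country": "de", "amount": None},    # missing value
    {"customer_id": 3, "country": "FR", "amount": 22.0},
    {"customer_id": 4, "country": "us", "amount": 19.0},
    {"customer_id": 5, "country": "de", "amount": 21.0},
    {"customer_id": 6, "country": "fr", "amount": 950.0},   # outlier
]
clean = prepare(raw)
print([r["customer_id"] for r in clean])  # [1, 2, 3, 4, 5]
```

The median is used for filling because a single extreme value, such as the 950.0 above, would distort a mean-based fill.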

Tip: Data preparation is the foundation of effective data processing. Investing time in this stage reduces errors and enhances the reliability of your results.

Data Input

The third stage of the data processing cycle is data input. This step involves converting the prepared data into a machine-readable form. The data is then entered into the systems that will process it, such as spreadsheets, databases, or software applications. Accurate data input is essential for informed decision-making, because errors during this stage can lead to flawed analyses and financial losses.

Efficient data input processes enhance productivity and reduce redundant work. For example, automated systems can streamline data entry, minimizing manual intervention and ensuring consistency. Maintaining accuracy during this stage is also critical for compliance with legal requirements and for generating meaningful insights through data analytics.

- Decision Making: Accurate data is essential for informed decisions.

- Customer Satisfaction: Maintaining accurate customer data improves personalized experiences.

- Operational Efficiency: Efficient data entry processes save time and resources.

- Compliance and Legal Requirements: Errors in data entry can lead to non-compliance.

- Data Analytics: Reliable data input ensures meaningful insights for analysis.
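The points above come down to two checks: validate records before they enter a system, and load them consistently. A minimal sketch using Python's built-in `sqlite3` module illustrates this. The `orders` table, its columns, and the validation rules are hypothetical examples chosen for illustration. The database also enforces its own `NOT NULL` and `CHECK` constraints as a second line of defense.

```python
import sqlite3

def is_valid(rec):
    """Reject records with a missing ID, an empty customer name, or a bad amount."""
    amount = rec.get("amount")
    return (
        isinstance(rec.get("order_id"), int)
        and bool(rec.get("customer"))
        and isinstance(amount, (int, float))
        and amount >= 0
    )

def load_records(conn, records):
    """Insert valid records into a hypothetical `orders` table.

    Returns (number of rows inserted, list of rejected records).
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        " order_id INTEGER PRIMARY KEY,"
        " customer TEXT NOT NULL,"
        " amount REAL NOT NULL CHECK (amount >= 0))"
    )
    valid = [r for r in records if is_valid(r)]
    rejected = [r for r in records if not is_valid(r)]
    with conn:  # commits if all inserts succeed, rolls back otherwise
        conn.executemany(
            "INSERT INTO orders (order_id, customer, amount) VALUES (?, ?, ?)",
            [(r["order_id"], r["customer"], float(r["amount"])) for r in valid],
        )
    return len(valid), rejected

conn = sqlite3.connect(":memory:")
inserted, rejected = load_records(conn, [
    {"order_id": 1, "customer": "Acme", "amount": 120.5},
    {"order_id": 2, "customer": "", "amount": 80},        # empty customer
    {"order_id": 3, "customer": "Globex", "amount": -5},  # negative amount
    {"order_id": 4, "customer": "Initech", "amount": 42},
])
print(inserted, len(rejected))  # 2 2
```

Rejected records are returned rather than silently dropped, so they can be reviewed and corrected.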

By focusing on precision and efficiency, you can transform raw data into actionable information that drives success.

Data Processing

Data processing is the core stage, where the prepared data is transformed into meaningful insights. This step involves applying algorithms, statistical methods, and software tools to analyze and interpret the data. The goal is to extract actionable information that supports decision-making and strategic planning.

To ensure the reliability of this stage, you should follow a structured approach:

- Setting Clear Objectives: Define measurable goals to align the analysis with your business needs.

- Acquiring High-Quality Data: Use reliable methods like surveys or digital tracking to gather accurate information.

- Data Preparation: Clean and organize the data to eliminate errors and inconsistencies.

- Advanced Data Analysis: Leverage statistical tools and software to uncover patterns and trends.

Each step enhances the accuracy and relevance of the results, ensuring the processing of data yields valuable insights. For example, businesses can use predictive analytics to forecast market trends or customer behavior, enabling proactive strategies.
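Predictive analytics covers many techniques. One of the simplest is fitting a straight-line trend to past values and extending it forward. The sketch below does this with ordinary least squares in plain Python. The monthly sales figures are hypothetical. Real forecasting would usually also account for seasonality and uncertainty.

```python
def fit_linear_trend(values):
    """Fit y = a + b*t by ordinary least squares, with t = 0, 1, ..., n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept, slope

def forecast(values, steps):
    """Extend the fitted trend `steps` periods past the last observation."""
    a, b = fit_linear_trend(values)
    n = len(values)
    return [a + b * (n + k) for k in range(steps)]

monthly_sales = [100, 110, 120, 130]  # hypothetical data
print(forecast(monthly_sales, 2))     # [140.0, 150.0]
```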

Tip: Invest in advanced tools and technologies to streamline this stage. Platforms like FineDataLink simplify data integration and processing, ensuring high-quality outcomes.

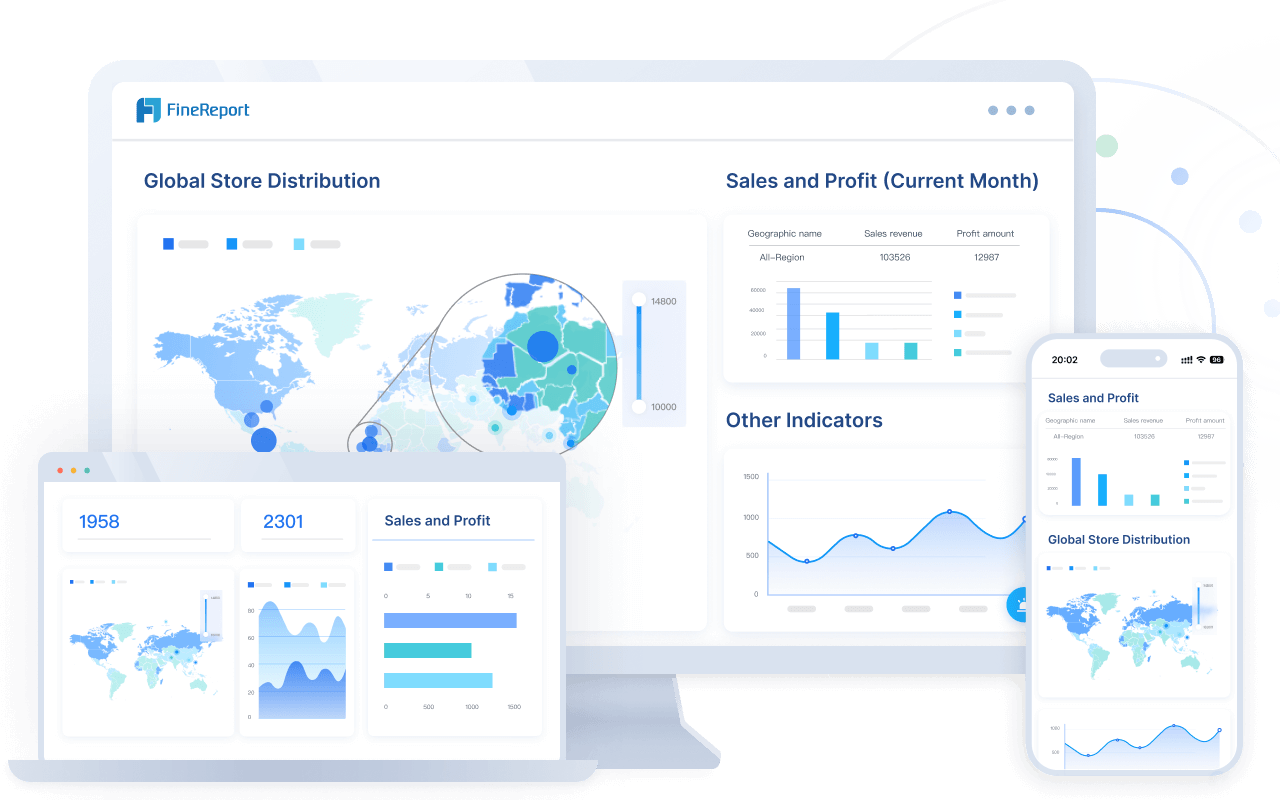

Data Output

Data output is the stage where processed data is presented in a usable format. This could include reports, dashboards, visualizations, or even automated alerts. The primary objective is to make the interpretation of data straightforward and actionable for stakeholders.

Effective data output implementation leads to several outcomes:

- Changes in decision-making processes and organizational policies.

- Improved knowledge and skills among team members.

- Enhanced systems and workflows due to actionable insights.

- Long-term cultural shifts toward data-driven strategies.

For instance, a well-designed dashboard can help you monitor key performance indicators (KPIs) in real time, enabling quick adjustments to strategies. Short-term improvements, such as better skill development, often pave the way for long-term benefits like increased organizational efficiency.
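A dashboard or alert system ultimately compares processed KPI values against targets and presents the result. A minimal Python sketch of that output step follows. The KPI names and targets are hypothetical, and the code assumes that higher values are always better.

```python
def kpi_report(kpis, targets):
    """Render a plain-text KPI summary and collect alerts for missed targets.

    `kpis` and `targets` map KPI names to numbers; higher values are assumed better.
    """
    lines, alerts = [], []
    for name in sorted(kpis):
        value, target = kpis[name], targets.get(name)
        status = "OK"
        if target is not None and value < target:
            status = "BELOW TARGET"
            alerts.append(f"{name} is {value} (target {target})")
        lines.append(f"{name:<20}{value:>10}  {status}")
    return "\n".join(lines), alerts

report, alerts = kpi_report(
    {"conversion_rate": 2.1, "orders": 540},
    {"conversion_rate": 2.5, "orders": 500},
)
print(report)
print(alerts)  # ['conversion_rate is 2.1 (target 2.5)']
```

In practice the alerts list would be routed to email, chat, or a dashboard widget rather than printed.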

Platforms like FineReport simplify data output, ensuring high-quality outcomes.

Note: Ensure your data output aligns with the needs of your audience. Visual tools like charts and graphs can make complex information easier to understand.

Data Storage

Data storage is the final stage of the data processing cycle. It involves storing the data securely for future use, whether for compliance, analysis, or operational purposes. Choosing the right storage system is critical to maintaining data integrity and accessibility.

When storing the data, consider factors like scalability, security, and cost. For example, hybrid cloud storage offers flexibility by combining cloud scalability with on-premise control, making it suitable for sensitive data. However, it requires careful management to avoid integration challenges.
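One simple way to protect the integrity of stored data is to save a checksum alongside it and verify the checksum on every read. The sketch below uses Python's standard `hashlib` and `json` modules. The file layout (a `.sha256` file next to the data file) is an assumption chosen for illustration. The checksum detects corruption or accidental changes, but it is not a substitute for encryption or access control.

```python
import hashlib
import json
from pathlib import Path

def _checksum_path(path):
    # Hypothetical convention: "orders.json" -> "orders.json.sha256"
    return path.parent / (path.name + ".sha256")

def store(records, path):
    """Write records as JSON and save a SHA-256 checksum next to them."""
    path = Path(path)
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    path.write_bytes(payload)
    _checksum_path(path).write_text(hashlib.sha256(payload).hexdigest())

def load(path):
    """Read records back, refusing data whose checksum no longer matches."""
    path = Path(path)
    payload = path.read_bytes()
    expected = _checksum_path(path).read_text().strip()
    if hashlib.sha256(payload).hexdigest() != expected:
        raise ValueError(f"integrity check failed for {path}")
    return json.loads(payload)
```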

Tip: Regularly review your storage solutions to ensure they meet your evolving business needs. Tools like FineDataLink can help you manage and integrate data across multiple systems efficiently.

Types of Data Processing

Manual Data Processing

Manual data processing involves handling data without the use of machines or automated tools. You perform tasks like data entry, calculations, and analysis manually. This method often relies on tools such as paper, pens, and calculators. While it may seem straightforward, manual processing can be time-consuming and prone to errors.

Despite its limitations, manual data processing remains useful in situations where automation is not feasible. Small-scale projects or environments with limited resources often rely on this method. However, as data complexity grows, manual methods become less practical.

Mechanical Data Processing

Mechanical data processing uses machines like typewriters, calculators, or punch card systems to handle data. These tools help you perform repetitive tasks more efficiently than manual methods. For instance, a mechanical calculator can quickly perform complex calculations, saving time and reducing errors.

This method marked a significant step forward in data processing history. It allowed businesses to handle larger datasets with greater accuracy. However, mechanical processing still requires human intervention to operate the machines and interpret the results.

Although outdated in many industries, mechanical data processing paved the way for modern technologies. It remains a reminder of how innovation can transform the way you manage and process data.

Electronic Data Processing

Electronic data processing (EDP) uses computers and software to automate data handling tasks. This method is the most efficient and widely used today. You can process large volumes of data quickly and accurately with minimal human intervention.

For example, electronic systems allow you to collect, analyze, and store data in real time. This capability supports faster decision-making and improves operational efficiency. Compared to manual methods, EDP reduces the need for double entry and manual verification, which cuts errors and saves time.

Electronic data processing also supports advanced techniques like data mining. This approach helps you uncover patterns and trends, enabling better decision-making. By adopting EDP, you can streamline your operations and stay competitive in a data-driven world.
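A common first step in data mining is counting how often items appear together, as in market-basket analysis. The sketch below computes the support of item pairs: the share of transactions that contain both items. This is the counting step used by algorithms such as Apriori, not a full implementation of them. The basket data is hypothetical.

```python
from collections import Counter
from itertools import combinations

def frequent_pairs(transactions, min_support):
    """Return item pairs whose support (share of transactions containing both)
    is at least `min_support`."""
    counts = Counter()
    for basket in transactions:
        # Deduplicate and sort so each unordered pair is counted once per basket.
        for pair in combinations(sorted(set(basket)), 2):
            counts[pair] += 1
    n = len(transactions)
    return {pair: c / n for pair, c in counts.items() if c / n >= min_support}

baskets = [
    ["bread", "milk"],
    ["bread", "butter", "milk"],
    ["beer", "bread"],
    ["milk", "butter"],
]
print(frequent_pairs(baskets, 0.5))
# {('bread', 'milk'): 0.5, ('butter', 'milk'): 0.5}
```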

Real-Time Data Processing

Real-time data processing allows you to analyze and act on data as it is generated. Data is processed with minimal delay, so you can make decisions as events unfold. For example, financial institutions use real-time systems to detect fraudulent transactions as they occur. Similarly, e-commerce platforms rely on real-time analytics to recommend products based on your browsing behavior.

To implement real-time data processing, you need systems capable of handling continuous data streams. These systems typically process each event within milliseconds to seconds, ensuring timely insights. Key components of real-time processing include:

- Data Streams: Continuous flow of data from sources like sensors or user interactions.

- Processing Engines: Tools that analyze and transform data in real time.

- Output Systems: Dashboards or alerts that present actionable insights immediately.
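The three components above can be mapped onto a toy Python example. In this sketch, any iterable plays the role of the data stream, a generator acts as the processing engine, and the alerts it yields stand in for the output system. Production systems use dedicated streaming platforms instead. The window size, threshold, and sensor readings are hypothetical.

```python
from collections import deque

def monitor(stream, window=5, threshold=100.0):
    """Consume readings one at a time and yield (index, moving average) whenever
    the average of the last `window` readings exceeds `threshold`."""
    recent = deque(maxlen=window)  # keeps only the newest `window` values
    for i, value in enumerate(stream):
        recent.append(value)
        if len(recent) == window:
            avg = sum(recent) / window
            if avg > threshold:
                yield (i, round(avg, 2))

readings = [90, 95, 100, 105, 110, 120]  # hypothetical sensor values
for alert in monitor(readings, window=3, threshold=100.0):
    print(alert)
# (4, 105.0)
# (5, 111.67)
```

Because `monitor` is a generator, it handles each reading as it arrives and never needs the full stream in memory.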

Real-time processing offers several benefits. It enhances decision-making by providing up-to-date information. It also improves customer experiences through personalized interactions. For instance, ride-sharing apps use real-time data to match drivers with passengers efficiently.

Tip: Use platforms like FineDataLink to integrate and process real-time data seamlessly. Its low-latency capabilities ensure you can act on data as it arrives.

Batch Data Processing

Batch data processing involves collecting and processing data in groups or batches at scheduled intervals. Unlike real-time processing, this method does not handle data instantly. Instead, it processes large volumes of data at once, making it ideal for tasks that do not require immediate action.

You often see batch processing in industries like finance and healthcare. For example, payroll systems calculate employee salaries in batches at the end of each month. Similarly, healthcare providers use batch processing to analyze patient records for trends and insights.

The batch processing workflow typically includes:

- Data Collection: Gather data over a specific period.

- Processing: Analyze and transform the data in bulk.

- Output: Generate reports or summaries for review.
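The collect, process, and output workflow above can be sketched with the payroll example. This Python version assumes a hypothetical input format: a list of `(employee, hours)` timesheet entries and an hourly-rate lookup. It works through the entries in fixed-size batches and returns a per-employee summary. Real payroll involves far more rules (overtime, tax, deductions).

```python
from collections import defaultdict
from itertools import islice

def batches(iterable, size):
    """Yield lists of up to `size` items from an iterable."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch

def monthly_payroll(timesheets, hourly_rates, batch_size=100):
    """Total gross pay per employee, processing timesheet entries in batches."""
    totals = defaultdict(float)
    for batch in batches(timesheets, batch_size):
        for employee, hours in batch:
            totals[employee] += hours * hourly_rates[employee]
    return dict(totals)

timesheets = [("ana", 40), ("ben", 35), ("ana", 38), ("ben", 40)]  # hypothetical
print(monthly_payroll(timesheets, {"ana": 25.0, "ben": 30.0}, batch_size=3))
# {'ana': 1950.0, 'ben': 2250.0}
```

Batching bounds how much data is held in memory at once, which is why the same pattern scales to large month-end runs.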

Batch processing is cost-effective and efficient for handling large datasets. However, it may not be suitable for scenarios requiring real-time insights. By combining batch and real-time processing, you can address diverse business needs effectively.

Note: FineDataLink supports both batch and real-time data processing, offering flexibility for various use cases.

Practical Applications of Data Processing

Data Processing in Business and Marketing

Data processing plays a crucial role in business and marketing by helping you understand customer behavior, optimize campaigns, and improve decision-making. By processing customer data, you can identify trends, predict future behaviors, and create personalized marketing strategies. This approach enhances customer satisfaction and boosts revenue.

For example, businesses use data analytics to track customer interactions, measure campaign performance, and refine their strategies. Metrics like Customer Acquisition Cost (CAC) and Customer Lifetime Value (CLV) provide insights into financial efficiency and long-term profitability.
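CAC and CLV have several definitions in practice. The sketch below uses one common simplified form of each. CAC is total acquisition spend divided by the number of new customers. CLV is average order value times orders per year times years retained times gross margin. All figures are hypothetical.

```python
def customer_acquisition_cost(marketing_spend, new_customers):
    """CAC: acquisition spend divided by the number of customers acquired."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return marketing_spend / new_customers

def customer_lifetime_value(avg_order_value, orders_per_year, years_retained, gross_margin):
    """Simplified CLV: expected gross profit from one customer over the relationship."""
    return avg_order_value * orders_per_year * years_retained * gross_margin

cac = customer_acquisition_cost(50_000, 400)    # 125.0
clv = customer_lifetime_value(80, 4, 3, 0.25)   # 240.0
print(cac, clv, round(clv / cac, 2))            # 125.0 240.0 1.92
```

Comparing the two (the CLV-to-CAC ratio) indicates whether acquiring a customer is likely to pay off over time.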

Data Analysis in Healthcare and Medical Research

In healthcare, data analysis transforms patient data into actionable insights that improve outcomes and advance medical research. By processing clinical data, you can identify patterns, predict disease outbreaks, and enhance patient care.

For instance, continuous statistical analyses help identify adverse events, ensuring patient safety. Physicians rely on well-analyzed data to make informed treatment decisions. Advanced techniques like machine learning uncover patterns in complex datasets, driving innovation in treatment development.

| Aspect | Evidence |
| --- | --- |
| Patient Safety | Continuous statistical analyses can identify adverse events, ensuring ethical standards are maintained. |
| Clinical Decision-Making | Well-analyzed data guides physicians in treatment recommendations based on efficacy and safety. |
| Innovation in Treatment | Advanced statistical techniques like machine learning uncover patterns in complex datasets. |

Data analytics in healthcare not only improves patient outcomes but also accelerates the development of new treatments. By processing data effectively, you can contribute to a healthier and more efficient healthcare system.

Data Integration for Government and Public Services

Data integration plays a vital role in enhancing the efficiency and effectiveness of government and public services. By unifying information from various sources, you can streamline operations, improve decision-making, and support data-driven policies. This approach ensures that public resources are allocated wisely and services are delivered efficiently.

For example, principal component analysis (PCA) condenses many correlated variables into a few components. In datasets with strongly correlated variables, the first few components can capture over 80% of the variance, simplifying complex information for better decision-making. Policies informed by such techniques often result in higher public satisfaction due to their evidence-based approach. By integrating data effectively, you can ensure that public safety initiatives and governance strategies are both impactful and efficient.
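How much variance PCA captures depends on how correlated the variables are. For two variables, the principal components come from the eigenvalues of the 2x2 covariance matrix, which have a closed form. The sketch below uses that form to compute each component's share of the total variance. It is limited to 2-D data so it can stay in plain Python. Larger datasets would normally use a linear algebra library.

```python
import math

def explained_variance_2d(xs, ys):
    """Share of total variance captured by each principal component of 2-D data,
    using the closed-form eigenvalues of the 2x2 sample covariance matrix."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    var_x = sum((x - mx) ** 2 for x in xs) / (n - 1)
    var_y = sum((y - my) ** 2 for y in ys) / (n - 1)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    total = var_x + var_y  # trace = sum of the two eigenvalues
    if total == 0:
        raise ValueError("data has no variance")
    spread = math.sqrt(((var_x - var_y) / 2) ** 2 + cov ** 2)
    lam1, lam2 = total / 2 + spread, total / 2 - spread
    return lam1 / total, lam2 / total

# Perfectly correlated variables: the first component captures ~100%.
print(explained_variance_2d([1, 2, 3, 4], [2, 4, 6, 8]))
# Uncorrelated variables with equal variance: each component captures 50%.
print(explained_variance_2d([1, -1, 0, 0], [0, 0, 1, -1]))  # (0.5, 0.5)
```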

To maximize these benefits, it is essential to foster a culture that prioritizes data-driven decision-making. Training staff on analytics tools and emphasizing the importance of evidence in policy discussions can significantly enhance outcomes. This approach not only improves operational efficiency but also builds public trust in government initiatives.

Role of FineDataLink in Technology and Artificial Intelligence

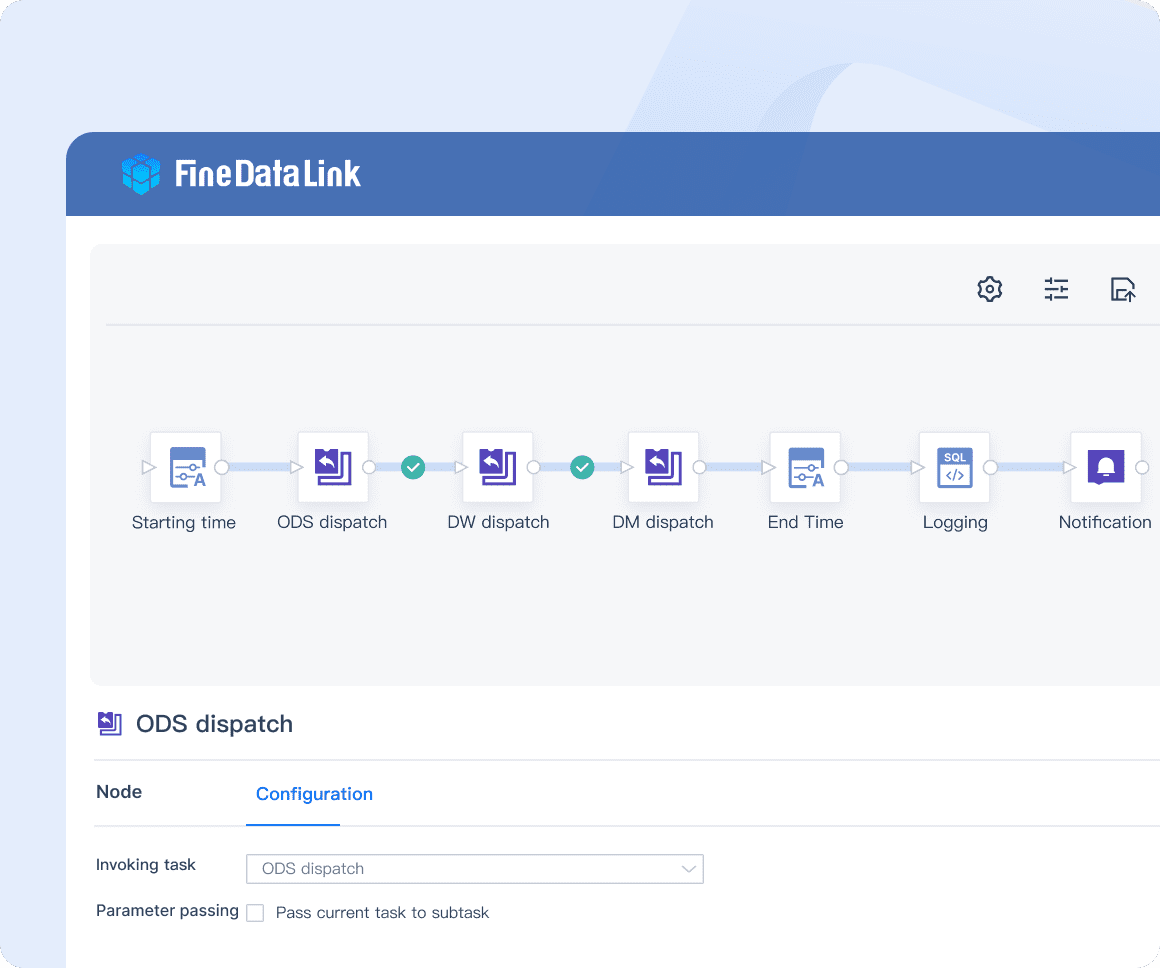

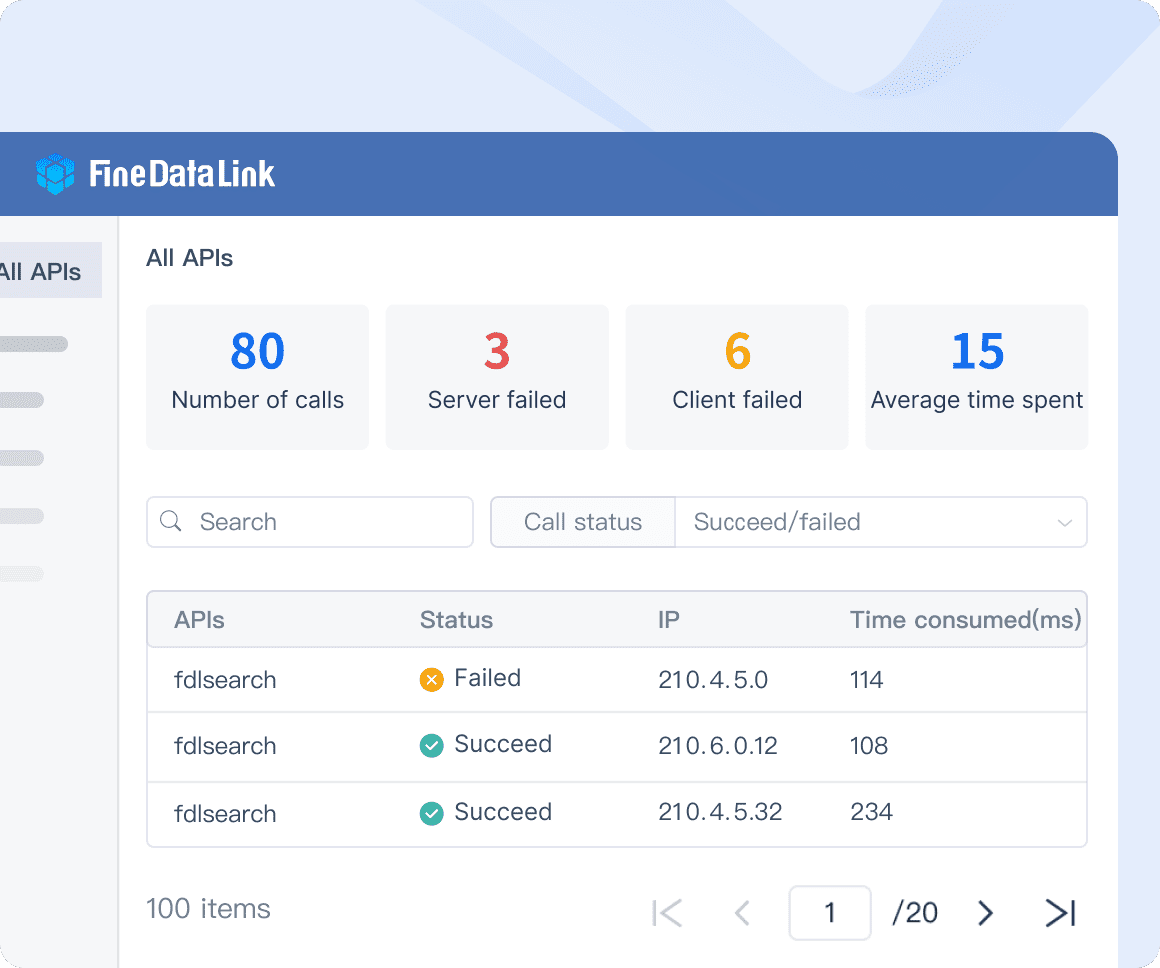

FineDataLink serves as a powerful tool for organizations looking to harness the potential of technology and artificial intelligence (AI). Its advanced data integration capabilities enable you to synchronize and process data across multiple systems seamlessly. This functionality is crucial for building robust AI models and driving innovation in various industries.

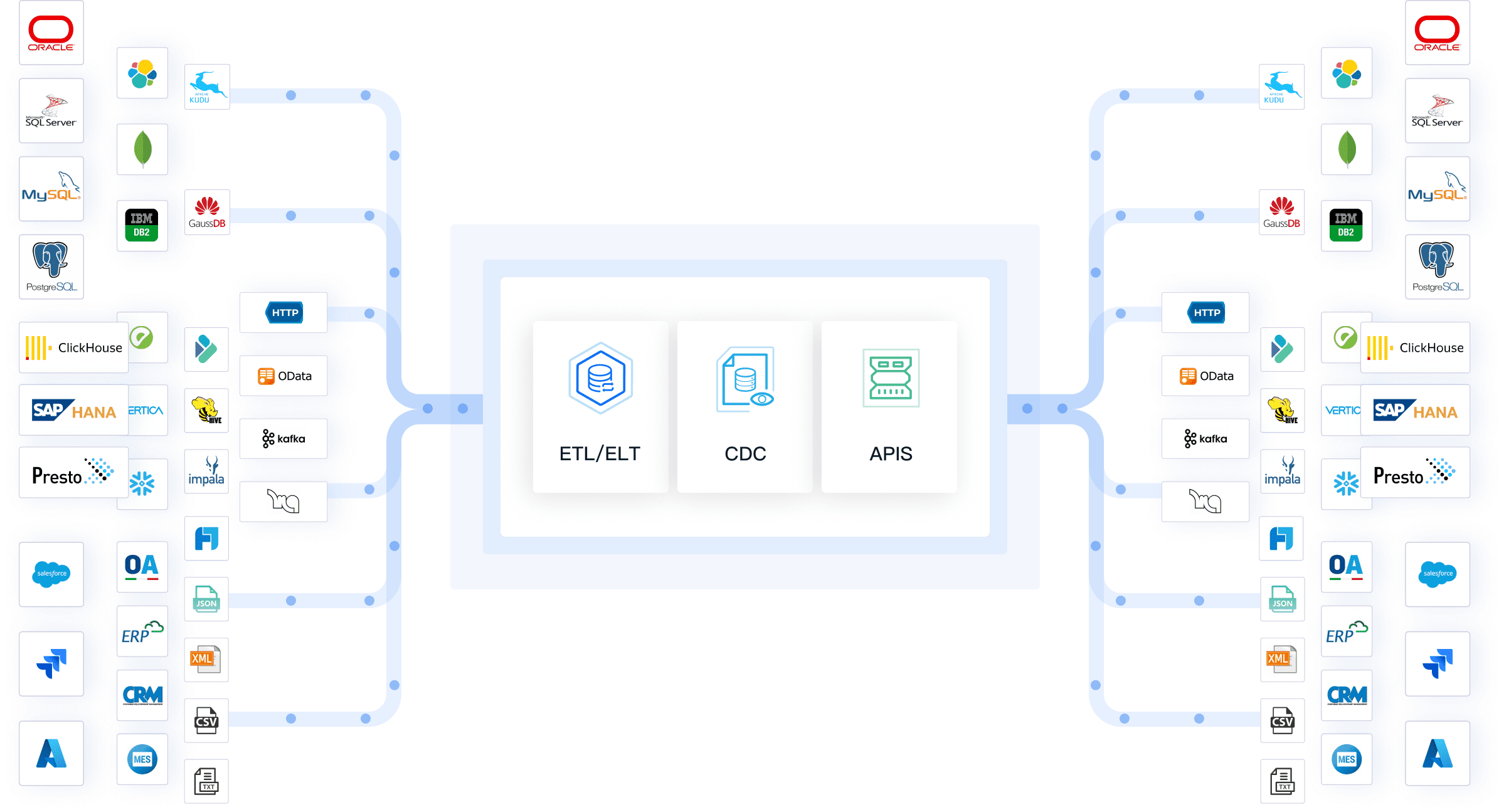

FineDataLink offers three core features that make it indispensable for AI and technology applications:

- Real-Time Data Synchronization: Ensures minimal latency, allowing you to build real-time data warehouses and support AI systems that require up-to-date information.

- ETL/ELT Capabilities: Facilitates data preprocessing, which is essential for training accurate and reliable AI models.

- API Integration: Allows you to share data between systems effortlessly, enabling smoother collaboration and innovation.
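To illustrate the general idea behind incremental data synchronization, the sketch below copies only rows changed since the last run. It uses a timestamp "watermark" to track progress. This is a generic technique sketch, not FineDataLink's API or a description of how FineDataLink implements synchronization. The `id` and `updated_at` fields are hypothetical. Log-based change data capture is a common alternative for databases.

```python
def incremental_sync(source_rows, target, last_synced):
    """Upsert rows changed after `last_synced` into `target` (a dict keyed by id).

    Returns the new watermark: the latest `updated_at` value seen.
    """
    watermark = last_synced
    for row in source_rows:
        if row["updated_at"] > last_synced:
            target[row["id"]] = row  # insert new rows, overwrite changed ones
            watermark = max(watermark, row["updated_at"])
    return watermark

source = [
    {"id": 1, "updated_at": 5, "status": "old"},
    {"id": 2, "updated_at": 12, "status": "new"},
    {"id": 3, "updated_at": 15, "status": "new"},
]
target = {1: {"id": 1, "updated_at": 5, "status": "old"}}
watermark = incremental_sync(source, target, last_synced=10)
print(watermark, sorted(target))  # 15 [1, 2, 3]
```

Storing the returned watermark and passing it to the next run means each sync touches only new or changed rows.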

For instance, FineDataLink's low-code platform simplifies complex data processing tasks, making it accessible even to non-technical users. Its drag-and-drop interface and support for over 100 data sources ensure that you can integrate diverse datasets without hassle. This ease of use accelerates the development of AI applications, from predictive analytics to machine learning models.

By leveraging FineDataLink, you can overcome common challenges like data silos and scalability issues. Its ability to handle large volumes of data efficiently ensures that your AI systems perform optimally. Whether you are developing smart manufacturing solutions or enhancing customer experiences, FineDataLink provides the foundation for success in the age of AI.

The Future of Data Processing

Emerging Trends in Data Processing

The future of data processing is shaped by emerging technologies and innovative approaches. You can expect several trends to redefine how data is managed and utilized:

- Artificial intelligence integration enhances predictive capabilities, enabling you to make informed decisions faster.

- Real-time data analysis becomes essential for industries that rely on up-to-the-minute insights, such as finance and healthcare.

- Blockchain technology improves data security and transparency, ensuring trust in sensitive transactions.

- Machine learning explainability gains importance, helping you understand complex models and their outcomes.

- Big data and AI reshape credit risk forecasting by analyzing vast datasets for better accuracy.

- Enhanced fraud detection systems use advanced analytics to identify anomalies and prevent losses.
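Anomaly detection in fraud systems combines many signals, but one basic statistical building block is flagging values far from typical behavior. The sketch below uses the modified z-score, which is based on the median and the median absolute deviation (MAD). A single very large transaction would inflate a mean and standard deviation, but it barely moves the median. The 3.5 threshold is a commonly cited convention. The transaction amounts are hypothetical.

```python
from statistics import median

def flag_anomalies(amounts, threshold=3.5):
    """Return indices whose modified z-score (median/MAD based) exceeds `threshold`."""
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against
    return [i for i, a in enumerate(amounts) if 0.6745 * abs(a - med) / mad > threshold]

transactions = [20, 25, 22, 19, 24, 21, 23, 20, 22, 500]  # hypothetical amounts
print(flag_anomalies(transactions))  # [9]
```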

These trends highlight the growing importance of real-time monitoring and early warning systems. By adopting these advancements, you can stay ahead in a competitive landscape and unlock new opportunities for growth.

Challenges and Opportunities in Data Analysis

Modern data analysis faces unique challenges, but it also offers significant opportunities. The integration of advanced technologies like AI and machine learning reshapes how you process and analyze data. However, ensuring data integrity requires improved sharing and verification frameworks.

Privacy concerns remain a critical issue. You must adopt privacy-preserving technologies to protect sensitive information while maintaining transparency. Despite these challenges, opportunities abound. Advanced analytics can help detect and prevent improper payments and optimize resource allocation.
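Pseudonymization is a simple example of a privacy-preserving technique. It replaces direct identifiers with tokens before data is shared for analysis. The sketch below uses a keyed hash (HMAC-SHA256) from Python's standard library. The same input and key always yield the same token, so records can still be joined. Without the key, tokens cannot practically be linked back to the original values. The key shown is a placeholder. Pseudonymization reduces privacy risk but does not remove it, since other fields may still identify a person.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed hash (HMAC-SHA256) token."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

key = b"replace-with-a-securely-stored-key"  # placeholder; keep real keys out of code
token = pseudonymize("alice@example.com", key)
print(len(token))  # 64 hex characters
```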

Data democratization is another promising trend. By empowering teams with access to data, you can foster a culture of collaboration and innovation. This approach not only enhances decision-making but also builds a foundation for long-term success. As you navigate these challenges, embracing innovation will help you unlock the full potential of data processing.

Data processing transforms raw data into meaningful information, enabling you to make informed decisions and optimize operations. Its six stages—collection, preparation, input, processing, output, and storage—ensure data flows seamlessly from raw form to actionable insights. Each stage plays a vital role in maintaining accuracy, efficiency, and relevance.

Tools like FineDataLink simplify data processing by integrating and synchronizing data across systems. Its real-time capabilities and low-code platform make it easier for you to manage complex datasets and drive innovation.

As industries evolve, data processing will continue to shape decision-making and operational strategies. Emerging technologies like AI and machine learning will enhance its capabilities, offering you new opportunities to harness data for growth and efficiency.

FAQ

What is the purpose of data processing?

Data processing helps you transform raw data into meaningful information. This process turns the data you collect into actionable insights, enabling better decision-making, improved efficiency, and strategic growth in various industries.

How does FineDataLink simplify data processing?

FineDataLink simplifies data processing by offering real-time synchronization, ETL/ELT capabilities, and API integration. Its low-code platform allows you to manage complex datasets easily, ensuring seamless data integration and faster insights without requiring extensive technical expertise.

Why is real-time data processing important?

Real-time data processing allows you to analyze and act on data as it arrives. This capability is essential for industries like finance and healthcare, where timely decisions can prevent losses, improve customer experiences, and enhance operational efficiency.

What are the benefits of electronic data processing?

Electronic data processing automates tasks, saving you time and reducing errors. It enables real-time monitoring, improves data accuracy, and supports advanced analytics like data mining. This method lets you handle large datasets efficiently and make informed decisions quickly.

Can data processing be used in education?

Yes, data processing helps educators analyze student performance and identify learning gaps. By using processed data, you can create personalized learning strategies, improve outcomes, and foster student success in both traditional and e-learning environments.