Sean, Industry Editor

Feb 11, 2025

Data quality management means making sure your organization’s data stays accurate, complete, consistent, reliable, and ready to use. You must define quality standards, profile data, monitor key metrics, and fix issues to keep trust in your data.

Poor data quality can cost you real opportunities. Studies show that 71% of companies struggle with go-to-market activities because of bad data, and 70% find it hard to make strategic decisions. FineDataLink gives you a modern way to handle data integration and improve data quality. You will see why strong management matters and how you can put it into practice.

Data quality management means you set up processes and standards to keep your data accurate, complete, consistent, and reliable. You use data quality management to make sure your organization’s data supports your business goals. This approach covers the entire data lifecycle, from data creation to storage, integration, and use in analytics.

You play a key role in building a strong data quality management system. You need to profile your data, monitor key quality metrics, and fix the issues you find.

You also need to set clear data quality standards and use a data governance framework. This framework helps you keep your data quality KPIs on track and ensures your data stays accurate and consistent across all systems. When you use a data quality strategy, you create a foundation for high-quality data that supports every part of your business.

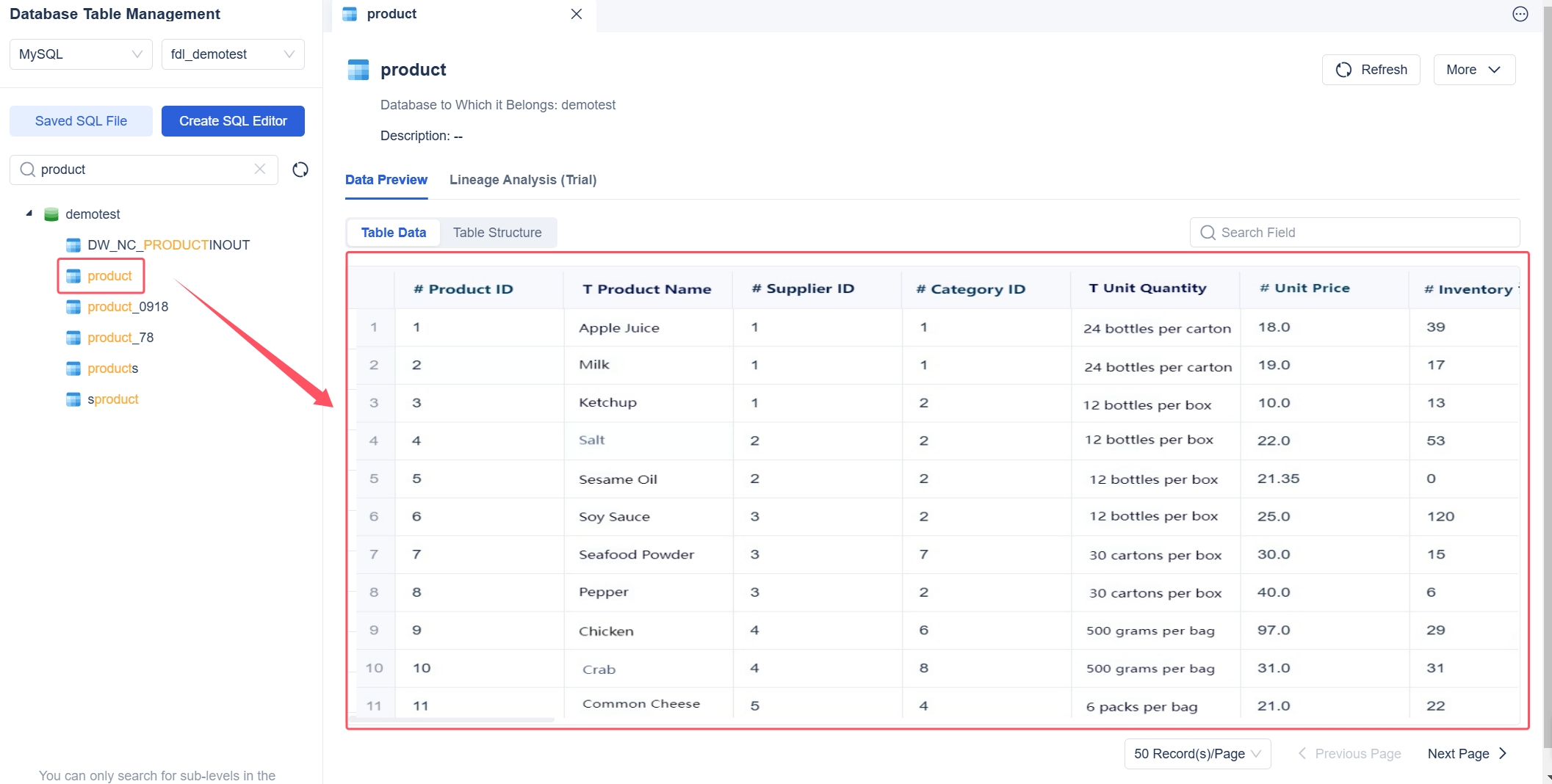

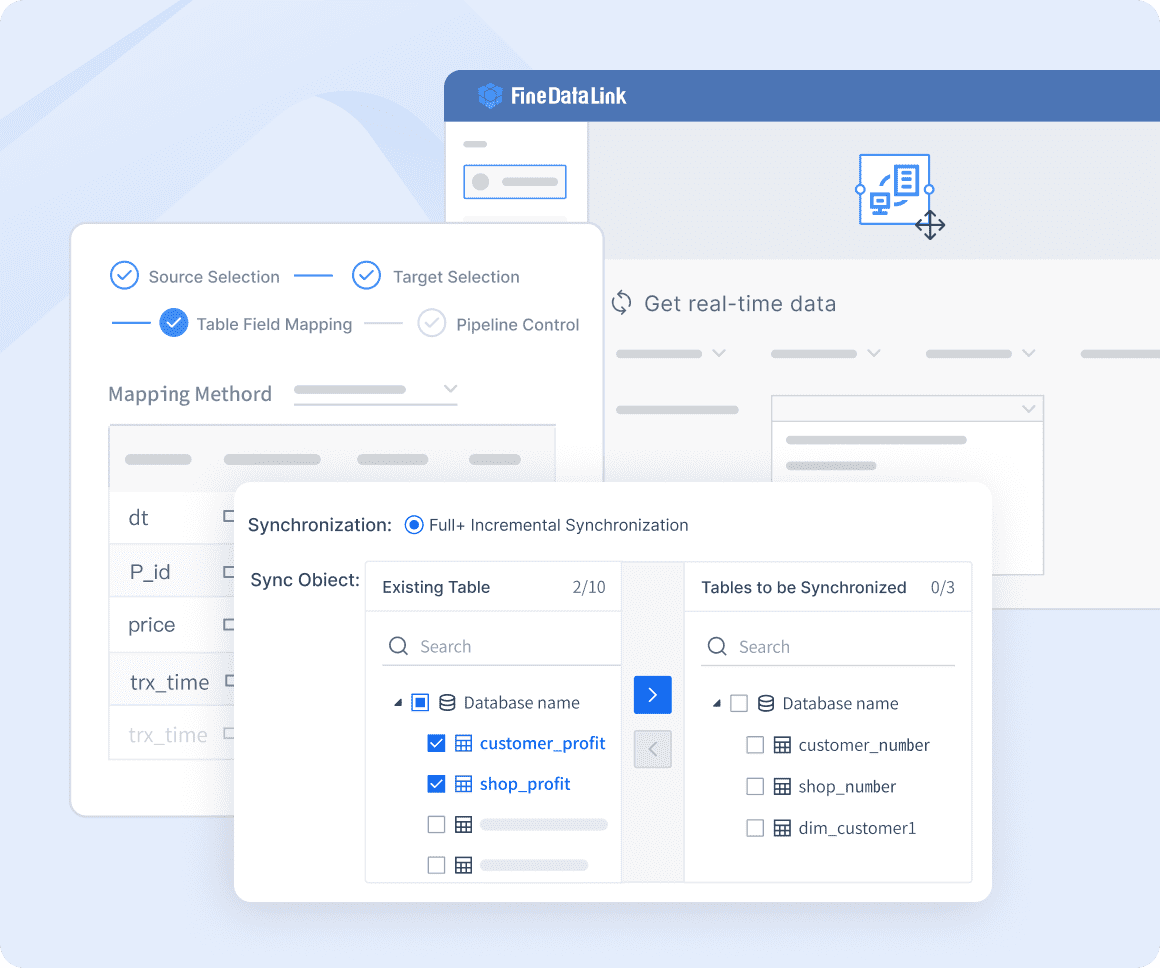

FineDataLink helps you manage data quality by providing a powerful data integration platform. You can collect data from many sources, synchronize it in real time, and transform it to meet your needs. FineDataLink supports multi-source data collection, non-intrusive real-time sync, and efficient data development. These features make it easier for you to maintain high-quality data and meet your data quality KPIs.

You need data quality management because your business decisions depend on high-quality data. If your data is not accurate or consistent, you risk making costly mistakes. Reliable data quality management ensures you can trust your data for analytics, reporting, and daily operations.

Here are some ways data quality management supports your business and analytics:

A strong data governance framework works with your data quality management system. It sets clear standards for data quality, assigns data stewards, and makes sure everyone in your organization values high-quality data. This framework also mandates regular quality checks, so you can catch and fix issues before they affect your business.

The benefits of data quality management become clear when you look at decision-making. High-quality data leads to better decisions, faster responses to market changes, and more confident strategic planning. You can see these benefits in the following table:

| Benefit | Description |
|---|---|
| Accurate Data | You base decisions on correct information, reducing errors. |
| Consistent Data | You keep data uniform across datasets, making it more reliable. |
| Timely Data | You get up-to-date information for quick decision-making. |
| Enhanced Profitability | High-quality data helps you create better marketing and sales strategies. |
| Operational Efficiency | You reduce inefficiencies caused by poor data quality. |

When you use a data quality strategy, you drive smarter decisions and support sustainable growth. Reliable data lets you respond quickly to changes and predict trends with confidence. Organizations that focus on data quality management see big improvements in their ability to make informed decisions and stay competitive.

FineDataLink gives you the tools to put your data quality strategy into action. You can use its dual-core ETL and ELT engine, flexible scheduling, and real-time monitoring to keep your data quality KPIs high. FineDataLink also supports five data synchronization methods, so you can choose the best way to keep your data accurate and up to date.

A strong data governance framework ties everything together. It defines what counts as master data, sets criteria for data quality, and guides your data quality management processes. With regular monitoring and improvement, you can maintain high-quality data and support every part of your business.

Understanding the main dimensions of data quality helps you build trust in your data and make better decisions. Fisher and Kingma (2001) identified accuracy, completeness, consistency, and timeliness as key dimensions. You should also consider uniqueness and validity when you manage data quality.

Accuracy measures how well your data reflects real-world facts. In manufacturing quality control, you rely on accurate measurements to spot defects and keep product standards high. If your data is not accurate, you risk making wrong decisions. FineDataLink supports accuracy by synchronizing data in real time and transforming it through ETL/ELT processes. This ensures you always work with the most precise information.

Completeness checks if all required data is present. Missing data can delay decisions and increase costs, especially in sectors like healthcare and finance. Machine learning models also need complete data for reliable results. FineDataLink helps you collect and integrate data from many sources, reducing the risk of gaps. When you use complete data, you meet regulatory requirements and avoid compliance issues.

Consistency means your data stays the same across different systems. If the same record holds different values in different sources, you may face reporting errors. FineDataLink keeps your data consistent by integrating and updating it across platforms. BOE Technology Group improved consistency by standardizing metrics and using a unified analysis framework.
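A consistency check can be as simple as comparing the same records in two systems field by field. The sketch below is a minimal illustration in plain Python, not a FineDataLink feature: the `find_inconsistencies` function, the `crm` and `billing` datasets, and the field names are all hypothetical.

```python
from typing import Any


def find_inconsistencies(
    source_a: dict[str, dict[str, Any]],
    source_b: dict[str, dict[str, Any]],
    fields: list[str],
) -> list[tuple[str, str, Any, Any]]:
    """Return (key, field, value_a, value_b) for shared records whose values differ."""
    mismatches = []
    # Only records present in both systems can be compared.
    for key in sorted(source_a.keys() & source_b.keys()):
        for field in fields:
            value_a = source_a[key].get(field)
            value_b = source_b[key].get(field)
            if value_a != value_b:
                mismatches.append((key, field, value_a, value_b))
    return mismatches


# Hypothetical customer records held in two separate systems.
crm = {
    "C001": {"email": "ana@example.com", "country": "DE"},
    "C002": {"email": "bo@example.com", "country": "US"},
}
billing = {
    "C001": {"email": "ana@example.com", "country": "DE"},
    "C002": {"email": "bo@example.org", "country": "US"},
    "C003": {"email": "cy@example.com", "country": "FR"},
}

mismatches = find_inconsistencies(crm, billing, ["email", "country"])
for key, field, value_a, value_b in mismatches:
    print(f"{key}: {field} differs ({value_a!r} vs {value_b!r})")
```

Records that exist in only one system (here `C003`) are skipped by this sketch; in practice you would likely report them separately as a completeness problem.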

Timeliness shows how current your data is. Real-time data lets you act fast and avoid costly problems. In supply chain management, delays in data can lead to missed sales or stockouts. FineDataLink’s real-time synchronization ensures you always have up-to-date data for quick decisions.

Uniqueness ensures each record appears only once. Duplicate records can waste resources and harm customer relationships. You avoid sending multiple messages to the same person and reduce errors in reporting when your data is unique.

Validity checks if your data meets defined rules and formats. Valid data is essential for regulatory compliance and accurate reporting. FineDataLink helps you validate data during integration, so you can trust your reports and meet legal standards.

When you focus on these data quality dimensions, you improve your analytics, reduce risks, and support business growth.
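Several of these dimensions can be expressed as simple ratios, which makes them easy to track over time. The following is a rough sketch under stated assumptions: the function names, the simplified email pattern, the seven-day freshness window, and the sample records are illustrative choices, not definitions from any standard or from FineDataLink.

```python
import re
from datetime import datetime, timedelta

# Deliberately simple pattern for illustration; real email validation is stricter.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def _filled(records, field):
    """Values of the field that are present and non-empty."""
    return [r[field] for r in records if r.get(field) not in (None, "")]


def completeness(records, field):
    """Share of records where the field is present and non-empty."""
    return len(_filled(records, field)) / len(records)


def uniqueness(records, field):
    """Share of distinct values among the non-empty values of the field."""
    values = _filled(records, field)
    return len(set(values)) / len(values)


def validity(records, field, pattern):
    """Share of non-empty values that match the expected format."""
    values = _filled(records, field)
    return sum(1 for v in values if pattern.match(v)) / len(values)


def timeliness(records, field, now, max_age):
    """Share of records whose timestamp is no older than max_age."""
    return sum(1 for r in records if now - r[field] <= max_age) / len(records)


# Hypothetical sample data. The functions assume a non-empty record list.
records = [
    {"id": 1, "email": "ana@example.com", "updated_at": datetime(2025, 2, 10)},
    {"id": 2, "email": "bo@example", "updated_at": datetime(2025, 2, 9)},
    {"id": 3, "email": "", "updated_at": datetime(2025, 1, 1)},
    {"id": 4, "email": "ana@example.com", "updated_at": datetime(2025, 2, 11)},
]
now = datetime(2025, 2, 11)

print("completeness:", completeness(records, "email"))
print("uniqueness:  ", uniqueness(records, "email"))
print("validity:    ", validity(records, "email", EMAIL_PATTERN))
print("timeliness:  ", timeliness(records, "updated_at", now, timedelta(days=7)))
```

Accuracy is left out on purpose: it requires comparing data against a trusted reference, which a formula over the dataset alone cannot provide.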

You face significant risks when you ignore data quality management. Poor data quality can disrupt your business operations and weaken your analytics. Many organizations experience wasted budgets, flawed decision-making, and compliance risks because of unreliable data. You may also lose customer trust and struggle with operational inefficiency.

Financial loss is among the most direct of these risks. For example, Citigroup was fined $400 million in 2020 for inadequate data governance, with an additional $136 million in penalties in 2024 for insufficient progress. These penalties show how data quality issues can have a direct impact on your bottom line.

Poor data quality often creates a cycle of rework. Your teams may spend hours manually correcting and reconciling reports. This disrupts operations, causes delays in supply chain management, and affects customer service. Over 25% of data employees say poor data quality is a barrier to data literacy, and the resulting losses can reach $25 million or more.

You also face legal and regulatory risks. Inability to fulfill Data Subject Rights or breaches of data minimization principles can result in legal penalties and reputational damage. These risks highlight why you need to prioritize data quality management in your organization.

When you invest in effective data quality management, you unlock a range of benefits that support your business growth and resilience. High-quality data improves your decision-making, increases operational efficiency, and helps you comply with regulations. You also gain a competitive advantage and build stronger relationships with your customers.

Here are some of the key benefits you can expect:

| Benefit | Description |
|---|---|
| Improved decision-making | Accurate and reliable data supports informed business choices. |
| Enhanced operational efficiency | Fewer errors and less rework save time and resources. |
| Regulatory compliance | Clean data helps you meet industry standards and avoid penalties. |
| Better revenue and profitability | High-quality data drives better marketing, sales, and financial outcomes. |

You also see improvements in customer satisfaction, data security, and scalability. Effective data quality management ensures your data is ready for future growth and new business opportunities.

FineDataLink supports these benefits through its value pillars and advanced features. The platform helps you build an efficient and high-quality data layer for business intelligence. You can integrate data from over 100 sources, synchronize it in real time, and transform it with low-code ETL and ELT tools. FineDataLink’s visual interface and drag-and-drop functionality make data quality reporting and management accessible to your entire team.

You can also automate data quality reporting, monitor key metrics, and set up alerts for potential data quality issues. FineDataLink reduces manual work and helps you maintain high standards across all your systems. This leads to faster, more accurate analytics and better business outcomes.

Real-world examples show the impact of effective data quality management. BOE Technology Group, a leader in the IoT and semiconductor display industry, improved operational efficiency by 50% after implementing a comprehensive data integration solution. They reduced inventory costs by 5% and enabled data-driven decision-making through KPI dashboards and cross-factory benchmarking.

“Moving to a more robust software system boosted productivity. We were able to get more audits out per auditor, with the ability to focus on specific questions and get more discretionary type audits fulfilled,” said Lindsey Hendricks, Quality Control Manager at BOE Mortgage.

“The monthly updates are a huge reassurance for us. We certainly keep up with all the guidelines, but having the software also push out updates, we can choose what we want to keep and what we want to remove. That’s very helpful for fine-tuning and getting more detailed audit input,” Hendricks explained.

“The reporting is great. There are hundreds of different reports that you can pull, and the customization of the reports is super helpful. We have many different audit requests, thus creating the need for different reports. It’s nice just to go in, pull what you need and then it’s right there for you,” Hendricks said.

You can achieve similar results by adopting effective data quality management practices. FineDataLink gives you the tools to automate data quality reporting, monitor your data in real time, and address data quality issues before they affect your business. This approach helps you build a strong foundation for growth, compliance, and innovation.

You start your data quality management journey with data profiling. This step helps you understand your data sources and the structure of your datasets. You use data profiling tools to examine the content and relationships within your data. The process involves several steps:

Data profiling tools help you spot errors early and set a strong foundation for other data quality practices.
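To make profiling concrete, here is a minimal sketch of a column profile built with the Python standard library. It is not how any particular profiling tool works; the `profile_column` function and the `orders` sample are hypothetical.

```python
from collections import Counter


def profile_column(rows, column):
    """Summarize one column: row count, nulls, distinct values, types, and range."""
    values = [row.get(column) for row in rows]
    non_null = [v for v in values if v is not None and v != ""]
    type_counts = Counter(type(v).__name__ for v in non_null)
    # min/max are only meaningful when every value has the same type.
    comparable = bool(non_null) and len(type_counts) == 1
    return {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "types": dict(type_counts),
        "min": min(non_null) if comparable else None,
        "max": max(non_null) if comparable else None,
    }


# Hypothetical order data with a missing amount, a repeated ID,
# and inconsistent casing in the status column.
orders = [
    {"order_id": 1, "amount": 19.99, "status": "paid"},
    {"order_id": 2, "amount": None, "status": "paid"},
    {"order_id": 3, "amount": 5.0, "status": "PAID"},
    {"order_id": 3, "amount": 42.5, "status": "refunded"},
]

for column in ("order_id", "amount", "status"):
    print(column, profile_column(orders, column))
```

Even this small profile surfaces three follow-up questions: why an amount is missing, why `order_id` has fewer distinct values than rows, and whether `paid` and `PAID` are meant to be the same status.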

Data cleansing tools remove errors and inconsistencies from your datasets. You need to establish clear data governance policies and automate data ingestion and ETL processes. Regular profiling, standardization, and normalization keep your data unified. Deduplication improves accuracy by removing duplicate records. Decide explicitly how to handle missing data, and monitor data quality continuously. Advanced data enrichment tools, including machine learning, can further improve your cleansing process.
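The sketch below shows one possible shape for a cleansing pass that combines standardization, deduplication, and missing-value handling. The choices it makes are assumptions for illustration only: email is used as the deduplication key, the first record per key wins, and records without a key are set aside for review instead of being dropped.

```python
def standardize(record):
    """Trim whitespace and normalize casing; empty strings become None."""
    cleaned = dict(record)
    cleaned["email"] = (record.get("email") or "").strip().lower() or None
    cleaned["country"] = (record.get("country") or "").strip().upper() or None
    return cleaned


def cleanse(records, key="email", defaults=None):
    """Return (clean, needs_review) after standardizing and deduplicating."""
    defaults = defaults or {}
    seen = set()
    clean, needs_review = [], []
    for record in map(standardize, records):
        if record[key] is None:
            # Without a key the record cannot be deduplicated, so flag it.
            needs_review.append(record)
            continue
        if record[key] in seen:
            continue  # Duplicate of a record already kept.
        seen.add(record[key])
        # Fill remaining gaps with explicit, visible defaults.
        for field, default in defaults.items():
            if record.get(field) is None:
                record[field] = default
        clean.append(record)
    return clean, needs_review


# Hypothetical raw input with formatting noise, a duplicate, and gaps.
raw = [
    {"email": " Ana@Example.com ", "country": "de"},
    {"email": "ana@example.com", "country": "DE"},
    {"email": None, "country": "us"},
    {"email": "bo@example.com", "country": ""},
]

clean, needs_review = cleanse(raw, defaults={"country": "UNKNOWN"})
print(clean)
print(needs_review)
```

Standardizing before deduplicating matters here: the first two records only become recognizable as duplicates once casing and whitespace are normalized.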

You use data validation to check if your data meets business rules and standards. In financial systems, you identify high-risk validation points, set up validation rules, and choose the right technology for automation. The table below shows a typical validation process:

| Step | Description |
|---|---|
| 1 | Identify high-risk validation points, such as compliance and large volumes. |
| 2 | Establish validation rules and parameters. |
| 3 | Select technology for automation and compatibility. |
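Step 2 of the table, establishing validation rules, can be sketched as a list of named checks applied to each record. The rules, the supported currency list, and the transaction fields below are hypothetical examples for a financial dataset, not rules taken from any regulation or product.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Rule:
    """A named business rule; check returns True when the record passes."""
    name: str
    check: Callable[[dict[str, Any]], bool]


# Illustrative rules only; real rules come from your business requirements.
RULES = [
    Rule(
        "amount_is_positive",
        lambda t: isinstance(t.get("amount"), (int, float)) and t["amount"] > 0,
    ),
    Rule("currency_is_supported", lambda t: t.get("currency") in {"USD", "EUR", "CNY"}),
    Rule("account_id_present", lambda t: bool(t.get("account_id"))),
]


def validate(transaction, rules=RULES):
    """Return the names of the rules that the transaction fails."""
    return [rule.name for rule in rules if not rule.check(transaction)]


good = {"account_id": "A-1", "amount": 120.0, "currency": "USD"}
bad = {"account_id": "", "amount": -5, "currency": "GBP"}

print(validate(good))
print(validate(bad))
```

Keeping rules as data rather than hard-coded `if` statements makes it easier to review them with business owners and to add or retire a rule without touching the validation logic.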

Ongoing data quality monitoring ensures your data stays accurate and reliable. Best practices include setting clear ownership, automated profiling, and quality checks. You optimize performance and manage resources to align with business needs. Data enrichment tools support continuous improvement and alert you to potential issues.
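One common way to implement such monitoring is to compare measured quality metrics against minimum thresholds and raise an alert when a metric falls short or is missing. The sketch below assumes metrics are expressed as ratios between 0 and 1; the `evaluate_metrics` function, the metric names, and the threshold values are hypothetical.

```python
def evaluate_metrics(metrics, thresholds):
    """Return an alert message for each metric that is missing or below its minimum."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            # A missing measurement is itself a monitoring failure.
            alerts.append(f"{name}: no measurement available")
        elif value < minimum:
            alerts.append(f"{name}: {value:.2%} is below the {minimum:.2%} threshold")
    return alerts


# Hypothetical minimum acceptable values agreed with the data owner.
thresholds = {"completeness": 0.98, "uniqueness": 0.99, "validity": 0.95}

# Hypothetical results from the latest scheduled check.
metrics = {"completeness": 0.991, "uniqueness": 0.972}

alerts = evaluate_metrics(metrics, thresholds)
for alert in alerts:
    print("ALERT", alert)
```

In a real pipeline the returned messages would be routed to whoever owns the dataset, which is why clear ownership is listed alongside automated checks.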

Data governance supports compliance and data quality in regulated industries. You simplify compliance, address risks, and establish data stewards. Good governance reduces complexity, maintains documentation, and ensures traceability. You also monitor data quality management practices and prepare for audits.

FineDataLink stands out among data quality tools. You can integrate data from multiple sources, maintain consistency, and automate synchronization. The platform transforms data for analysis and supports real-time pipelines. In manufacturing quality control, FineDataLink enables non-intrusive real-time sync, efficient ETL/ELT, and incremental log monitoring. These features help you build a robust data layer and improve your data quality management.

You need to follow recognized standards to ensure your data quality management program delivers results. The most widely adopted global standard is ISO 8000. This standard defines requirements for exchanging master data and sets clear expectations for accuracy, completeness, consistency, timeliness, and relevance. ISO 8000 also introduces the concept of portability, which helps you share master data efficiently with business partners. By aligning your processes with these standards, you create a strong foundation for data quality best practices.

Building a data quality culture starts with leadership support. When leaders value data quality, everyone in your organization pays attention to it. You can use data literacy programs to help employees understand how to manage and use data effectively. Integrating data governance into daily operations ensures that data quality remains a priority. The table below shows strategies for building a strong data quality culture:

| Strategy | Description |
|---|---|
| Leadership Support | Gain buy-in from leaders by showing how data quality improves decisions. |
| Data Literacy | Train employees to handle and interpret data correctly. |

You can use industry frameworks to guide your data quality management efforts. Some of the most common frameworks include:

These frameworks help you structure your approach and ensure you cover all aspects of data quality management.

Continuous improvement is essential for sustaining high data quality. You should standardize data collection procedures and use a data catalog to prevent misunderstandings. Automate repetitive tasks with tools like FineDataLink to detect and fix data defects quickly. Provide regular training on data quality assessment so everyone knows what to look for. Lean, Six Sigma, and Kaizen methods all support ongoing improvement. Regular audits and validations help you catch errors early and keep your data quality best practices up to date.

Implement standardized data collection procedures to minimize errors and inconsistencies. Conduct regular audits and validations of the data to identify and correct any inaccuracies.

FineDataLink supports your improvement efforts by automating data integration, monitoring, and validation. This makes it easier to maintain high standards and adapt to new business needs.

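You drive business success when you prioritize data quality management. High-quality data supports better decisions and boosts operational efficiency. To improve data quality, you should profile your data, cleanse and validate it, monitor it continuously, and back these steps with strong data governance.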

You create lasting improvements when you combine people, processes, and technology. FineDataLink helps you build a strong foundation for data integration and quality.

FanRuan

https://www.fanruan.com/en/blog

FanRuan provides powerful BI solutions across industries with FineReport for flexible reporting, FineBI for self-service analysis, and FineDataLink for data integration. Our all-in-one platform empowers organizations to transform raw data into actionable insights that drive business growth.

Data Quality Management means you set up processes and standards to keep your data accurate, complete, consistent, and reliable. You use it to make sure your data supports your business goals and decision-making.

You need Data Quality Management to trust your data. High-quality data helps you make better decisions, avoid costly mistakes, and stay compliant with regulations. It also improves efficiency and supports business growth.

FineDataLink supports data quality management by integrating data from many sources, synchronizing it in real time, and transforming it with low-code ETL and ELT tools. You can automate monitoring and reporting to keep your data quality high.

You start with data profiling, then move to data cleansing, validation, and monitoring. You also set up data governance to keep your data quality standards strong. Each step helps you maintain reliable and accurate data.

Data quality management also supports regulatory compliance. It helps ensure your data meets industry standards and legal requirements, and you reduce the risk of fines and penalties by keeping your data accurate, complete, and traceable.