Data extraction is the process of retrieving data from various sources to prepare it for analysis. This practice is crucial in modern data management, enabling businesses to gain insights and make informed decisions. By using tools like FineDataLink, you can streamline data extraction, ensuring accuracy and efficiency. FineBI further enhances this by transforming raw data into actionable insights. These tools not only simplify data handling but also improve business operations, making them indispensable in today's competitive landscape.

Understanding Data Extraction

Definition and Basic Concepts of Data Extraction

What is Data Extraction?

Data extraction involves retrieving data from various sources to prepare it for analysis. You gather information from databases, files, or APIs to use it for further processing. This step is essential in both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes. By extracting data, you ensure that it is ready for transformation and integration, which leads to meaningful insights.

Key Components of Data Extraction

Several components make up the data extraction process:

- Data Sources: These include databases, spreadsheets, and web pages. You need to identify where the data resides.

- Extraction Methods: You can use manual or automated techniques to retrieve data. Automated methods often involve using tools or scripts.

- Data Quality: Ensuring the accuracy and completeness of the extracted data is crucial. You must clean and validate the data to maintain its integrity.

Types of Data Extraction

Structured Data Extraction

Structured data extraction deals with data that is organized in a predefined format, such as tables or spreadsheets. You can easily extract this type of data because it follows a consistent structure. For example, extracting customer information from a database involves retrieving data from specific fields like name, address, and phone number.
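To make the customer example concrete, here is a minimal sketch that reads the name, address, and phone fields from a table using Python's built-in `sqlite3` module. The `customers` table, its columns, and the sample rows are assumptions made up for illustration; a real system would have its own schema and connection details.

```python
import sqlite3

# Hypothetical in-memory database standing in for a real customer system.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers ("
    "id INTEGER PRIMARY KEY, name TEXT, address TEXT, phone TEXT)"
)
conn.executemany(
    "INSERT INTO customers (name, address, phone) VALUES (?, ?, ?)",
    [
        ("Ada Lopez", "12 Elm St", "555-0101"),
        ("Ben Chow", "98 Oak Ave", "555-0102"),
    ],
)


def extract_customers(connection):
    """Return the name, address, and phone fields for every customer row."""
    cursor = connection.execute(
        "SELECT name, address, phone FROM customers ORDER BY id"
    )
    # cursor.description holds one tuple per selected column; item 0 is its name.
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


customers = extract_customers(conn)
for customer in customers:
    print(customer)
```

Because the structure is known in advance, the query can name exactly the fields it needs, which is what makes structured extraction comparatively simple.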

Unstructured Data Extraction

Unstructured data extraction involves dealing with data that lacks a predefined format. This includes text documents, emails, and social media posts. You need advanced techniques to extract meaningful information from unstructured data. Businesses collect vast amounts of unstructured data, requiring sophisticated extraction methods to gain insights and make informed decisions.
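As a small illustration of pulling fields out of free text, the sketch below uses regular expressions to find email addresses and phone numbers in a message. The patterns are deliberately simplified assumptions (the phone pattern only matches the `555-0142` style used in the sample) and would need to be broadened for real-world text; production systems often rely on more advanced techniques such as natural language processing.

```python
import re

# Simplified patterns for illustration only; real data needs broader rules.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\b\d{3}-\d{4}\b")


def extract_contacts(text):
    """Return every email address and phone number found in free text."""
    return {
        "emails": EMAIL_PATTERN.findall(text),
        "phones": PHONE_PATTERN.findall(text),
    }


# Hypothetical message body standing in for an email or social media post.
message = (
    "Hi team, please send the invoice to billing@example.com and copy "
    "ops@example.org. If anything is unclear, call 555-0142."
)
contacts = extract_contacts(message)
print(contacts)
```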

"Data extraction is crucial for gaining insights, making better decisions, and improving efficiency."

By understanding these concepts and types, you can effectively extract data to support your business needs. This process not only enhances decision-making but also improves operational efficiency.

Tools for Data Extraction

Popular Data Extraction Tools

You have a variety of data extraction tools at your disposal. The right one can significantly enhance your ability to retrieve and process data efficiently.

Open-source Tools

Open-source tools offer flexibility and cost-effectiveness. They allow you to customize and adapt the software to meet your specific needs. Free and low-cost visual tools fill a similar niche if you prefer not to write code. ScrapeStorm is a notable example, although it is distributed as a freemium product rather than as open-source software. This AI-powered tool focuses on web scraping and data extraction. It provides a simple and intuitive visual operation, making it accessible even if you're not a tech expert. ScrapeStorm automatically recognizes entities like emails, numbers, and images, streamlining the extraction process.

Commercial Tools

Commercial tools often come with robust support and advanced features. They are designed to handle large-scale data extraction tasks. Cloud-based ETL solutions are a prime example. These tools connect structured and unstructured data sources to destinations, often without requiring any coding. They offer control, accuracy, and agility, making them a good fit for businesses that need reliable data extraction capabilities.

Criteria for Choosing the Right Tool

Selecting the right data extraction tool is crucial for your success. Here are some key criteria to consider:

Scalability

Scalability ensures that your chosen tool can grow with your needs. As your data volume increases, the tool should handle the additional load without compromising performance. Look for data extraction software that can manage a variety of data sources at scale and that supports both batch and continuous modes, so you can extract data efficiently regardless of volume.

Ease of Use

Ease of use is essential, especially if you want to minimize the learning curve. A user-friendly interface allows you to focus on extracting and analyzing data rather than struggling with complex configurations. Automated data extraction solutions that integrate with your existing databases and applications reduce manual coding and maintenance. This makes them accessible to users with varying technical expertise and helps you make better decisions faster.

By considering these factors, you can choose a data extraction tool that aligns with your business objectives. Whether you opt for an open-source or commercial solution, the right tool will enhance your data management capabilities and support informed decision-making.

Techniques in Data Extraction

Manual vs. Automated Extraction

When you extract data, you can choose between manual and automated methods. Each approach has its own set of advantages.

Advantages of Manual Extraction

- Flexibility: You can adapt to unexpected changes in data structure. This flexibility proves useful when dealing with complex or poorly organized data sources.

- Control: You maintain complete control over the extraction process. This ensures that you capture exactly what you need without relying on predefined algorithms.

- Customization: You can tailor the extraction process to meet specific requirements. This customization allows for a more personalized approach to data handling.

"Manual extraction offers a level of precision and adaptability that automated methods may not always provide." - Data Extraction Process Overview

Benefits of Automated Extraction

- Efficiency: Automated extraction saves time by processing large volumes of data quickly. This efficiency reduces the workload and speeds up data availability.

- Consistency: You achieve consistent results with automated tools. This consistency minimizes errors and ensures data quality.

- Scalability: Automated methods handle increasing data volumes with ease. This scalability makes them ideal for growing businesses with expanding data needs.

"Automated extraction overcomes challenges like complexity and time consumption, providing a streamlined solution." - Challenges in Data Extraction

Web Scraping

Web scraping stands out as a popular technique for extracting data from websites. It involves using software to collect information from web pages.

How Web Scraping Works

- Identify Target Websites: You start by selecting the websites from which you want to extract data. These sites contain the information you need for analysis.

- Develop a Scraper: You build a scraper or use existing software to navigate the website. The scraper identifies and collects the desired data.

- Extract Data: The scraper retrieves the data and stores it in a structured format. This format makes it ready for further analysis and processing.
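The steps above can be sketched with Python's standard-library `html.parser`. To keep the example self-contained, the page content is a hard-coded string rather than a live download, and the `product`, `name`, and `price` class names are assumptions invented for illustration; a real scraper would fetch the page over HTTP and match the target site's actual markup.

```python
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would download this over HTTP.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span><span class="price">19.99</span></li>
  <li class="product"><span class="name">Notebook</span><span class="price">4.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect name/price pairs from the span elements inside each product."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # which field the current <span> holds, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.products.append({})  # start a new structured record
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside a span we are interested in.
        if self._field and self.products:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


parser = ProductParser()
parser.feed(SAMPLE_HTML)
products = parser.products
print(products)
```

The result is a list of dictionaries, which is the kind of structured format that can be passed on for further analysis.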

"Web scraping involves retrieving data from various sources and converting it into a usable format." - Data Extraction Process Overview

Legal Considerations

When you engage in web scraping, you must consider legal aspects. Some websites have terms of service that restrict data extraction. Violating these terms can lead to legal consequences. Always ensure compliance with relevant laws and regulations, such as copyright and data protection laws.

"Accurate data extraction leads to compliance, cost savings, and standardized digital format." - Data Extraction Importance in Healthcare

By understanding these techniques, you can choose the most suitable method for your data extraction needs. Whether you opt for manual or automated processes, or employ web scraping, each technique offers unique benefits that enhance your data management capabilities.

The ETL Process for Data Extraction

Overview of ETL

ETL stands for Extract, Transform, Load. It plays a crucial role in data management and analytics. You begin with the Extract Phase, where you retrieve data from various sources. This step is essential because it sets the foundation for the entire process. You gather data from databases, files, or APIs, ensuring that you have all the necessary information for further processing.

Next, you move to the Transform Phase. Here, you convert the extracted data into a suitable format. This transformation involves cleaning, filtering, and aggregating data to meet your analysis needs. You ensure that the data is consistent and ready for integration.

Finally, you reach the Load Phase. In this step, you transfer the transformed data into a target system, such as a data warehouse. This phase is vital for making the data accessible for analysis and decision-making. You ensure that the data is stored efficiently and securely.
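The three phases can be sketched end to end in a few lines of Python. This is only a toy pipeline under stated assumptions: the CSV content, the `region_sales` table, and the in-memory SQLite database standing in for a data warehouse are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source file contents; a real pipeline would read from disk or an API.
RAW_CSV = """order_id,region,amount
1,north,100.50
2,south,
3,north,49.50
4,south,20.00
"""


def extract(raw_text):
    """Extract: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: drop rows without an amount, then total the amount per region."""
    totals = {}
    for row in rows:
        if not row["amount"]:
            continue  # filter out incomplete records
        totals[row["region"]] = totals.get(row["region"], 0.0) + float(row["amount"])
    return sorted(totals.items())


def load(connection, region_totals):
    """Load: write the aggregated totals into the target table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS region_sales (region TEXT PRIMARY KEY, total REAL)"
    )
    connection.executemany(
        "INSERT OR REPLACE INTO region_sales VALUES (?, ?)", region_totals
    )
    connection.commit()


# In-memory SQLite database standing in for a data warehouse.
warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract(RAW_CSV)))
result = warehouse.execute(
    "SELECT region, total FROM region_sales ORDER BY region"
).fetchall()
print(result)
```

Keeping each phase in its own function mirrors the separation described above and makes it easier to swap in a different source or target later.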

Role of Data Extraction in ETL

Importance in Data Integration

Data extraction serves as the first step in the ETL process. It is crucial for data integration. By extracting data, you lay the groundwork for transforming and loading it into a unified system. This integration allows you to analyze data from multiple sources, providing a comprehensive view of your business operations. You gain insights that drive informed decisions and strategic growth.

"Data extraction is generally the first step in the ETL process, essential for rendering data usable for analysis."

Challenges in the Extract Phase

You may encounter several challenges during the extract phase. One common issue is dealing with data quality. Ensuring accuracy and completeness is vital. You must clean and validate the data to maintain its integrity. Handling large volumes of data can also be challenging. You need efficient methods to manage and process this data without compromising performance.

Another challenge is identifying changes in data structure. You must adapt to these changes to ensure successful extraction. By addressing these challenges, you enhance the effectiveness of the ETL process, leading to better data management and analysis.
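One simple way to notice a change in data structure is to compare the fields of each incoming record with the fields your pipeline expects. The sketch below assumes a hypothetical schema of `id`, `name`, and `email`; the function name and the sample record are illustrative only.

```python
# Hypothetical schema that the extraction job was originally built for.
EXPECTED_COLUMNS = frozenset({"id", "name", "email"})


def detect_schema_drift(record, expected=EXPECTED_COLUMNS):
    """Report fields that disappeared from, or were added to, a source record."""
    actual = set(record)
    return {
        "missing": sorted(expected - actual),
        "unexpected": sorted(actual - expected),
    }


# The source system renamed "name" to "full_name" in this example.
incoming = {"id": 7, "full_name": "Ada Lopez", "email": "ada@example.com"}
drift = detect_schema_drift(incoming)
print(drift)
```

Running a check like this before the transform step lets you stop or adapt the job as soon as the source changes, rather than loading incomplete data.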

Challenges in Data Extraction

Common Challenges of Data Extraction

Data Quality Issues

When you extract data, maintaining its quality becomes a significant challenge. Data often comes from various sources, each with its own format and structure. This diversity can lead to inconsistencies and inaccuracies. You might encounter missing values, duplicate entries, or outdated information. These issues can compromise the integrity of your analysis.

To ensure high-quality data, you must implement rigorous validation processes. Regularly check for errors and inconsistencies. By doing so, you maintain the reliability of your data, which is crucial for making informed decisions.
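A validation pass can be as simple as counting the problems described above. The following sketch checks for missing values, duplicate entries, and outdated records. The field names, the `updated` date field, and the cutoff date are assumptions chosen for illustration.

```python
from datetime import date


def validate(records, required_fields, key_field, stale_before):
    """Count records with missing values, duplicate keys, or stale update dates."""
    report = {"missing_values": 0, "duplicates": 0, "outdated": 0}
    seen_keys = set()
    for record in records:
        # A required field counts as missing when it is absent, None, or empty.
        if any(record.get(field) in (None, "") for field in required_fields):
            report["missing_values"] += 1
        key = record.get(key_field)
        if key in seen_keys:
            report["duplicates"] += 1
        seen_keys.add(key)
        updated = record.get("updated")
        if updated is not None and updated < stale_before:
            report["outdated"] += 1
    return report


# Hypothetical extracted records.
records = [
    {"id": 1, "email": "a@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "email": "", "updated": date(2024, 6, 1)},
    {"id": 1, "email": "a@example.com", "updated": date(2024, 5, 1)},
    {"id": 3, "email": "c@example.com", "updated": date(2019, 1, 1)},
]
report = validate(records, ["id", "email"], "id", date(2023, 1, 1))
print(report)
```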

Handling Large Volumes of Data

Managing large volumes of data presents another challenge. As businesses grow, the amount of data they generate can increase rapidly. You need efficient methods to process and store this data. Without proper management, you risk overwhelming your systems and slowing down operations.

To tackle this, consider using scalable data extraction tools. These tools can handle increasing data loads without compromising performance. By investing in the right technology, you ensure that your data extraction processes remain efficient and effective.

Solutions to Overcome Challenges

Data Cleaning Techniques

Data cleaning plays a vital role in overcoming quality issues. You can use several techniques to improve data accuracy. Start by removing duplicates and correcting errors. Standardize formats to ensure consistency across datasets. Implement automated tools to streamline the cleaning process.

By adopting these techniques, you enhance the quality of your data. Clean data leads to more accurate analysis and better decision-making.
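Here is a minimal sketch of two of these cleaning steps, standardizing formats and removing duplicates. It assumes records with `name` and `email` fields and treats the normalized email address as the identity of a record; both choices are illustrative rather than a general rule.

```python
def clean(records):
    """Standardize name and email formats, then drop duplicate emails."""
    cleaned = []
    seen_emails = set()
    for record in records:
        normalized = {
            # Collapse repeated whitespace and use consistent capitalization.
            "name": " ".join(record["name"].split()).title(),
            "email": record["email"].strip().lower(),
        }
        if normalized["email"] in seen_emails:
            continue  # duplicate of a record that was already kept
        seen_emails.add(normalized["email"])
        cleaned.append(normalized)
    return cleaned


# Hypothetical raw records with inconsistent formatting.
raw = [
    {"name": "  ada   lopez ", "email": "Ada@Example.com "},
    {"name": "Ada Lopez", "email": "ada@example.com"},
    {"name": "BEN CHOW", "email": "ben@example.com"},
]
cleaned_records = clean(raw)
print(cleaned_records)
```

Standardizing before deduplicating matters here: the first two records only turn out to be duplicates once their formats match.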

Efficient Data Processing

Efficient data processing is essential for handling large volumes of data. You can achieve this by optimizing your extraction methods. Use parallel processing to speed up data retrieval. Implement batch processing to manage large datasets effectively.
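Batch and parallel processing can be combined, as in the sketch below, which splits a list of record IDs into batches and hands the batches to a thread pool. The `extract_batch` function is a placeholder assumption; in practice it would query a database or call an API, which is the kind of I/O-bound work where threads tend to help.

```python
from concurrent.futures import ThreadPoolExecutor


def batches(items, size):
    """Yield consecutive slices of `items` with at most `size` elements each."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def extract_batch(batch):
    """Placeholder for real I/O-bound work such as a database query or API call."""
    return [record_id * 2 for record_id in batch]


record_ids = list(range(1, 11))

# pool.map runs the batches concurrently and returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    batch_results = list(pool.map(extract_batch, batches(record_ids, 4)))

# Flatten the per-batch results back into a single list.
extracted = [value for batch in batch_results for value in batch]
print(extracted)
```

The batch size and worker count are tuning parameters; suitable values depend on the source system's limits and should be measured rather than guessed.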

Additionally, consider cloud-based solutions for scalability. These platforms offer the flexibility to expand your data processing capabilities as needed. By focusing on efficiency, you ensure that your data extraction processes remain robust and reliable.

"Efficient data processing ensures that businesses can handle large volumes of data without compromising performance." - Data Management Insights

By addressing these challenges and implementing effective solutions, you can improve your data extraction processes. This not only enhances the quality of your data but also supports better business outcomes.

Use Cases of Data Extraction

Data extraction plays a pivotal role in various industries, helping streamline operations and enhance decision-making. By understanding its applications, you can leverage data extraction to improve your business processes.

Industry Applications

E-commerce

In the e-commerce industry, data extraction helps you gather valuable insights from customer interactions, sales data, and market trends. By extracting data from online transactions, customer reviews, and social media, you can:

- Enhance Customer Experience: Understand customer preferences and tailor your offerings to meet their needs.

- Optimize Inventory Management: Analyze sales patterns to predict demand and manage stock levels efficiently.

- Improve Marketing Strategies: Identify successful campaigns and target audiences more effectively.

Healthcare

In healthcare, data extraction enables you to access critical patient information, research data, and operational metrics. This process supports:

- Patient Care Improvement: Extract data from electronic health records (EHRs) to provide personalized treatment plans.

- Research and Development: Analyze clinical trial data to accelerate drug development and innovation.

- Operational Efficiency: Monitor hospital performance metrics to optimize resource allocation and reduce costs.

"Accurate data extraction leads to compliance, cost savings, and standardized digital format." - Data Extraction Importance in Healthcare

Future Trends in Data Extraction

Emerging Technologies

AI and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are changing how data extraction works. You can leverage these technologies to enhance accuracy and efficiency. AI-driven tools adapt to varying data structures, which helps keep extraction precise. For instance, Optical Character Recognition (OCR) combined with AI can reach accuracy rates reported as high as 99% on clean, well-scanned documents, although results vary with document quality. This technology recognizes text from images or scanned documents, so you no longer need to re-key that content by hand. By using AI and ML, you can automate complex tasks, reducing manual effort and increasing productivity.

Cloud-based Solutions

Cloud-based solutions offer flexibility and scalability in data extraction. You can access data from anywhere, anytime, without relying on physical infrastructure. These solutions support real-time data processing, allowing you to make quick decisions. Cloud platforms also provide robust security measures, protecting your data from unauthorized access. By adopting cloud-based solutions, you ensure that your data extraction processes remain efficient and secure, even as your business grows.

Predictions for the Future

Increased Automation

Automation will play a significant role in the future of data extraction. You can expect more advanced tools that require minimal human intervention. Automated processes will handle large volumes of data swiftly, ensuring consistency and accuracy. With automation, you can focus on analyzing data rather than extracting it. This shift will lead to faster decision-making and improved business outcomes.

Enhanced Data Security

As data becomes more valuable, security will become a top priority. You must ensure that your data extraction methods comply with legal and ethical standards. Enhanced security measures will protect sensitive information from breaches and unauthorized access. By prioritizing data security, you build trust with your customers and stakeholders. This focus on security will drive the development of new technologies and practices, ensuring that your data remains safe and reliable.

By embracing these future trends, you can stay ahead in the ever-evolving data landscape. Whether through AI, cloud solutions, or enhanced security, these advancements will empower you to extract valuable insights and drive strategic growth.

FanRuan's Role in Data Extraction

FanRuan plays a pivotal role in enhancing data extraction processes through its innovative solutions. By leveraging advanced technologies, you can streamline data management and gain valuable insights. Let's explore how FineDataLink and FineBI contribute to efficient data extraction and analysis.

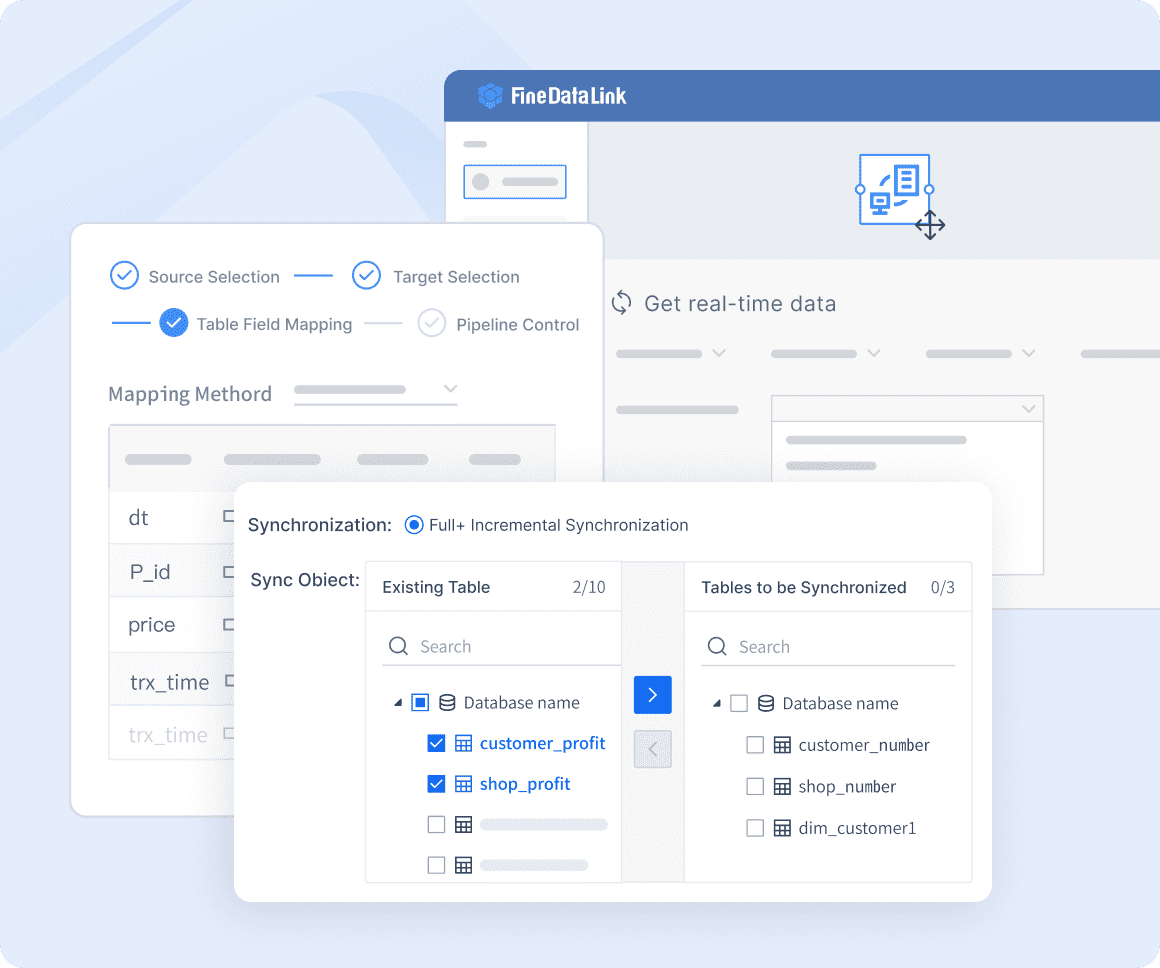

FineDataLink for Data Integration

FineDataLink serves as a comprehensive platform for data integration. It simplifies complex tasks, allowing you to focus on extracting meaningful insights from your data.

Real-time Data Synchronization

With FineDataLink, you achieve real-time data synchronization. This feature ensures that your data remains up-to-date across multiple systems. You can synchronize data with minimal latency, making it ideal for applications requiring timely information. This capability supports database migration and backup, ensuring seamless data flow.

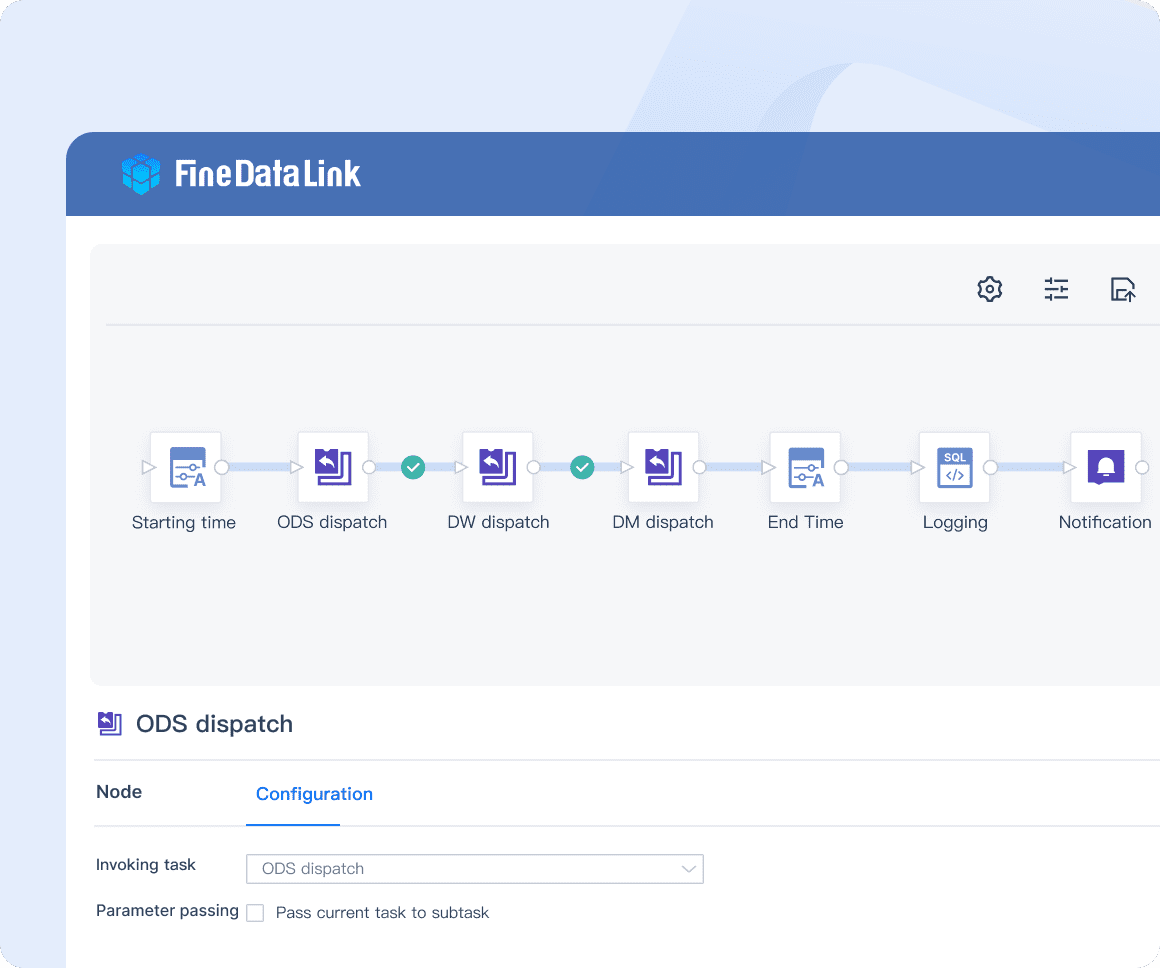

Advanced ETL & ELT Capabilities

FineDataLink offers advanced ETL and ELT capabilities. These functions allow you to preprocess data efficiently. You can transform raw data into a structured format, ready for analysis. This process enhances data quality and supports the construction of both offline and real-time data warehouses.

"FineDataLink addresses the challenges of data integration, data quality, and data analytics through its core functions." - FanRuan Software Co., Ltd.

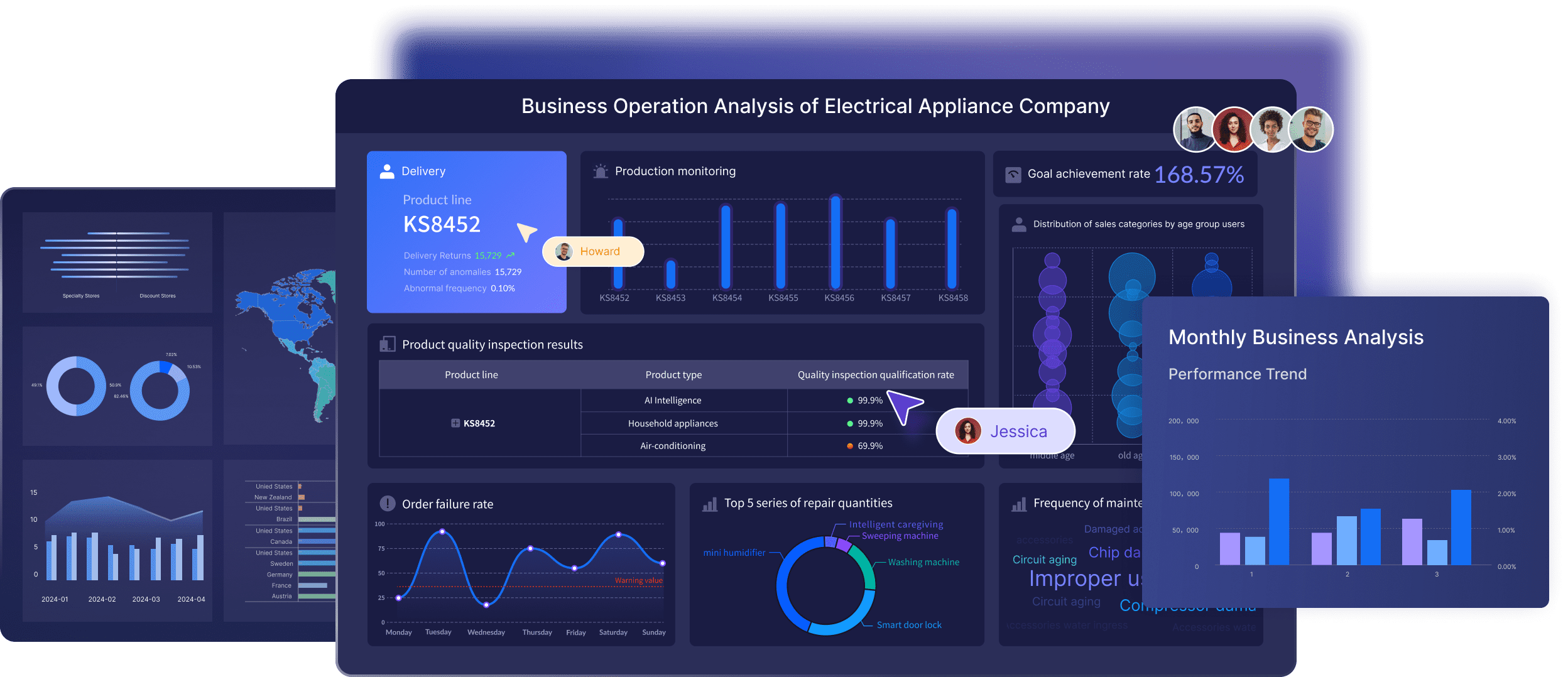

FineBI for Business Intelligence

FineBI empowers you to perform self-service data analysis and gain real-time insights. This tool transforms raw data into actionable information, supporting informed decision-making.

Self-service Data Analysis

With FineBI, you can conduct self-service data analysis. This feature enables you to explore data independently, without relying on IT support. You can connect to various data sources, analyze trends, and visualize results. This capability democratizes data access, allowing you to make data-driven decisions with ease.

Real-time Data Insights

FineBI provides real-time data insights, enhancing your ability to respond to changing business conditions. You can track key performance indicators (KPIs) and identify trends as they emerge. This real-time analysis boosts productivity and supports strategic growth.

By utilizing FineDataLink and FineBI, you can optimize your data extraction processes. These tools not only enhance data integration and analysis but also support your business in achieving strategic objectives. Embrace FanRuan's solutions to stay ahead in the competitive landscape.

In this blog post, you explored the essential role of data extraction in modern business operations. You learned how it serves as the foundation for creating actionable insights and analytics. Staying updated with emerging trends like AI and cloud-based solutions helps you remain competitive. By exploring various tools and techniques, you can enhance your data management capabilities. This empowers you to make informed decisions, improve operations, and minimize risks. Embrace these advancements to drive strategic growth and maintain a robust data-driven approach.

FAQ

What is data extraction?

Data extraction involves retrieving data from various sources to prepare it for analysis. You gather information from databases, files, or APIs to use it for further processing. This step is essential in both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes. By extracting data, you ensure that it is ready for transformation and integration, which leads to meaningful insights.

Why is data extraction important?

Data extraction plays a crucial role in modern data management. It allows you to collect and organize data from multiple sources, enabling comprehensive analysis. By extracting data, you gain insights that drive informed decisions and strategic growth. This process enhances operational efficiency and supports better business outcomes.

What methods can you use for data extraction?

You can use several methods for data extraction, including:

- Manual Extraction: Involves manually retrieving data from sources. This method offers flexibility and control but can be time-consuming.

- Automated Extraction: Uses software tools to extract data quickly and consistently. This method is efficient and scalable, ideal for handling large volumes of data.

- Web Scraping: Involves using software to collect data from websites. This technique is useful for extracting information from online sources.

What challenges might you face during data extraction?

You may encounter several challenges during data extraction, such as:

- Data Quality Issues: Ensuring accuracy and completeness is vital. You must clean and validate the data to maintain its integrity.

- Handling Large Volumes of Data: Managing large datasets can be challenging. You need efficient methods to process and store this data without compromising performance.

- Adapting to Changes in Data Structure: Identifying changes in data structure is crucial for successful extraction. You must adapt to these changes to ensure accurate [data retrieval](https://www.fanruan.com/en/glossary/big-data/what-is-data-retrieval).

How can you overcome data extraction challenges?

To overcome data extraction challenges, consider the following solutions:

- Data Cleaning Techniques: Implement rigorous validation processes to improve data accuracy. Use automated tools to streamline the cleaning process.

- Efficient Data Processing: Optimize your extraction methods for better performance. Use parallel processing and batch processing to manage large datasets effectively.

- Scalable Tools: Invest in scalable data extraction tools that can handle increasing data loads. These tools ensure that your processes remain efficient and effective.
