Data completeness refers to the presence of all necessary data for a given purpose. It ensures that analyses, models, and decisions rest on an accurate representation of the situation. Incomplete data can lead to faulty assumptions and missed opportunities. For instance, the analytics solutions FineDataLink, FineReport, FineBI, and FineVis all emphasize complete data. Without complete data, organizations risk skewed insights, flawed conclusions, and unreliable analytics.

What is Data Completeness?

Definition of Data Completeness

Data completeness refers to the presence of all necessary information within a dataset. It ensures that the data is useful for analysis, modeling, and decision-making. When data is complete, it provides a comprehensive view of the subject matter, allowing for accurate insights and conclusions. Incomplete data, on the other hand, can lead to biased or inaccurate results. This concept is crucial across various domains, including healthcare, finance, technology, and social research. Organizations rely on complete data to derive meaningful insights and make informed decisions.

Characteristics of Complete Data

Complete data possesses several key characteristics that enhance its reliability and usefulness:

- Accuracy: When all required information is present, analyses are less likely to contain errors. Missing attributes can lead to erroneous analyses and incorrect conclusions.

- Consistency: Data completeness contributes to consistency across datasets. Consistent data allows for seamless integration and comparison, which is essential for comprehensive analysis.

- Reliability: Reliable data is foundational for informed decision-making. Complete data provides a dependable basis for drawing conclusions and making strategic choices.

- Comprehensiveness: Complete data covers all aspects of a problem or situation, ensuring a thorough understanding. This comprehensiveness is vital for accurate modeling and decision-making.

In various industries, from e-commerce to healthcare, data completeness supports data accuracy, consistency, and reliability. Measuring it is vital for organizations aiming to make data-driven decisions. Specific metrics and KPIs help monitor data completeness over time and improve data quality.

Why is Data Completeness Important?

Data completeness plays a pivotal role in various aspects of data management and utilization. It ensures that all necessary information is available, which is crucial for making informed decisions, conducting accurate data analytics, and adhering to compliance and regulatory standards.

Impact on Decision-Making

Complete data is essential for making accurate and informed decisions. When decision-makers have access to all relevant data, they can understand the full scope of a situation. This comprehensive understanding minimizes the risk of biased or faulty analyses. In contrast, incomplete data can lead to guesswork, resulting in unreliable insights and skewed outcomes. For instance, businesses relying on incomplete data may overlook critical factors, leading to poor decision-making and potential revenue loss. According to Gartner, poor data quality, including incomplete data, can cost organizations an average of $12.9 million annually. Therefore, ensuring data completeness is vital for business success, especially in today's competitive environment.

Role in Data Analytics

Data analytics relies heavily on the completeness of data. Analysts need complete datasets to draw accurate conclusions and generate reliable insights. Incomplete data can hinder analysis, leading to false conclusions and costly mistakes. For example, missing data points can introduce bias, affecting the overall quality and reliability of the analysis. Complete data provides a comprehensive view, allowing analysts to uncover patterns and trends that might otherwise go unnoticed. This comprehensive analysis is crucial for strategic planning and operational adjustments across various industries, from healthcare to finance.
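To make the bias point concrete, here is a minimal sketch with made-up numbers. The `true_amounts` and `recorded_amounts` lists are purely illustrative and do not come from any real dataset. The sketch assumes the largest orders are the ones that went unrecorded, and compares the average an analyst would compute from the recorded values with the average over the full data.

```python
from statistics import mean

# Hypothetical order amounts. None marks a value that was never recorded.
# In this made-up scenario the two largest orders are the ones missing.
true_amounts = [20, 25, 30, 35, 400, 500]
recorded_amounts = [20, 25, 30, 35, None, None]

# Average over only the values that were actually recorded.
observed = [amount for amount in recorded_amounts if amount is not None]
observed_mean = mean(observed)

# Average over the full data, which an analyst would not normally have.
true_mean = mean(true_amounts)

print(f"mean from recorded values: {observed_mean}")
print(f"mean from full data: {true_mean:.2f}")
```

Because the missing values are not spread evenly, simply ignoring them understates the average order size by a wide margin. When gaps are random the distortion is usually smaller, but an analyst rarely knows which case applies without checking.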

Importance for Compliance and Regulation

Data completeness is also critical for compliance and regulatory purposes. Many industries operate under strict regulations that require accurate and complete data reporting. Incomplete data can lead to non-compliance, resulting in legal penalties and reputational damage. For instance, in the healthcare sector, incomplete patient records can compromise patient safety and violate regulatory standards. Similarly, financial institutions must maintain complete transaction records to comply with anti-money laundering regulations. Ensuring data completeness helps organizations meet these regulatory requirements, safeguarding them against potential legal and financial repercussions.

Examples of Incomplete Data and Their Consequences

Incomplete data can have significant repercussions across various sectors. It often leads to flawed conclusions, missed opportunities, and inefficiencies. Understanding these consequences helps highlight the importance of data completeness.

Case Studies of Incomplete Data

Case Study: Finance and Insurance Sectors

In the finance and insurance sectors, incomplete data can result in inadequate risk assessments. Companies may develop misinformed risk management strategies due to missing information. For instance, an insurance company might underestimate the risk associated with a particular policyholder if crucial data points are absent. This oversight can lead to financial losses and increased liability.

Case Study: Resource Allocation in Businesses

Businesses often rely on data to allocate resources efficiently. Incomplete data can lead to inefficiencies in this process. A company might allocate more resources to a project based on partial data, overlooking other critical areas that require attention. This misallocation can result in wasted resources and missed growth opportunities.

Case Study: Healthcare Sector

In healthcare, incomplete patient records can compromise patient safety. Missing medical history or treatment details can lead to incorrect diagnoses or inappropriate treatments. This not only affects patient outcomes but also increases the risk of legal repercussions for healthcare providers.

Consequences in Business and Research

Incomplete data can skew analysis and lead to unreliable insights. Businesses relying on such data may make poor decisions, resulting in lost revenue and an erosion of trust in their data systems. For example, a retail company using incomplete sales data might misinterpret consumer trends, leading to ineffective marketing strategies and inventory management.

In research, incomplete data can lead to inaccurate or misleading analysis. Researchers might draw erroneous conclusions, affecting the validity of their studies. This can have far-reaching implications, especially in fields like social sciences and technology, where accurate data is crucial for developing new theories and innovations.

"Incomplete data often leads to wrong or misleading conclusions," impacting decision-making and future predictions. Ensuring data completeness is essential for maintaining accuracy and reliability in both business and research contexts.

How is Data Completeness Measured?

Measuring data completeness involves evaluating whether a dataset contains all necessary information for its intended purpose. This process ensures that the data is reliable and suitable for analysis, decision-making, and reporting. Various tools and techniques help organizations assess and improve data completeness.

Tools for Measuring Data Completeness

Organizations use a variety of tools to measure data completeness effectively. These tools often integrate into existing data pipelines, providing ongoing assessments and improvements.

- Data Quality Platforms: Comprehensive platforms like Melissa Data Quality offer features for data profiling, cleansing, enrichment, and monitoring. They assess data completeness by identifying missing values and suggesting ways to fill gaps.

- Data Profiling Tools: These tools analyze datasets to detect missing values and assess record-level and table-level completeness. They provide insights into data coverage and highlight areas needing improvement.

- Data Completeness Scorecards: Scorecards offer a visual representation of data completeness metrics. They help organizations track progress over time and identify trends in data quality.

- SQL Server Integration Services (SSIS), Oracle’s Data Quality Suite, and IBM’s Information Analyzer: These tools provide completeness analysis and duplication checks. They support comprehensive data coverage by flagging missing data and offering options for data enrichment.
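The kind of missing-value profiling these tools perform can be sketched in a few lines of plain Python. This is not how any of the products above work internally. It is only an illustration, and the `customers` records, the field names, and the rule that blank strings count as missing are all assumptions made for the example.

```python
def is_missing(value):
    """Treat None and blank strings as missing (an assumption for this sketch)."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def profile_missing(records, fields):
    """Return {field: number of records in which that field is missing}."""
    missing = {field: 0 for field in fields}
    for record in records:
        for field in fields:
            # record.get() returns None when the key is absent entirely.
            if is_missing(record.get(field)):
                missing[field] += 1
    return missing


# Hypothetical customer records with a blank email, a null phone,
# and one record where the email key is absent altogether.
customers = [
    {"id": 1, "email": "a@example.com", "phone": "555-0100"},
    {"id": 2, "email": "", "phone": None},
    {"id": 3, "phone": "555-0102"},
]

report = profile_missing(customers, ["id", "email", "phone"])
for field, count in report.items():
    print(f"{field}: {count} of {len(customers)} missing")
```

A per-field count like this shows where gaps concentrate, which is usually the first question to answer before deciding how to fill them.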

Metrics and Indicators of Completeness

Metrics and indicators play a crucial role in measuring data completeness. They provide quantifiable measures to evaluate the extent of data coverage.

- Record-Level Completeness: This metric assesses whether individual records contain all necessary fields. It identifies missing attributes that could affect data reliability.

- Table-Level Completeness: This indicator evaluates the completeness of entire tables within a dataset. It ensures that all expected records and columns are present and accounted for.

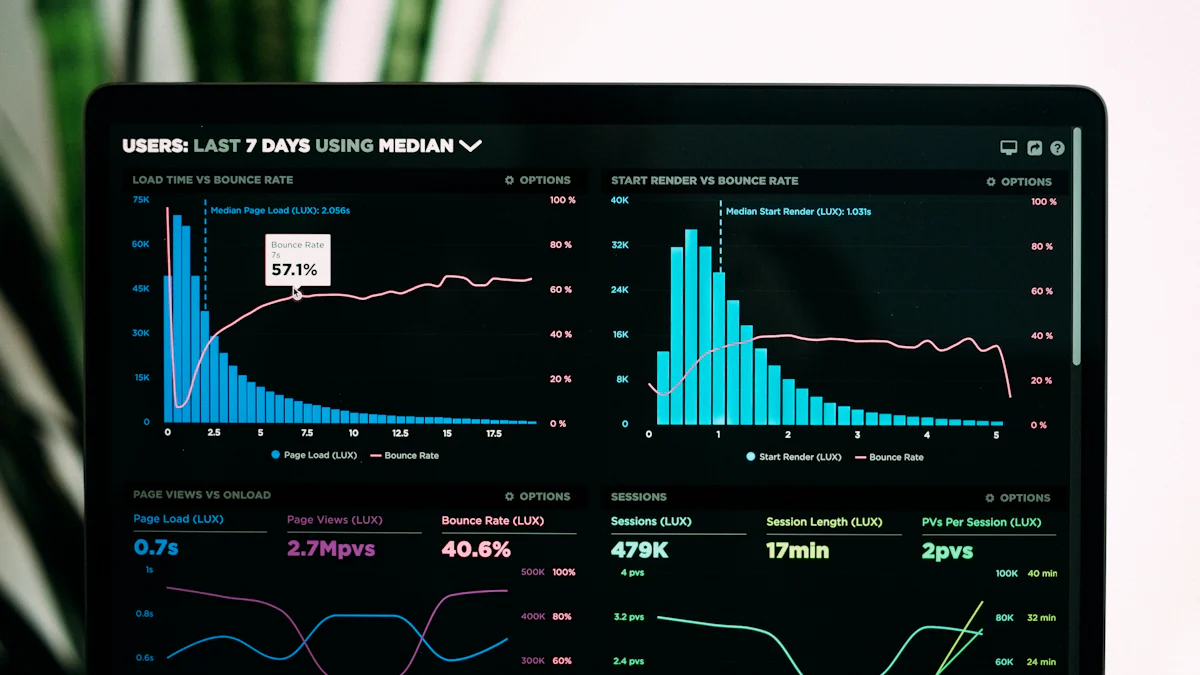

- Data Quality Dashboards: Dashboards offer a centralized view of data completeness metrics. They allow organizations to monitor data quality in real time and make informed decisions based on comprehensive data.

- Automated Monitoring and Alerts: Automated systems continuously check for data completeness, sending alerts when gaps or inconsistencies arise. This proactive approach helps maintain high data quality standards.
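The record-level and table-level metrics above, together with a simple threshold alert, could be computed along the following lines. This is a rough sketch under stated assumptions. The `REQUIRED_FIELDS` schema, the sample `rows`, and the 90% target are hypothetical, and table-level completeness is defined here as the share of records with every required field filled, which is only one of several reasonable definitions.

```python
REQUIRED_FIELDS = ("id", "name", "email")  # hypothetical schema for this sketch


def is_present(value):
    """A value counts as present unless it is None or an empty string."""
    return value is not None and value != ""


def record_completeness(record, required=REQUIRED_FIELDS):
    """Fraction of required fields that are filled in for a single record."""
    filled = sum(1 for field in required if is_present(record.get(field)))
    return filled / len(required)


def table_completeness(records, required=REQUIRED_FIELDS):
    """Fraction of records in which every required field is filled in."""
    if not records:
        return 0.0  # design choice: an empty table is scored as incomplete
    complete = sum(
        1 for record in records
        if all(is_present(record.get(field)) for field in required)
    )
    return complete / len(records)


def completeness_alert(score, threshold=0.9):
    """Return an alert message when the score is below the threshold, else None."""
    if score < threshold:
        return f"ALERT: completeness {score:.0%} is below target {threshold:.0%}"
    return None


rows = [
    {"id": 1, "name": "Ada", "email": "ada@example.com"},
    {"id": 2, "name": "Lin", "email": "lin@example.com"},
    {"id": 3, "name": "Sam", "email": ""},
    {"id": 4, "name": None},
]

table_score = table_completeness(rows)
alert = completeness_alert(table_score)
for row in rows:
    print(row["id"], f"{record_completeness(row):.0%}")
print(f"table-level completeness: {table_score:.0%}")
if alert:
    print(alert)
```

Running a check like this on a schedule and sending the alert text to a notification channel is one simple way to approximate the automated monitoring described above.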

By employing these tools and metrics, organizations can ensure data completeness, enhancing the overall quality and reliability of their data. This comprehensive approach supports accurate analysis, informed decision-making, and compliance with regulatory standards.

Strategies to Ensure Data Completeness

Ensuring data completeness requires a strategic approach that involves implementing best practices and regular monitoring. Organizations can adopt several strategies to maintain the integrity and reliability of their data.

Data Governance Practices

Data governance plays a pivotal role in ensuring data completeness. Establishing standardized processes and guidelines for data collection helps maintain consistency and reduces the risk of missing data. Organizations should develop comprehensive data governance frameworks that outline roles, responsibilities, and procedures for data management. These frameworks ensure that all necessary data elements are captured and maintained throughout the data lifecycle. By adhering to these practices, organizations can enhance the reliability of their analytical insights and support informed decision-making.

Data Validation Techniques

Implementing robust data validation techniques is essential for maintaining data completeness. Validation checks help identify and rectify errors or omissions in datasets. Organizations should design data collection processes that incorporate validation mechanisms to capture all relevant information accurately. These checks can include range checks, format checks, and consistency checks to ensure that data meets predefined criteria. By integrating validation techniques into data collection workflows, organizations can mitigate issues related to incomplete data and improve overall data quality.
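As an illustration of the range, format, and consistency checks mentioned above, here is a minimal sketch for a hypothetical order record. The field names, the 1 to 1000 quantity limit, and the deliberately simple email pattern are assumptions chosen for the example, not rules taken from any particular system.

```python
import re
from datetime import date

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
REQUIRED = ("order_id", "email", "quantity", "order_date", "ship_date")


def validate_order(order):
    """Return a list of human-readable problems found in one order record."""
    problems = []

    # Completeness check: required fields must be present and non-empty.
    for field in REQUIRED:
        if order.get(field) in (None, ""):
            problems.append(f"missing {field}")

    # Range check: quantity must fall inside the allowed interval.
    quantity = order.get("quantity")
    if isinstance(quantity, int) and not 1 <= quantity <= 1000:
        problems.append("quantity out of range")

    # Format check: email must look like an address.
    email = order.get("email")
    if email and not EMAIL_PATTERN.match(email):
        problems.append("email format invalid")

    # Consistency check: an order cannot ship before it was placed.
    ordered, shipped = order.get("order_date"), order.get("ship_date")
    if isinstance(ordered, date) and isinstance(shipped, date) and shipped < ordered:
        problems.append("ship_date before order_date")

    return problems


good = {"order_id": "A1", "email": "a@example.com", "quantity": 2,
        "order_date": date(2024, 1, 5), "ship_date": date(2024, 1, 7)}
bad = {"order_id": "A2", "email": "not-an-email", "quantity": 0,
       "order_date": date(2024, 1, 5), "ship_date": date(2024, 1, 3)}
incomplete = {"order_id": "A3", "email": "", "quantity": 1}

for order in (good, bad, incomplete):
    print(order["order_id"], validate_order(order) or "ok")
```

Returning a list of problems rather than stopping at the first failure lets a collection form or pipeline report every gap in a record at once, which tends to make corrections quicker.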

Regular Data Audits

Regular data audits are crucial for assessing and maintaining data completeness over time. These audits involve systematically reviewing datasets to identify gaps, inconsistencies, or missing elements. Organizations should establish a schedule for conducting data audits and use automated tools to streamline the process. During audits, data quality metrics and indicators can be evaluated to ensure that datasets remain comprehensive and reliable. By conducting regular audits, organizations can proactively address data quality issues and uphold high standards of data completeness.
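One audit step that is easy to automate is checking for expected records that never arrived. The sketch below assumes a feed that should deliver one record per calendar day. The `loaded` dates and the audit window are invented for the example, and real audits would typically cover more dimensions than dates alone.

```python
from datetime import date, timedelta


def missing_dates(present_dates, start, end):
    """Return the dates from start to end (inclusive) that have no record."""
    present = set(present_dates)
    expected_days = (end - start).days + 1
    expected = (start + timedelta(days=i) for i in range(expected_days))
    return [day for day in expected if day not in present]


# Hypothetical load dates for a feed that should arrive daily.
loaded = [date(2024, 3, 1), date(2024, 3, 2), date(2024, 3, 4), date(2024, 3, 7)]

gaps = missing_dates(loaded, date(2024, 3, 1), date(2024, 3, 7))
for day in gaps:
    print(f"no data loaded for {day.isoformat()}")
```

Field-level checks cannot catch this kind of gap, because a record that is absent altogether has no fields to inspect. Comparing what is present against what was expected is what exposes it.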

"Regular assessment and monitoring are necessary to maintain data quality over time." This proactive approach ensures that organizations can rely on their data for accurate analysis and decision-making.

Tools and Technologies for Improving Data Completeness

In the digital age, ensuring data completeness has become a priority for organizations. Various tools and technologies have emerged to address this need, enhancing the quality and reliability of data. These innovations streamline data collection, integration, and analysis, providing businesses with comprehensive datasets for informed decision-making.

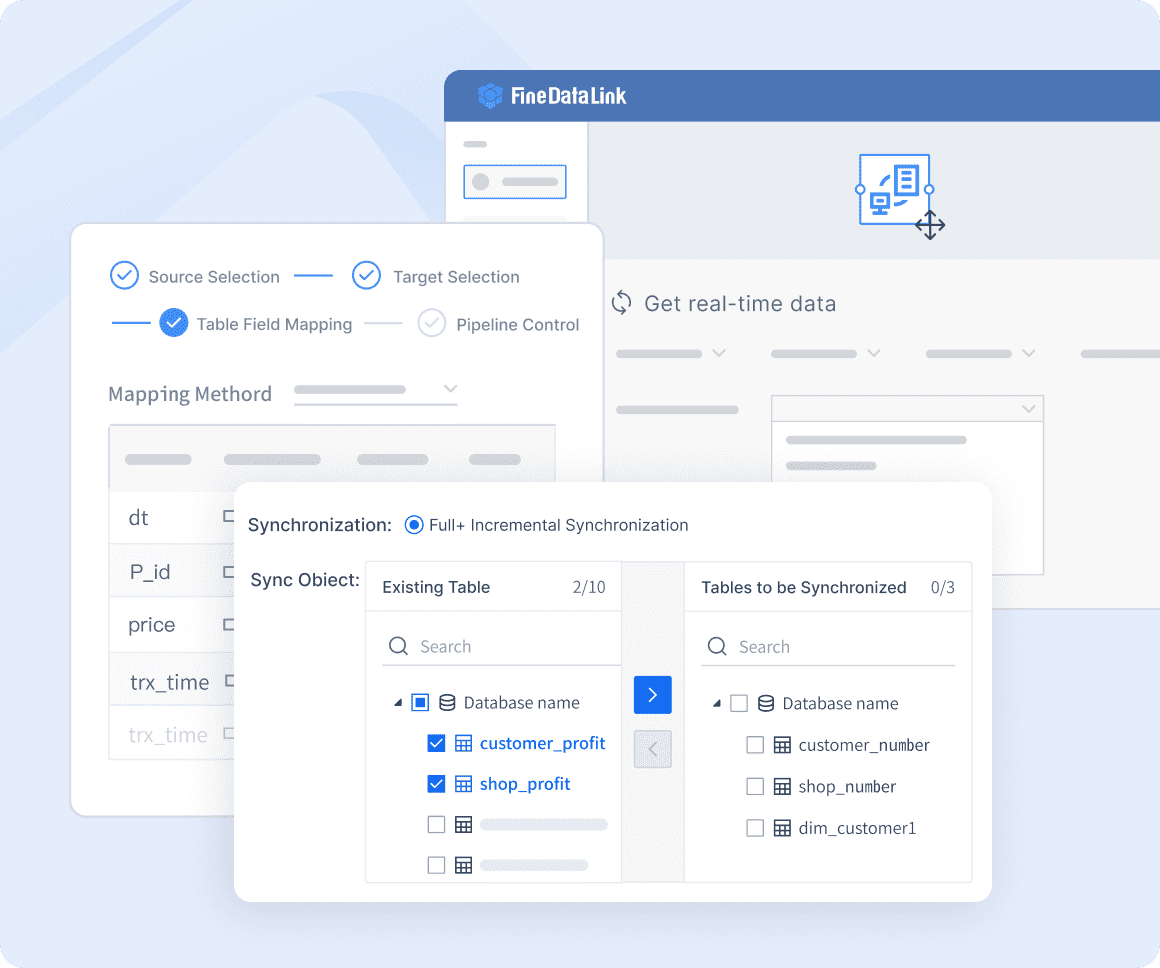

Role of FanRuan's FineDataLink in Data Completeness

FineDataLink plays a pivotal role in achieving data completeness. This platform simplifies complex data integration tasks with its low-code approach, offering real-time data synchronization and advanced ETL and ELT data development. Its web-based drag-and-drop interface is claimed to deliver efficiency ten times greater than traditional ETL methods. Having supported the delivery of over 1,000 data projects, FineDataLink helps organizations maintain complete and accurate datasets. This capability is crucial for businesses aiming to derive meaningful insights and make strategic decisions.

Automation tools significantly contribute to data completeness by streamlining data collection processes. These tools reduce manual intervention, minimizing errors and ensuring that all necessary data is captured. Automation tools facilitate real-time data updates, allowing organizations to maintain up-to-date and comprehensive datasets. For instance, platforms like FineReport, FineBI, and FineVis offer robust automation features that enhance data collection and integration. FineReport provides dynamic reporting capabilities, FineBI empowers users with self-service analytics, and FineVis offers advanced data visualization. Together, these tools support organizations in achieving data completeness, enabling them to harness the full potential of their data assets.

"Automation tools play a crucial role in achieving data completeness by streamlining and optimizing data integration workflows." This approach ensures that organizations can rely on their data for accurate analysis and decision-making.

Future of Data Completeness

The future of data completeness promises exciting advancements as technology continues to evolve. Organizations increasingly recognize the importance of maintaining complete datasets to drive informed decision-making and strategic growth. Emerging trends and innovations in data management are set to redefine how businesses approach data completeness.

Emerging Trends of Data Completeness

Several emerging trends are shaping the future of data completeness. One significant trend is the integration of artificial intelligence (AI) and machine learning (ML) into data management processes. These technologies enhance data quality by automating data collection, validation, and integration tasks. AI and ML algorithms can identify patterns and anomalies in datasets, ensuring that all necessary information is captured and maintained. According to a study, 75% of data professionals consider data quality a primary goal, highlighting the growing emphasis on complete and accurate data.

Another trend is the adoption of data mesh architecture. This decentralized approach to data management allows organizations to treat data as a product, with dedicated teams responsible for its quality and completeness. Data mesh promotes collaboration and accountability, helping data remain comprehensive and reliable across different domains. Additionally, edge computing and cloud technologies are playing a crucial role in enhancing data completeness. These technologies enable real-time data processing and integration, reducing latency and helping datasets remain up to date and complete.

Innovations in Data Management

Innovations in data management are driving improvements in data completeness. Advanced data analytics tools are leveraging natural language processing (NLP) to enhance data understanding and interpretation. NLP enables organizations to extract valuable insights from unstructured data sources, ensuring that no critical information is overlooked. This innovation is particularly beneficial in industries like healthcare, where robust data architecture and governance are prioritized.

Furthermore, forward-thinking companies are implementing robust processes for data collection, storage, and processing. These processes ensure that data remains complete and accurate throughout its lifecycle. Automation tools, such as those offered by FanRuan's FineDataLink, FineReport, FineBI, and FineVis, streamline data integration workflows, reducing manual intervention and minimizing errors. By embracing these innovations, organizations can maintain high standards of data completeness, supporting accurate analysis and decision-making.

"The future of data quality is rapidly evolving, propelled by AI and machine learning integration." This evolution underscores the importance of data completeness in achieving reliable and meaningful insights.

Data completeness ensures that all necessary information is present, providing a foundation for accurate and reliable data analysis. It plays a crucial role in various domains, from healthcare to finance, by guaranteeing that decisions are based on comprehensive datasets. Organizations must prioritize ongoing efforts to maintain data completeness, as it impacts business success and decision-making. Future developments, such as AI and machine learning, promise to enhance data management processes, ensuring even greater accuracy and reliability in data-driven insights.

FAQ

What is data completeness?

Data completeness refers to the extent to which all required data is present in a dataset. It ensures that no critical data is missing, which is essential for accurate analysis and decision-making. Incomplete data can lead to incorrect conclusions and affect the reliability of business intelligence and analytics efforts.

Why is data completeness important in business intelligence?

Data completeness is crucial in business intelligence because it ensures that all necessary data is available for analysis, leading to accurate and reliable insights. Incomplete data can result in misleading conclusions, poor decision-making, and missed opportunities. Comprehensive data gives businesses a full view of their operations, customer behaviors, and market trends, enabling them to make informed decisions, optimize processes, and drive strategic growth. Additionally, complete data supports better data quality and integrity, which are essential for building trust in the analytics and reports generated.

How does FineDataLink contribute to data completeness?

FineDataLink contributes to data completeness by addressing key challenges in data integration and management. It enables real-time data synchronization across multiple tables with minimal latency, so that data is up to date and comprehensive. The platform supports a wide range of data formats and sources, allowing for seamless integration and transformation of diverse data types. Additionally, its ETL/ELT capabilities automate data extraction, transformation, and loading processes, reducing manual intervention and errors. By providing robust connectivity options and real-time data updates, FineDataLink helps ensure that all relevant data is integrated and accessible, thereby enhancing data completeness.

What challenges do organizations face regarding data completeness?

Organizations face several challenges regarding data completeness:

- Data silos: Data is stored in separate systems or departments, making it difficult to access and integrate data from different sources.

- Complex data formats: These require manual transformation and mapping efforts.

- Manual ETL processes: Manual, time-consuming data extraction, transformation, and loading (ETL) is prone to errors and inefficiencies.

- Lack of automation: Integration workflows without automation lead to increased maintenance efforts.

- Large data volumes: Handling large volumes of data while ensuring optimal performance is difficult.

- Real-time integration and limited connectivity: Real-time data integration and limited connectivity options to different data sources and systems, especially legacy or on-premises systems, further complicate achieving data completeness.

What role does automation play in achieving data completeness?

Automation plays a crucial role in achieving data completeness by streamlining and optimizing data integration workflows. It reduces the need for manual intervention, minimizes errors, and ensures that data from various sources is consistently and accurately integrated. Automated processes can handle complex data formats, synchronize real-time data updates, and manage large volumes of data efficiently, thereby enhancing the overall quality and completeness of the data.
