The degree of data integration can be assessed against several key factors: data quality, data consistency, data availability, data timeliness, and data relevance. Among these, data quality is especially important because it determines whether the integrated data is accurate, complete, and reliable, which directly affects decision-making.

I. DATA QUALITY

Data quality is the cornerstone of data integration. It refers to the accuracy, completeness, consistency, and reliability of the data being integrated. High-quality data ensures that the integrated system functions correctly and that the insights derived from the data are trustworthy. Evaluating data quality involves checking for errors, missing values, and inconsistencies across different data sources. Implementing data quality assessment tools and processes can help maintain high standards, ensuring that the integrated data is usable and beneficial for business operations.
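The checks described above can be sketched in a few lines of Python. This is a minimal illustration, assuming records arrive as dictionaries; the field names (`email`, `age`), the validation rules, and the choice to treat an empty string as missing are all hypothetical.

```python
from typing import Any, Callable

Record = dict[str, Any]


def is_missing(value: Any) -> bool:
    """Treat None and empty strings as missing (an assumption; adjust per source)."""
    return value is None or value == ""


def completeness(records: list[Record], fields: list[str]) -> float:
    """Share of required field values that are present across all records."""
    total = len(records) * len(fields)
    if total == 0:
        return 1.0
    present = sum(1 for r in records for f in fields if not is_missing(r.get(f)))
    return present / total


def validity(records: list[Record], rules: dict[str, Callable[[Any], bool]]) -> float:
    """Share of non-missing values that pass their field's validation rule."""
    checked = passed = 0
    for r in records:
        for field, rule in rules.items():
            value = r.get(field)
            if is_missing(value):
                continue  # missing values are already counted by completeness()
            checked += 1
            passed += bool(rule(value))
    return passed / checked if checked else 1.0


# Hypothetical integrated customer records.
customers = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 29},
    {"id": 3, "email": "c@example", "age": -5},
]
rules = {
    "email": lambda v: "@" in v and "." in v.split("@")[-1],  # deliberately simplistic
    "age": lambda v: 0 <= v <= 120,
}
print(completeness(customers, ["id", "email", "age"]))  # 8 of 9 values present
print(validity(customers, rules))  # 3 of 5 checked values are valid -> 0.6
```

Keeping completeness and validity as separate ratios makes it easier to see whether a quality problem comes from gaps in the data or from wrong values.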

II. DATA CONSISTENCY

Data consistency is another vital measure of data integration. It ensures that the same data carries the same values and formats in every system and database that holds it. Consistent data has no conflicting or duplicated records and is uniform across sources. Techniques such as data cleansing, normalization, and synchronization are used to achieve consistency, and regular audits and validations are needed to maintain it over time as new data is integrated into the system.
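A consistency check usually compares the same entity as it appears in two systems. The sketch below assumes both sources share a join key (`id` here) and that field names have already been aligned; the source names `crm` and `billing` and their fields are hypothetical.

```python
from collections import Counter
from typing import Any

Record = dict[str, Any]


def duplicate_keys(records: list[Record], key: str) -> list[Any]:
    """Key values that appear more than once within a single source."""
    counts = Counter(r[key] for r in records)
    return [k for k, n in counts.items() if n > 1]


def consistency_rate(
    source_a: list[Record], source_b: list[Record], key: str, fields: list[str]
) -> tuple[float, list[tuple[Any, str]]]:
    """Share of shared field values that agree, plus the (key, field) pairs that conflict.

    Records are indexed by key; if a source contains duplicate keys, the last one wins,
    so run duplicate_keys() first to surface those separately.
    """
    a_by_key = {r[key]: r for r in source_a}
    b_by_key = {r[key]: r for r in source_b}
    compared = agreed = 0
    conflicts: list[tuple[Any, str]] = []
    for k, rec_a in a_by_key.items():
        rec_b = b_by_key.get(k)
        if rec_b is None:
            continue  # present in only one source: a coverage gap, not a value conflict
        for f in fields:
            compared += 1
            if rec_a.get(f) == rec_b.get(f):
                agreed += 1
            else:
                conflicts.append((k, f))
    return (agreed / compared if compared else 1.0), conflicts


crm = [
    {"id": 1, "city": "Berlin", "tier": "gold"},
    {"id": 2, "city": "Paris", "tier": "silver"},
    {"id": 2, "city": "Paris", "tier": "silver"},
]
billing = [
    {"id": 1, "city": "Berlin", "tier": "silver"},
    {"id": 2, "city": "Paris", "tier": "silver"},
]
print(duplicate_keys(crm, "id"))  # [2]
print(consistency_rate(crm, billing, "id", ["city", "tier"]))  # (0.75, [(1, 'tier')])
```

Reporting the conflicting (key, field) pairs alongside the rate gives auditors a concrete list to resolve rather than just a score.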

III. DATA AVAILABILITY

Data availability measures how easily and quickly data can be accessed by authorized users. It ensures that the integrated data is readily accessible when needed, without significant delays. High data availability is achieved through robust data architecture, redundancy, and failover mechanisms. Data availability is crucial for real-time analytics and operational systems that rely on timely data for effective functioning.
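Availability is commonly quantified as the fraction of time, or of access attempts, in which data could be served. The sketch below assumes you already run periodic health-check probes against the integrated data service and record each result as a boolean; the probe counts and the 30-day period are illustrative.

```python
from datetime import timedelta


def availability(probe_results: list[bool]) -> float:
    """Share of health-check probes in which the data service answered successfully."""
    if not probe_results:
        raise ValueError("no probe results to evaluate")
    return sum(probe_results) / len(probe_results)


def downtime_budget(target: float, period: timedelta = timedelta(days=30)) -> timedelta:
    """Maximum downtime allowed over `period` for a given availability target."""
    if not 0.0 <= target <= 1.0:
        raise ValueError("target must be between 0 and 1")
    return period * (1.0 - target)


# Hypothetical month of probes: 997 successes, 3 failures.
probes = [True] * 997 + [False] * 3
print(availability(probes))  # 0.997
print(downtime_budget(0.999))  # 0:43:12, i.e. 43.2 minutes per 30 days
```

Translating an availability target into a downtime budget makes it easier to judge whether redundancy and failover investments are sized appropriately.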

IV. DATA TIMELINESS

Data timeliness refers to how current and up-to-date the integrated data is. Timely data integration is essential for making informed decisions based on the latest information. This involves real-time data processing, frequent updates, and synchronization of data from various sources. Monitoring data flows and employing technologies like ETL (Extract, Transform, Load) processes can help maintain data timeliness, ensuring that the data reflects the most recent state of the business environment.
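One way to quantify timeliness is to compare when a record changed in its source with when it became available in the integrated store. This sketch assumes each change carries both timestamps (timezone-aware, in UTC); the 15-minute threshold is a hypothetical service-level target.

```python
from datetime import datetime, timedelta, timezone


def lag(source_updated_at: datetime, loaded_at: datetime) -> timedelta:
    """Delay between a change in the source system and its arrival in the integrated store."""
    return loaded_at - source_updated_at


def timeliness_rate(events: list[tuple[datetime, datetime]], max_lag: timedelta) -> float:
    """Share of changes that arrived within max_lag of the source update."""
    if not events:
        return 1.0
    on_time = sum(1 for src, loaded in events if lag(src, loaded) <= max_lag)
    return on_time / len(events)


t0 = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
# Hypothetical (source_updated_at, loaded_at) pairs captured by the pipeline.
events = [
    (t0, t0 + timedelta(minutes=5)),
    (t0, t0 + timedelta(minutes=15)),
    (t0, t0 + timedelta(minutes=14)),
    (t0, t0 + timedelta(minutes=40)),
]
print(timeliness_rate(events, max_lag=timedelta(minutes=15)))  # 0.75
print(max(lag(src, loaded) for src, loaded in events))  # 0:40:00, the worst-case lag
```

Tracking both the on-time share and the worst-case lag helps distinguish a generally slow pipeline from one with occasional stalls.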

V. DATA RELEVANCE

Data relevance measures the importance and usefulness of the integrated data for the intended purposes. Relevant data should meet the specific needs of the business, providing insights that are actionable and beneficial. This involves understanding the business requirements and ensuring that the data being integrated aligns with these needs. Regularly reviewing and updating data integration processes can help maintain data relevance, ensuring that the data supports business objectives effectively.
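Relevance is largely a business judgment, but one rough proxy, offered here as an assumption, is how well the integrated schema covers the attributes stakeholders actually require. In the sketch below, the required and integrated field names are hypothetical; fields that are integrated but never required are reported as candidates for review rather than automatic removal.

```python
from typing import NamedTuple


class RelevanceReport(NamedTuple):
    coverage: float    # share of required attributes that are integrated
    missing: set[str]  # required by the business but not integrated
    unused: set[str]   # integrated but not tied to any stated requirement


def relevance_report(required: set[str], integrated: set[str]) -> RelevanceReport:
    missing = required - integrated
    unused = integrated - required
    coverage = 1.0 if not required else 1 - len(missing) / len(required)
    return RelevanceReport(coverage, missing, unused)


required = {"customer_id", "region", "monthly_revenue", "churn_flag"}
integrated = {"customer_id", "region", "monthly_revenue", "fax_number"}
report = relevance_report(required, integrated)
print(report.coverage)  # 0.75
print(report.missing, report.unused)  # {'churn_flag'} {'fax_number'}
```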

VI. DATA INTEGRATION TOOLS AND TECHNOLOGIES

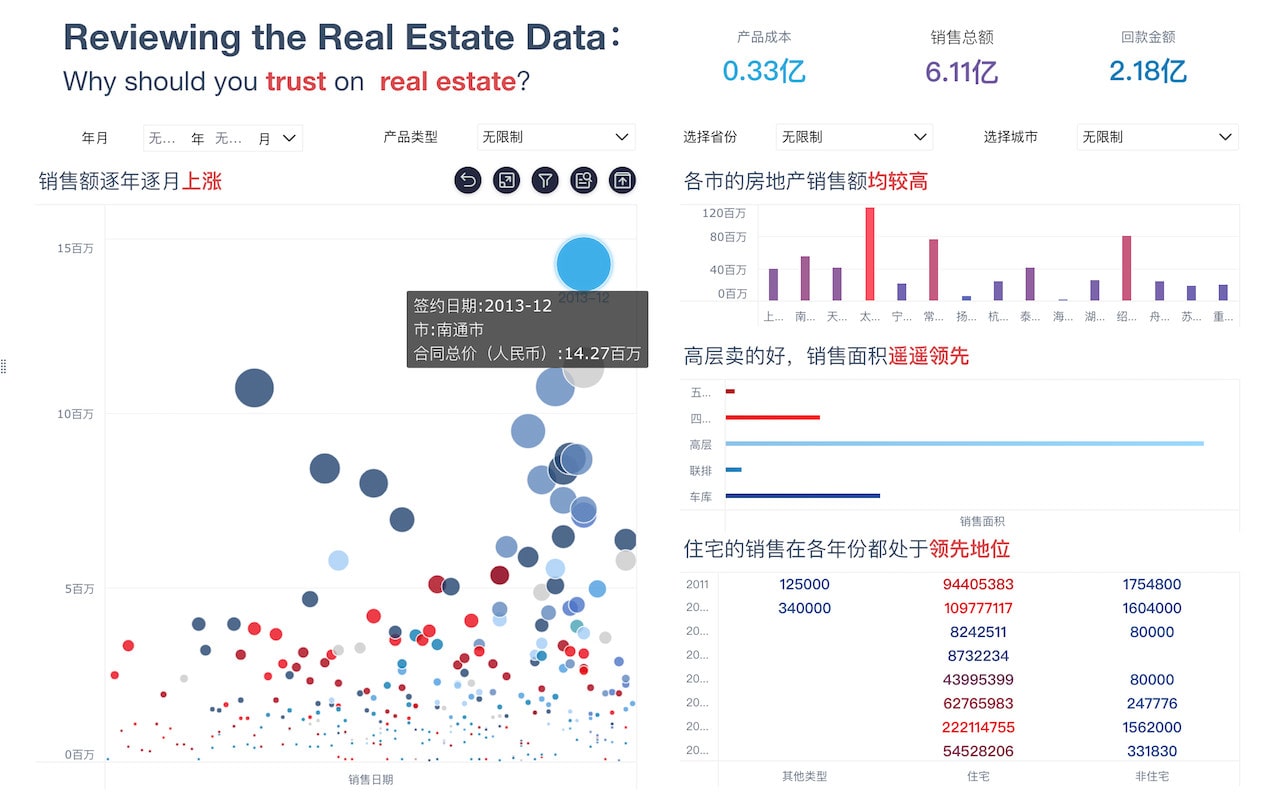

Various tools and technologies are available to facilitate and enhance data integration, including ETL tools, data integration platforms, and middleware solutions. FineDatalink, for example, is a data integration tool offered by FanRuan. It provides features for data extraction, transformation, and loading that are designed to help maintain data quality and consistency. By leveraging such tools, businesses can streamline their data integration processes and address the key factors above more systematically. For more information on FineDatalink, visit the [FineDatalink website](https://s.fanruan.com/agbhk).

VII. BEST PRACTICES FOR DATA INTEGRATION

Adopting best practices for data integration can significantly improve the effectiveness of the process. These include defining clear data integration goals, ensuring data governance, employing data quality management strategies, and continuously monitoring and improving data integration processes. Additionally, involving stakeholders from various departments can help ensure that the integrated data meets the needs of all parts of the organization.

VIII. CHALLENGES IN DATA INTEGRATION

Despite the benefits, data integration poses several challenges, such as dealing with data silos, managing large volumes of data, and ensuring data security and privacy. Addressing these challenges requires a well-planned strategy, the right tools, and ongoing efforts to improve data integration processes. Overcoming these challenges can lead to more efficient operations, better decision-making, and a competitive advantage in the market.

IX. FUTURE TRENDS IN DATA INTEGRATION

The field of data integration is continuously evolving, with new trends and technologies emerging. These include the increasing use of artificial intelligence and machine learning for data integration, the rise of cloud-based data integration solutions, and the growing importance of real-time data integration. Staying abreast of these trends can help businesses stay competitive and make the most of their data integration efforts.

In conclusion, measuring data integration involves evaluating multiple factors such as data quality, consistency, availability, timeliness, and relevance. By focusing on these areas and leveraging advanced tools and best practices, businesses can achieve effective data integration, leading to improved operations and strategic decision-making.

Related FAQs:

How to Measure the Degree of Data Integration?

1. What Are the Key Metrics to Assess Data Integration?

To evaluate the degree of data integration effectively, several key metrics are commonly used:

- Data Consistency: This metric measures the uniformity of data across different systems. It involves checking whether similar data items are represented in a consistent manner throughout the integrated systems. High consistency indicates a well-integrated dataset where data discrepancies are minimal.

- Data Accuracy: Accuracy assesses the correctness of data after integration. It involves comparing integrated data with source data to ensure that no errors or distortions have occurred during the integration process. Accurate data is critical for reliable analysis and decision-making.

- Data Completeness: This refers to the extent to which all necessary data has been integrated. A complete dataset includes all required data fields and records, without any missing or incomplete entries. Measuring completeness involves checking if the integrated data meets the necessary criteria for comprehensive analysis.

- Data Redundancy: Redundancy metrics evaluate how often the same data is duplicated across systems. Excessive redundancy can lead to inefficiencies and inconsistencies. Effective data integration aims to minimize redundancy while ensuring that all data is accurately captured and represented.

- Data Timeliness: This metric examines how current the integrated data is. Timely data integration ensures that the information reflects the most recent updates from source systems. Measuring timeliness involves assessing the frequency of data updates and synchronization across systems.

- Integration Complexity: This refers to the complexity of the integration process itself, including the number of data sources involved, the methods used for integration, and the level of effort required. Simpler integration processes generally lead to higher integration efficiency and fewer errors.
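Several of these metrics are simple ratios, and teams often roll them into a single score. The sketch below shows two pieces under stated assumptions: a redundancy rate that treats records as duplicates when a normalized key (here a trimmed, lower-cased email) matches, and a weighted composite score. The metric names, values, and weights are hypothetical, not an industry standard; redundancy is inverted before scoring so every input reads as "higher is better".

```python
from typing import Any

Record = dict[str, Any]


def redundancy_rate(records: list[Record], key_fields: list[str]) -> float:
    """Share of records that duplicate an earlier record on the normalized key fields."""
    if not records:
        return 0.0
    seen: set[tuple[str, ...]] = set()
    duplicates = 0
    for r in records:
        # Normalization (trim + lowercase) is an assumption; real matching rules vary.
        k = tuple(str(r.get(f, "")).strip().lower() for f in key_fields)
        if k in seen:
            duplicates += 1
        else:
            seen.add(k)
    return duplicates / len(records)


def integration_score(metrics: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of metric scores, each in [0, 1] with higher meaning better."""
    for name in weights:
        if not 0.0 <= metrics[name] <= 1.0:
            raise ValueError(f"{name} must be in [0, 1]")
    total = sum(weights.values())
    return sum(metrics[name] * w for name, w in weights.items()) / total


contacts = [
    {"email": "Ann@Example.com", "name": "Ann"},
    {"email": " ann@example.com", "name": "Ann"},
    {"email": "bob@example.com", "name": "Bob"},
    {"email": "cy@example.com", "name": "Cy"},
]
redundancy = redundancy_rate(contacts, ["email"])  # 0.25: one duplicate of Ann
metrics = {
    "consistency": 0.9,
    "accuracy": 0.95,
    "completeness": 0.8,
    "non_redundancy": 1 - redundancy,  # inverted so that higher is better
    "timeliness": 0.7,
}
weights = {"consistency": 1, "accuracy": 2, "completeness": 1, "non_redundancy": 1, "timeliness": 1}
print(round(integration_score(metrics, weights), 3))  # 0.842
```

A single score is convenient for trend dashboards, but the individual metrics should still be reported, since a weighted average can hide one badly failing dimension.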

2. What Techniques Are Used to Evaluate Data Integration Efficiency?

Several techniques and methodologies are employed to evaluate the efficiency of data integration:

- Data Profiling: This technique involves analyzing data from source systems to understand its structure, content, and quality before integration. Profiling helps identify potential issues and areas for improvement, enabling a smoother integration process.

- Data Mapping: Mapping involves creating a detailed schema that illustrates how data from various sources corresponds to the integrated schema. It helps ensure that data is correctly aligned and transformed during integration, reducing errors and inconsistencies.

- Data Quality Assessment: Regular assessments of data quality metrics, such as accuracy, completeness, and consistency, provide insights into the effectiveness of data integration. Quality assessment tools can automate this process, providing ongoing monitoring and reporting.

- Integration Testing: Rigorous testing is essential to verify that integrated data meets the required standards. This includes functional testing to ensure that data integration processes work as intended, and performance testing to assess the speed and efficiency of data integration.

- Feedback Mechanisms: Collecting feedback from end-users about the quality and usability of integrated data can provide valuable insights. User feedback helps identify areas where integration may need improvement and ensures that the integrated data meets the needs of its intended audience.

- Performance Metrics: Monitoring performance metrics related to data integration, such as processing time and resource usage, helps evaluate the efficiency of the integration process. High performance typically indicates an effective and streamlined integration approach.
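Data profiling, data mapping, and a basic integration test can be sketched together in Python. This is a minimal illustration under assumptions: the source column names (`CUST_ID`, `SIGNUP`, `REGION`), the target schema, and the mapping are hypothetical, and real pipelines often keep mappings in configuration rather than code. `reconcile` shows only the simplest kind of reconciliation test, comparing row counts and key sets between source and target.

```python
from collections import Counter
from datetime import date
from typing import Any, Callable

Record = dict[str, Any]
# Target field -> (source field, transform). The mapping itself is hypothetical.
FieldMapping = dict[str, tuple[str, Callable[[Any], Any]]]


def profile(records: list[Record]) -> dict[str, dict[str, Any]]:
    """Per-column null count, distinct non-null count, and observed value types."""
    columns = sorted({c for r in records for c in r})
    report: dict[str, dict[str, Any]] = {}
    for col in columns:
        values = [r.get(col) for r in records]
        non_null = [v for v in values if v is not None]
        report[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len({repr(v) for v in non_null}),  # repr() also handles unhashable values
            "types": dict(Counter(type(v).__name__ for v in non_null)),
        }
    return report


def apply_mapping(record: Record, mapping: FieldMapping) -> Record:
    """Rename and convert one source record into the target schema; None stays None."""
    out: Record = {}
    for target, (source, transform) in mapping.items():
        value = record.get(source)
        out[target] = None if value is None else transform(value)
    return out


def reconcile(source_keys: list[Any], target_keys: list[Any]) -> dict[str, Any]:
    """Basic integration test: do row counts and key sets match after loading?"""
    src, tgt = set(source_keys), set(target_keys)
    return {
        "count_match": len(source_keys) == len(target_keys),
        "missing_in_target": src - tgt,
        "unexpected_in_target": tgt - src,
    }


source = [
    {"CUST_ID": "001", "SIGNUP": "2024-03-05", "REGION": "emea"},
    {"CUST_ID": "002", "SIGNUP": None, "REGION": "apac"},
]
mapping: FieldMapping = {
    "customer_id": ("CUST_ID", int),
    "signup_date": ("SIGNUP", date.fromisoformat),
    "region": ("REGION", str.upper),
}
print(profile(source)["SIGNUP"])  # {'nulls': 1, 'distinct': 1, 'types': {'str': 1}}
target = [apply_mapping(r, mapping) for r in source]
print(target[0])  # {'customer_id': 1, 'signup_date': datetime.date(2024, 3, 5), 'region': 'EMEA'}
# count_match is True and both key-difference sets are empty for this sample.
print(reconcile([int(r["CUST_ID"]) for r in source], [r["customer_id"] for r in target]))
```

Profiling the sources first is what reveals details such as the null `SIGNUP` value, which the mapping then has to tolerate.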

3. How Can Organizations Improve Their Data Integration Processes?

Organizations can enhance their data integration processes through several strategies:

- Invest in Advanced Tools: Utilizing advanced data integration tools and platforms can significantly improve integration efficiency. Tools that offer automation, real-time processing, and robust data management features can streamline the integration process and reduce manual effort.

- Standardize Data Formats: Adopting standardized data formats and protocols helps ensure consistency and compatibility across different systems. Standardization simplifies the integration process and reduces the likelihood of errors caused by format discrepancies.

- Implement Data Governance Policies: Establishing clear data governance policies ensures that data is managed consistently and effectively throughout the integration process. Policies should cover data quality standards, security measures, and procedures for handling data discrepancies.

- Enhance Data Management Practices: Improving data management practices, such as data cleansing and normalization, can enhance the quality of data before and after integration. Effective data management ensures that integrated data is accurate, complete, and usable.

- Provide Training and Support: Ensuring that staff are well-trained in data integration processes and tools is crucial for success. Providing ongoing support and resources helps team members effectively manage and execute integration tasks, leading to better outcomes.

- Monitor and Refine Processes: Continuously monitoring data integration processes and gathering feedback allows organizations to identify areas for improvement. Regularly refining integration practices based on performance metrics and user feedback helps maintain high levels of efficiency and quality.
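Standardizing formats and cleansing values often comes down to small, well-tested normalization functions applied before loading. The sketch below assumes dates arrive in a handful of known formats and converts them to ISO 8601 (`YYYY-MM-DD`); the format list is hypothetical. Formats that differ only in day/month order (such as `%d/%m/%Y` and `%m/%d/%Y`) cannot be told apart from the string alone, so only one of them should be listed per source.

```python
from datetime import datetime

# Hypothetical formats observed across source systems; order matters when formats overlap.
KNOWN_DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y", "%Y%m%d"]


def to_iso_date(raw: str) -> str:
    """Parse a date in any known format and return it as ISO 8601 (YYYY-MM-DD)."""
    text = raw.strip()
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"unrecognized date format: {raw!r}")


def normalize_text(raw: str) -> str:
    """Trim leading/trailing whitespace and collapse internal runs of whitespace."""
    return " ".join(raw.split())


for raw in ["2024-03-05", "05/03/2024", "03-05-2024", "20240305"]:
    print(to_iso_date(raw))  # each prints 2024-03-05
print(normalize_text("  Acme   GmbH "))  # Acme GmbH
```

Raising an error on unrecognized input, rather than guessing, keeps bad values visible so they can be routed to a data quality review instead of silently entering the integrated store.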

Implementing these strategies can lead to more effective data integration, resulting in better data quality, improved decision-making, and greater overall efficiency.

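The content of this article was compiled by AI tools through keyword matching and is for reference only. FanRuan makes no commitment of any kind regarding its authenticity, accuracy, or completeness. For specific product features, please refer to FanRuan's official help documentation or consult your sales contact. If you have other questions, you can send feedback to blog@fanruan.com, and FanRuan will respond and address it promptly after receiving it.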