To excel in data mining, focus on understanding the data, selecting the right algorithms, ensuring data quality, and learning continuously. Of these, understanding the data comes first: it means knowing the source, nature, and structure of the data you are working with. This foundational step helps you identify relevant patterns and makes each subsequent step more effective and accurate.

I. UNDERSTANDING THE DATA

Understanding the data is the cornerstone of effective data mining. It involves comprehensively analyzing the source, structure, and nature of the data. This step includes identifying the variables, their relationships, and the context in which the data was collected. Data can come from various sources such as databases, spreadsheets, or even real-time streaming data. Each source requires a different approach to extraction and preprocessing.

For example, transactional data from a retail store needs to be analyzed differently than data from a social media platform. Transactional data often involves structured data with clear fields such as product ID, quantity, and price. In contrast, social media data might be unstructured, containing text, images, and videos. A thorough understanding of the data helps in choosing the right tools and techniques for data mining.
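A first pass at understanding structured data can be as simple as counting missing values and checking which types appear in each field. The sketch below assumes records arrive as Python dictionaries; the function name `profile_records` and the sample rows are hypothetical and only illustrate the idea.

```python
from collections import Counter

def profile_records(records):
    """Summarize each field: how many values are missing and which types appear."""
    fields = sorted({key for record in records for key in record})
    summary = {}
    for field in fields:
        values = [record.get(field) for record in records]
        present = [v for v in values if v is not None]
        summary[field] = {
            "missing": len(values) - len(present),
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return summary

# Hypothetical transactional rows with the fields mentioned above.
transactions = [
    {"product_id": "A1", "quantity": 2, "price": 9.99},
    {"product_id": "B7", "quantity": None, "price": 4.50},
    {"product_id": "A1", "quantity": 1, "price": "9.99"},  # price stored as text
]

profile = profile_records(transactions)
for field, stats in profile.items():
    print(field, stats)
```

A profile like this surfaces the missing quantity and the price that was stored as text before any mining technique is chosen.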

II. SELECTING THE RIGHT ALGORITHMS

Choosing the appropriate algorithms is critical for successful data mining. Different algorithms are suited for different types of data and objectives. For instance, decision trees are excellent for classification tasks, while k-means clustering works well for grouping similar data points.
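To make the idea of grouping similar data points concrete, here is a minimal k-means sketch on 2-D points. It assumes the starting centroids are supplied by the caller (real implementations usually pick them randomly or with a seeding heuristic), and the sample points are invented for illustration.

```python
def kmeans(points, centroids, iterations=10):
    """Plain k-means on 2-D points, starting from the given centroids."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for x, y in points:
            distances = [(x - cx) ** 2 + (y - cy) ** 2 for cx, cy in centroids]
            clusters[distances.index(min(distances))].append((x, y))
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append((
                    sum(p[0] for p in cluster) / len(cluster),
                    sum(p[1] for p in cluster) / len(cluster),
                ))
            else:
                new_centroids.append(old)  # keep the centroid of an empty cluster
        if new_centroids == centroids:
            break  # assignments can no longer change
        centroids = new_centroids
    return centroids, clusters

# Hypothetical data: two visually separate groups.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, clusters = kmeans(points, centroids=[(1, 1), (8, 8)])
print(centroids)
print(clusters)
```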

To select the right algorithm, consider the nature of the problem, the size of the dataset, and the computational resources available. For large datasets, algorithms whose cost scales well with the number of records are preferred; for datasets with many features, algorithms that handle high-dimensional data efficiently matter more. Understanding the strengths and limitations of each algorithm is also essential. For example, while neural networks are powerful for complex pattern recognition, they require significant computational power and a large amount of data for training.

III. ENSURING DATA QUALITY

High-quality data is paramount for accurate and reliable results in data mining. Ensuring data quality involves data cleaning, transformation, and normalization. Data cleaning entails removing noise, handling missing values, and correcting inconsistencies. Transformation may include converting data types, aggregating data, or creating new variables.

Normalization, on the other hand, involves scaling data to a common range, which is crucial for algorithms that are sensitive to the scale of data, such as k-nearest neighbors. Additionally, data quality assurance involves continuous monitoring and validation to detect and correct any issues that may arise during the data mining process. High-quality data not only improves the accuracy of the results but also enhances the interpretability and usability of the insights gained.
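One common way to scale data to a common range is min-max normalization, which maps each value to [0, 1]. The sketch below is a minimal version under that assumption; the `incomes` and `ages` columns are made-up examples of features on very different scales.

```python
def min_max_scale(values):
    """Rescale numbers linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # constant column: no spread to scale
    return [(v - low) / (high - low) for v in values]

# Hypothetical features on very different scales.
incomes = [20_000, 35_000, 50_000, 80_000]
ages = [25, 40, 55, 70]

scaled_incomes = min_max_scale(incomes)
scaled_ages = min_max_scale(ages)
print(scaled_incomes)
print(scaled_ages)
```

After scaling, a distance-based method such as k-nearest neighbors no longer lets income dominate age simply because its raw numbers are larger.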

IV. CONTINUOUS LEARNING

The field of data mining is constantly evolving with new techniques, tools, and best practices emerging regularly. Continuous learning is essential to stay updated with the latest advancements and to apply them effectively. This involves keeping abreast of new research, attending workshops and conferences, and participating in online forums and communities.

Moreover, hands-on practice with real-world datasets is invaluable. It helps in gaining practical experience and understanding the nuances of different data mining techniques. Continuous learning also involves experimenting with different approaches, validating results, and refining models. It is a dynamic and iterative process that contributes to the development of robust and efficient data mining solutions.

V. DATA PREPROCESSING

Data preprocessing is a crucial step that prepares raw data for analysis. It involves several sub-steps, including data cleaning, integration, transformation, reduction, and discretization. Data cleaning addresses issues such as missing values, noise, and inconsistencies. Techniques like imputation, smoothing, and outlier detection are employed to clean the data. Data integration combines data from multiple sources, providing a unified view. This is particularly important in scenarios where data is fragmented across different systems.
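As a small illustration of imputation, the sketch below fills missing values with the median of the observed ones. Median imputation is only one of several options, chosen here because it is less affected by outliers than the mean; the `readings` list is hypothetical.

```python
from statistics import median

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

# Hypothetical sensor readings with two gaps and one extreme value.
readings = [4.0, None, 5.0, 100.0, None, 6.0]
cleaned = impute_median(readings)
print(cleaned)
```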

Data transformation involves converting data into a suitable format for analysis. This can include normalization, scaling, and encoding categorical variables. Data reduction techniques like principal component analysis (PCA) and feature selection are used to reduce the dimensionality of the data, making it more manageable and improving computational efficiency. Data discretization transforms continuous data into discrete intervals, which can be useful for certain types of analysis, such as decision tree algorithms.
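Discretization can be sketched with equal-width binning, where the value range is split into intervals of the same size. This is one simple scheme among several (equal-frequency binning is another), and the `ages` values are invented for the example.

```python
def equal_width_bins(values, n_bins):
    """Assign each value to one of n_bins equal-width intervals (0-based index)."""
    low, high = min(values), max(values)
    width = (high - low) / n_bins
    if width == 0:
        return [0 for _ in values]
    # The maximum value would land in bin n_bins, so clamp it into the last bin.
    return [min(int((v - low) / width), n_bins - 1) for v in values]

# Hypothetical continuous feature split into three intervals.
ages = [18, 22, 35, 41, 58, 64, 78]
age_bins = equal_width_bins(ages, 3)
print(age_bins)
```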

VI. DATA VISUALIZATION

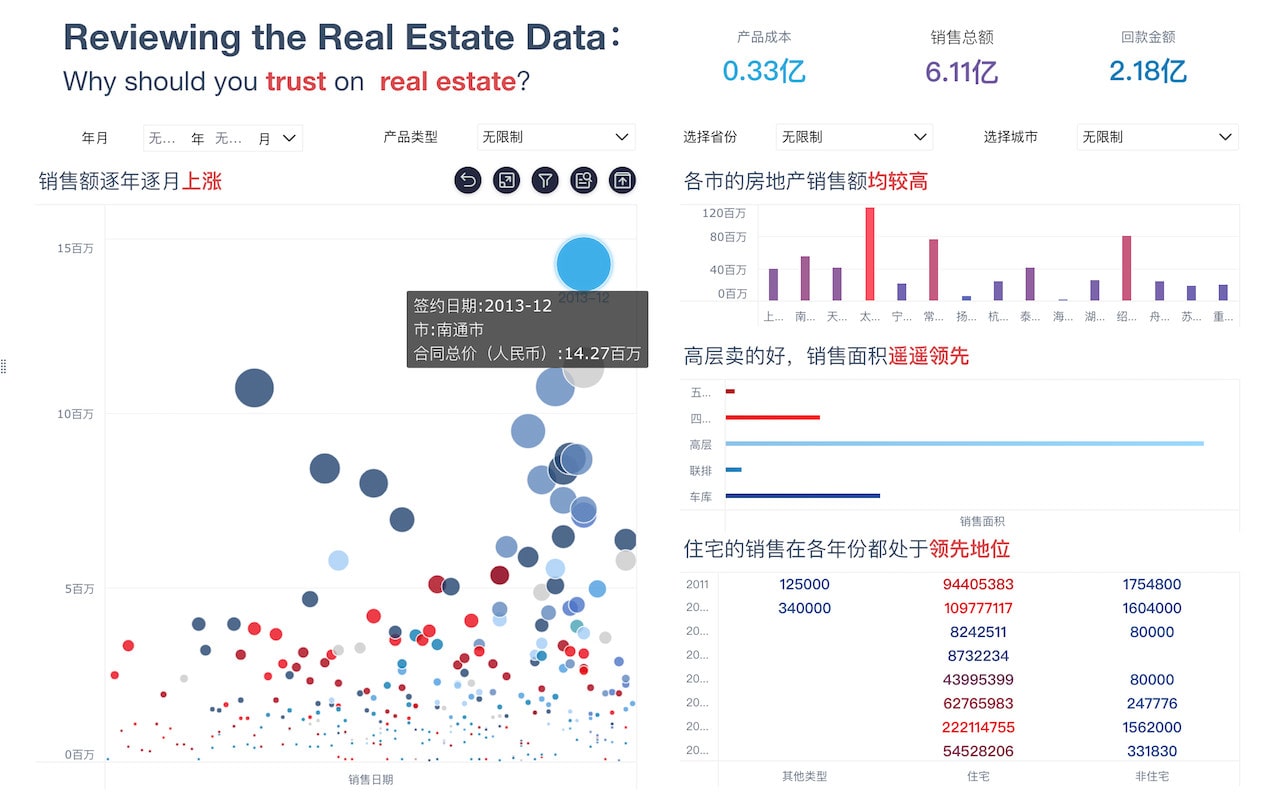

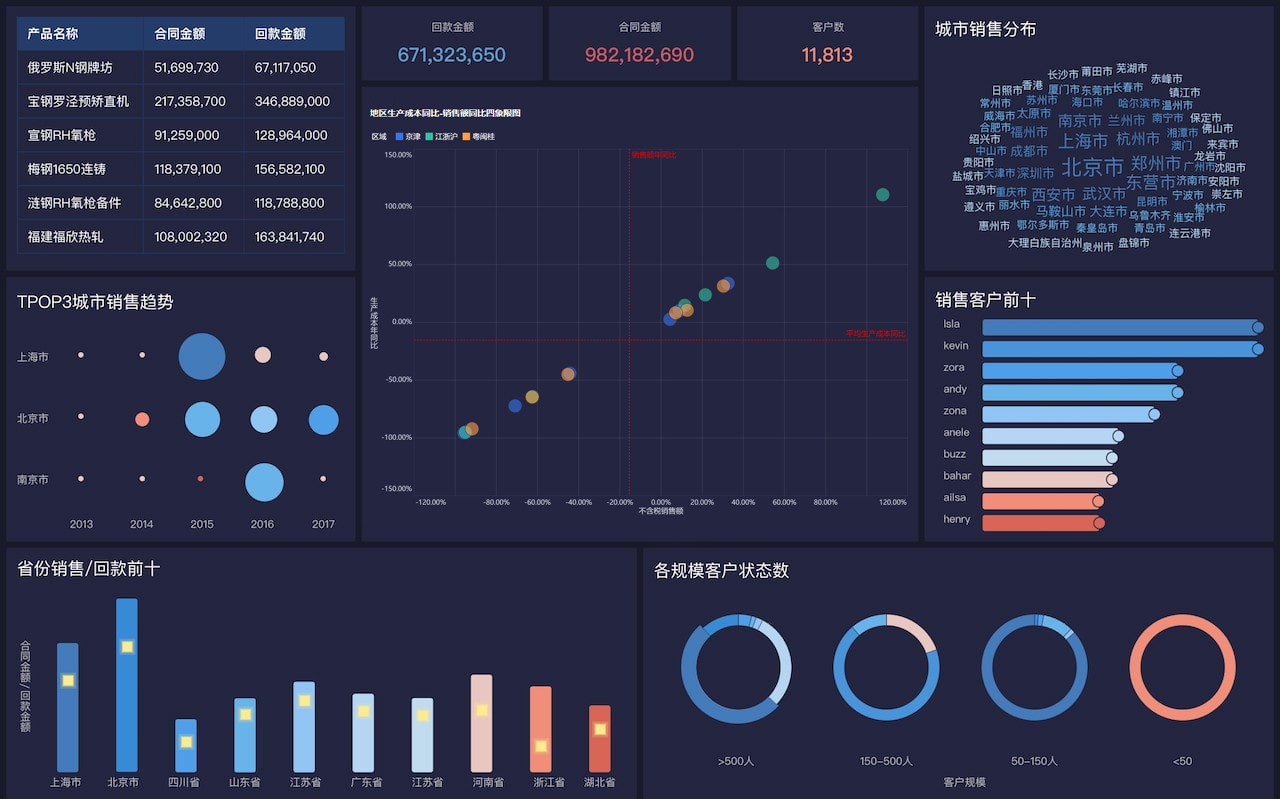

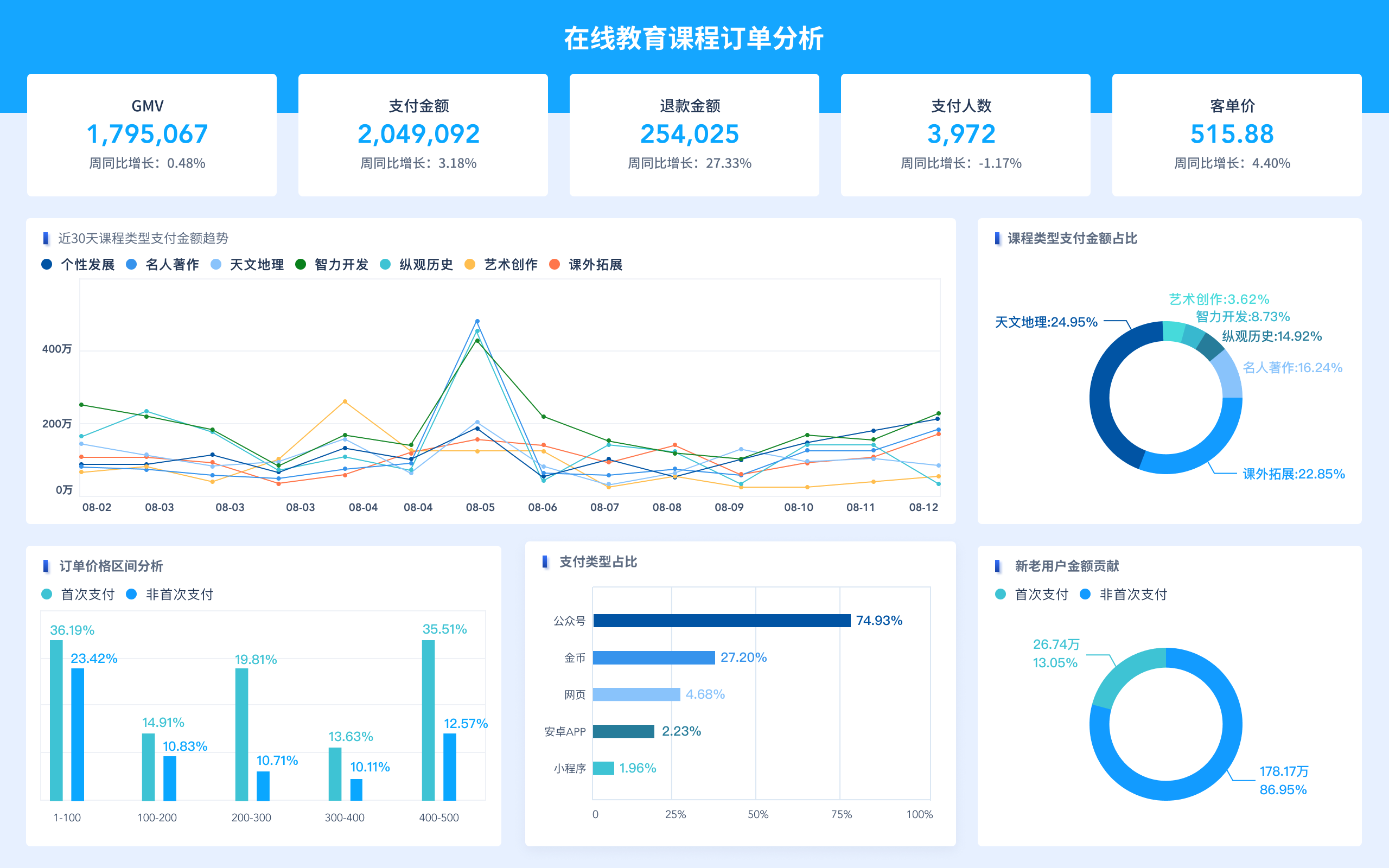

Data visualization is a powerful tool that helps in understanding and interpreting the data. It involves creating graphical representations of the data, such as charts, graphs, and plots. Visualization aids in identifying patterns, trends, and outliers that may not be apparent from raw data. Tools like Tableau, Power BI, and D3.js are widely used for creating interactive and intuitive visualizations.

Effective data visualization requires choosing the right type of chart or graph that best represents the data and the insights to be conveyed. For example, a line chart is suitable for showing trends over time, while a scatter plot is useful for displaying relationships between two variables. Additionally, good visualization practices involve ensuring clarity, simplicity, and accuracy, avoiding clutter, and providing meaningful labels and annotations.

VII. MODEL BUILDING AND EVALUATION

Building and evaluating models is a critical phase in data mining. This involves selecting appropriate modeling techniques, training the models, and evaluating their performance. Common modeling techniques include regression, classification, clustering, and association rule mining. Regression models predict continuous outcomes, while classification models predict categorical outcomes. Clustering groups similar data points, and association rule mining identifies interesting relationships between variables.
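Association rule mining is usually described in terms of support (how often an itemset appears) and confidence (how often the consequent appears when the antecedent does). The sketch below computes both for a single rule; it does not search for rules, and the `baskets` data is hypothetical.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= set(t)) / len(transactions)

def confidence(transactions, antecedent, consequent):
    """Estimated probability of the consequent given the antecedent."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)

# Hypothetical shopping baskets.
baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

rule_support = support(baskets, {"bread", "milk"})
rule_confidence = confidence(baskets, {"bread"}, {"milk"})
print(rule_support, rule_confidence)
```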

Model evaluation is crucial to ensure the model's accuracy and reliability. Cross-validation is used to assess how well the model generalizes to unseen data, while the confusion matrix and the metrics derived from it, such as precision, recall, and the F1 score, together with the ROC curve, describe how well a classifier separates the classes. Precision and recall are especially informative when false positives and false negatives carry different costs.
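Precision, recall, and the F1 score can all be computed from the counts of true positives, false positives, and false negatives. The sketch below does this for a binary classifier; the label lists are invented, and treating `1` as the positive class is an assumption.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}

# Hypothetical ground truth and predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```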

VIII. FEATURE ENGINEERING

Feature engineering involves creating new features or modifying existing ones to improve the performance of machine learning models. This step is critical as the quality and relevance of features directly impact the model's effectiveness. Techniques for feature engineering include polynomial features, interaction terms, and domain-specific transformations.

Polynomial features involve creating new features by raising existing features to a power. Interaction terms capture interactions between different features, providing additional insights. Domain-specific transformations involve applying knowledge from the specific domain to create meaningful features. For example, in a retail scenario, combining product price and quantity sold to create a "revenue" feature can be highly informative.
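The three techniques can be shown side by side on a single record. In the sketch below, `revenue` follows the retail example above, while the `discount` field and the other derived feature names are hypothetical additions for illustration.

```python
def add_features(row):
    """Return a copy of the row with three derived features added."""
    enriched = dict(row)
    # Domain-specific transformation: revenue from price and quantity sold.
    enriched["revenue"] = row["price"] * row["quantity"]
    # Polynomial feature: an existing feature raised to a power (degree 2).
    enriched["price_squared"] = row["price"] ** 2
    # Interaction term: the product of two different features.
    enriched["price_x_discount"] = row["price"] * row["discount"]
    return enriched

# Hypothetical sale record; "discount" is an assumed extra field.
sale = {"price": 4.0, "quantity": 3, "discount": 0.25}
features = add_features(sale)
print(features)
```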

IX. HANDLING IMBALANCED DATA

Imbalanced data, where one class is significantly underrepresented, poses a challenge for many machine learning algorithms. Techniques to handle imbalanced data include resampling, cost-sensitive learning, and anomaly detection. Resampling involves either oversampling the minority class or undersampling the majority class to achieve a balanced dataset. Cost-sensitive learning assigns different misclassification costs to different classes, making the model more sensitive to the minority class. Anomaly detection treats the minority class as an anomaly and uses specialized algorithms to detect it.
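Random oversampling is the simplest form of resampling: minority-class rows are duplicated at random until every class is as large as the biggest one. The sketch below assumes rows are dictionaries with a label field; the `label` key, the seed, and the sample data are hypothetical.

```python
import random

def oversample_minority(rows, label_key, seed=0):
    """Duplicate randomly chosen rows of smaller classes until all classes match the largest."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    by_class = {}
    for row in rows:
        by_class.setdefault(row[label_key], []).append(row)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced

# Hypothetical dataset: six legitimate rows and two fraudulent ones.
rows = ([{"amount": 10 + i, "label": "legit"} for i in range(6)]
        + [{"amount": 900 + i, "label": "fraud"} for i in range(2)])

balanced = oversample_minority(rows, "label")
print(len(balanced))
```

Oversampling should be applied to the training split only; duplicating rows before splitting would leak copies of the same record into the evaluation data.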

X. DEPLOYMENT AND MONITORING

Deploying the data mining model into a production environment is a critical step that involves integrating the model with existing systems and workflows. This phase includes setting up APIs, creating user interfaces, and ensuring the model's scalability and reliability. Continuous monitoring is essential to ensure the model's performance over time. This involves tracking key metrics, identifying any degradation in performance, and retraining the model as needed.

Moreover, monitoring helps in detecting any changes in the data distribution, known as data drift, which can impact the model's accuracy. Implementing automated alerts and regular audits can help in maintaining the model's effectiveness and ensuring that it continues to provide valuable insights.
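A very crude drift check compares the mean of recent values with the mean seen at training time, measured in training-time standard deviations. The sketch below assumes a single numeric feature and an arbitrary threshold of 2.0; production monitoring typically relies on proper statistical tests or distribution-distance measures instead.

```python
from statistics import mean, stdev

def mean_shift(baseline, current):
    """Shift of the current mean from the baseline mean, in baseline standard deviations."""
    return abs(mean(current) - mean(baseline)) / stdev(baseline)

def has_drifted(baseline, current, threshold=2.0):
    """Flag drift when the shift exceeds the threshold (an assumed, arbitrary cutoff)."""
    return mean_shift(baseline, current) > threshold

# Hypothetical feature values: training-time baseline and two recent windows.
baseline = [10, 12, 11, 13, 9, 11, 10, 12]
stable_window = [11, 10, 12, 11]
shifted_window = [18, 19, 17, 20]

print(has_drifted(baseline, stable_window))
print(has_drifted(baseline, shifted_window))
```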

XI. ETHICAL CONSIDERATIONS

Ethical considerations play a crucial role in data mining. This involves ensuring data privacy, avoiding bias, and maintaining transparency. Data privacy requires adhering to regulations like GDPR and CCPA, ensuring that personal data is handled responsibly. Avoiding bias involves ensuring that the data and algorithms do not perpetuate or exacerbate existing biases. This requires careful examination of the data sources, feature selection, and model evaluation.

Maintaining transparency involves providing clear explanations of the models and their decisions. This is particularly important in sensitive applications like finance and healthcare, where decisions can have significant consequences. Ensuring ethical considerations not only builds trust with stakeholders but also enhances the credibility and reliability of the data mining process.

XII. COLLABORATION AND COMMUNICATION

Effective collaboration and communication are vital for successful data mining projects. This involves working closely with domain experts, stakeholders, and team members to ensure a comprehensive understanding of the problem and the data. Clear and effective communication helps in setting expectations, sharing insights, and making informed decisions.

Using collaboration tools like Jupyter notebooks, Git, and project management software can enhance teamwork and streamline workflows. Regular meetings, updates, and presentations help in keeping everyone aligned and informed. Moreover, documenting the data mining process, including assumptions, methodologies, and results, ensures transparency and facilitates future reference.

XIII. CASE STUDIES AND APPLICATIONS

Examining case studies and real-world applications provides valuable insights into the practical aspects of data mining. For example, in the healthcare industry, data mining is used to predict disease outbreaks, personalize treatments, and optimize resource allocation. In the retail sector, it helps in inventory management, customer segmentation, and personalized marketing.

Studying successful case studies helps in understanding the challenges faced, the methodologies applied, and the outcomes achieved. It provides a roadmap for implementing similar solutions and highlights best practices and lessons learned. Moreover, analyzing diverse applications across different industries showcases the versatility and potential of data mining.

XIV. FUTURE TRENDS

The field of data mining is continuously evolving, with new trends and advancements shaping its future. Automated machine learning (AutoML) is gaining traction, enabling non-experts to build and deploy models with minimal effort. Explainable AI (XAI) is becoming increasingly important, providing insights into how models make decisions and enhancing transparency.

Edge computing is another emerging trend, enabling data processing closer to the source, reducing latency, and improving efficiency. Federated learning allows for training models across decentralized data sources while preserving privacy. Staying updated with these trends and incorporating them into data mining practices ensures that one remains at the forefront of the field and continues to deliver cutting-edge solutions.

By focusing on these key areas, one can excel in data mining, uncover valuable insights, and drive informed decision-making across various domains.

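Related FAQs:

Data mining is a complex, multi-layered process that involves extracting valuable information and knowledge from large volumes of data. The following are several important aspects of doing data mining well.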

1. What is Data Mining and Why is it Important?

Data mining is the process of discovering patterns and extracting valuable insights from large sets of data using various analytical techniques and algorithms. It's important because it helps organizations make informed decisions, understand customer behaviors, identify trends, and enhance operational efficiency. By effectively utilizing data mining, businesses can gain a competitive edge and drive innovation.

2. What Are the Key Steps in the Data Mining Process?

The data mining process typically involves several critical steps:

- Data Collection: Gather data from various sources, which could include databases, online sources, or third-party providers. The quality and quantity of data collected play a significant role in the success of the mining process.
- Data Preprocessing: Clean and prepare the data for analysis. This step includes handling missing values, removing duplicates, and normalizing data. It's essential to ensure that the dataset is accurate and reliable.
- Data Transformation: Transform data into a suitable format for analysis. This could involve aggregation, generalization, or constructing new attributes to enhance the dataset.
- Data Mining: Apply algorithms and statistical methods to discover patterns, correlations, and trends within the data. Techniques may include clustering, classification, regression, and association rule mining.
- Pattern Evaluation: Assess the patterns and insights generated during the mining process. This step involves validating the findings against business objectives to ensure relevance and applicability.
- Knowledge Representation: Present the discovered knowledge in an understandable format, such as reports, visualizations, or dashboards, making it easier for stakeholders to interpret and act upon.

3. What Tools and Techniques are Commonly Used in Data Mining?

Several tools and techniques are widely used in data mining, each serving different purposes:

- Statistical Methods: Techniques such as regression analysis, hypothesis testing, and time series analysis are fundamental in understanding relationships between variables.
- Machine Learning Algorithms: Algorithms like decision trees, neural networks, support vector machines, and k-means clustering are employed to make predictions and classify data.
- Data Visualization Tools: Tools such as Tableau, Power BI, and Python libraries like Matplotlib and Seaborn help in visualizing data patterns and trends, making it easier to communicate insights.
- Database Management Systems: SQL databases, NoSQL databases, and big data technologies like Hadoop and Spark are essential for storing and processing large volumes of data efficiently.
- Programming Languages: Languages like Python and R are popular for data mining due to their extensive libraries and frameworks designed for data analysis and machine learning.

4. How to Ensure Data Quality and Integrity During Mining?

Ensuring data quality and integrity is crucial in the data mining process. Consider these strategies:

- Implement Validation Rules: Set up validation checks to ensure data accuracy. This might include range checks, format checks, and consistency checks.
- Regular Audits: Conduct periodic audits of the data to identify and rectify issues such as duplicates, inconsistencies, or inaccuracies.
- Data Governance Policies: Establish clear data governance policies that outline data ownership, data access, and data usage guidelines. This ensures that all stakeholders adhere to best practices in data management.
- Training and Awareness: Educate team members about the importance of data quality and the potential consequences of poor data. Foster a culture of data stewardship within the organization.
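The validation rules mentioned in the list above (range, format, and consistency checks) can be sketched as one small function. The order fields, the date format, and the rounding tolerance below are all assumptions made for the example rather than a prescribed schema.

```python
import re

DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")  # assumed format: YYYY-MM-DD

def validate_order(order):
    """Return a list of human-readable problems found in one order record."""
    problems = []
    quantity = order.get("quantity")
    # Range check: quantity must be a positive integer.
    if not isinstance(quantity, int) or quantity <= 0:
        problems.append("quantity must be a positive integer")
    # Format check: the order date must match the assumed pattern.
    if not DATE_PATTERN.fullmatch(str(order.get("order_date", ""))):
        problems.append("order_date must look like YYYY-MM-DD")
    # Consistency check: total should equal quantity * unit_price.
    expected = (order.get("unit_price", 0) * quantity
                if isinstance(quantity, int) else None)
    if expected is None or abs(order.get("total", 0) - expected) > 0.005:
        problems.append("total does not match quantity * unit_price")
    return problems

# Hypothetical records: one clean, one failing all three checks.
good = {"quantity": 2, "unit_price": 4.5, "order_date": "2024-03-01", "total": 9.0}
bad = {"quantity": -1, "unit_price": 4.5, "order_date": "03/01/2024", "total": 9.0}

print(validate_order(good))
print(validate_order(bad))
```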

5. What Are Some Common Challenges in Data Mining?

Data mining is not without its challenges, which may include:

- Data Overload: Organizations often struggle with the sheer volume of data available. Filtering out irrelevant data while focusing on meaningful insights can be daunting.
- Complexity of Data: Data can come in various formats and structures, making it difficult to analyze. Unstructured data, such as text and images, requires specialized techniques for processing.
- Privacy Concerns: With increasing regulations on data privacy, organizations must navigate legal and ethical considerations when collecting and analyzing data.
- Interpreting Results: Translating complex data mining outcomes into actionable business strategies can be challenging. It requires a blend of analytical skills and business acumen.

6. How Can Businesses Benefit from Data Mining?

Businesses can derive numerous benefits from effective data mining practices:

- Enhanced Decision-Making: Data-driven insights enable organizations to make better decisions, reducing the reliance on intuition and guesswork.
- Customer Insights: Understanding customer preferences and behaviors helps tailor marketing strategies, improve customer satisfaction, and foster loyalty.
- Operational Efficiency: By identifying inefficiencies and areas for improvement, data mining can lead to cost savings and optimized resource allocation.
- Risk Management: Data mining can help identify potential risks and fraud, allowing organizations to take proactive measures to mitigate them.

7. What Industries Can Benefit from Data Mining?

Data mining can be applied across various industries, including:

- Retail: Analyzing customer purchase behavior to optimize inventory management and marketing strategies.
- Healthcare: Using data mining to identify trends in patient care, predict disease outbreaks, and enhance treatment outcomes.
- Finance: Detecting fraudulent transactions and assessing credit risk through predictive modeling.
- Telecommunications: Understanding customer churn and developing strategies to improve retention rates.

8. What Are Best Practices for Effective Data Mining?

To maximize the effectiveness of data mining efforts, organizations should consider the following best practices:

- Define Clear Objectives: Establish specific goals for what you want to achieve with data mining. This focus will guide the entire process and ensure relevant insights.
- Engage Stakeholders: Involve key stakeholders throughout the data mining process to ensure that the findings align with business needs and objectives.
- Iterative Approach: Data mining should be an iterative process, where insights lead to further exploration and refinement. Regularly revisit and update models to adapt to changing data dynamics.
- Invest in Training: Equip your team with the necessary skills and knowledge in data mining techniques, tools, and best practices to enhance their capabilities.

9. What Future Trends Are Emerging in Data Mining?

The field of data mining is constantly evolving, with several emerging trends:

- Artificial Intelligence: The integration of AI with data mining techniques is enhancing the accuracy and efficiency of data analysis.
- Automated Data Mining: Automation tools are simplifying the data mining process, allowing non-experts to perform complex analyses without deep technical knowledge.
- Real-time Data Processing: The demand for real-time insights is growing, leading to advancements in streaming data processing technologies.
- Ethical Data Mining: As concerns about data privacy grow, ethical considerations in data mining practices are becoming increasingly important.

10. How to Get Started with Data Mining?

For those looking to embark on data mining initiatives, here are steps to consider:

- Start Small: Begin with a manageable dataset and a specific problem to solve. This approach allows for quick wins and builds confidence in data mining capabilities.
- Leverage Online Resources: Utilize online courses, tutorials, and communities to learn about data mining concepts, tools, and techniques.
- Experiment and Iterate: Don’t be afraid to experiment with different algorithms and methodologies. Use feedback from initial attempts to refine and improve your approach.
- Build a Cross-Functional Team: Assemble a team with diverse skills, including data scientists, domain experts, and business analysts, to enhance the data mining process.

In conclusion, effective data mining involves a combination of technical expertise, strategic thinking, and a keen understanding of business objectives. By leveraging the right tools, techniques, and practices, organizations can unlock valuable insights that drive growth and innovation.
