How to Extract Data from 'Sister'

Extracting data from its sources is the starting point for analysis, reporting, and decision-making. The phrase "挖掘姐姐的数据" translates roughly to "extract (mine) data from sister," where 'sister' most likely names a specific data source or entity. The process follows the familiar ETL pattern: collect (extract) the data, clean it, transform it, and load it into storage. The sections below walk through each of these steps, followed by the security, integration, analysis, and maintenance work that surrounds them.

1. DATA COLLECTION

Data collection is the first phase of extraction. It starts with identifying the data source, which in this case is 'sister'. The data might live in a database, behind an API, on web pages, or in files, and the collection method should match the source: if 'sister' is a relational database, you would typically query it with SQL; if it is an API, you would send HTTP requests and parse the responses. Choosing the right method up front ensures you capture all the information your analysis needs. APIs have become a common collection channel because many of them expose near-real-time data and publish documentation describing the data structure and how to access it.
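
As a minimal sketch of the database route, the Python below assumes 'sister' is a SQLite database containing a hypothetical table `sister_records` with columns `id`, `name`, `amount`, and `created`; the table, its columns, and the `collect_rows` helper are illustrative, not part of any real system. It pulls only the rows created on or after a cutoff date, using a parameterized query. If 'sister' were an API instead, the same function would wrap an HTTP request (for example with `urllib.request`) and parse the JSON response.

```python
import sqlite3


def collect_rows(conn: sqlite3.Connection, since: str) -> list[dict]:
    """Fetch rows created on or after `since` (an ISO date string) as dicts.

    `sister_records` is a hypothetical table used for illustration.
    """
    cur = conn.execute(
        "SELECT id, name, amount, created FROM sister_records "
        "WHERE created >= ? ORDER BY id",
        (since,),  # parameterized: the value is never spliced into the SQL text
    )
    # cursor.description holds one tuple per column; element 0 is the name
    columns = [desc[0] for desc in cur.description]
    return [dict(zip(columns, row)) for row in cur.fetchall()]


if __name__ == "__main__":
    # Build a small in-memory stand-in for the 'sister' database
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE sister_records "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL, created TEXT)"
    )
    conn.executemany(
        "INSERT INTO sister_records VALUES (?, ?, ?, ?)",
        [
            (1, "alice", 10.0, "2024-01-15"),
            (2, "bob", None, "2024-02-03"),
            (3, "carol", 30.0, "2024-02-20"),
        ],
    )
    print(collect_rows(conn, "2024-02-01"))
```

Storing dates as ISO strings keeps the `>=` comparison correct, because ISO dates sort lexically in chronological order.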

2. DATA CLEANING

Once the data is collected, the next step is cleaning: removing or correcting inaccurate, inconsistent, duplicated, or incomplete records. Cleaning matters because errors in the raw data propagate into every downstream result. For example, missing values can be imputed with a statistic such as the column median, or the affected records can be dropped when there are only a few of them. Typographical errors should be corrected and formats standardized (dates, units, capitalization). Python's pandas library offers many functions for this work, such as `fillna`, `dropna`, and `drop_duplicates`, along with the summary statistics commonly used to flag outliers.
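
A minimal cleaning pass in standard-library Python, assuming records shaped like the hypothetical `sister_records` rows (dicts with `id`, `name`, and `amount`). It drops duplicate ids, standardizes name formatting, and imputes missing amounts with the median. Median imputation is one common choice, not the only correct one, and the `0.0` fallback when no amount is known is an assumption for illustration.

```python
from statistics import median


def clean(records: list[dict]) -> list[dict]:
    """Return cleaned copies of `records`; the input list is not modified."""
    seen_ids = set()
    unique = []
    for rec in records:
        if rec["id"] in seen_ids:
            continue  # duplicate id: keep the first occurrence only
        seen_ids.add(rec["id"])
        unique.append(dict(rec))  # shallow copy so callers' dicts stay untouched

    # Median of the amounts that are present (less sensitive to extreme values)
    observed = [r["amount"] for r in unique if r.get("amount") is not None]
    fill_value = median(observed) if observed else 0.0  # hypothetical fallback

    for rec in unique:
        # Standardize text: trim surrounding whitespace, use title case
        rec["name"] = (rec.get("name") or "").strip().title()
        if rec.get("amount") is None:
            rec["amount"] = fill_value  # impute the missing amount
    return unique
```

Deduplication runs before imputation so that repeated records do not skew the median.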

3. DATA TRANSFORMATION

Data transformation converts the cleaned data into the shape your analysis needs. Typical operations include normalizing values, aggregating them (for example, rolling daily figures up to monthly totals), encoding categorical fields as numbers, and enriching records with information from other sources. The goal is compatibility with the analytical tools you plan to use. Normalization, for instance, puts values measured on different scales onto a common one, such as the range 0 to 1, so they can be compared directly. ETL (Extract, Transform, Load) tools such as Talend and Informatica can automate these transformations, which saves time and reduces manual errors.
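
Two of the transformations above, sketched in standard-library Python: rolling daily values up to monthly totals, then min-max normalizing the result to the range [0, 1]. The input format (pairs of an ISO date string and a number) and the function names are assumptions for illustration.

```python
from collections import defaultdict


def monthly_totals(daily: list[tuple[str, float]]) -> dict[str, float]:
    """Roll (YYYY-MM-DD, value) pairs up to totals keyed by YYYY-MM."""
    totals = defaultdict(float)
    for day, value in daily:
        totals[day[:7]] += value  # an ISO date's first 7 characters are YYYY-MM
    return dict(sorted(totals.items()))  # ISO months sort lexically = chronologically


def min_max_normalize(values: list[float]) -> list[float]:
    """Linearly rescale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # avoid dividing by zero
    return [(v - lo) / (hi - lo) for v in values]


if __name__ == "__main__":
    daily = [("2024-01-01", 10.0), ("2024-01-15", 5.0),
             ("2024-02-03", 20.0), ("2024-03-10", 35.0)]
    months = monthly_totals(daily)
    print(months)  # {'2024-01': 15.0, '2024-02': 20.0, '2024-03': 35.0}
    print(min_max_normalize(list(months.values())))  # [0.0, 0.25, 1.0]
```

Min-max scaling preserves the relative spacing of values but is sensitive to outliers, which is one reason cleaning comes first.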

4. DATA LOADING

The final step of the pipeline is loading: writing the transformed data into a data warehouse, database, or other storage system where it is available for analysis and reporting. At large volumes this step can be resource-intensive, so it is usually done in bulk or in batches rather than row by row, and inside transactions so that a failed load does not leave partial data behind. Cloud data warehouses such as Amazon Redshift and Google BigQuery are built for large-scale loads and offer features for optimizing storage and query performance. How often you load also determines how current your reports are: frequent or streaming loads are what make near-real-time analytics possible.
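
A minimal sketch of a batched, transactional load, using SQLite from Python's standard library as a stand-in for a warehouse. The `monthly_totals` table and the `load` and `batches` helpers are hypothetical. In Amazon Redshift the usual bulk path is the `COPY` command, and in BigQuery a load job; neither is shown here.

```python
import sqlite3
from typing import Iterator, Sequence


def batches(rows: Sequence[tuple], size: int) -> Iterator[Sequence[tuple]]:
    """Yield consecutive slices of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def load(conn: sqlite3.Connection, rows: Sequence[tuple], batch_size: int = 500) -> int:
    """Upsert (month, total) rows into the hypothetical `monthly_totals` table.

    `with conn` commits when the block succeeds and rolls back if any
    statement raises, so a failed load leaves none of its inserts behind.
    """
    loaded = 0
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS monthly_totals "
            "(month TEXT PRIMARY KEY, total REAL)"
        )
        for chunk in batches(rows, batch_size):
            # INSERT OR REPLACE makes re-running the same load idempotent
            conn.executemany(
                "INSERT OR REPLACE INTO monthly_totals VALUES (?, ?)", chunk
            )
            loaded += len(chunk)
    return loaded
```

Making the load idempotent (keyed on `month`) means a retried run overwrites rows instead of duplicating them.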

5. DATA SECURITY AND PRIVACY

Security and privacy must be considered throughout extraction. Protecting data from unauthorized access and breaches requires measures such as encryption (in transit and at rest), access controls, and regular audits. Privacy is equally important when the data contains personal or sensitive information: regulations such as the GDPR (General Data Protection Regulation) and the CCPA (California Consumer Privacy Act) impose legal obligations where they apply, and violations can lead to penalties. Beyond avoiding legal risk, these measures build trust with stakeholders and customers. A range of tools and frameworks help organizations apply these practices across the data's whole lifecycle.
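
One concrete privacy measure is pseudonymization: replacing direct identifiers such as email addresses with tokens before the data is loaded. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library). The field names and the `protect_record` helper are hypothetical, and in practice the key would come from a secrets manager rather than source code. Under the GDPR, pseudonymized data generally still counts as personal data, so this reduces risk without removing compliance obligations.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Map an identifier to a keyed SHA-256 digest (HMAC), as 64 hex chars.

    The same input and key always give the same token, so pseudonymized
    columns can still be joined. Unlike a plain hash, someone without the
    key cannot rebuild the mapping by hashing guessed values.
    """
    normalized = value.strip().lower()  # so " A@x.com" and "a@x.com" match
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_record(record: dict, key: bytes, pii_fields=("email", "phone")) -> dict:
    """Return a copy of `record` with the listed PII fields pseudonymized."""
    protected = dict(record)
    for field in pii_fields:
        if protected.get(field):  # skip fields that are missing or empty
            protected[field] = pseudonymize(str(protected[field]), key)
    return protected
```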

6. DATA INTEGRATION

Once loaded, the data often needs to be combined with other datasets to give a unified view. Integration can reveal patterns and correlations that are not visible in any single dataset; linking orders to customer records, for example, shows purchasing behavior by region. Common approaches include data warehousing, data lakes, and data virtualization, and tools such as Apache NiFi and Microsoft Azure Data Factory can automate the movement and combination of data so that it is merged consistently and accurately.
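
The orders-and-customers example can be sketched as a left join in plain Python. The field names (`customer_id`, `region`) and the `integrate` helper are hypothetical; a warehouse would express the same idea as a SQL `LEFT JOIN`.

```python
def integrate(orders: list[dict], customers: list[dict], key: str = "customer_id") -> list[dict]:
    """Left-join each order to its customer record on `key`."""
    # Index the lookup side once so the join is O(len(orders) + len(customers));
    # if a key appears twice in `customers`, the last record wins.
    by_key = {c[key]: c for c in customers}
    unified = []
    for order in orders:
        customer = by_key.get(order[key], {})
        merged = {**customer, **order}  # on a field-name clash, the order's value wins
        merged["matched"] = order[key] in by_key  # flag orders with no customer record
        unified.append(merged)
    return unified
```

Keeping unmatched orders (with `matched` set to `False`) rather than silently dropping them makes integration gaps visible to later quality checks.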

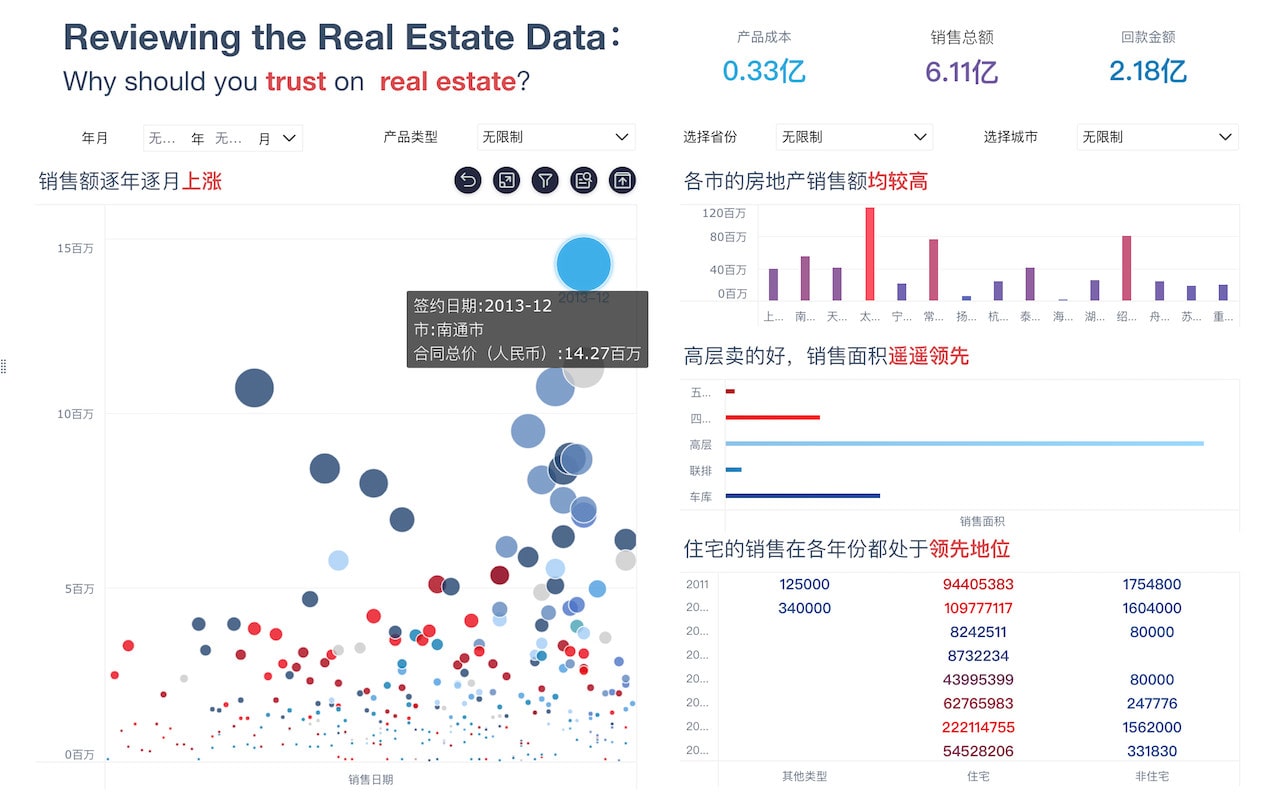

7. DATA ANALYSIS AND VISUALIZATION

After integration comes analysis and visualization: applying analytical techniques to extract insights and presenting them so that others can act on them. Tools such as Tableau, Power BI, and D3.js create interactive visual representations that make complex patterns and trends easier to see and to communicate to stakeholders. Statistical modeling, machine learning, and predictive analytics can go further, for example by projecting a metric's trend forward. Effective analysis and visualization are what turn extracted data into informed business decisions and strategic initiatives.
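
As a small example of predictive analysis, the sketch below fits an ordinary least-squares trend line to a series of equally spaced values (such as monthly totals) and projects the next period. It is a deliberately simple stand-in for the machine-learning methods mentioned above, and the function names are hypothetical.

```python
def linear_trend(ys: list[float]) -> tuple[float, float]:
    """Least-squares fit of y = slope * x + intercept, with x = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two points to fit a trend")
    xs = range(n)
    mean_x = (n - 1) / 2  # mean of 0..n-1
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast_next(ys: list[float]) -> float:
    """Project the fitted line one step past the last observation."""
    slope, intercept = linear_trend(ys)
    return slope * len(ys) + intercept


if __name__ == "__main__":
    print(linear_trend([10.0, 12.0, 14.0, 16.0]))   # (2.0, 10.0)
    print(forecast_next([10.0, 12.0, 14.0, 16.0]))  # 18.0
```

A straight-line projection ignores seasonality and assumes the recent trend continues, so treat it as a baseline to compare richer models against.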

8. CONTINUOUS MONITORING AND MAINTENANCE

Data extraction is not a one-time task; it needs ongoing monitoring and maintenance. Automated pipelines pick up new data as it becomes available, keeping the analysis current, and monitoring catches problems such as missed loads, unexpected drops in row counts, or spikes in missing values so they can be corrected promptly. Workflow orchestrators such as Apache Airflow schedule and track the pipeline's tasks, while Kubernetes can run and scale the containerized jobs that do the work. Maintenance also means adapting the pipeline when data sources, formats, or requirements change, so that the process stays robust over time.

9. BEST PRACTICES AND FUTURE TRENDS

Successful extraction relies on a few best practices: standardized, documented procedures, reliable tools, and quality checks at every step. Keeping up with new techniques can also provide a competitive edge. Artificial intelligence and machine learning are making extraction more efficient, particularly for unstructured sources: AI-driven tools can identify and pull relevant fields out of documents and emails whose layouts vary too much for fixed rules to handle well. Following these developments can help organizations strengthen their extraction capabilities and improve business outcomes.

10. CASE STUDIES AND REAL-WORLD EXAMPLES

Case studies and real-world examples show how these strategies work in practice. E-commerce companies extract data on customer behavior to optimize their marketing strategies, and healthcare organizations extract data from electronic health records to improve patient care and streamline operations. Examples like these illustrate the role extraction plays across industries and offer practical lessons that help organizations refine their own processes and achieve better results.

In conclusion, extracting data from 'sister' is a systematic process of collection, cleaning, transformation, loading, and continuous monitoring. Following best practices and keeping up with new techniques can substantially improve both its efficiency and its results. By understanding each step and choosing the right tools and techniques, organizations can turn their data into insights that support informed decision-making.

Related FAQs:

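"挖掘姐姐" can be translated into English as "Data Mining Sister." In this phrase, "挖掘" corresponds to "data mining," and "姐姐" translates as "sister." The phrase may carry different meanings in particular contexts, usually related to data analysis or technology. If you have a specific context or use in mind, provide more information to get a more accurate translation or explanation.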

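This article was compiled automatically by AI tools through keyword matching and is for reference only. FanRuan makes no guarantee of any kind regarding the authenticity, accuracy, or completeness of its content. For specific product features, refer to FanRuan's official help documentation or consult your sales representative. For other questions, you can send feedback to blog@fanruan.com; FanRuan will reply and follow up promptly after receiving it.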