To mine large amounts of data effectively, key approaches include data preprocessing, dimensionality reduction, clustering, and advanced machine learning techniques. Data preprocessing cleans and organizes raw data so that it is suitable for analysis. Dimensionality reduction simplifies data by reducing the number of variables under consideration. Clustering divides a dataset into meaningful groups (clusters) that are easier to understand and analyze. Advanced machine learning techniques such as neural networks, decision trees, and support vector machines can discover complex patterns within vast datasets. Preprocessing deserves particular attention because it determines the quality and consistency of the data that every later stage depends on: removing duplicates, handling missing values, and normalizing values can significantly influence the outcome of your mining efforts.

I. DATA PREPROCESSING

Data preprocessing is a crucial initial step in the data mining process. This phase involves several key tasks to ensure the data is clean, reliable, and formatted properly for analysis. Handling missing values is one of the primary tasks. This can be done by either removing incomplete records or imputing missing values using methods like mean, median, or more sophisticated techniques like k-nearest neighbors. Data normalization ensures that different features of the data are on a similar scale, which is particularly important for algorithms that compute distances between data points, such as k-means clustering or k-nearest neighbors. Data transformation involves converting data into a suitable format or structure for mining, such as converting categorical variables into numerical ones through encoding techniques. Outlier detection and removal is another critical step, as outliers can skew results and lead to incorrect conclusions. Techniques like Z-score, IQR, and DBSCAN can be used to identify and handle outliers. Data integration involves combining data from different sources into a coherent dataset, which may require resolving inconsistencies and duplications. By meticulously carrying out these preprocessing steps, the quality of the data is enhanced, leading to more accurate and meaningful insights from subsequent analysis.
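The imputation, outlier, and normalization steps described above can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not a production pipeline: the `ages` column is hypothetical sample data, the helper names are invented for this example, and the 1.5 × IQR fence is a common convention rather than a rule taken from this article.

```python
import statistics

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def iqr_outliers(values, k=1.5):
    """Return the values that fall outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

def z_score_normalize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

# Hypothetical column with one missing value and one implausible entry.
ages = [23, 25, None, 31, 29, 27, 240, 26]

filled = impute_median(ages)                         # step 1: handle missing values
outliers = iqr_outliers(filled)                      # step 2: flag outliers
cleaned = [v for v in filled if v not in outliers]   # step 3: drop them
scaled = z_score_normalize(cleaned)                  # step 4: normalize
```

The order matters in this sketch: the outlier is removed before normalization so that it does not inflate the standard deviation used for scaling.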

II. DIMENSIONALITY REDUCTION

Dimensionality reduction is a technique used to reduce the number of variables under consideration in a dataset, thereby simplifying the model without losing significant information. Principal Component Analysis (PCA) is a widely used method that transforms the original variables into a new set of uncorrelated variables, called principal components, which capture the maximum variance in the data. Linear Discriminant Analysis (LDA) is another technique, particularly useful for classification problems, which finds the linear combinations of features that best separate different classes. t-Distributed Stochastic Neighbor Embedding (t-SNE) is a nonlinear dimensionality reduction technique particularly effective for visualizing high-dimensional data by reducing it to two or three dimensions. Feature selection methods like forward selection, backward elimination, and recursive feature elimination (RFE) help in selecting the most relevant features for the model, thereby improving model performance and interpretability. Autoencoders, a type of neural network, can also be used for dimensionality reduction by learning a compressed representation of the data. These techniques not only improve computational efficiency but also help in visualizing and understanding complex data structures.
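To make the idea behind PCA concrete, the sketch below finds the first principal component of a small two-dimensional dataset by power iteration on the covariance matrix. The data points and function names are assumptions made for illustration. In practice a numerical library would compute all components with an eigendecomposition or SVD; power iteration is used here only because it needs no dependencies.

```python
import math

def center(rows):
    """Subtract the per-column mean from every row."""
    n, dims = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dims)]
    return [[r[j] - means[j] for j in range(dims)] for r in rows]

def covariance_matrix(centered):
    """Sample covariance matrix of already-centered rows."""
    n, dims = len(centered), len(centered[0])
    return [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(dims)]
        for i in range(dims)
    ]

def first_principal_component(cov, iterations=200):
    """Approximate the dominant eigenvector of cov by power iteration.

    Assumes cov is not the zero matrix (the data has some variance).
    """
    dims = len(cov)
    vec = [1.0 / math.sqrt(dims)] * dims
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(dims)) for i in range(dims)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    return vec

# Hypothetical points lying close to the line y = 2x.
points = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.8), (5, 10.1)]

centered = center(points)
cov = covariance_matrix(centered)
pc1 = first_principal_component(cov)

# Project each 2-D point onto the first component: 2 variables become 1.
projected = [sum(a * b for a, b in zip(row, pc1)) for row in centered]

total_variance = sum(cov[i][i] for i in range(len(cov)))
pc1_variance = sum(p * p for p in projected) / (len(projected) - 1)
explained = pc1_variance / total_variance  # share of variance kept by PC1
```

Because the sample points are almost collinear, a single component retains nearly all of the variance, which is the situation in which dropping the remaining dimensions loses little information.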

III. CLUSTERING

Clustering is an unsupervised learning technique used to group similar data points together based on certain characteristics. K-means clustering is one of the most popular methods: the data is partitioned into k clusters, where k is chosen in advance and each cluster is represented by the mean of its points. The algorithm iteratively assigns each data point to the nearest cluster center and then recalculates the cluster centers until the assignments stop changing. Hierarchical clustering builds a tree-like structure of clusters by either merging smaller clusters into larger ones (agglomerative) or splitting larger clusters into smaller ones (divisive). DBSCAN (Density-Based Spatial Clustering of Applications with Noise) groups together data points that are closely packed and marks points that lie alone in low-density regions as outliers. Gaussian Mixture Models (GMM) assume that the data is generated from a mixture of several Gaussian distributions and assign each data point a probability of belonging to each cluster. Spectral clustering uses the leading eigenvectors of a matrix derived from pairwise similarities (typically a graph Laplacian) to embed the data in a lower-dimensional space before applying a standard clustering algorithm such as k-means. Each clustering method has its strengths and suits different types of data and problems, so the method should be chosen based on the specific characteristics of the dataset.
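The assign-then-recompute loop of k-means can be sketched in a few lines. This is an illustrative version under simple assumptions: initial centers are drawn at random from the data, distance is Euclidean, and the sample points are hypothetical. Library implementations add refinements such as smarter initialization and multiple restarts.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Plain k-means: returns (centers, clusters) for a list of point tuples."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # initial centers picked from the data
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster.
        new_centers = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else centers[i]  # keep the old center if a cluster is empty
            for i, cluster in enumerate(clusters)
        ]
        if new_centers == centers:  # converged: centers no longer move
            break
        centers = new_centers
    return centers, clusters

# Hypothetical data: two well-separated groups of three points each.
points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 9.5), (8.5, 9)]
centers, clusters = kmeans(points, k=2)
```

On data this cleanly separated the result does not depend on the random start, but on real data k-means can settle in a poor local optimum, which is why several restarts are commonly used.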

IV. ADVANCED MACHINE LEARNING TECHNIQUES

Advanced machine learning techniques can uncover complex patterns and relationships within large datasets. Neural networks, particularly deep learning models like Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), are capable of learning intricate patterns in image and sequential data, respectively. Support Vector Machines (SVM) are effective for both classification and regression tasks, especially in high-dimensional spaces. Decision Trees and their ensemble methods like Random Forests and Gradient Boosting Machines (GBM) are powerful tools for capturing non-linear relationships and interactions between features. Natural Language Processing (NLP) techniques are crucial for extracting meaningful insights from textual data, involving tasks like tokenization, part-of-speech tagging, named entity recognition, and sentiment analysis. Reinforcement learning involves training models to make a sequence of decisions by rewarding desirable actions and penalizing undesirable ones, proving useful in dynamic environments. Transfer learning allows models to leverage pre-trained knowledge from one task to improve performance on another related task, significantly reducing the need for large labeled datasets. These advanced techniques, when applied effectively, can lead to groundbreaking insights and improvements in various domains.
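As a small illustration of how tree-based methods find structure, the sketch below searches for the single threshold on one feature that best separates two classes, scored by weighted Gini impurity. A full decision tree repeats this search recursively over all features, and ensembles such as Random Forests combine many such trees. The transaction amounts and labels are hypothetical, and the function names are assumptions for this example.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(values, labels):
    """Return (threshold, weighted_gini) for the best 'value <= threshold' split.

    The threshold is None if no split improves on the unsplit impurity.
    """
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best = (None, gini(labels))
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [label for _, label in pairs[:i]]
        right = [label for _, label in pairs[i:]]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (threshold, score)
    return best

# Hypothetical transaction amounts with fraud labels.
amounts = [12, 25, 40, 55, 300, 420, 510]
labels = ["ok", "ok", "ok", "ok", "fraud", "fraud", "fraud"]

threshold, impurity = best_split(amounts, labels)
```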

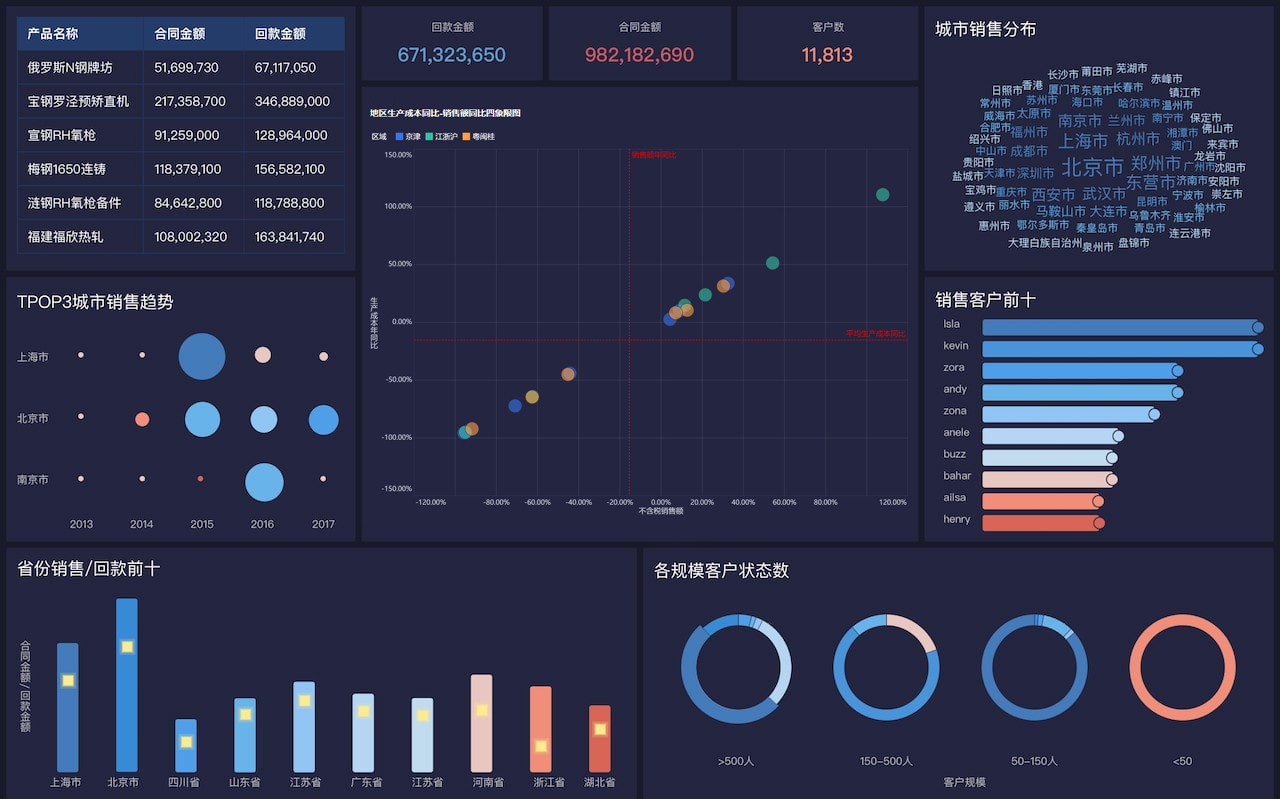

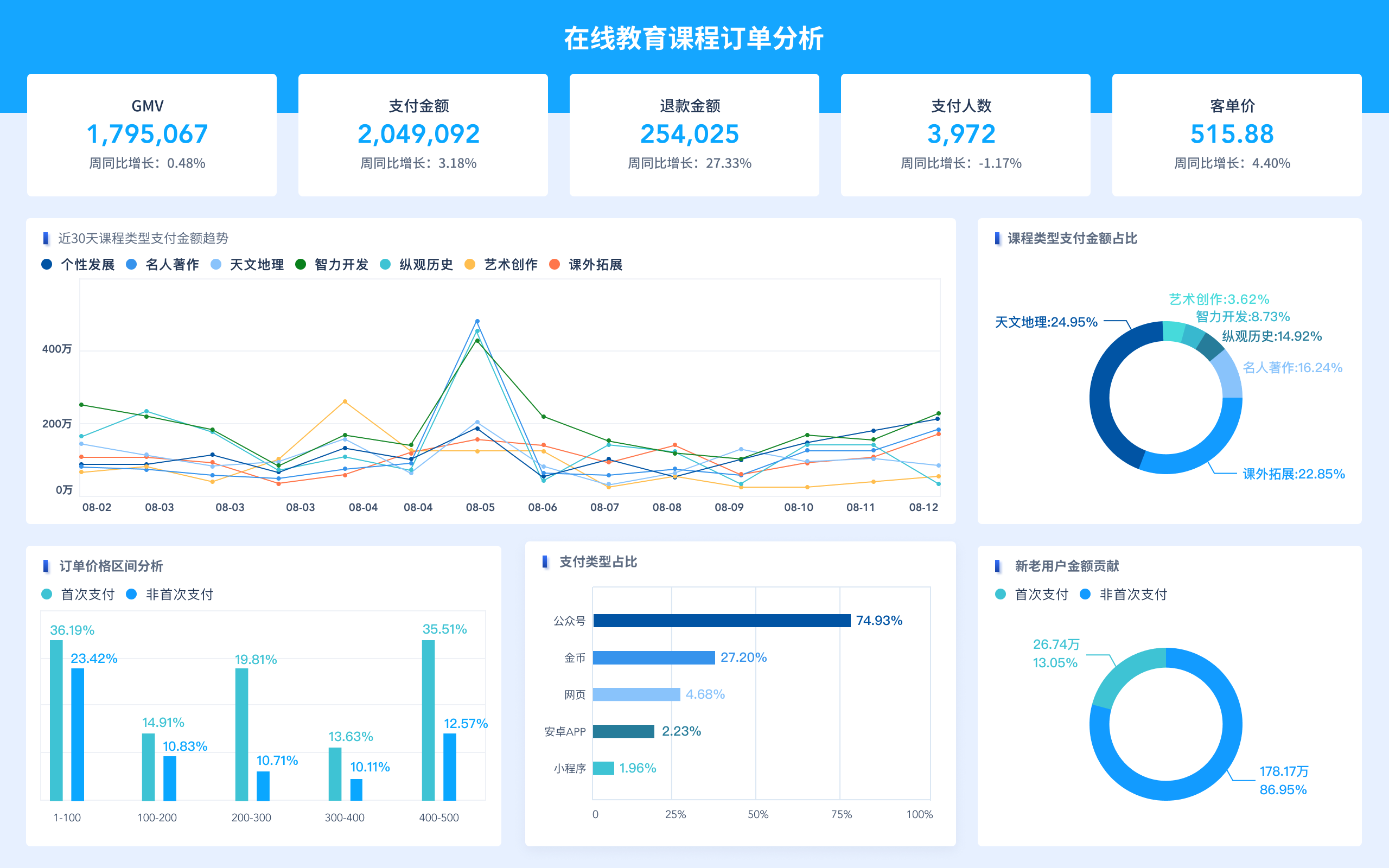

V. DATA VISUALIZATION

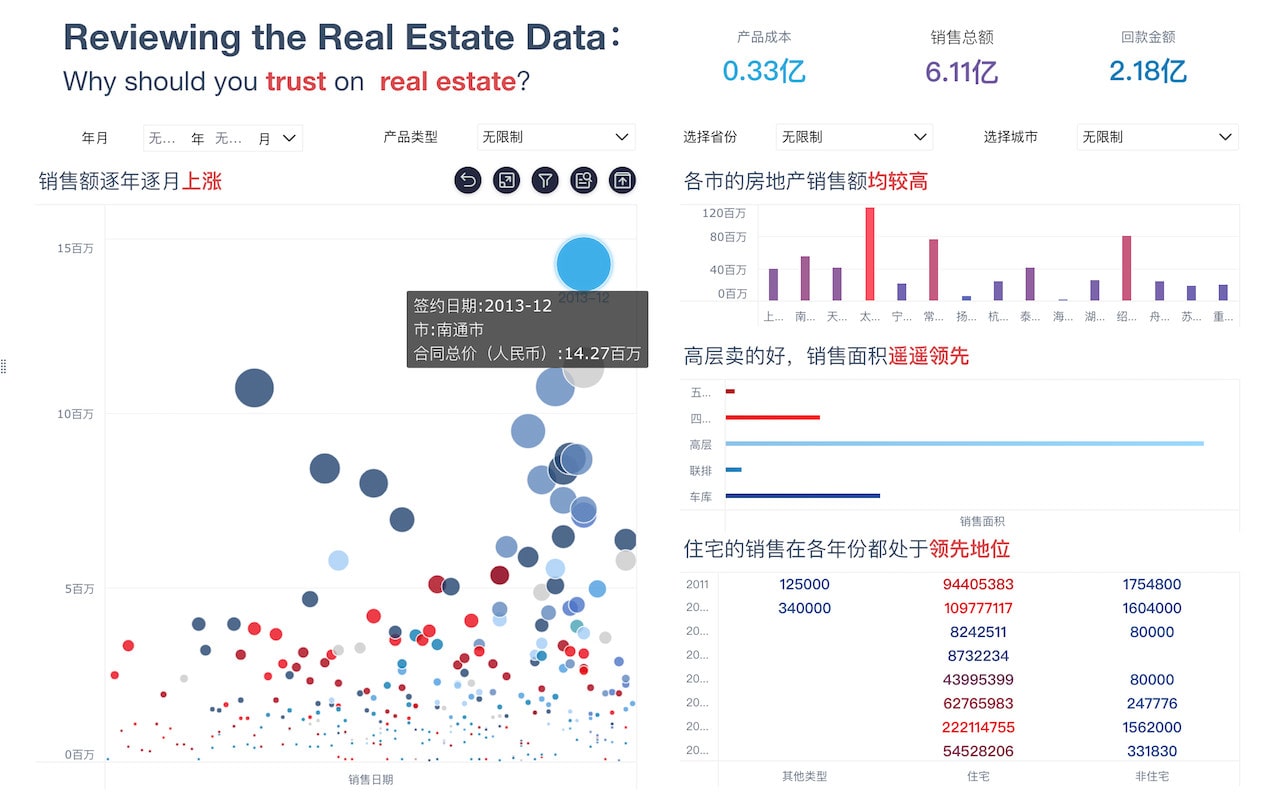

Data visualization is an essential component of data mining as it provides a visual context that helps in understanding complex data patterns and insights. Scatter plots and line charts are basic visualization tools for understanding relationships between two continuous variables. Bar charts and histograms are useful for categorical data and frequency distributions. Heatmaps provide a graphical representation of data where individual values are represented by colors, making them useful for visualizing correlation matrices or spatial data. Box plots are valuable for summarizing the distribution of a dataset and identifying outliers. Network graphs visualize relationships and interactions within data, such as social network connections or molecular structures. Geospatial visualizations map data points to geographic locations, providing insights into spatial patterns and trends. Advanced visualization tools like Tableau, Power BI, and D3.js offer interactive and dynamic visualizations, enabling users to explore data in depth. Effective visualization not only makes data more accessible but also aids in communicating findings to stakeholders in an intuitive and impactful manner.

VI. BIG DATA TECHNOLOGIES

Handling and processing massive datasets requires specialized big data technologies. Hadoop, an open-source framework, allows for the distributed storage and processing of large datasets across clusters of computers using simple programming models. Apache Spark is another powerful big data processing framework that provides in-memory processing capabilities, making it significantly faster than Hadoop MapReduce for certain tasks, notably iterative workloads that reuse the same data. NoSQL databases like MongoDB, Cassandra, and HBase are designed for horizontal scaling and can handle large volumes of unstructured data more efficiently than traditional relational databases. Data warehouses like Amazon Redshift, Google BigQuery, and Snowflake enable the storage and querying of large datasets, supporting complex analytical queries. Streaming technologies like Apache Kafka (event streaming) and Apache Flink (stream processing) allow for the real-time processing of data streams, making them suitable for applications that require real-time analytics. Cloud-based solutions offer scalable and flexible resources for big data processing, with services like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) providing a suite of tools for data storage, processing, and analysis. These technologies are essential for managing and extracting insights from vast amounts of data in an efficient and scalable manner.
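The "simple programming model" behind Hadoop is MapReduce: a map step emits key-value pairs, a shuffle step groups them by key, and a reduce step aggregates each group. The sketch below imitates that flow in a single Python process to show the shape of the computation. It does not use any Hadoop or Spark API; the function names and sample records are assumptions for illustration. In a real cluster the map and reduce calls run on different machines.

```python
from collections import defaultdict

def map_phase(record):
    """Map: emit a (word, 1) pair for every word in one input record."""
    for word in record.lower().split():
        yield word, 1

def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Reduce: collapse the values for one key into a single result."""
    return key, sum(values)

# Hypothetical input records; on a cluster these would be blocks of a large file.
records = [
    "spark and hadoop",
    "hadoop stores data",
    "spark processes data",
]

mapped = [pair for record in records for pair in map_phase(record)]
counts = dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
```

Because each record is mapped independently and each key is reduced independently, both phases can be spread across many workers, which is what lets the model scale to datasets that do not fit on one machine.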

VII. ETHICAL CONSIDERATIONS IN DATA MINING

Ethical considerations are paramount in data mining to ensure that data analysis practices are fair, transparent, and respect individual privacy. Data privacy involves protecting personal information from unauthorized access and ensuring that data collection practices comply with regulations like GDPR and CCPA. Bias and fairness in algorithms need to be addressed to prevent discrimination and ensure equitable outcomes across different demographic groups. Transparency and interpretability of models are crucial for building trust and understanding how decisions are made, particularly in critical applications like healthcare and finance. Consent and informed usage require that individuals are aware of how their data is being used and have given explicit permission for its use. Data security involves implementing robust measures to protect data from breaches and cyber-attacks. Accountability and governance frameworks are necessary to ensure that data mining activities are conducted responsibly and that there are mechanisms in place to address any ethical issues that arise. By adhering to ethical principles, organizations can build trust with their stakeholders and ensure that their data mining practices contribute positively to society.

VIII. CASE STUDIES AND APPLICATIONS

Real-world applications and case studies highlight the practical impact of data mining across various industries. In healthcare, data mining is used for predictive analytics to forecast disease outbreaks, patient risk factors, and treatment outcomes. Retail and e-commerce leverage data mining for customer segmentation, personalized marketing, and inventory management. In the financial sector, data mining techniques are employed for fraud detection, credit scoring, and algorithmic trading. Manufacturing uses data mining for predictive maintenance, quality control, and supply chain optimization. Telecommunications companies analyze customer data to reduce churn, optimize network performance, and develop new services. In the energy sector, data mining helps in demand forecasting, grid management, and optimizing energy consumption. Social media platforms utilize data mining for sentiment analysis, trend detection, and targeted advertising. Government and public sector applications include crime prediction, public health monitoring, and urban planning. These case studies demonstrate the diverse and transformative potential of data mining in driving innovation, efficiency, and informed decision-making across various domains.

Related FAQs:

How can I effectively mine large datasets?

To effectively mine large datasets, it is important to first understand the nature of your data and the specific goals of your analysis. Here are some strategies to consider:

- Data Preprocessing: Start with cleaning the data to remove duplicates, fill in missing values, and correct inconsistencies. This step is crucial because the quality of your analysis heavily depends on the quality of your data.
- Exploratory Data Analysis (EDA): Use statistical methods and visualization tools to explore your data. EDA helps identify patterns, trends, and anomalies that can guide your analysis. Tools like Python's Pandas and Matplotlib or R's ggplot2 are great for this.
- Feature Selection: With large datasets, not all features will be relevant. Utilize techniques like correlation matrices or feature importance scores from machine learning models to select the most impactful features for your analysis.
- Data Sampling: If the dataset is overwhelmingly large, consider using sampling techniques to analyze a representative subset of the data. This can speed up the mining process while still providing valuable insights.
- Utilize Machine Learning Algorithms: Implement machine learning algorithms that can handle large datasets effectively. Techniques such as clustering, classification, and regression can uncover hidden patterns and relationships within your data.
- Parallel Processing: Leverage modern computing capabilities by utilizing parallel processing. This allows you to split large datasets into smaller chunks and process them simultaneously, significantly speeding up the mining process.
- Use Big Data Technologies: Consider using big data frameworks like Apache Hadoop or Apache Spark. These technologies are designed to handle large-scale data processing and can enhance your data mining capabilities.
- Data Visualization: After mining your data, utilize visualization tools to present your findings in an understandable way. Tools such as Tableau or Power BI can help create insightful dashboards that make the data accessible to stakeholders.
- Continuous Learning: Stay updated with the latest data mining techniques and tools. The field of data science is rapidly evolving, and continuous learning will keep you competitive and knowledgeable.
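For the data sampling strategy in the list above, one option when the dataset is too large to hold in memory, or its size is unknown in advance, is reservoir sampling. The sketch below uses the classic single-pass version (often called Algorithm R), which keeps a uniform random sample of `k` items from a stream. The function name and the use of `range` as a stand-in data source are assumptions for illustration.

```python
import random

def reservoir_sample(stream, k, seed=None):
    """Return k items chosen uniformly at random from an iterable of unknown length."""
    rng = random.Random(seed)
    reservoir = []
    for index, item in enumerate(stream):
        if index < k:
            reservoir.append(item)       # fill the reservoir with the first k items
        else:
            j = rng.randint(0, index)    # inclusive on both ends
            if j < k:
                reservoir[j] = item      # replace with probability k / (index + 1)
    return reservoir

# Stand-in for a large dataset read record by record.
sample = reservoir_sample(range(100_000), k=5, seed=42)
```

Only `k` items are ever held in memory, so the same function works on a file iterator or a database cursor as well as on an in-memory sequence.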

What are the best tools for mining large datasets?

When it comes to mining large datasets, the choice of tools can significantly impact the efficiency and effectiveness of your analysis. Here are some of the best tools available:

- Apache Hadoop: This open-source framework allows for distributed storage and processing of large datasets across clusters of computers. It is highly scalable and supports various data processing tasks.
- Apache Spark: Known for its speed and ease of use, Spark is designed for large-scale data processing. It provides APIs for programming languages such as Java, Scala, Python, and R, making it versatile for data scientists.
- Python Libraries: Libraries such as Pandas for data manipulation, NumPy for numerical computations, and Scikit-learn for machine learning are invaluable for data analysis. Python is widely used for its simplicity and the extensive community support.
- R Programming: R is a powerful language for statistical analysis and data visualization. It offers a wide array of packages for data mining, such as dplyr for data manipulation and ggplot2 for visualization.
- Tableau: This data visualization tool allows users to create interactive and shareable dashboards. It can connect to various data sources, making it easy to visualize large datasets.
- Microsoft Power BI: Similar to Tableau, Power BI helps users visualize data and share insights across their organization. It integrates well with other Microsoft products, enhancing its usability for businesses already using Microsoft services.
- Google BigQuery: A serverless data warehouse that allows for super-fast SQL queries using the processing power of Google’s infrastructure. It is particularly useful for analyzing large datasets with minimal setup.
- RapidMiner: This data science platform offers a user-friendly interface for data preparation, machine learning, and model deployment. It is suitable for users who may not have extensive programming knowledge.
- KNIME: An open-source data analytics platform that supports data mining and machine learning. It provides a visual programming interface and is great for building data workflows without coding.
- Orange: This is another open-source data visualization and analysis tool. It offers a range of components for machine learning and is particularly user-friendly for beginners.

What are common challenges in mining large datasets and how can I overcome them?

Mining large datasets presents a unique set of challenges that can hinder the analysis process. Here are some common challenges along with strategies to overcome them:

- Data Quality Issues: Large datasets often contain errors, inconsistencies, and missing values. To tackle this, implement robust data cleaning processes before analysis. Automated tools can assist in identifying and rectifying common data quality issues.
- Computational Limitations: Processing large datasets can require significant computational resources. Consider using cloud computing platforms like AWS, Google Cloud, or Azure that provide scalable resources on demand, allowing you to process large amounts of data efficiently.
- Complexity of Data: Large datasets may include various data types, structures, and sources, making them complex to analyze. Utilize data integration tools to harmonize different data types and sources, ensuring a more coherent dataset for analysis.
- Overfitting in Machine Learning: When working with large datasets, there is a risk of overfitting models to the training data. Employ cross-validation techniques and ensure that your model is tested on unseen data to validate its performance accurately.
- Interpreting Results: Large datasets can yield complex results that are difficult to interpret. Utilize visualization tools to translate complex data findings into accessible insights. Clear and concise visualizations can help stakeholders understand the implications of the data.
- Security and Privacy Concerns: Handling large datasets often involves sensitive information. Ensure that you comply with data protection regulations, such as GDPR, and implement necessary security measures to protect data integrity and privacy.
- Integration of Real-Time Data: If your analysis requires real-time data, this can complicate the mining process. Consider using stream processing technologies that allow for the continuous ingestion and processing of data, ensuring that your analysis remains current.
- Scalability of Solutions: As data volumes grow, so must your mining solutions. Design systems and processes that can scale with your data needs, allowing for flexibility as your datasets expand.
- Skill Gaps: The field of data mining requires a range of skills, and there may be gaps in your team’s expertise. Invest in training and development programs to upskill your team or consider collaborating with data science professionals who possess the necessary experience.
- Time Constraints: Mining large datasets can be time-consuming, and deadlines can be tight. Utilize efficient algorithms and tools designed for speed, and consider dividing tasks among team members to streamline the process.

By addressing these challenges with proactive strategies, you can enhance your data mining efforts and derive meaningful insights from large datasets.
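The cross-validation recommended for guarding against overfitting can be sketched as a k-fold index splitter: the data is divided into `k` folds, and each fold serves once as the unseen test set while the remaining folds are used for training. The function name and the sizes below are assumptions for illustration. The sketch only produces the index splits; fitting and scoring a model on each split is left to whatever library is in use.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)         # shuffle once, reproducibly
    folds = [indices[i::k] for i in range(k)]    # k roughly equal folds
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

# Hypothetical dataset of 10 rows split into 5 folds.
splits = list(k_fold_indices(10, k=5))
```

Averaging the model's score over the test folds gives a more reliable estimate of performance on unseen data than a single train/test split.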
