Data Verification ensures the accuracy, consistency, and completeness of your data. It plays a crucial role in data management by preventing errors that could lead to misguided strategies and financial losses. Recognizing its importance is the first step toward maintaining data quality. Data Verification acts as a quality control mechanism, safeguarding your data's integrity. Tools like FineDataLink, FineReport, and FineBI support this process by providing efficient data integration and analysis solutions. By prioritizing Data Verification, you can avoid costly mistakes and make informed decisions.

Understanding Data Verification

Definition and Explanation of Data Verification

What is Data Verification?

Data Verification refers to the process of ensuring that your data is accurate, complete, and consistent. It acts as a quality control mechanism, confirming that the data you use for decision-making is reliable. This process involves checking data against predefined rules or criteria to identify any discrepancies or errors. By verifying data, you can trust the information you rely on for strategic decisions.

Key Components of Data Verification

Data Verification consists of several key components:

- Accuracy: Ensures that data reflects the real-world values it represents.

- Completeness: Confirms that all necessary data is present and accounted for.

- Consistency: Checks that data remains uniform across different datasets and systems.

These components work together to maintain the integrity of your data, preventing costly mistakes and enhancing decision-making processes.

Historical Context of Data Verification

Evolution of Data Verification Practices

Data Verification has evolved significantly over the years. Initially, manual methods dominated the process, requiring individuals to painstakingly check data entries. However, the advent of technology brought about automated systems, revolutionizing how data verification is conducted. Today, machine learning algorithms play a pivotal role in automating this process, improving accuracy and efficiency. Companies like Google and Microsoft have harnessed these technologies to enhance their data verification practices.

Milestones in Data Verification

Several milestones mark the evolution of data verification:

- Introduction of Automated Systems: The shift from manual to automated verification marked a significant leap in efficiency.

- Integration of Machine Learning: Machine learning algorithms learn patterns from historical data and flag anomalies that may indicate errors, fraud, or inconsistencies.

- Development of Data Integration Platforms: These platforms bring data from many sources together, making it faster to apply validation rules consistently.

These milestones highlight the continuous advancements in data verification, underscoring its importance in maintaining data quality and reliability.

Importance of Data Verification

Ensuring Data Accuracy

Data accuracy stands as a cornerstone in your decision-making process. When you verify data, you ensure that the information you rely on is precise and trustworthy. This accuracy directly impacts the quality of your decisions.

Impact on Decision Making

Accurate data empowers you to make informed decisions. When you base your strategies on verified data, you minimize the risk of errors. This leads to more effective outcomes and enhances your ability to achieve your goals. For instance, in sales, verified data makes your sales forecasts more reliable, helping you allocate resources efficiently.

Role in Data Integrity

Data integrity refers to maintaining and assuring the accuracy and consistency of data over its lifecycle. Verification plays a vital role in this process. By checking your data regularly, you detect corruption or unintended changes early, before they spread into reports and downstream systems. This integrity builds trust in your data systems, allowing you to use them confidently.
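One common way to check that stored data has not changed unexpectedly is to keep a cryptographic checksum next to each record and recompute it later. The Python sketch below assumes records are JSON-serializable dictionaries; `record_digest` and `is_intact` are hypothetical helper names, not part of any product mentioned in this article. A matching digest shows only that the record is unchanged since the digest was taken, not that the original value was correct.

```python
import hashlib
import json


def record_digest(record: dict) -> str:
    """Return a SHA-256 digest of a record using a canonical JSON encoding."""
    # sort_keys and fixed separators make the encoding deterministic,
    # so the same logical record always produces the same digest.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_intact(record: dict, stored_digest: str) -> bool:
    """True if the record still matches the digest captured when it was written."""
    return record_digest(record) == stored_digest


# Capture a digest when the record is first stored ...
original = {"id": 17, "amount": "250.00", "currency": "EUR"}
digest = record_digest(original)

# ... and recompute it later, e.g. after a copy, backup restore, or migration.
print(is_intact(original, digest))                           # True
print(is_intact({**original, "amount": "2500.00"}, digest))  # False
```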

Enhancing Data Reliability

Reliable data forms the backbone of any data-driven process. Verification enhances this reliability by ensuring that your data is consistent and dependable.

Trust in Data-Driven Processes

When you verify data, you build trust in your data-driven processes. This trust is crucial for making decisions based on data insights. Verified data assures you that the information you use is accurate and up-to-date, fostering confidence in your analytical outcomes.

Prevention of Data Errors

Verification acts as a safeguard against data errors. By identifying and correcting discrepancies early, you prevent errors from propagating through your systems. This proactive approach reduces the risk of costly mistakes and ensures that your data remains a reliable asset.

Methods of Data Verification

Manual Verification Techniques

Pros and Cons

Manual verification involves human intervention to check data accuracy. You might find this method beneficial for small datasets or when dealing with complex data that requires human judgment. However, it can be time-consuming and prone to human error. The manual process often lacks scalability, making it less suitable for large datasets. Despite these drawbacks, manual verification allows for a nuanced understanding of data, which automated systems might miss.

Common Practices

In manual verification, you typically follow a set of established practices. These include cross-referencing data with original sources, checking for consistency across different datasets, and validating data entries against predefined criteria. You might also conduct spot checks or audits to ensure data integrity. These practices help maintain data quality but require significant time and effort.
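Spot checks can be partly scripted even when the judgment stays manual. This Python sketch draws a reproducible random sample of stored records and cross-references each one against its original source. The `spot_check` function and the in-memory dictionaries are illustrative assumptions; in practice the source of truth might be scanned forms, invoices, or another system.

```python
import random


def spot_check(records, source_of_truth, sample_size, seed=None):
    """Compare a random sample of records against the original source.

    records: dict mapping record ID -> value as stored in your system
    source_of_truth: dict mapping record ID -> value from the original source
    Returns a list of (record_id, stored_value, source_value) mismatches.
    """
    rng = random.Random(seed)  # a fixed seed makes the audit sample reproducible
    sample_ids = rng.sample(sorted(records), k=min(sample_size, len(records)))
    mismatches = []
    for record_id in sample_ids:
        stored_value = records[record_id]
        expected = source_of_truth.get(record_id)
        if stored_value != expected:
            mismatches.append((record_id, stored_value, expected))
    return mismatches


# Hypothetical data: one name was mistyped when it was entered.
stored = {1: "Alice", 2: "Bob", 3: "Carol", 4: "Dave"}
source = {1: "Alice", 2: "Bob", 3: "Caroline", 4: "Dave"}
print(spot_check(stored, source, sample_size=4, seed=1))  # [(3, 'Carol', 'Caroline')]
```

A reviewer still decides what each mismatch means; the script only makes the sample fair and repeatable.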

Automated Verification Tools

Benefits of Automation

Automated verification tools offer a more efficient alternative to manual methods. They quickly identify inconsistencies, missing values, or anomalies in large datasets. By using these tools, you can reduce the time spent on data verification and minimize human error. Automation enhances scalability, allowing you to handle vast amounts of data with ease. It also ensures consistent application of verification rules, improving overall data quality.
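Consistent application of rules is easiest when the rules are written down as data rather than scattered through code. The following Python sketch, with hypothetical rule names and order fields, applies every rule to every row and reports which rows break which rule.

```python
from typing import Callable, Dict, List

# Each rule is a name plus a predicate that returns True when a row passes.
# Keeping rules as data means every row is checked by exactly the same logic.
Rule = Callable[[dict], bool]

RULES: Dict[str, Rule] = {
    "quantity_positive": lambda row: isinstance(row.get("quantity"), int) and row["quantity"] > 0,
    "price_non_negative": lambda row: isinstance(row.get("unit_price"), (int, float)) and row["unit_price"] >= 0,
    "status_known": lambda row: row.get("status") in {"open", "shipped", "cancelled"},
}


def apply_rules(rows: List[dict], rules: Dict[str, Rule]) -> Dict[str, List[int]]:
    """Return, for each rule name, the indexes of rows that violate it."""
    violations: Dict[str, List[int]] = {name: [] for name in rules}
    for index, row in enumerate(rows):
        for name, rule in rules.items():
            if not rule(row):
                violations[name].append(index)
    return violations


orders = [
    {"quantity": 2, "unit_price": 9.5, "status": "open"},
    {"quantity": 0, "unit_price": 4.0, "status": "shipped"},
    {"quantity": 1, "unit_price": -3.0, "status": "lost"},
]
print(apply_rules(orders, RULES))
# {'quantity_positive': [1], 'price_non_negative': [2], 'status_known': [2]}
```

Adding or changing a check then means editing the `RULES` table, which also makes the rule set easy to review and version.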

Popular Tools and Technologies

Many modern tools and algorithms automate data validation. They run data quality checks that flag anomalies, missing values, and outliers, and they apply the same checks to every record on every run. Consider adopting such tools to make your verification faster, more consistent, and easier to scale, so that your data remains accurate and trustworthy.
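As a small illustration of the checks such tools run, this Python sketch flags missing required values and duplicate keys in a list of records. The field names and the `quality_flags` function are assumptions for the example; dedicated data quality tools offer far richer checks.

```python
from collections import Counter


def quality_flags(rows, required_fields, key_field):
    """Flag rows with missing required values and keys that appear more than once."""
    missing = []
    for index, row in enumerate(rows):
        # Treat absent keys, None, and blank strings as missing.
        absent = [f for f in required_fields
                  if row.get(f) is None or str(row.get(f)).strip() == ""]
        if absent:
            missing.append((index, absent))
    key_counts = Counter(row.get(key_field) for row in rows)
    duplicates = sorted(k for k, n in key_counts.items() if n > 1 and k is not None)
    return {"missing": missing, "duplicate_keys": duplicates}


rows = [
    {"id": "C1", "email": "a@example.com", "country": "DE"},
    {"id": "C2", "email": "", "country": "FR"},
    {"id": "C1", "email": "c@example.com", "country": None},
]
print(quality_flags(rows, ["email", "country"], "id"))
# {'missing': [(1, ['email']), (2, ['country'])], 'duplicate_keys': ['C1']}
```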

Applications of Data Verification

In Business

Data Verification in Financial Reporting

In the realm of business, data verification plays a pivotal role in financial reporting. You rely on accurate financial data to make informed decisions. Verification ensures that the financial statements reflect true and fair values. This process involves checking the accuracy of transactions, balances, and other financial data. By verifying this data, you can catch errors that might lead to financial misstatements and detect signs of fraud. Accurate financial reporting builds trust with stakeholders and helps you meet regulatory requirements.
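A basic verification step in financial reporting is reconciling balances: the opening balance plus all recorded transactions should equal the reported closing balance. This Python sketch assumes amounts arrive as strings and uses `Decimal` to avoid binary floating-point rounding; the `reconcile` function is hypothetical.

```python
from decimal import Decimal


def reconcile(opening_balance, transactions, reported_closing_balance):
    """Check that opening balance + transactions equals the reported closing balance.

    Amounts are decimal strings converted to Decimal, so cents are exact.
    Returns (is_balanced, difference), where difference = reported - computed.
    """
    computed = Decimal(opening_balance) + sum(
        (Decimal(amount) for amount in transactions), Decimal("0")
    )
    difference = Decimal(reported_closing_balance) - computed
    return difference == 0, difference


movements = ["250.10", "-75.25", "19.99"]
print(reconcile("1000.00", movements, "1194.84"))  # (True, Decimal('0.00'))
print(reconcile("1000.00", movements, "1194.48"))  # (False, Decimal('-0.36'))
```

A non-zero difference does not say which entry is wrong, but it tells you the books need investigating before the figures are reported.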

Role in Customer Data Management

Customer data management benefits significantly from data verification. You need reliable customer information to tailor marketing strategies and improve customer service. Verification ensures that customer data is accurate, complete, and up-to-date. This process involves cross-referencing customer details with original sources and checking for consistency across different systems. By maintaining verified customer data, you enhance customer satisfaction and loyalty. It also helps in personalizing customer interactions and improving overall business performance.
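Checking consistency across systems often means comparing the same customers as stored in two places. The Python sketch below assumes a CRM and a billing system each export customer records keyed by ID; the system names, fields, and the case-insensitive normalization are illustrative choices, not a prescribed method.

```python
def _normalize(value):
    """Lower-case and trim a value; treat a missing value as an empty string."""
    return "" if value is None else str(value).strip().lower()


def compare_systems(crm, billing, fields):
    """Compare customer records held in two systems.

    crm, billing: dicts mapping customer ID -> dict of field values
    fields: the fields both systems are expected to agree on
    """
    report = {
        "only_in_crm": sorted(crm.keys() - billing.keys()),
        "only_in_billing": sorted(billing.keys() - crm.keys()),
        "field_mismatches": [],
    }
    for customer_id in sorted(crm.keys() & billing.keys()):
        for field in fields:
            if _normalize(crm[customer_id].get(field)) != _normalize(billing[customer_id].get(field)):
                report["field_mismatches"].append((customer_id, field))
    return report


crm = {"C1": {"email": "Ana@Example.com", "city": "Lyon"},
       "C2": {"email": "bo@example.com", "city": "Paris"}}
billing = {"C1": {"email": "ana@example.com ", "city": "Lille"},
           "C3": {"email": "cy@example.com", "city": "Nice"}}
print(compare_systems(crm, billing, ["email", "city"]))
# {'only_in_crm': ['C2'], 'only_in_billing': ['C3'], 'field_mismatches': [('C1', 'city')]}
```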

In Research

Ensuring Validity of Research Data

In research, data verification ensures the validity of your findings. You must verify data to confirm that it accurately represents the phenomena being studied. This process involves checking data for accuracy, completeness, and consistency. Verified data enhances the credibility of your research and supports sound conclusions. By ensuring data validity, you contribute to the advancement of knowledge and avoid misleading results.

Data Verification in Scientific Studies

Scientific studies rely heavily on data verification to maintain integrity. You need to verify data to ensure that it meets the standards of scientific rigor. This process involves validating data against predefined criteria and checking for anomalies or errors. Verified data supports the reproducibility of scientific experiments and strengthens the reliability of findings. By prioritizing data verification, you uphold ethical standards and contribute to the credibility of scientific research.

Challenges in Data Verification

Common Obstacles

Data Volume and Complexity

You often face challenges with the sheer volume and complexity of data. Large datasets can overwhelm your verification processes, making it difficult to ensure accuracy and consistency. Complex data structures add another layer of difficulty. You need to navigate through intricate relationships and dependencies within the data. This complexity can lead to errors if not managed properly.
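Relationships between datasets are a typical source of this complexity. The Python sketch below shows one such check: finding orders that reference customers who do not exist (a referential integrity check). It streams the child rows one at a time, so only the set of parent IDs must fit in memory; the CSV layout and field names are assumptions.

```python
import csv
import io


def orphaned_references(child_rows, parent_ids, fk_field):
    """Yield child rows whose foreign key has no matching parent ID.

    child_rows can be any iterable, such as a csv.DictReader over a large
    file, so rows are processed one at a time instead of all at once.
    """
    for row in child_rows:
        if row.get(fk_field) not in parent_ids:
            yield row


# Hypothetical data: known customer IDs and an orders file in CSV form.
customers = {"C1", "C2"}
orders_csv = io.StringIO("order_id,customer_id\nO1,C1\nO2,C9\nO3,C2\n")

orphans = list(orphaned_references(csv.DictReader(orders_csv), customers, "customer_id"))
print(orphans)  # [{'order_id': 'O2', 'customer_id': 'C9'}]
```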

"Data verification refers to the process of assessing the accuracy, consistency, and completeness of data." - Study on Challenges in Data Verification

Human Error and Bias

Human error and bias present significant obstacles in data verification. Manual processes increase the risk of mistakes. You might overlook discrepancies or misinterpret data. Bias can also skew your verification efforts. Personal judgments or assumptions may influence how you validate data. This can lead to inaccurate results and misguided decisions.

Overcoming Challenges

Strategies for Effective Verification

To overcome these challenges, you should implement effective strategies. Start by establishing clear verification protocols. Define specific criteria and rules for data validation. Regularly review and update these protocols to adapt to changing data needs. Training your team on best practices can also reduce errors and bias. Encourage a culture of accuracy and attention to detail.

Technological Solutions

Leveraging technology can significantly enhance your data verification efforts. Advanced tools like machine learning algorithms and data analytics can detect anomalies and inconsistencies in your datasets. These technologies automate the verification process, reducing human error and increasing efficiency.
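Machine learning models are one option for anomaly detection; a simpler statistical baseline is often a useful first step. The Python sketch below is not machine learning: it uses the modified z-score, based on the median and the median absolute deviation (MAD), with the commonly cited 3.5 cutoff. The sample data and the `robust_outliers` name are hypothetical.

```python
from statistics import median


def robust_outliers(values, threshold=3.5):
    """Return indexes of values whose modified z-score exceeds the threshold.

    The median and MAD are less distorted by the outliers themselves than
    the mean and standard deviation would be.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # at least half the values equal the median; the score is undefined
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - med) / mad) > threshold]


daily_orders = [102, 98, 105, 101, 99, 97, 480, 103]
print(robust_outliers(daily_orders))  # [6]
```

A flagged value is a candidate for review, not proof of an error: 480 orders might be a data entry mistake or a genuine promotion day.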

"Entrepreneurs should leverage advanced technologies such as machine learning algorithms and data analytics to detect anomalies, inconsistencies, and errors in their datasets." - Study on Leveraging Technology for Data Verification

Additionally, consider privacy-enhancing technologies such as differential privacy. These methods let you run aggregate checks on sensitive data without exposing individual records. By adding carefully calibrated random noise to aggregate results, differential privacy limits how much any single person's data can influence, and therefore reveal through, the published output. More noise means stronger protection but less precise results, so balancing verification accuracy against data protection is crucial in today's privacy-conscious environment.

"Techniques like differential privacy allow organizations to validate data without compromising individual privacy." - Study on Privacy-Enhancing Technologies for Data Verification
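To show how noise protects individuals in an aggregate check, here is a minimal Python sketch of the Laplace mechanism for a counting query, a standard building block of differential privacy. A count changes by at most 1 when one person's record is added or removed, so the noise scale is 1 / epsilon. The function names and the epsilon value are illustrative; naive floating-point noise sampling has known weaknesses, so production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale, rng=random):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    rate = 1 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon, rng=random):
    """Return a noisy count satisfying epsilon-differential privacy.

    A count has sensitivity 1 (one record changes it by at most 1),
    so Laplace noise with scale 1 / epsilon is added to the true count.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical example: how many people are aged 40 or over, released privately.
rng = random.Random(7)
people = [{"age": a} for a in (23, 35, 41, 19, 67, 52)]
noisy = private_count(people, lambda p: p["age"] >= 40, epsilon=0.5, rng=rng)
print(f"noisy count: {noisy:.2f} (true count is 3)")
```

Smaller epsilon values give stronger privacy but noisier counts, which is the accuracy-versus-protection trade-off discussed above.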

By adopting these strategies and technologies, you can effectively tackle the challenges in data verification, ensuring your data remains a reliable asset for decision-making.

Role of FanRuan in Data Verification

FanRuan plays a pivotal role in enhancing data verification processes through its innovative solutions. By leveraging advanced technologies, FanRuan helps businesses maintain data accuracy and reliability.

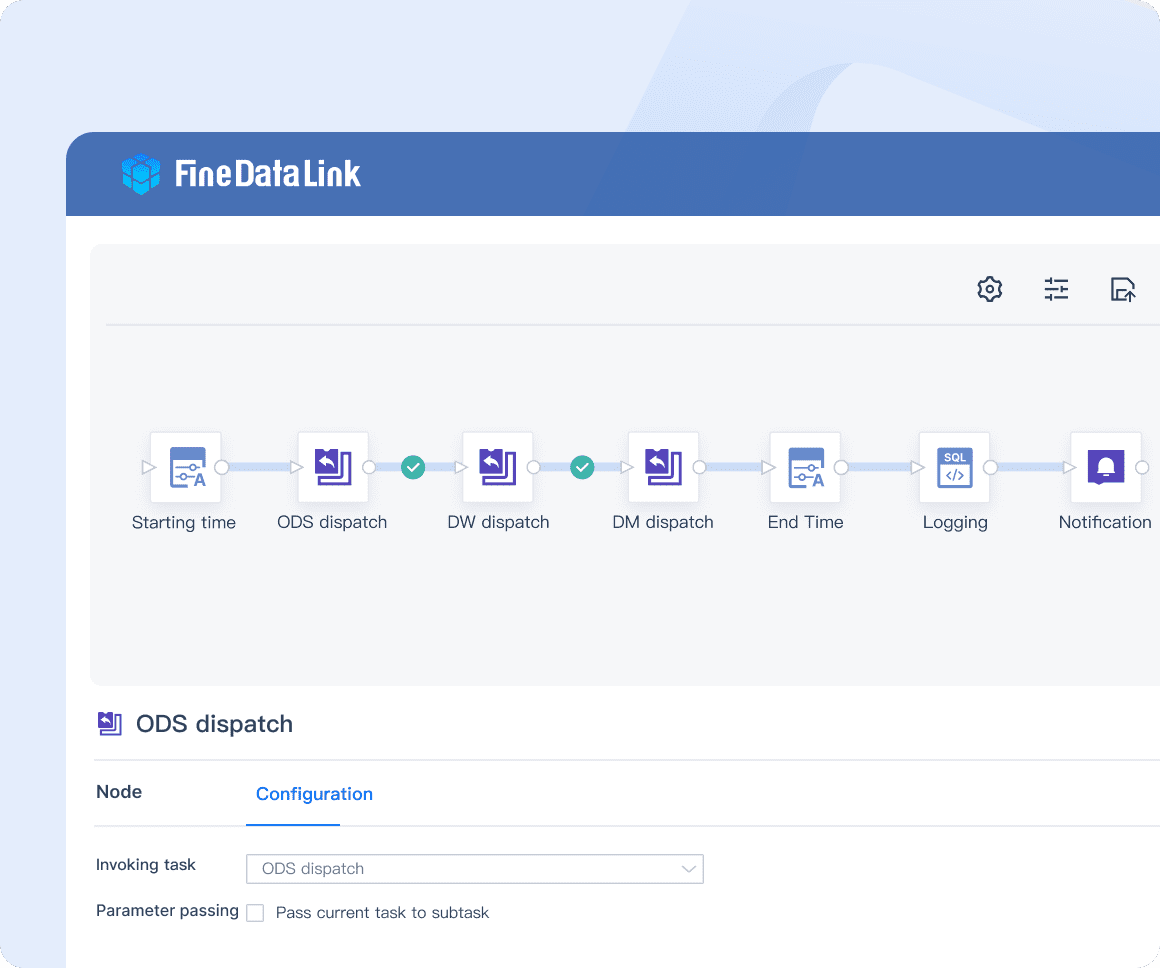

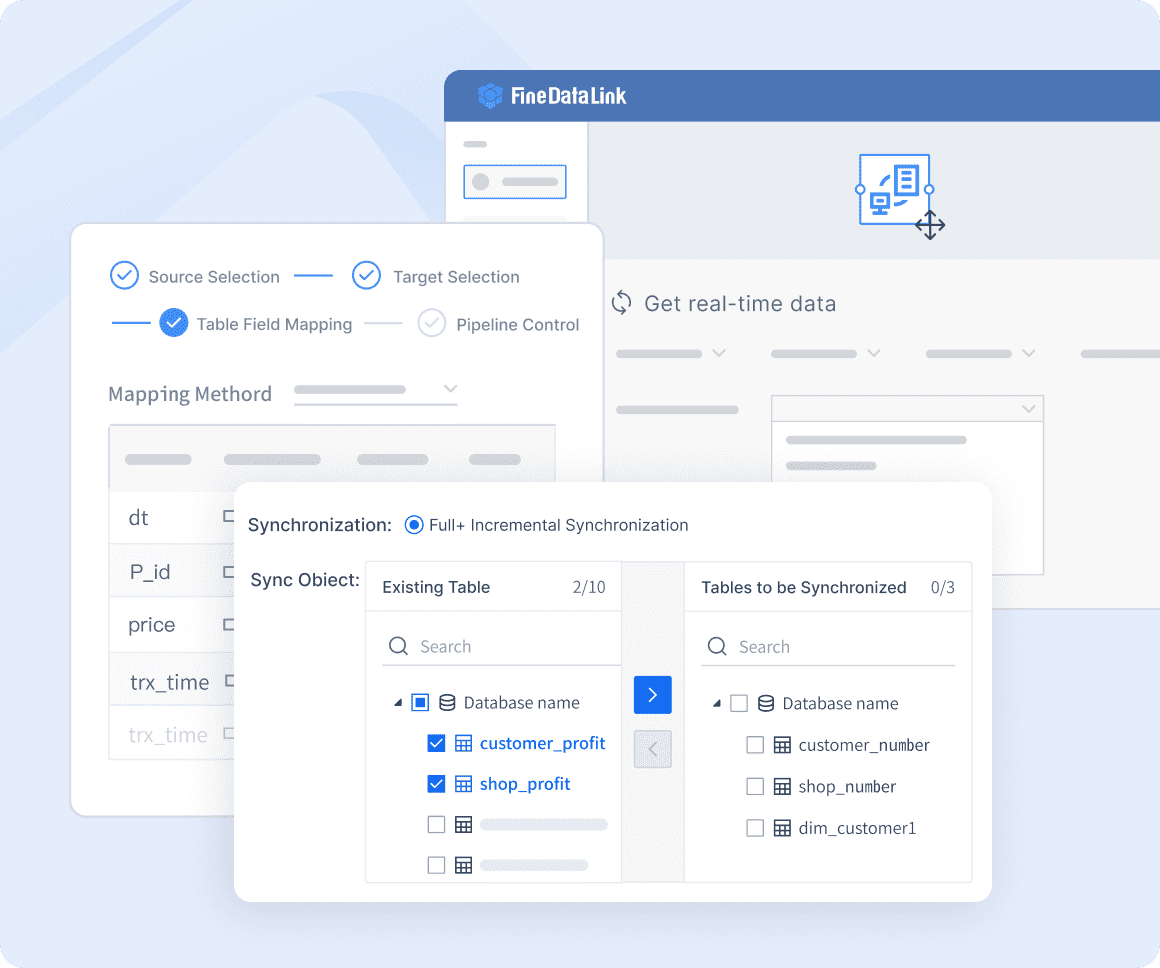

FineDataLink's Contribution

FineDataLink serves as a comprehensive platform for data integration and verification. It simplifies complex data tasks, making it easier for you to manage and verify data efficiently.

Real-Time Data Synchronization

With FineDataLink, you achieve real-time data synchronization. This feature ensures that your data remains up-to-date across various systems. By synchronizing data in real-time, you minimize discrepancies and enhance data accuracy. This capability is crucial for businesses that rely on timely data for decision-making.
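Whatever tool performs the synchronization, you can confirm afterwards that source and target agree. This Python sketch compares row counts plus an order-independent fingerprint of the rows. It is a generic technique, not a description of how FineDataLink works internally, and it assumes both sides already represent rows as tuples of identically typed values (for example, `1` and `"1"` hash differently).

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, digest) for a table, independent of row order.

    Each row is hashed on its own; the sorted row hashes are then hashed
    together, so the same rows in a different order give the same result.
    """
    row_hashes = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest() for row in rows
    )
    combined = hashlib.sha256("".join(row_hashes).encode("ascii")).hexdigest()
    return len(row_hashes), combined


def in_sync(source_rows, target_rows):
    """True when both sides hold the same rows (duplicates included)."""
    return table_fingerprint(source_rows) == table_fingerprint(target_rows)


source = [(1, "Ana", "Lyon"), (2, "Bo", "Paris")]
target = [(2, "Bo", "Paris"), (1, "Ana", "Lyon")]
print(in_sync(source, target))      # True: same rows, different order
print(in_sync(source, target[:1]))  # False: a row is missing from the target
```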

ETL/ELT Processes

FineDataLink also excels in ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes. These processes are essential for preparing data for analysis. By automating these tasks, FineDataLink reduces manual errors and improves data quality. You benefit from streamlined data workflows, ensuring that your data is ready for verification and analysis.
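A simple safeguard in any ETL pipeline is accounting for every row: each extracted row should end up either loaded or explicitly rejected with a reason. The Python sketch below shows that idea with plain functions; `run_etl`, `to_order`, and the in-memory `warehouse` list are hypothetical stand-ins for real connectors, not FineDataLink features.

```python
def run_etl(source_rows, transform, load):
    """Extract, transform, and load rows, then reconcile the row counts.

    transform(row) returns a cleaned row or raises ValueError for bad input.
    load(rows) writes the cleaned rows and returns how many it wrote.
    """
    extracted = list(source_rows)                # Extract
    cleaned, rejected = [], []
    for row in extracted:                        # Transform
        try:
            cleaned.append(transform(row))
        except ValueError as exc:
            rejected.append((row, str(exc)))     # keep the reason for review
    loaded = load(cleaned)                       # Load
    # Every extracted row must be accounted for as either loaded or rejected.
    if loaded + len(rejected) != len(extracted):
        raise RuntimeError(
            f"row count mismatch: extracted={len(extracted)}, "
            f"loaded={loaded}, rejected={len(rejected)}"
        )
    return {"extracted": len(extracted), "loaded": loaded, "rejected": rejected}


# Hypothetical transform and loader for illustration.
def to_order(row):
    quantity = int(row["qty"])  # int() raises ValueError for text such as "x"
    if quantity <= 0:
        raise ValueError("qty must be positive")
    return {"sku": row["sku"].strip().upper(), "qty": quantity}


warehouse = []


def load_into_list(rows):
    warehouse.extend(rows)
    return len(rows)


result = run_etl(
    [{"sku": " a1 ", "qty": "3"}, {"sku": "b2", "qty": "x"}, {"sku": "c3", "qty": "0"}],
    to_order,
    load_into_list,
)
print(result["loaded"], len(result["rejected"]))  # 1 2
```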

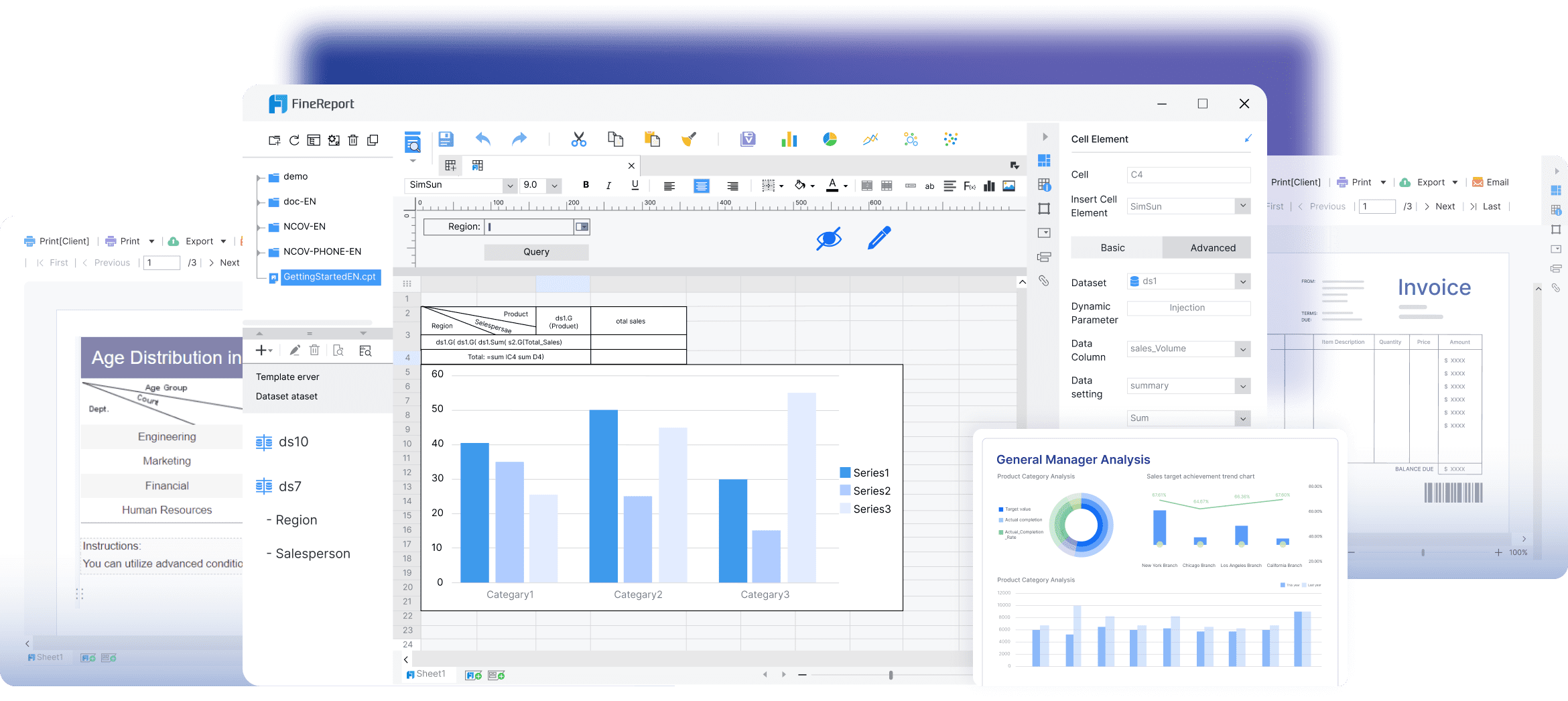

FineReport and FineBI's Impact

FineReport and FineBI further enhance data verification by providing robust tools for data analysis and visualization.

Enhancing Data Accuracy

FineReport allows you to create detailed reports that highlight data accuracy. By using this tool, you can identify and correct errors in your datasets. The ability to generate precise reports ensures that your data remains reliable and trustworthy.

Improving Data Visualization

FineBI transforms raw data into insightful visualizations. This transformation helps you understand complex data patterns and trends. By visualizing data effectively, FineBI aids in identifying anomalies and inconsistencies. This visual approach enhances your ability to verify data and make informed decisions.

FanRuan collaborates with leading companies to drive digital transformation and smart manufacturing. By integrating FineDataLink, FineReport, and FineBI into your data management strategy, you can achieve superior data verification and maintain high data quality standards.

Comparing Data Verification and Data Validation

Definitions and Differences

What is Data Validation?

Data validation ensures that your data meets specific criteria before processing. It checks for accuracy, format, and completeness. You use validation to confirm that data inputs align with expected values. This process prevents errors from entering your system. For example, when entering a date, validation ensures the format is correct. It acts as a gatekeeper, allowing only valid data to proceed.
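The date example can be sketched in a few lines of Python. This version accepts only real calendar dates written as zero-padded `YYYY-MM-DD` (an assumed house format) and raises `ValueError` for anything else, so invalid input is stopped at the gate.

```python
from datetime import date, datetime


def validate_iso_date(text: str) -> date:
    """Return the date if text is a real calendar date written as YYYY-MM-DD.

    Raises ValueError otherwise, so invalid input never reaches later steps.
    """
    parsed = datetime.strptime(text, "%Y-%m-%d").date()  # rejects e.g. 2023-02-29
    # strptime also accepts unpadded input such as "2024-3-5";
    # require the zero-padded form so every stored date looks the same.
    if parsed.isoformat() != text:
        raise ValueError(f"expected zero-padded YYYY-MM-DD, got {text!r}")
    return parsed


print(validate_iso_date("2024-02-29"))  # 2024-02-29 (2024 is a leap year)
for bad in ("2023-02-29", "2024-3-5", "05/03/2024"):
    try:
        validate_iso_date(bad)
    except ValueError as exc:
        print("rejected:", bad, "|", exc)
```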

Key Differences Between Verification and Validation

Data verification and validation serve distinct purposes. Verification confirms the accuracy, consistency, and completeness of data already collected. It checks if data aligns with predefined rules. Validation, on the other hand, ensures data meets specific criteria before entry. While verification focuses on existing data, validation targets data inputs. Both processes are essential for maintaining data quality.

When to Use Each Process

Scenarios for Data Verification

You should use data verification when you need to ensure data accuracy and reliability. It is crucial during data migration to confirm data integrity. Verification is also vital in auditing processes. It helps identify discrepancies in financial records. Use verification when analyzing historical data to detect anomalies. This process ensures your data remains a trustworthy asset.

Scenarios for Data Validation

Data validation is necessary when entering new data into your system. It prevents incorrect data from entering your database. Use validation when collecting data from external sources. It ensures data meets your standards before processing. Validation is also essential in user input forms. It checks for correct formats and required fields. By validating data, you maintain high data quality from the start.
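For user input forms, it helps to report every problem at once instead of stopping at the first. This Python sketch checks required fields and a deliberately loose email shape; the field list and the regular expression are assumptions, and real email address validation is considerably more involved.

```python
import re

# A loose shape check: something@something.something with no spaces.
# It only catches obvious typos; it does not prove an address exists.
EMAIL_SHAPE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

REQUIRED_FIELDS = ("name", "email", "country")


def validate_form(form):
    """Return a list of error messages; an empty list means the form is valid."""
    errors = []
    for field in REQUIRED_FIELDS:
        if not str(form.get(field) or "").strip():
            errors.append(f"{field} is required")
    email = str(form.get("email") or "").strip()
    if email and not EMAIL_SHAPE.match(email):
        errors.append("email is not in a valid format")
    return errors


print(validate_form({"name": "Ana", "email": "ana@example", "country": ""}))
# ['country is required', 'email is not in a valid format']
print(validate_form({"name": "Ana", "email": "ana@example.com", "country": "FR"}))
# []
```

Collecting all errors lets the user fix the whole form in one pass, which tends to reduce repeated, partially corrected submissions.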

Future of Data Verification

Emerging Trends

Impact of AI and Machine Learning

You will find that AI and machine learning are transforming data verification. These technologies automate repetitive checks, improving both accuracy and efficiency. AI-powered systems can validate data automatically and at scale, which helps build trust with stakeholders. Machine learning algorithms learn patterns from existing data and flag records that deviate from them, reducing the manual effort needed to keep collected data clean and well organized. This evolution offers strategic advantages in a data-driven world.

Innovations in Verification Technologies

Innovations in verification technologies continue to emerge. You will see tools that integrate AI and machine learning to improve data accuracy. These tools automate data validation, reducing manual effort and minimizing errors. They also enhance the speed of verification processes, allowing you to handle large datasets efficiently. As these technologies evolve, they will play a crucial role in maintaining data quality.

Predictions and Expectations

Future Challenges

You may face several challenges in the future of data verification. The increasing volume and complexity of data can overwhelm verification processes. Ensuring data privacy while verifying data remains a significant concern. Balancing verification accuracy with privacy protection will require innovative solutions. Additionally, integrating new technologies into existing systems may pose technical challenges.

Opportunities for Improvement

Despite these challenges, opportunities for improvement abound. You can leverage advanced technologies to enhance verification processes. AI and machine learning offer potential solutions for handling complex data efficiently. By adopting these technologies, you can improve data accuracy and reliability. Furthermore, developing privacy-enhancing techniques will help balance verification needs with data protection. Embracing these opportunities will ensure that your data verification processes remain robust and effective.

Data verification stands as a cornerstone in maintaining the integrity and reliability of your data. It ensures that the information you rely on is accurate and trustworthy, preventing costly errors and missed opportunities. As technology evolves, so do the practices of data verification. You must stay informed about emerging trends like AI and machine learning to enhance your verification processes. Prioritizing data verification not only safeguards your data but also strengthens your decision-making capabilities. Embrace these practices to ensure your data remains a valuable asset in your strategic endeavors.

FAQ

What is data verification?

Data verification ensures that your data is accurate, complete, and consistent. It acts as a quality control mechanism, confirming that the data you use for decision-making is reliable.

Why is data verification important?

Data verification is vital because it prevents errors that could lead to misguided strategies and financial losses. It builds trust with stakeholders and fuels innovation by ensuring data integrity.

How does data verification differ from data validation?

Data verification checks the accuracy and consistency of existing data, while data validation ensures that new data meets specific criteria before entry. Both processes are essential for maintaining data quality.

What challenges might you face in data verification?

You may face challenges such as data volume and complexity, human error, and bias. These obstacles can hinder your ability to ensure data accuracy and consistency.

Which technologies can help with data verification?

Advanced tools like machine learning algorithms and data analytics can detect anomalies and inconsistencies in your datasets. These technologies automate the verification process, reducing human error and increasing efficiency.

How is data verification used in business?

In business, data verification ensures accurate financial reporting and reliable customer data management. It helps catch errors that might lead to financial misstatements and helps detect fraud.

What role does data verification play in research?

In research, data verification ensures the validity of your findings. Verified data enhances the credibility of your research and supports sound conclusions.

What are the benefits of automated verification tools?

Automated verification tools offer efficiency by quickly identifying inconsistencies in large datasets. They reduce the time spent on data verification and minimize human error.

How can you overcome challenges in data verification?

Implement effective strategies like establishing clear verification protocols and leveraging technology. Training your team on best practices can also reduce errors and bias.

What are the emerging trends in data verification?

Emerging trends include the integration of AI and machine learning to automate processes. These technologies enhance accuracy and efficiency, offering strategic advantages in a data-driven world.
