Data delivery is the process of transferring data from one place to another. You might not realize it, but it's a big deal in today's digital world. Imagine healthcare providers getting real-time insights from vast analytics. That's the power of efficient data delivery. It ensures you get the right information at the right time. Tools like FineDataLink, FineReport, and FineBI make this possible by streamlining data integration and visualization. These tools help businesses and individuals make informed decisions quickly and accurately.

Understanding Data Delivery

Definition and Components of Data Delivery

Data delivery involves moving data from one place to another. You might think of it as a digital courier service. It ensures that the right information reaches the right people at the right time. This process includes several key components:

Data Sources

Data sources are where your data journey begins. These can be databases, spreadsheets, or even real-time sensors. Each source provides the raw information needed for analysis. Imagine a hospital collecting patient data from various departments. Each department acts as a data source, feeding into a central system.

Data Destinations

Data destinations are where the data ends up. This could be a data warehouse, a business intelligence tool, or a simple report. The destination is crucial because it determines how you will use the data. For example, in healthcare, data might flow into a system that helps doctors make informed decisions about patient care.

Types of Data Delivery

Data delivery isn't one-size-fits-all. Different situations require different approaches. Let's explore two main types:

Batch Processing

Batch processing involves collecting data over a period and then processing it all at once. Think of it like doing laundry. You gather a week's worth of clothes and wash them in one go. This method works well when you don't need immediate results. Businesses often use batch processing for tasks like payroll or end-of-day reports.
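To make the laundry analogy concrete, here is a minimal Python sketch of batch processing. The `BatchProcessor` class, the record layout, and the batch size of 3 are illustrative assumptions, not part of any particular product.

```python
from typing import Callable


class BatchProcessor:
    """Collects records and processes them together once the batch is full."""

    def __init__(self, batch_size: int, handler: Callable[[list[dict]], None]):
        self.batch_size = batch_size
        self.handler = handler
        self._pending: list[dict] = []

    def add(self, record: dict) -> None:
        # Records only accumulate here; nothing is processed yet.
        self._pending.append(record)
        if len(self._pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Process everything collected so far in one go, then start over.
        if self._pending:
            self.handler(self._pending)
            self._pending = []


totals: list[float] = []
payroll = BatchProcessor(
    batch_size=3,
    handler=lambda batch: totals.append(sum(r["hours"] for r in batch)),
)
for hours in [8, 7.5, 8, 6]:
    payroll.add({"hours": hours})
payroll.flush()  # process the leftover partial batch, e.g. at end of day
print(totals)
```

The final `flush()` call matters: without it, the last partial batch would sit unprocessed until more records arrived.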

Real-time Processing

Real-time processing is like streaming a live concert. You get the data as it happens. This type is essential when you need up-to-the-minute information. In healthcare, real-time data delivery can mean the difference between life and death. Doctors rely on real-time data to monitor patients and make quick decisions.
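By contrast, a real-time handler acts on each value the moment it arrives. The sketch below assumes a hypothetical stream of heart-rate readings; the function name and the thresholds are made up for illustration and are not clinical guidance.

```python
from typing import Iterable, Iterator


def monitor_heart_rate(
    readings: Iterable[int], low: int = 50, high: int = 120
) -> Iterator[str]:
    """Yield an alert as soon as a reading falls outside the assumed range."""
    for bpm in readings:
        # Each reading is handled the moment it arrives, not stored for later.
        if bpm < low or bpm > high:
            yield f"ALERT: heart rate {bpm} bpm"


alerts = list(monitor_heart_rate([72, 75, 131, 80, 44]))
print(alerts)
```

Because the function is a generator, it works the same way on a finite list or on an endless feed from a device.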

Mechanisms of Data Delivery

Understanding how data moves from one place to another is crucial. Let's dive into the mechanisms that make data delivery possible.

Data Transfer Protocols

Data transfer protocols are like the highways for your data. They ensure that information travels smoothly and securely from one point to another.

FTP

FTP (File Transfer Protocol) is a classic method for transferring files. It's been around for decades, making it a familiar choice for many businesses. You can think of it as a digital postman, delivering files from one computer to another. FTP works for both single and bulk file transfers. Its long history means you will rarely face compatibility issues. Most systems can handle FTP, so exchanging information with partners is a breeze. Keep in mind that plain FTP sends data, including passwords, unencrypted, so sensitive files are better sent over a secured variant such as FTPS or SFTP.

HTTP/HTTPS

When you browse the web, you're using HTTP (Hypertext Transfer Protocol). It's the backbone of the web, allowing you to access websites and download content. But when security is a concern, you turn to HTTPS. The "S" stands for "Secure," meaning your data gets encrypted with TLS during transfer. This encryption keeps your information safe from prying eyes. HTTPS is essential for online transactions and any situation where data privacy matters.
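One simple way to enforce this in code is to refuse to send data to anything other than an HTTPS URL. The sketch below uses only Python's standard library; the function name and the `example.com` endpoint are placeholders, and the request is built but never actually sent.

```python
from urllib.parse import urlparse
from urllib.request import Request


def build_secure_upload(url: str, payload: bytes) -> Request:
    """Prepare a POST request, refusing any URL that is not HTTPS."""
    scheme = urlparse(url).scheme.lower()
    if scheme != "https":
        raise ValueError(f"refusing to send data over insecure scheme: {scheme!r}")
    # The request is only constructed here; urllib.request.urlopen(request)
    # would be the call that actually sends it.
    return Request(
        url,
        data=payload,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


request = build_secure_upload("https://example.com/api/records", b'{"id": 1}')
print(request.full_url, request.get_method())
```

Calling `build_secure_upload` with an `http://` URL raises `ValueError` before any data leaves your machine.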

Data Integration Tools

Once your data reaches its destination, you need tools to make sense of it. Data integration tools help you gather, transform, and load data efficiently.

ETL Tools

ETL (Extract, Transform, Load) Tools are the workhorses of data integration. They pull data from various sources, clean it up, and load it into a system where you can analyze it. Think of ETL as a chef preparing ingredients for a meal. FineDataLink excels in this area. It streamlines the data-gathering process, ensuring that your data is always fresh and ready for analysis. With FineDataLink, you can extract data from multiple sources, transform it to fit your needs, and load it into a data warehouse or analytics platform.
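The three ETL steps can be sketched with Python's standard library alone. This is a generic illustration, not how FineDataLink works internally: the CSV content, the table name, and the Fahrenheit-to-Celsius rule are all assumptions made for the example.

```python
import csv
import io
import sqlite3

RAW_CSV = """department,patient_id,temperature_f
cardiology,101,98.6
cardiology,102,
oncology,201,101.3
"""


def extract(text: str) -> list[dict]:
    # Extract: read rows from a CSV source (a string stands in for a file).
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    # Transform: drop rows with missing readings, convert Fahrenheit to Celsius.
    cleaned = []
    for row in rows:
        if not row["temperature_f"]:
            continue
        celsius = round((float(row["temperature_f"]) - 32) * 5 / 9, 1)
        cleaned.append((row["department"], int(row["patient_id"]), celsius))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    # Load: write the cleaned rows into the destination table.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings "
        "(department TEXT, patient_id INTEGER, temperature_c REAL)"
    )
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
row_count = conn.execute("SELECT COUNT(*) FROM readings").fetchone()[0]
print(row_count)
```

Keeping the three steps as separate functions makes it easy to swap the source or the destination without touching the cleaning logic.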

Data Pipelines

Data pipelines are like assembly lines for your data. They automate the flow of information from one stage to another. Imagine a factory where raw materials move through different stations until they become a finished product. Data pipelines work similarly, ensuring that your data moves smoothly from collection to analysis. They help you maintain real-time data synchronization, which is crucial for making informed decisions quickly.
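The assembly-line idea maps naturally onto chained stages, where each stage consumes the output of the one before it. In this minimal sketch the stage names, the order records, and the 10% rate are hypothetical.

```python
from typing import Callable, Iterable, Iterator

Stage = Callable[[Iterable[dict]], Iterator[dict]]


def drop_incomplete(records: Iterable[dict]) -> Iterator[dict]:
    # Station 1: discard records that are missing an amount.
    return (r for r in records if r.get("amount") is not None)


def add_tax(records: Iterable[dict]) -> Iterator[dict]:
    # Station 2: enrich each record (the 10% rate is a made-up example value).
    return ({**r, "total": round(r["amount"] * 1.10, 2)} for r in records)


def run_pipeline(source: Iterable[dict], stages: list[Stage]) -> list[dict]:
    # Feed the output of each stage into the next, like stations on a line.
    stream: Iterable[dict] = source
    for stage in stages:
        stream = stage(stream)
    return list(stream)


orders = [
    {"id": 1, "amount": 100.0},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 20.0},
]
result = run_pipeline(orders, [drop_incomplete, add_tax])
print(result)
```

Because every stage is lazy, records flow through one at a time instead of being copied in full between steps.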

Importance of Data Delivery

Data delivery plays a crucial role in ensuring that you have the right information at your fingertips. Let's explore why it's so important.

Ensuring Data Accuracy

Accurate data is the backbone of any successful decision-making process. You need to trust the information you're working with.

Error Detection

Errors can creep into data at any stage. Detecting these errors early prevents them from affecting your decisions. Imagine you're analyzing sales data. If there's a mistake in the numbers, your conclusions could be way off. Error detection tools help you catch these mistakes before they cause problems.
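One common way to detect errors introduced during transfer is to compare checksums before and after delivery. The sketch below uses SHA-256 from Python's standard library; the sales data and function names are invented for the example.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Return the SHA-256 digest of the payload as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_transfer(received: bytes, expected_checksum: str) -> bool:
    # If even one byte changed in transit, the digests will not match.
    return checksum(received) == expected_checksum


original = b"region,sales\nnorth,1200\nsouth,950\n"
sent_checksum = checksum(original)

intact = verify_transfer(original, sent_checksum)
corrupted = verify_transfer(original.replace(b"1200", b"1290"), sent_checksum)
print(intact, corrupted)
```

A checksum catches accidental corruption in transit; it does not tell you whether the numbers were correct at the source.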

Data Validation

Validation ensures that your data meets specific criteria. It's like a quality check for your information. For example, if you're collecting customer data, validation might ensure that email addresses are in the correct format. This step guarantees that the data you use is reliable and ready for analysis.
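A validation step like the email check described above might look as follows. The pattern is deliberately simple and the field names are assumptions; production-grade email validation has many more edge cases.

```python
import re

# A deliberately simple pattern: something@something.tld.
# This is a format sanity check, not a full email address validator.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_customer(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    if not record.get("name", "").strip():
        problems.append("name is required")
    if not EMAIL_PATTERN.match(record.get("email", "")):
        problems.append("email is not in a valid format")
    return problems


print(validate_customer({"name": "Ada", "email": "ada@example.com"}))
print(validate_customer({"name": "", "email": "not-an-email"}))
```

Returning a list of problems, rather than a single true or false, lets you report every issue with a record at once.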

Enhancing Decision-Making

Data delivery doesn't just stop at accuracy. It also empowers you to make better decisions.

Timely Data Access

Having access to data when you need it is vital. Imagine you're running a business and need to decide on inventory levels. If you have real-time data, you can adjust your orders based on current demand. Timely access to data means you can respond quickly to changes and stay ahead of the competition.

Data-Driven Insights

Data-driven insights turn delivered data into concrete direction. In marketing, for example, they can reveal which campaigns are most effective. By focusing on data, you can optimize your strategies and achieve better results.

Challenges in Data Delivery

Data delivery isn't always smooth sailing. You face several challenges that can impact the efficiency and security of your data. Let's dive into some common hurdles you might encounter.

Data Security Concerns

Data security is a top priority. You need to protect sensitive information from unauthorized access and cyber threats. Here are two key aspects to consider:

Encryption

Encryption acts as a shield for your data. It transforms your information into a code, making it unreadable to anyone without the right key. Imagine sending a secret message that only the intended recipient can decode. That's what encryption does for your data. In today's digital age, encryption is essential for safeguarding your information during transfer and storage.

Access Control

Access control ensures that only authorized individuals can access your data. Think of it as a security checkpoint. You decide who gets in and who stays out. By implementing strong access control measures, you can prevent unauthorized users from tampering with or stealing your data. This step is crucial for maintaining the integrity and confidentiality of your information.
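A role-based check is one simple form of access control. The roles and permissions below are hypothetical; the key design choice in this sketch is that anything not explicitly granted is denied.

```python
# Hypothetical roles and permissions, chosen only for illustration.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "delete"},
}


def is_allowed(role: str, action: str) -> bool:
    # Unknown roles get an empty permission set, so access is denied by default.
    return action in ROLE_PERMISSIONS.get(role, set())


def read_dataset(role: str, dataset: dict) -> dict:
    # The checkpoint: the data is only returned after the permission check.
    if not is_allowed(role, "read"):
        raise PermissionError(f"role {role!r} may not read this dataset")
    return dataset


print(is_allowed("analyst", "read"))
print(is_allowed("analyst", "delete"))
print(is_allowed("guest", "read"))
```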

Scalability Issues

As your business grows, so does your data. You need systems that can handle increasing volumes of information without breaking a sweat. Let's explore two common scalability challenges:

Handling Large Data Volumes

Handling large data volumes can be daunting. Imagine trying to pour a gallon of water into a pint-sized cup. Without the right infrastructure, your systems can become overwhelmed. You need solutions that can scale with your data needs. This might involve upgrading your hardware or optimizing your software to process data more efficiently.

Infrastructure Limitations

Infrastructure limitations can hinder your ability to deliver data effectively. Outdated systems or insufficient resources can slow down your processes. You need to assess your current infrastructure and identify areas for improvement. Consider investing in modern technologies like cloud computing to enhance your data delivery capabilities.

By addressing these challenges head-on, you can ensure that your data delivery processes remain efficient and secure.

Solutions to Data Delivery Challenges

When it comes to data delivery, you might face some hurdles. But don't worry, there are ways to tackle these challenges head-on. Let's dive into some effective solutions.

Implementing Robust Security Measures

Keeping your data safe is crucial. You need to ensure that your information stays protected from unauthorized access and cyber threats. Here are two key strategies to enhance your data security:

Firewalls

Think of firewalls as your data's first line of defense. They act like a security guard, monitoring incoming and outgoing traffic. By setting up a firewall, you can block unauthorized access and keep your data safe from potential threats. It's like having a bouncer at the door, only letting in the right people.

Secure Protocols

Using secure protocols is another way to protect your data. These protocols encrypt your information during transfer, ensuring that only authorized parties can access it. Imagine sending a secret message that only the intended recipient can read. That's what secure protocols do for your data. They keep your information safe from prying eyes.

Optimizing Infrastructure

To handle increasing data volumes, you need a robust infrastructure. Let's explore two ways to optimize your systems for efficient data delivery:

Cloud Solutions

Cloud solutions offer a flexible and scalable way to manage your data. By moving your data to the cloud, you can access it from anywhere, anytime. This approach allows you to scale your resources up or down based on your needs. It's like having a storage unit that expands as you acquire more stuff. With cloud solutions, you can handle large data volumes without breaking a sweat.

Load Balancing

Load balancing ensures that your systems run smoothly, even during peak times. It distributes incoming data across multiple servers, preventing any single server from becoming overwhelmed. Imagine a busy restaurant with multiple chefs working together to prepare meals. Load balancing works similarly, ensuring that your data delivery processes remain efficient and reliable.
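Round-robin is one of the simplest load-balancing strategies: requests are handed to servers in a fixed rotation. The sketch below is a toy version with made-up server names; real load balancers also track server health and current load.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation so requests are spread evenly."""

    def __init__(self, servers: list[str]):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self) -> str:
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
assignments = [balancer.next_server() for _ in range(7)]
print(Counter(assignments))
```

With seven requests and three servers, no server ends up with more than one request above any other.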

By implementing these solutions, you can overcome data delivery challenges and ensure that your information flows smoothly and securely.

Emerging Trends in Data Delivery

Data delivery is evolving rapidly, and staying updated with the latest trends can give you a competitive edge. Let's explore some of the exciting developments in this field.

AI and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are transforming how data is delivered and processed. These technologies offer powerful tools for analyzing and interpreting vast amounts of data.

Predictive Analytics

Predictive analytics uses AI and ML to forecast future trends based on historical data. Imagine having a crystal ball that helps you anticipate customer behavior or market shifts. By leveraging predictive analytics, you can make proactive decisions, optimize operations, and enhance customer experiences. This approach allows you to stay ahead of the curve and seize opportunities before your competitors do.

Automated Data Processing

Automated data processing streamlines the handling of data, reducing manual intervention and errors. For routine, well-defined tasks, AI and ML algorithms can often process data faster and more consistently than people can by hand. This automation frees up your time, allowing you to focus on strategic tasks rather than mundane data entry. With automated data processing, you can keep your data up-to-date and ready for analysis.

Edge Computing

Edge computing is another trend reshaping data delivery. It involves processing data closer to its source rather than relying on centralized servers. This approach offers several benefits that can enhance your data delivery processes.

Decentralized Data Processing

Decentralized data processing allows you to analyze data at the network's edge, reducing the need to send it to a central location. This method improves data privacy and security by keeping sensitive information local. You can make real-time decisions without waiting for data to travel back and forth. This capability is especially valuable in industries like healthcare, where timely insights can impact patient care.
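The sketch below illustrates the idea: a device reduces its raw readings to a small summary and makes the urgent decision locally, so only the summary needs to travel to a central system. The readings, the threshold, and the function name are hypothetical.

```python
from statistics import mean


def summarize_at_edge(readings: list[float], alert_above: float) -> dict:
    """Reduce raw sensor readings to a small summary on the device itself."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        # The local decision is made immediately, without a server round trip.
        "alert": max(readings) > alert_above,
    }


# Hypothetical temperature readings collected by one device over a minute.
raw_readings = [36.6, 36.7, 36.9, 38.4, 36.8]
summary = summarize_at_edge(raw_readings, alert_above=38.0)
print(summary)
```

Sending four summary fields instead of every raw reading is also what keeps the sensitive detail on the device.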

Reduced Latency

Reduced latency is a significant advantage of edge computing. By processing data closer to its source, you minimize delays and ensure faster response times. Imagine a self-driving car that needs to make split-second decisions. Edge computing enables it to process data instantly, enhancing safety and performance. In your business, reduced latency means you can respond quickly to changes and deliver a seamless experience to your customers.

By embracing these emerging trends, you can enhance your data delivery capabilities and stay ahead in a rapidly changing digital landscape.

Role of FineDataLink in Data Delivery

FineDataLink plays a pivotal role in enhancing data delivery processes. It offers robust solutions that ensure seamless data integration and synchronization, making it an invaluable tool for businesses aiming to optimize their data workflows.

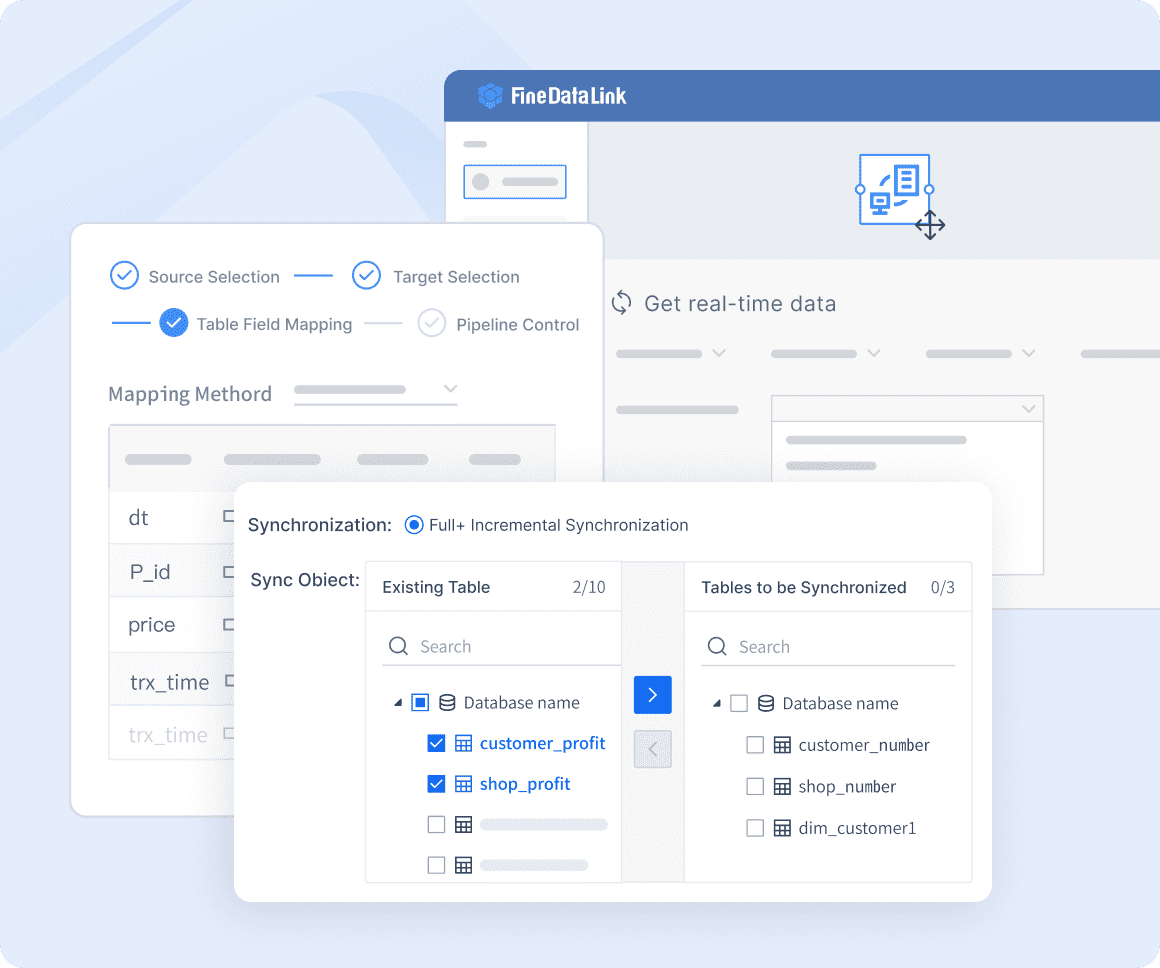

Real-time Data Synchronization

Real-time data synchronization is crucial for maintaining up-to-date information across various systems. FineDataLink excels in this area by providing tools that ensure data remains consistent and accessible.

Addressing Data Silos

Data silos can hinder effective decision-making by isolating information within different departments or systems. FineDataLink addresses this challenge by integrating data from multiple sources into a unified dataset. This integration eliminates silos, ensuring that all stakeholders have access to the same high-quality data. By breaking down these barriers, you can foster collaboration and coherence in your analysis processes.

Enhancing Data Connectivity

Enhancing data connectivity is essential for seamless information flow. FineDataLink facilitates this by connecting diverse data sources, allowing for real-time data synchronization. This connectivity ensures that your data is always current and ready for analysis, enabling you to make informed decisions quickly. With enhanced connectivity, you can streamline operations and improve overall efficiency.

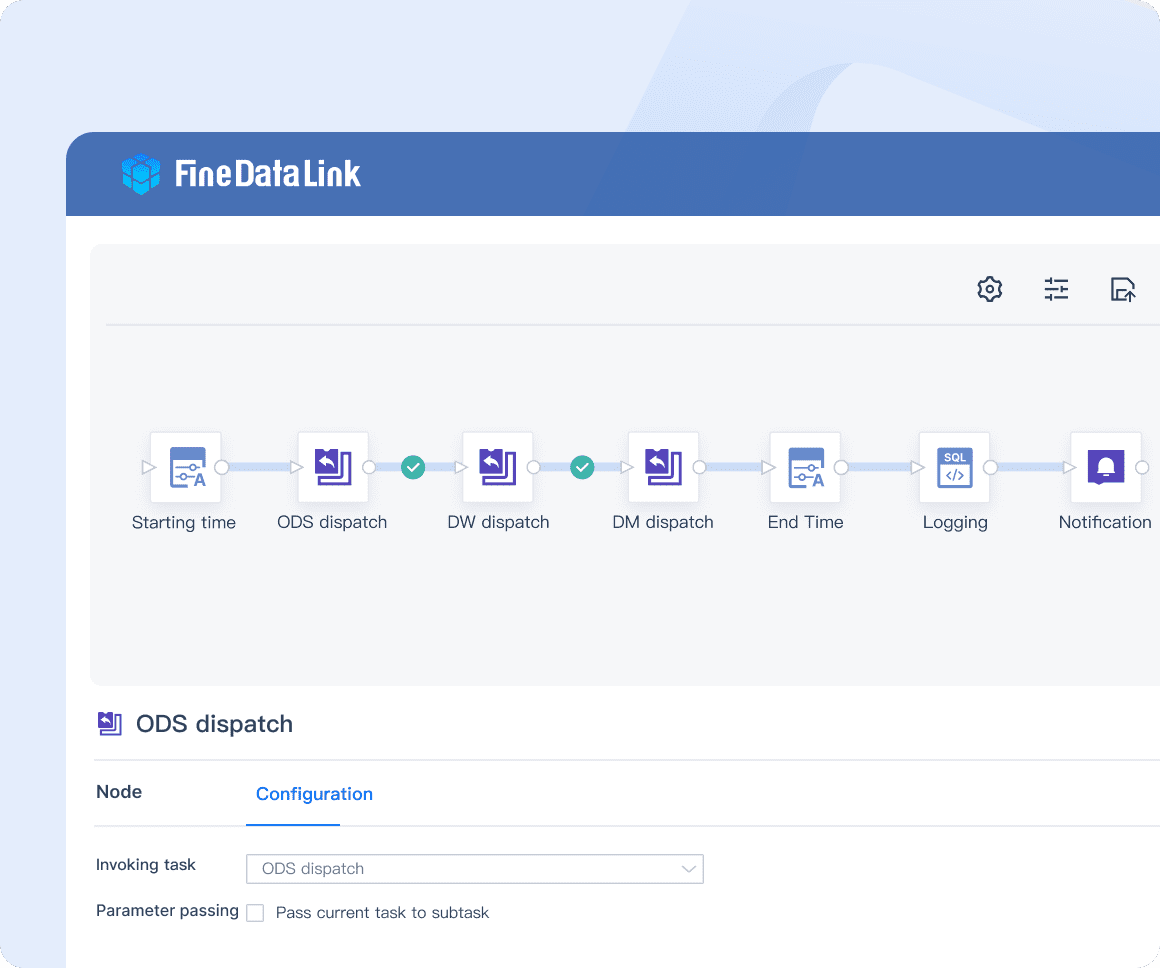

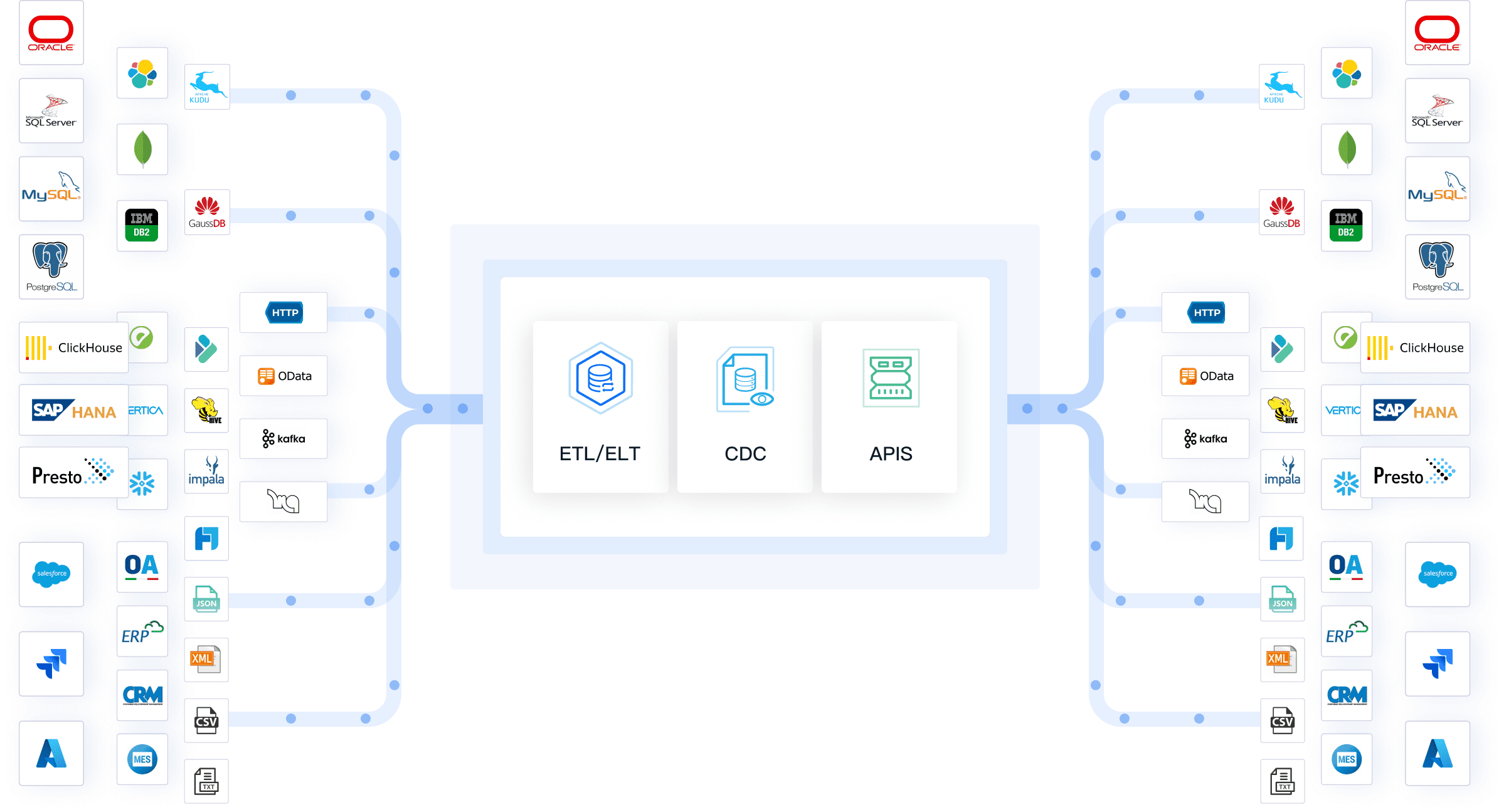

Advanced ETL & ELT Processes

FineDataLink offers advanced ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes that simplify data management. These processes are vital for transforming raw data into actionable insights.

Streamlining Data Workflows

Streamlining data workflows reduces manual intervention and minimizes errors. FineDataLink automates the data transformation process, ensuring that your data is standardized and ready for analysis. By applying consistent formatting rules across all data sources, you can achieve a higher level of data consistency. This automation not only saves time but also enhances the reliability of your analytics outcomes.

Supporting Diverse Data Sources

Supporting diverse data sources is crucial for comprehensive data analysis. FineDataLink connects to a wide range of data formats and systems, ensuring that you can aggregate all relevant information. Whether it's currency conversion, unit adjustments, or standardizing categorical data, FineDataLink ensures that all data is normalized before reaching your analytics platform. This capability enhances the integrity of your analysis, providing you with credible and actionable insights.
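To show what this kind of normalization involves in general terms, here is a small Python sketch covering currency conversion, unit adjustment, and category standardization. It is not FineDataLink code: the exchange rates, category aliases, and field names are invented for the example.

```python
# Illustrative values only: the exchange rates and category aliases are made up.
USD_PER_UNIT = {"USD": 1.0, "EUR": 1.10, "GBP": 1.25}
CATEGORY_ALIASES = {
    "elec": "electronics",
    "electronic": "electronics",
    "electronics": "electronics",
}
KG_PER_LB = 0.45359237  # definition of the international pound


def normalize(record: dict) -> dict:
    """Convert one source record into a common currency, unit, and category."""
    category = record["category"].strip().lower()
    if record["weight_unit"] == "lb":
        weight_kg = record["weight"] * KG_PER_LB
    else:
        weight_kg = record["weight"]
    return {
        "price_usd": round(record["price"] * USD_PER_UNIT[record["currency"]], 2),
        "weight_kg": round(weight_kg, 3),
        "category": CATEGORY_ALIASES.get(category, category),
    }


normalized = normalize(
    {"price": 200.0, "currency": "EUR", "weight": 10,
     "weight_unit": "lb", "category": " Elec "}
)
print(normalized)
```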

By leveraging the capabilities of FineDataLink, you can enhance your data delivery processes, ensuring that your information is accurate, consistent, and readily available for analysis.

Future of Data Delivery

The future of data delivery holds exciting possibilities. As technology advances, you can expect groundbreaking innovations and evolving business needs to shape how data is delivered and utilized.

Innovations on the Horizon

Quantum Computing

Quantum computing stands at the forefront of technological innovation. Unlike traditional computers, which use bits, quantum computers use qubits, which can exist in a superposition of states. For certain classes of problems, this could let them find answers far faster than today's computers. Quantum computing could eventually influence data delivery by speeding up some kinds of processing. You might first see its impact in fields like cryptography, where it is already driving work on more robust ways to secure data transmission.

Blockchain Technology

Blockchain technology offers a decentralized approach to data management. It provides a secure and transparent way to record transactions. Each block in the chain contains a record of multiple transactions along with a hash of the previous block, so once a block is added, it cannot be altered without breaking the links to every block after it. This tamper-evidence protects data integrity. For you, this means enhanced security and trust in data delivery processes. Blockchain can streamline operations in industries like finance and supply chain management by providing a reliable and tamper-evident record of transactions.
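The integrity property comes from linking blocks by hash. The toy sketch below shows only that linking; it leaves out everything else a real blockchain needs, such as consensus, digital signatures, and a peer-to-peer network. The transaction strings and function names are made up.

```python
import hashlib
import json


def block_hash(index: int, transactions: list[str], previous_hash: str) -> str:
    payload = json.dumps([index, transactions, previous_hash]).encode()
    return hashlib.sha256(payload).hexdigest()


def build_chain(batches: list[list[str]]) -> list[dict]:
    chain, previous_hash = [], "0" * 64
    for index, transactions in enumerate(batches):
        current = block_hash(index, transactions, previous_hash)
        chain.append({"index": index, "transactions": transactions,
                      "previous_hash": previous_hash, "hash": current})
        previous_hash = current
    return chain


def is_valid(chain: list[dict]) -> bool:
    previous_hash = "0" * 64
    for block in chain:
        # Recompute each hash; an edit to earlier data no longer matches it.
        expected = block_hash(block["index"], block["transactions"], previous_hash)
        if block["previous_hash"] != previous_hash or block["hash"] != expected:
            return False
        previous_hash = block["hash"]
    return True


chain = build_chain([["alice->bob:5"], ["bob->carol:2", "carol->dave:1"]])
print(is_valid(chain))
chain[0]["transactions"][0] = "alice->bob:500"  # tamper with history
print(is_valid(chain))
```

The first check passes and the second fails, because the stored hash of the first block no longer matches its edited contents.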

Evolving Business Needs

Customization

As businesses grow, the demand for customized data solutions increases. You need systems that cater to specific needs and preferences. Customization allows you to tailor data delivery processes to fit your unique requirements. Whether it's personalized dashboards or bespoke data integration tools, customization ensures that you get the most relevant insights. This flexibility empowers you to make informed decisions that align with your strategic goals.

Flexibility

Flexible systems adapt as your requirements change. For instance, cloud-based platforms offer scalable resources that can adjust to your needs.

By embracing these innovations and addressing evolving business needs, you can position yourself at the cutting edge of data delivery. The future promises exciting developments that will enhance how you access, process, and utilize data.

Data delivery plays a vital role in today's digital landscape. It ensures you receive accurate and timely information, empowering you to make informed decisions. Overcoming challenges like data security and scalability is crucial. Embracing future trends such as AI and edge computing can enhance your data processes. Prioritize efficient data delivery systems to stay competitive. By doing so, you unlock actionable insights and drive success in your endeavors.

FAQ

What is data delivery?

Data delivery involves transferring data from one place to another. It ensures that the right information reaches the right people at the right time. This process is crucial for making informed decisions in various fields, including healthcare and business.

Why is data delivery important?

Data delivery plays a vital role in today's digital world. It connects vast analytics to actionable insights, enabling individuals and organizations to make evidence-based decisions. In healthcare, timely data delivery can significantly impact patient care and operational efficiency.

What challenges might you face in data delivery?

You might face several challenges, such as ensuring data accuracy, maintaining security, and handling large data volumes. Overcoming these hurdles requires robust security measures, optimized infrastructure, and efficient data management practices.

How can you ensure data accuracy?

To ensure data accuracy, you should implement error detection and data validation processes. These steps help identify and correct mistakes before they affect your decisions, ensuring that the information you use is reliable.

What role does technology play in data delivery?

Technology plays a significant role in data delivery by providing tools and solutions for efficient data integration, synchronization, and analysis. Platforms like FineDataLink offer advanced ETL and ELT processes, real-time data synchronization, and support for diverse data sources.

How can you enhance your data delivery processes?

You can enhance your data delivery processes by implementing robust security measures, optimizing your infrastructure, and embracing emerging trends like AI, machine learning, and edge computing. These strategies will help you stay ahead in a rapidly changing digital landscape.
