AWS Data Pipeline helps you manage data processing and movement across AWS services. It automates the transfer and transformation of data so that workflows run consistently across systems. You can integrate data from various sources, which reduces silos and makes analytics more reliable. AWS Data Pipeline works with services like Amazon S3 and Amazon RDS. Because it is a managed service, you can focus on your tasks rather than on running the orchestration infrastructure yourself. FineDataLink offers a modern alternative for real-time data integration. For analytics, FineBI empowers users with insightful visualizations and data-driven decisions.

AWS Data Pipeline is a web service that enables data automation by orchestrating and automating the movement and transformation of data across various AWS services and on-premises data sources. It simplifies creating complex data workflows and ETL (Extract, Transform, Load) tasks without needing manual scripting or custom code. With AWS Data Pipeline, you can schedule, manage, and monitor data-driven workflows, making it easier to integrate and process data across various systems.

Imagine you have data scattered across different locations. AWS Data Pipeline acts like a conductor, ensuring your data moves smoothly from one place to another. It handles everything from data movement and transformation to backups and automating analytics tasks. This service ensures that tasks depend on the successful completion of preceding tasks, making your data workflows reliable and efficient.

AWS Data Pipeline offers several key features that make it a powerful tool for managing data workflows:

By using AWS Data Pipeline, you can focus on analyzing your data rather than worrying about the underlying infrastructure. This service provides a reliable and efficient way to manage your data workflows, ensuring that your data is always ready for decision-making.

When you dive into the AWS Data Pipeline, understanding its core components and architecture is crucial. These elements work together to ensure your data flows smoothly and efficiently.

The pipeline definition acts as the blueprint for your data workflows. It specifies how your business logic should interact with the AWS Data Pipeline. Think of it as a detailed plan that outlines every step your data will take. This definition includes various components like data nodes, activities, and preconditions. By clearly defining these elements, you ensure that your data processes run without a hitch.
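To make the blueprint idea concrete, here is a minimal sketch of a pipeline definition written as a Python dictionary in the JSON file layout that AWS Data Pipeline uses (a list of objects that point at each other with `ref`). Every id, bucket name, and date is a placeholder, and a real definition would typically also need IAM roles and a log location, which are omitted here. The `find_dangling_refs` helper is hypothetical and only illustrates a sanity check you might run before uploading.

```python
import json

# Hypothetical pipeline definition; ids, bucket names, and dates are placeholders.
PIPELINE_DEFINITION = {
    "objects": [
        {
            "id": "Default",
            "name": "Default",
            "scheduleType": "cron",
            "schedule": {"ref": "DailySchedule"},
        },
        {
            "id": "DailySchedule",
            "name": "DailySchedule",
            "type": "Schedule",
            "period": "1 day",
            "startDateTime": "2025-01-01T00:00:00",
        },
        {
            "id": "InputData",
            "name": "InputData",
            "type": "S3DataNode",
            "directoryPath": "s3://example-bucket/input",
        },
        {
            "id": "OutputData",
            "name": "OutputData",
            "type": "S3DataNode",
            "directoryPath": "s3://example-bucket/output",
        },
        {
            "id": "CopyWorker",
            "name": "CopyWorker",
            "type": "Ec2Resource",
            "terminateAfter": "1 Hour",
        },
        {
            "id": "CopyData",
            "name": "CopyData",
            "type": "CopyActivity",
            "input": {"ref": "InputData"},
            "output": {"ref": "OutputData"},
            "runsOn": {"ref": "CopyWorker"},
        },
    ]
}


def find_dangling_refs(definition):
    """Return (object id, field, target) for every reference to an undefined id."""
    known_ids = {obj["id"] for obj in definition["objects"]}
    dangling = []
    for obj in definition["objects"]:
        for field, value in obj.items():
            is_ref = isinstance(value, dict) and "ref" in value
            if is_ref and value["ref"] not in known_ids:
                dangling.append((obj["id"], field, value["ref"]))
    return dangling


if __name__ == "__main__":
    print(json.dumps(PIPELINE_DEFINITION, indent=2))
    print("dangling references:", find_dangling_refs(PIPELINE_DEFINITION))
```

The definition names a schedule, two data nodes, a compute resource, and one activity that ties them together, which mirrors the components described above.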

Data nodes serve as the starting and ending points for your data within the pipeline. They represent the locations where your data resides or where it needs to go. You can think of them as the addresses for your data. AWS Data Pipeline supports several types of data nodes, such as S3DataNode, SqlDataNode, RedshiftDataNode, and DynamoDBDataNode.

These nodes allow you to extract data from various sources and load it into destinations like data lakes or warehouses. This flexibility ensures that your data can be easily accessed and transformed as needed.

Activities are the actions that occur within the AWS Data Pipeline. They perform tasks like executing SQL queries, transforming data, or moving it from one source to another. You can schedule these activities to run at specific times or intervals, ensuring your data is always current. Activities also depend on preconditions, which must be met before they execute. For example, if you want to move data from Amazon S3, the precondition might be checking if the data is available there. Once the precondition is satisfied, the activity proceeds.
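The relationship between an activity and its preconditions can be modelled in a few lines. This is a conceptual sketch, not the service's API: the `Activity` class, `run_activity`, and the in-memory `fake_bucket` are all hypothetical stand-ins, and the sketch checks each precondition only once, whereas the real service may retry a precondition before giving up.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Activity:
    name: str
    action: Callable[[], str]
    preconditions: List[Callable[[], bool]] = field(default_factory=list)


def run_activity(activity):
    """Run the action only if every precondition returns True."""
    for check in activity.preconditions:
        if not check():
            return f"{activity.name}: skipped (precondition not met)"
    return f"{activity.name}: {activity.action()}"


# Stand-in for an object store; a real check would query Amazon S3.
fake_bucket = {"input/sales.csv"}


def key_exists(key):
    """Build a precondition that passes when the key is in the fake bucket."""
    return lambda: key in fake_bucket


copy_sales = Activity(
    name="CopySales",
    action=lambda: "copied",
    preconditions=[key_exists("input/sales.csv")],
)
copy_orders = Activity(
    name="CopyOrders",
    action=lambda: "copied",
    preconditions=[key_exists("input/orders.csv")],
)

if __name__ == "__main__":
    print(run_activity(copy_sales))
    print(run_activity(copy_orders))
```

Only `CopySales` runs its action here, because `input/orders.csv` is absent from the fake bucket.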

By understanding these core components, you can effectively design and manage your data workflows using AWS Data Pipeline. This service provides a robust framework for automating data movement and transformation, allowing you to focus on deriving insights from your data.

Before you dive into the activities of AWS Data Pipeline, you need to understand the concept of preconditions. These are the conditions that must be met before any activity can start. Think of them as checkpoints that ensure everything is in place before the pipeline moves forward.

By setting these preconditions, you create a robust framework for your data workflows. They act as safeguards, ensuring that each step in your AWS Data Pipeline is executed under the right conditions. This approach not only improves data reliability but also helps maintain the integrity of your data processes.

Understanding how AWS Data Pipeline operates can help you manage your data workflows more effectively. Let's break it down into two main aspects: workflow execution and scheduling with dependency management.

When you set up a workflow in AWS Data Pipeline, you essentially create a series of tasks that move and transform your data. Think of it as a conveyor belt in a factory. Each task on this belt performs a specific function, like extracting data from Amazon S3 or transforming it using Amazon EMR. You define these tasks in a pipeline definition, which acts as your blueprint.

Scheduling and managing dependencies are crucial for keeping your data workflows efficient and timely. AWS Data Pipeline provides tools to handle these aspects seamlessly.
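As a rough illustration of both ideas, the sketch below derives a run order from declared dependencies and lists the run times of a fixed-period schedule. The activity names are hypothetical, the dictionary plays the role of the `dependsOn` field on activities, and the standard-library `graphlib` module (Python 3.9+) does the ordering. This models the concept only and is not how the service is implemented.

```python
from datetime import datetime, timedelta
from graphlib import TopologicalSorter

# Hypothetical activities mapped to the activities they depend on.
DEPENDS_ON = {
    "ExtractFromS3": [],
    "TransformOnEmr": ["ExtractFromS3"],
    "LoadToRds": ["TransformOnEmr"],
    "NotifyTeam": ["LoadToRds"],
}


def execution_order(dependencies):
    """Return an order in which each activity comes after its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


def scheduled_runs(start, period, count):
    """Return the first `count` run times of a schedule with a fixed period."""
    return [start + i * period for i in range(count)]


if __name__ == "__main__":
    print(execution_order(DEPENDS_ON))
    for run_time in scheduled_runs(datetime(2025, 1, 1), timedelta(days=1), 3):
        print(run_time.isoformat())
```

If the dependencies contain a cycle, `TopologicalSorter` raises `graphlib.CycleError`, which is a useful early warning that a workflow can never complete.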

By understanding these components, you can harness the full potential of AWS Data Pipeline. It automates complex data workflows, allowing you to focus on analyzing your data and making informed decisions.

When you use AWS Data Pipeline, you unlock a host of benefits that enhance your data management capabilities. Let's explore some of the key advantages:

AWS Data Pipeline is designed to keep your data workflows reliable and consistent. Automating the movement and transformation of data reduces the risk of human error. The service is built to be fault tolerant: when an activity fails, it can retry the activity automatically and, if the failure persists, notify you so that you can intervene. This helps keep your analytics and business operations running when something unexpected happens.
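One common mechanism behind this kind of fault tolerance is a bounded retry. The sketch below is a generic illustration of that idea, not the service's own code: `run_with_retries` and `make_flaky_task` are hypothetical helpers, and the `maximum_retries` parameter is only loosely modelled on the retry limit you can set on an activity.

```python
def run_with_retries(task, maximum_retries):
    """Call task(); on failure, retry up to maximum_retries more times.

    Returns (result, attempts). Raises RuntimeError once retries are exhausted.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception as error:
            if attempts > maximum_retries:
                raise RuntimeError(f"failed after {attempts} attempts") from error


def make_flaky_task(failures_before_success):
    """Build a task that fails a fixed number of times, then succeeds."""
    state = {"remaining": failures_before_success}

    def task():
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise ConnectionError("transient failure")
        return "ok"

    return task


if __name__ == "__main__":
    result, attempts = run_with_retries(make_flaky_task(2), maximum_retries=2)
    print(result, "after", attempts, "attempts")
```

A production setup would normally add a delay between attempts and a notification when retries run out.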

With AWS Data Pipeline, you gain the flexibility to design complex data workflows tailored to your specific needs. You can integrate data from various sources, whether they're within AWS or on-premises. This flexibility allows you to create data-driven workflows that align with your business objectives. You can define custom logic for data transformations and schedule tasks to run at specific times. This adaptability ensures that your data processes are always aligned with your evolving business requirements.

Scalability is a significant advantage of using AWS Data Pipeline. As your data volume grows, you can scale your data processing tasks without redesigning your workflows. AWS Data Pipeline can run work on AWS compute resources such as Amazon EMR clusters to handle large datasets efficiently. You can process and move data at scale while keeping your workflows efficient and cost-effective. This scalability allows you to focus on analyzing data rather than worrying about infrastructure limitations.

By harnessing these benefits, you can streamline your data workflows and enhance your organization's data-driven initiatives. AWS Data Pipeline provides a robust framework for managing data processes, allowing you to focus on deriving insights and making informed decisions.

When you choose AWS Data Pipeline, you tap into a cost-effective solution for managing your data workflows. This service helps you save money in several ways:

By utilizing AWS Data Pipeline, you gain a powerful tool that not only enhances your data management capabilities but also keeps your budget in check. This service provides a reliable and economical way to automate and streamline your data workflows, allowing you to focus on deriving insights and making informed decisions.

AWS Data Pipeline offers a versatile platform for managing your data workflows. Let's explore some practical scenarios where you can leverage its capabilities.

Imagine you have raw data scattered across different sources. AWS Data Pipeline helps you transform this data into a usable format. You can automate the extraction, transformation, and loading (ETL) processes. This automation ensures that your data is ready for analysis without manual intervention. For instance, you might need to convert sales data from a CSV file into a structured format for your analytics database. AWS Data Pipeline handles this task efficiently, allowing you to focus on deriving insights rather than managing data transformations.
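The CSV example above might look like the following transformation step. This is a minimal sketch using only the Python standard library. The column names and sample rows are invented for illustration, and in a real pipeline a step like this would run inside an activity rather than as a standalone script.

```python
import csv
import io
from decimal import Decimal

# Invented sample data; the last row is deliberately malformed.
RAW_CSV = """order_id,region,amount
1001,emea,19.99
1002,APAC,5.00
1003,EMEA,not-a-number
"""


def transform_sales(csv_text):
    """Parse CSV text into typed rows; collect rows that fail conversion."""
    rows, rejected = [], []
    for record in csv.DictReader(io.StringIO(csv_text)):
        try:
            rows.append(
                {
                    "order_id": int(record["order_id"]),
                    "region": record["region"].strip().upper(),
                    "amount": Decimal(record["amount"]),
                }
            )
        except (ValueError, ArithmeticError):
            # int() raises ValueError; Decimal() raises an ArithmeticError subclass.
            rejected.append(record)
    return rows, rejected


if __name__ == "__main__":
    clean, bad = transform_sales(RAW_CSV)
    print("loaded:", clean)
    print("rejected:", bad)
```

Keeping rejected rows separate, rather than dropping them silently, makes it easier to audit what never reached the analytics database.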

Transferring data between various AWS services becomes seamless with AWS Data Pipeline. You can move data from a data lake to an analytics database or a data warehouse effortlessly. This capability is crucial when you need to integrate data from multiple sources for comprehensive analysis. For example, you might want to transfer customer data from Amazon S3 to Amazon RDS for detailed reporting. AWS Data Pipeline automates this process, ensuring that your data flows smoothly between services, enhancing the reliability and scalability of your data workflows.

Data backup and archiving are essential for maintaining data integrity and compliance. AWS Data Pipeline simplifies these tasks by automating the movement of data to secure storage locations. You can schedule regular backups of your critical data to Amazon S3, ensuring that you have a reliable copy in case of data loss. Additionally, you can archive historical data to reduce storage costs while keeping it accessible for future reference. This automation minimizes the risk of human error and ensures that your data remains safe and organized.

By utilizing AWS Data Pipeline, you can streamline your data management processes, making them more efficient and reliable. Whether you're transforming data, transferring it between services, or ensuring its safety through backups, AWS Data Pipeline provides a robust framework to support your data-driven initiatives.

Setting up AWS Data Pipeline might seem daunting, but with a clear guide, you can get it running smoothly. Let's walk through the steps and best practices to ensure your data workflows are efficient and reliable.

By following these steps and best practices, you can set up AWS Data Pipeline effectively. This service streamlines your data workflows, allowing you to focus on analyzing data and making informed decisions.
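For readers who prefer code to the console, the setup can be sketched with the AWS SDK for Python, whose `datapipeline` client exposes `create_pipeline`, `put_pipeline_definition`, and `activate_pipeline`. The sketch below is an assumption-laden outline rather than a definitive procedure: the definition, pipeline name, and unique id are placeholders, `to_pipeline_objects` and `deploy_pipeline` are hypothetical helpers, and a small fake client stands in for the real one so that the flow can be dry-run without AWS credentials.

```python
# Placeholder definition; ids and bucket names are invented for illustration.
EXAMPLE_DEFINITION = {
    "objects": [
        {"id": "InputData", "type": "S3DataNode",
         "directoryPath": "s3://example-bucket/input"},
        {"id": "OutputData", "type": "S3DataNode",
         "directoryPath": "s3://example-bucket/output"},
        {"id": "CopyData", "type": "CopyActivity",
         "input": {"ref": "InputData"}, "output": {"ref": "OutputData"}},
    ]
}


def to_pipeline_objects(definition):
    """Convert file-style objects into the id/name/fields shape the API expects."""
    pipeline_objects = []
    for obj in definition["objects"]:
        fields = []
        for key, value in obj.items():
            if key in ("id", "name"):
                continue
            if isinstance(value, dict) and "ref" in value:
                fields.append({"key": key, "refValue": value["ref"]})
            else:
                fields.append({"key": key, "stringValue": str(value)})
        pipeline_objects.append(
            {"id": obj["id"], "name": obj.get("name", obj["id"]), "fields": fields}
        )
    return pipeline_objects


def deploy_pipeline(client, name, unique_id, definition):
    """Create a pipeline, upload its definition, and activate it."""
    pipeline_id = client.create_pipeline(name=name, uniqueId=unique_id)["pipelineId"]
    result = client.put_pipeline_definition(
        pipelineId=pipeline_id, pipelineObjects=to_pipeline_objects(definition)
    )
    if result.get("errored"):
        raise ValueError(f"definition rejected: {result.get('validationErrors')}")
    client.activate_pipeline(pipelineId=pipeline_id)
    return pipeline_id


class FakeDataPipelineClient:
    """Stand-in that records calls so the sketch can be dry-run without AWS."""

    def __init__(self):
        self.calls = []

    def create_pipeline(self, name, uniqueId):
        self.calls.append("create_pipeline")
        return {"pipelineId": "df-EXAMPLE"}

    def put_pipeline_definition(self, pipelineId, pipelineObjects):
        self.calls.append("put_pipeline_definition")
        return {"errored": False, "validationErrors": [], "validationWarnings": []}

    def activate_pipeline(self, pipelineId):
        self.calls.append("activate_pipeline")
        return {}


if __name__ == "__main__":
    fake = FakeDataPipelineClient()
    print(deploy_pipeline(fake, "daily-copy", "daily-copy-001", EXAMPLE_DEFINITION))
    print(fake.calls)
```

With real credentials you would pass `boto3.client("datapipeline")` instead of the fake client. Checking the `errored` flag before activation is a good habit, because a definition that fails validation cannot be activated.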

When you're exploring data integration and workflow automation, AWS Data Pipeline stands out. However, it's not the only tool available. Let's dive into how it compares with other popular options like AWS Glue, Apache Airflow, and FineDataLink by FanRuan.

AWS Glue is another powerful tool in the AWS ecosystem. It focuses on ETL (Extract, Transform, Load) processes. You might find AWS Glue appealing if you need a serverless ETL service that automatically discovers and catalogs your data. It simplifies data preparation for analytics by providing a fully managed environment. Unlike AWS Data Pipeline, which excels in orchestrating complex workflows, AWS Glue shines in transforming and preparing data for analysis. If your primary goal is to clean and prepare data, AWS Glue could be your go-to choice.

Apache Airflow offers a different approach. It's an open-source platform for orchestrating complex workflows. You define workflows as code, which gives you flexibility and control. Airflow's strength lies in its ability to handle intricate dependencies and scheduling. If you prefer a customizable solution with a strong community backing, Apache Airflow might suit your needs. However, keep in mind that it requires more setup and maintenance compared to AWS Data Pipeline, which provides a more streamlined experience within the AWS environment.

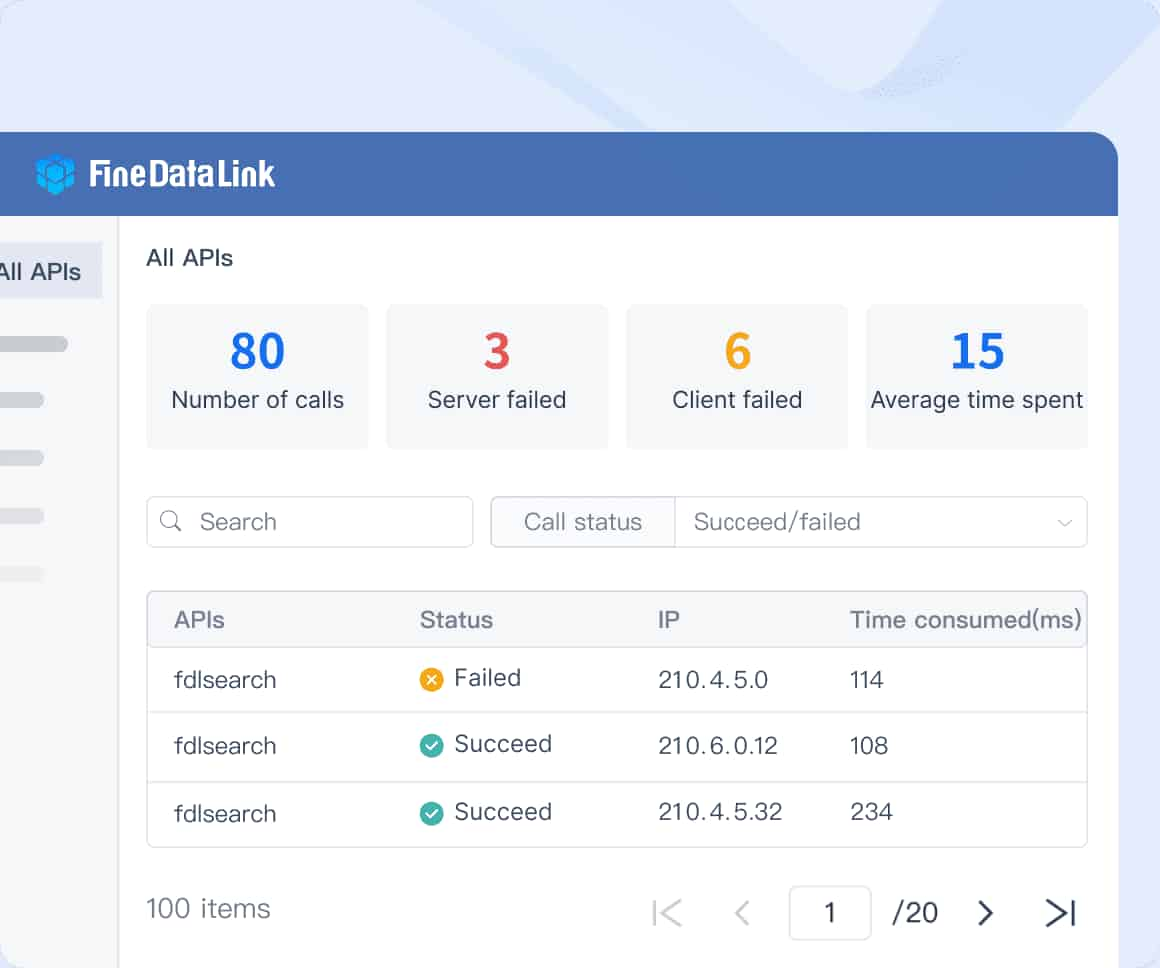

FineDataLink by FanRuan presents a modern alternative for data integration. It emphasizes real-time data synchronization and advanced ETL/ELT capabilities. FineDataLink offers a user-friendly interface with drag-and-drop functionality, making it accessible even if you're not a coding expert. It supports over 100 common data sources, allowing seamless integration across various platforms. If you're dealing with diverse data formats and need a low-code solution, FineDataLink could be a game-changer. Its focus on real-time data integration sets it apart from AWS Data Pipeline, which excels in automating data movement within the AWS ecosystem.

"FineDataLink offers a modern and scalable data integration solution that addresses challenges such as data silos, complex data formats, and manual processes."

When you explore data integration tools, understanding the differences and similarities between AWS Data Pipeline and other options like AWS Glue, Apache Airflow, and FineDataLink by FanRuan can help you make informed decisions.

1. Purpose and Functionality

AWS Data Pipeline focuses on automating data movement and transformation across AWS services and on-premises sources. It simplifies creating complex workflows without manual scripting. This tool excels in orchestrating data processes, ensuring consistent data processing and transportation.

AWS Glue, on the other hand, specializes in ETL tasks. It automatically discovers and catalogs data, making it ideal for preparing data for analytics. If your primary need is data transformation, AWS Glue might be more suitable.

Apache Airflow offers a different approach. It's an open-source platform that lets you define workflows as code. This flexibility allows you to handle intricate dependencies and scheduling. However, it requires more setup and maintenance compared to AWS Data Pipeline.

FineDataLink by FanRuan emphasizes real-time data synchronization and advanced ETL/ELT capabilities. Its user-friendly interface with drag-and-drop functionality makes it accessible even if you're not a coding expert. FineDataLink supports over 100 common data sources, allowing seamless integration across various platforms.

2. Integration and Ecosystem

AWS Data Pipeline integrates seamlessly within the AWS ecosystem. It works well with services like Amazon S3, Amazon RDS, and Amazon EMR, making data handling efficient. This integration ensures that your data workflows remain streamlined and cost-effective.

AWS Glue also fits well within the AWS environment, focusing on data preparation for analytics. It provides a fully managed ETL service, reducing the need for manual intervention.

Apache Airflow, being open-source, offers flexibility in integration. You can customize it to fit your specific needs, but it may require additional effort to integrate with AWS services.

FineDataLink stands out with its extensive support for diverse data sources. It offers a modern and scalable solution for data integration, addressing challenges like data silos and complex formats.

3. User Experience and Accessibility

AWS Data Pipeline provides a straightforward experience within the AWS console. You can automate data workflows without worrying about infrastructure, allowing you to focus on analyzing data.

AWS Glue offers a serverless ETL environment, simplifying data preparation. Its automatic data discovery and cataloging features enhance user experience.

Apache Airflow requires more technical expertise. You define workflows as code, which offers flexibility but may not be as accessible for non-technical users.

FineDataLink shines with its low-code platform. The drag-and-drop interface makes it easy to use, even if you're not familiar with coding. This accessibility makes it a valuable tool for businesses dealing with diverse data formats.

By understanding these differences and similarities, you can choose the right tool for your data integration needs. Whether you prioritize automation, flexibility, or ease of use, each option offers unique advantages to enhance your data workflows.

AWS Data Pipeline offers a robust solution for automating data workflows. Its flexibility and scalability make it an excellent choice for managing complex data processes. You can easily integrate data from various sources, ensuring seamless data movement and transformation. This tool empowers you to focus on data analysis rather than infrastructure management.

For those seeking alternative solutions, consider exploring FanRuan's FineDataLink and FineBI. FineDataLink provides a modern approach to real-time data integration, supporting over 100 common data sources. Its user-friendly interface simplifies complex tasks, making it accessible even if you're not a coding expert. FineBI, on the other hand, enhances your analytics capabilities with insightful visualizations and data-driven decisions. Both tools offer unique advantages, catering to diverse data integration and analytics needs.


The Author

Howard

https://www.linkedin.com/in/lewis-chou-a54585181/
