Data pipeline management involves designing, building, and maintaining systems that move data efficiently and reliably. This process ensures that businesses can access accurate and timely data for critical decision-making. Without it, organizations face challenges like inconsistent data quality, integration complexities, and scalability issues.

The growing importance of data management is evident. The global data pipeline tools market, valued at $6.8 billion in 2021, is projected to reach $35.6 billion by 2031, with a CAGR of 18.2%. This growth highlights how businesses increasingly rely on robust data systems to optimize operations and stay competitive.

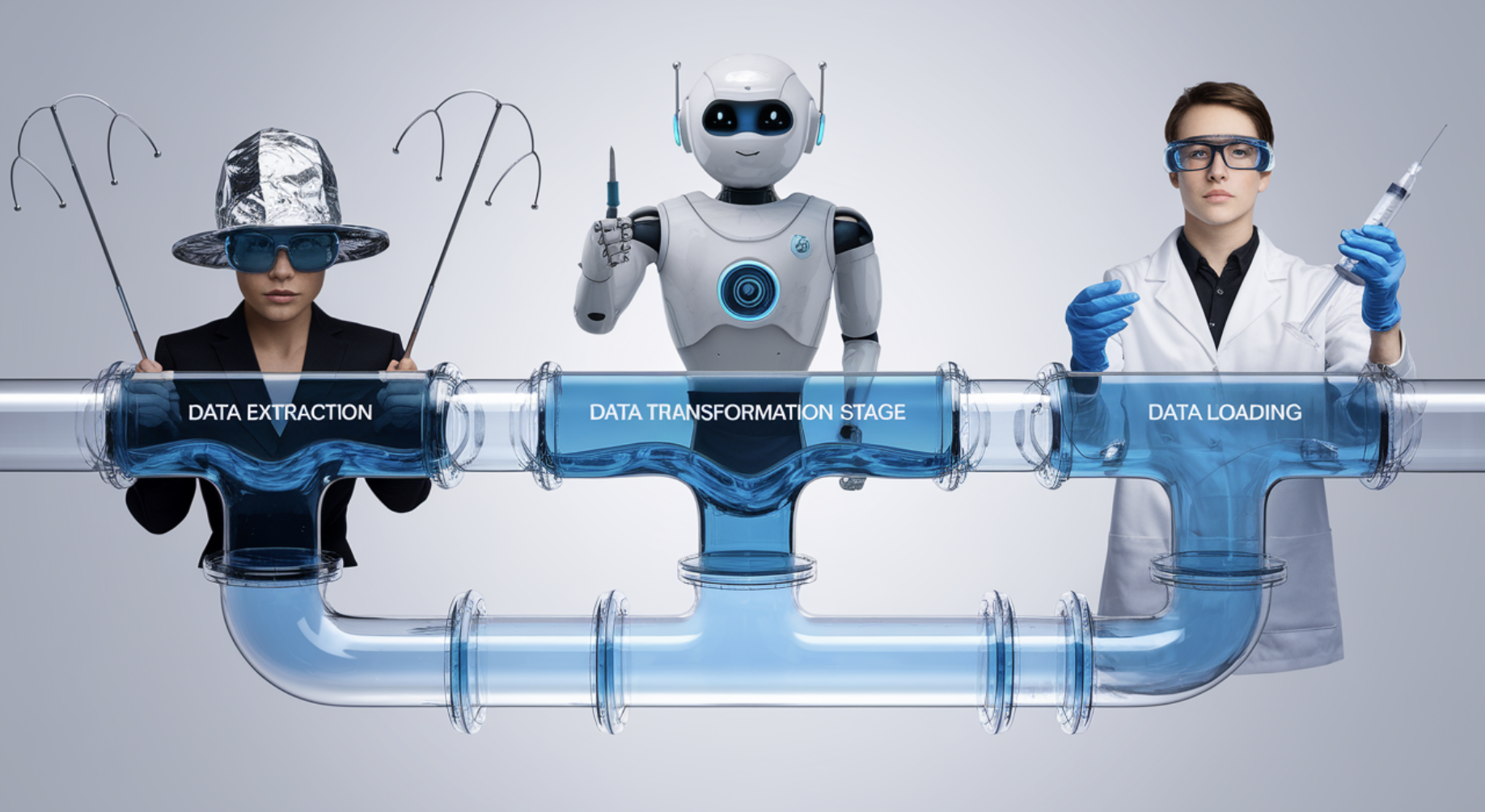

A data pipeline is a system that moves data from one location to another while transforming and processing it along the way. It ensures that raw data becomes usable and accessible for analysis or decision-making. Think of it as a series of interconnected steps that collect, clean, and deliver data to its final destination. These pipelines are essential for handling the vast amounts of data generated daily, enabling you to extract meaningful insights efficiently.

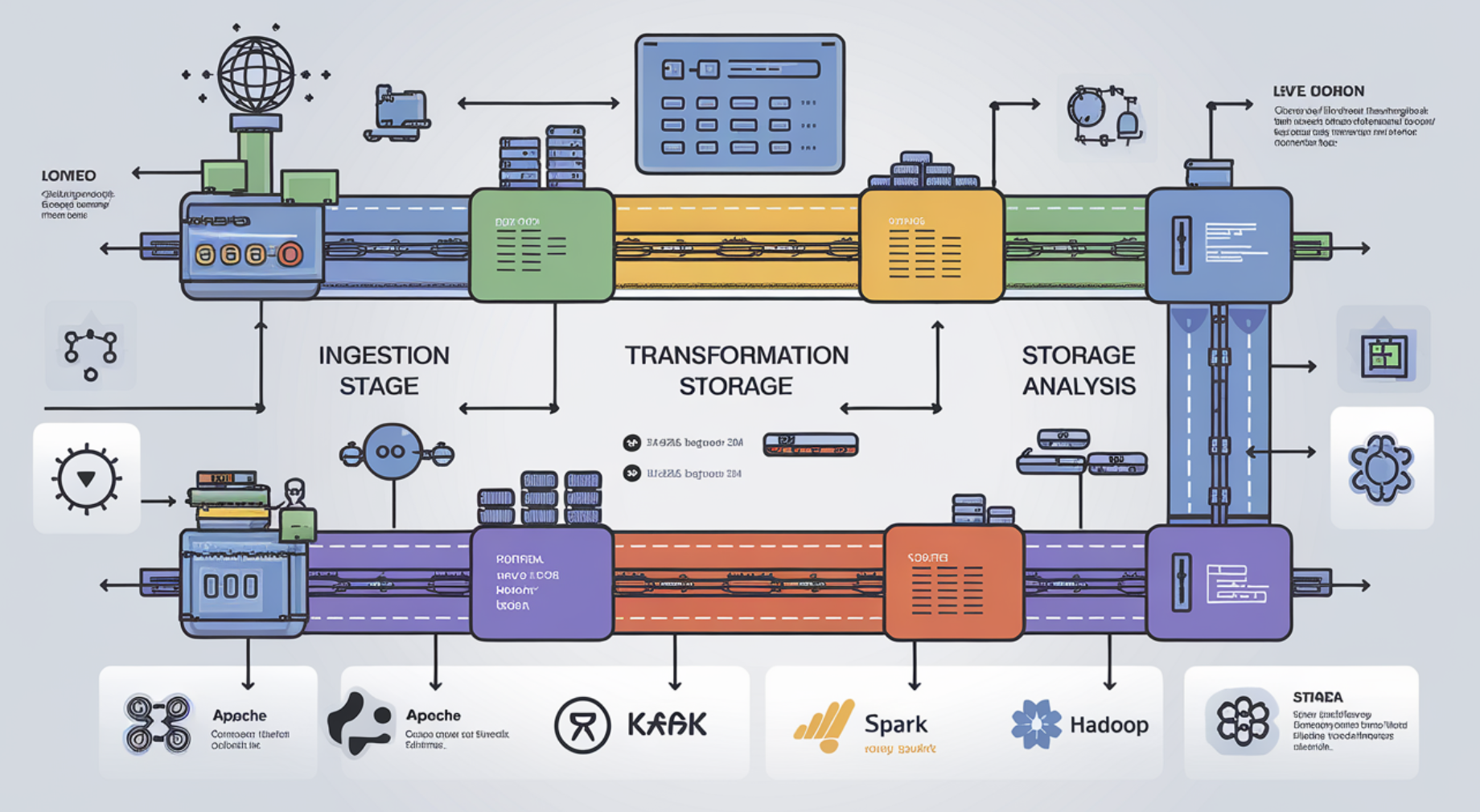

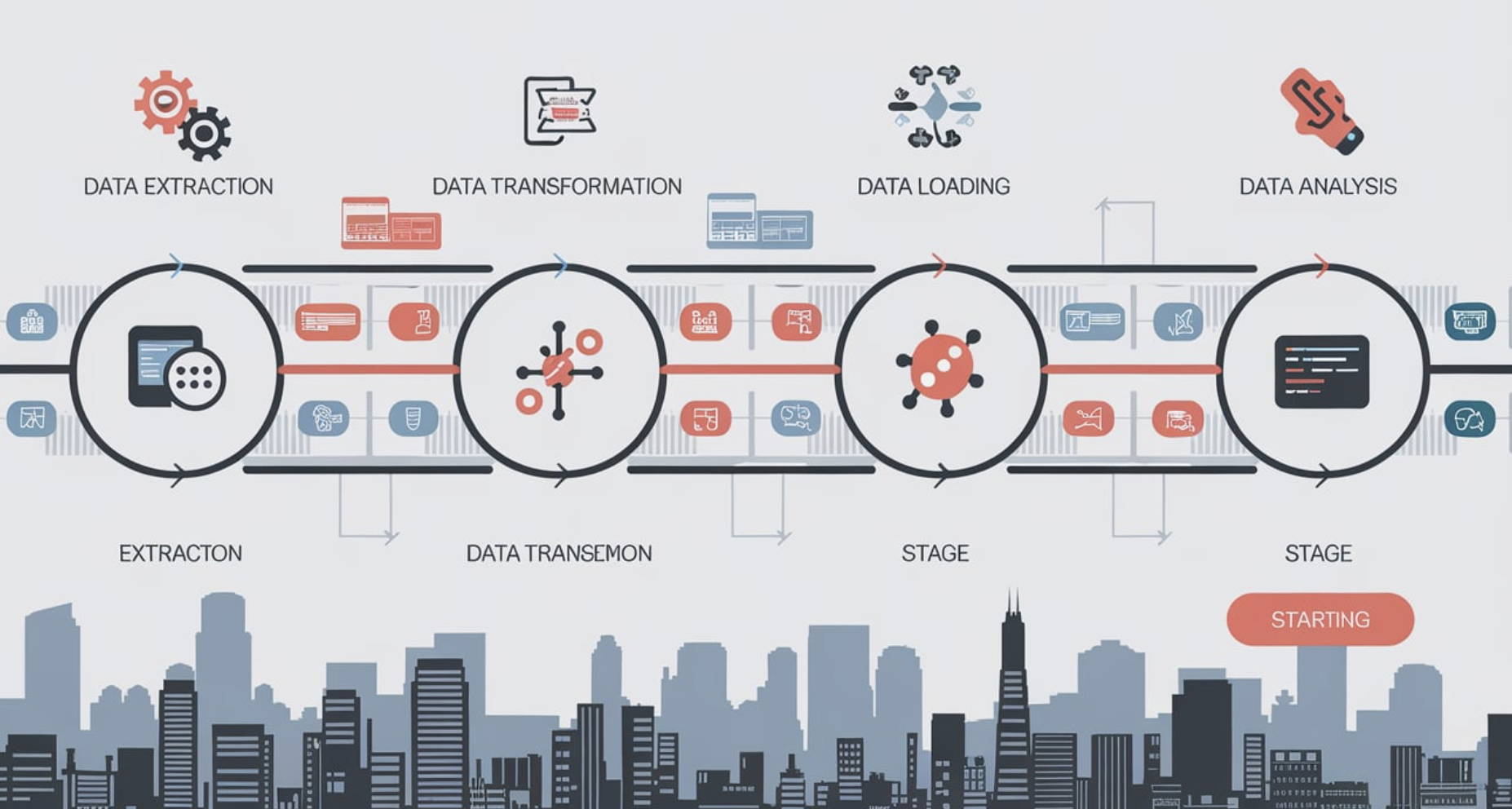

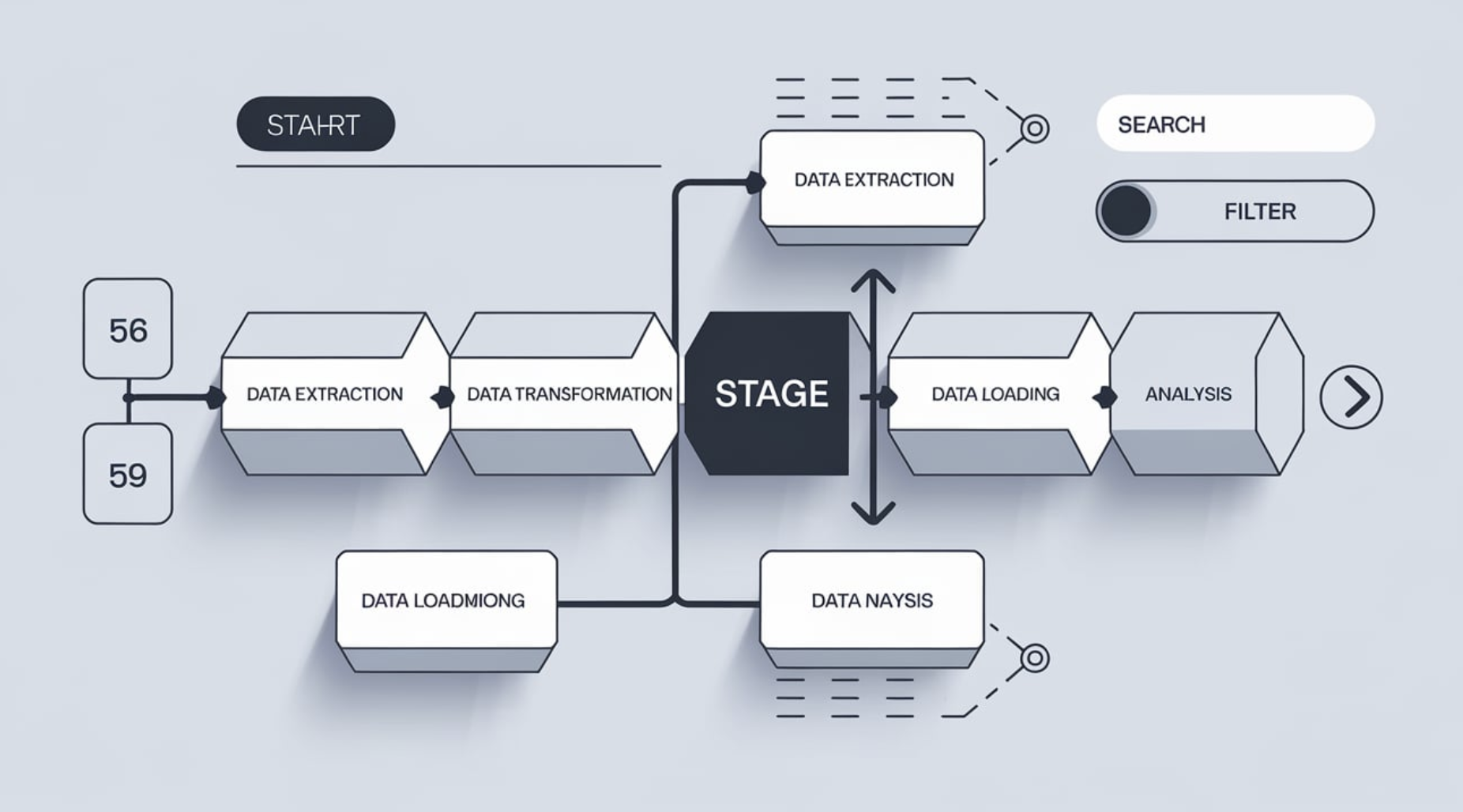

Every data pipeline consists of several critical stages that work together to ensure smooth data flow. Understanding these stages helps you appreciate how data pipelines function.

Data sources are the starting point of any pipeline. These can include databases, APIs, IoT devices, or even social media platforms. For example, a retail company might gather sales data from its point-of-sale systems or customer feedback from online reviews. The goal is to collect data from diverse origins to create a comprehensive dataset.

Once the data is collected, it undergoes processing to make it usable. This stage involves cleaning, transforming, and organizing the data. Tasks like removing duplicates, correcting errors, and standardizing formats occur here. For instance, if you’re analyzing customer data, this step ensures that names, addresses, and purchase histories are consistent and accurate.

The final stage involves delivering the processed data to its destination. This could be a data warehouse, a business intelligence tool, or a machine learning model. For example, a healthcare organization might store patient data in a secure database for future analysis. The destination depends on how you plan to use the data.

Data pipelines come in different forms, each suited to specific needs. The two primary types are batch processing pipelines and real-time processing pipelines.

Batch processing pipelines handle large volumes of data at scheduled intervals. They are ideal for tasks that don’t require immediate results. Industries like healthcare and retail often use batch processing to analyze historical data. For instance, a retail chain might process sales data overnight to optimize inventory levels the next day.

Real-time processing pipelines work continuously, processing data as it arrives. These pipelines are crucial for scenarios where immediate action is necessary. For example, financial institutions use real-time pipelines to detect fraudulent transactions instantly. This type of pipeline ensures that you can respond to changes as they happen.

| Criteria | Real-time data processing | Batch data processing |

|---|---|---|

| Data volume | Suitable for large data streams requiring immediate processing. | Suitable for low-volume data sets processed regularly. |

| Data speed | Handles high-speed data with rapid changes. | Processes low-velocity data with slow changes. |

| Data diversity | Ideal for diverse, unstructured data. | Best for homogeneous, structured data. |

| Data value | Supports high-value data impacting operations. | Suitable for low-value data indirectly affecting operations. |

Both types of pipelines play a vital role in modern data pipeline management, helping organizations meet their unique data needs.

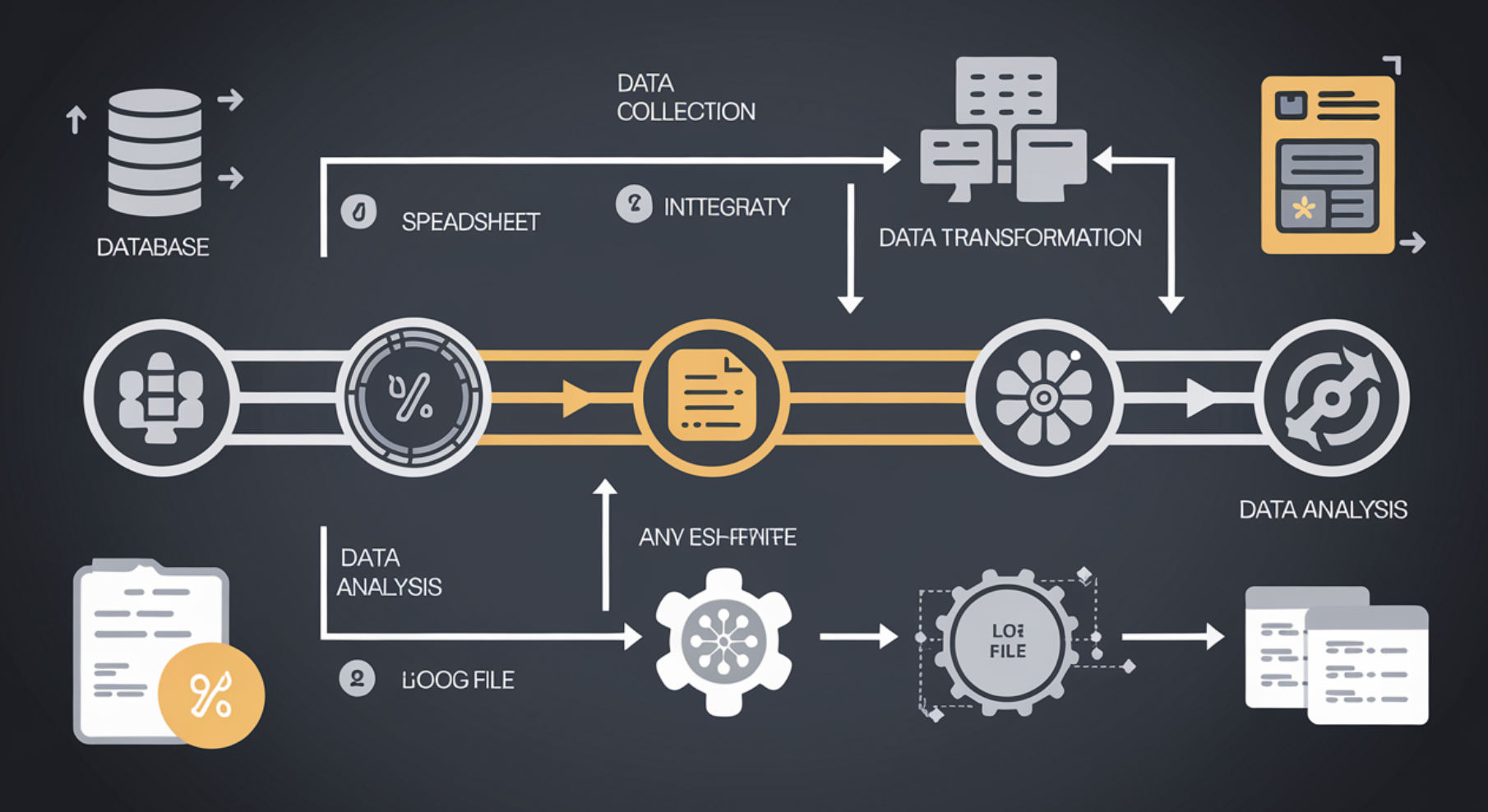

Data ingestion is the first step in any data pipeline management process. It involves capturing and importing data from various sources into the pipeline. You might collect data from internal systems like databases or external sources such as APIs, IoT devices, or social media platforms. This step ensures that raw data enters the pipeline for further processing.

To handle data ingestion effectively, you need to consider several factors:

For example, a retail business might use data ingestion to gather sales data from point-of-sale systems and customer feedback from online reviews. By ensuring seamless data ingestion, you can create a strong foundation for the rest of the pipeline.

Once data enters the pipeline, it undergoes transformation to make it usable. This stage involves cleaning, enriching, and structuring the data to meet your specific needs. Data transformation ensures that the information is accurate, consistent, and ready for analysis.

The process typically follows these steps:

For instance, a healthcare organization might transform patient data by standardizing medical codes and removing errors. This step ensures that the data is reliable and ready for use in decision-making.

Data storage is the final component of data pipeline management. It involves storing processed data in a secure and scalable environment. The choice of storage depends on your business objectives and how you plan to use the data.

To ensure effective data storage, follow these best practices:

For example, a financial institution might store transaction data in a cloud-based data warehouse. This approach allows for real-time access and analysis while maintaining high security standards.

By focusing on these core components—data ingestion, transformation, and storage—you can build a robust data pipeline management system. This ensures that your data flows seamlessly from source to destination, enabling better decision-making and operational efficiency.

Monitoring and maintaining your data pipeline management system is essential for ensuring its efficiency and reliability. Without proper oversight, data pipelines can encounter issues like delays, errors, or even complete failures. These problems can disrupt your operations and compromise the quality of your data. By implementing robust monitoring and maintenance practices, you can keep your pipelines running smoothly and ensure consistent data flow.

To monitor your data pipelines effectively, you need tools that provide visibility into data movement. These tools help you track how data flows through the pipeline and identify potential bottlenecks. For example, anomaly detection tools can alert you to performance issues and provide metrics to guide improvements. Data ingestion metrics also play a crucial role by tracking the performance of incoming data and helping you spot problems early.

Maintaining data quality is another critical aspect of data pipeline management. You can use various techniques to ensure your data remains accurate and reliable. Some of the most effective methods include:

These checks help you maintain high data quality, which is vital for making informed decisions.

Regular maintenance of your data pipelines involves proactive measures to prevent issues before they arise. You should schedule routine inspections to identify outdated components or inefficiencies. Updating your pipeline to accommodate new data sources or formats is equally important. By staying ahead of potential problems, you can minimize downtime and maintain seamless operations.

Incorporating these monitoring and maintenance practices into your data pipeline management strategy ensures that your system remains robust and scalable. Reliable pipelines not only improve operational efficiency but also enhance your ability to make data-driven decisions. With the right tools and techniques, you can build a resilient data infrastructure that supports your business goals.

Efficiency in data pipeline management ensures that your data flows seamlessly from source to destination without unnecessary delays or errors. By automating processes like data ingestion, transformation, and storage, you can eliminate manual errors and save valuable time. This automation allows you to focus on analyzing data rather than managing it.

Several factors contribute to the efficiency of a data pipeline. These include:

| Component | Description |

|---|---|

| Data Ingestion | Acquiring data from sources using techniques like batch processing or real-time streaming. |

| Data Processing | Cleaning, enriching, and transforming data into a suitable format for analysis or storage. |

| Transformation | Converting data into a usable format for downstream applications, including handling missing values. |

| Data Storage | Storing processed data in systems like data warehouses or lakes based on organizational needs. |

| Orchestration | Managing the flow of data through various stages to ensure correct order and timing. |

| Monitoring and Logging | Continuous monitoring to identify issues and logging for debugging and optimization. |

| Error Handling | Mechanisms for gracefully handling errors, including retries and notifications. |

| Security and Compliance | Adhering to security standards and regulations, including data encryption and access controls. |

By focusing on these components, you can build a pipeline that is not only efficient but also scalable and reliable. For example, orchestration tools ensure that data flows in the correct sequence, while monitoring tools help you identify and resolve issues before they escalate.

Reliability in data pipelines ensures that your data remains accurate and accessible at all times. Without reliability, you risk flawed analytics and poor decision-making. To maintain reliability, you must address common challenges like data quality concerns and integration hurdles.

Data quality plays a critical role in reliability. Inaccurate or incomplete data can undermine your entire pipeline. Implementing robust validation and cleansing processes ensures that your data meets the required standards. For example, completeness checks verify that no critical data is missing, while uniqueness tests ensure that each record is free from duplicates.

Integration complexity is another challenge. Merging data from diverse sources often involves overcoming differing formats and standards. Using flexible integration tools can simplify this process. These tools allow you to connect various data sources seamlessly, ensuring a consistent flow of information.

Regular monitoring and maintenance also enhance reliability. By tracking data flow and addressing issues proactively, you can prevent disruptions and maintain a steady pipeline. For instance, anomaly detection tools can alert you to irregularities, enabling you to take corrective action immediately.

As your business grows, your data pipeline must scale to handle increasing volumes and complexity. FineDataLink, a product by FanRuan, offers a robust solution for scaling your data pipeline management. Its cloud-native architecture allows you to adjust resources on demand, ensuring consistent performance regardless of data volume.

FineDataLink supports real-time data synchronization, making it ideal for businesses that require immediate insights. Its low-code platform simplifies complex data integration tasks, enabling you to scale your pipeline without extensive technical expertise. For example, you can use its drag-and-drop functionality to build new pipelines quickly, saving time and effort.

The platform also enhances operational efficiency by providing advanced ETL/ELT capabilities. These features allow you to preprocess and transform data effectively, ensuring that your pipeline remains efficient as it scales. Additionally, FineDataLink supports over 100 data sources, giving you the flexibility to integrate diverse systems seamlessly.

By leveraging FineDataLink, you can future-proof your data pipeline management. Its scalability ensures that your pipeline grows with your business, enabling you to maintain efficiency and reliability even as your data needs evolve.

Reliable data flow forms the backbone of any successful data pipeline. When your data moves seamlessly through the pipeline, it ensures that critical information reaches its destination without delays or errors. This reliability is essential for maintaining trust in your data systems and avoiding disruptions in operations.

A well-managed data pipeline helps you overcome challenges like data overload and inconsistent data quality. By implementing robust data management strategies, you can ensure that essential insights remain accessible for timely decisions. Reliable data flow also enhances your ability to manage large volumes of information effectively. It reduces the risk of errors during data collection and transformation, which could otherwise lead to flawed outcomes.

Tip: Regularly monitor your data pipeline to identify and resolve bottlenecks before they escalate. This proactive approach ensures uninterrupted data flow and minimizes downtime.

Data pipeline management plays a pivotal role in empowering decision-making processes. When your data pipeline operates efficiently, it delivers accurate and timely information to decision-makers. This enables you to analyze trends, assess risks, and make informed choices that drive business success.

Here’s how reliable data flow impacts decision-making:

For example, a retail business can use a well-structured data pipeline to analyze customer purchasing patterns. This insight allows you to adjust inventory levels and optimize sales strategies. By ensuring that your data pipeline delivers high-quality information, you can make decisions that align with your business goals.

Effective data pipeline management significantly boosts operational efficiency. By automating processes like data ingestion, transformation, and storage, you can reduce manual errors and save valuable time. This efficiency translates into faster data processing and retrieval, enabling you to respond quickly to business needs.

Consider these measurable impacts of a well-managed data pipeline:

For instance, automating data validation tasks can streamline your operations and free up resources for more strategic activities. Additionally, adhering to data governance policies ensures compliance and reduces the risk of security breaches. By focusing on these aspects, you can create a data pipeline that supports your organization’s growth and adaptability.

Note: Regular training on data governance policies can further enhance your team’s ability to manage data effectively, ensuring long-term operational success.

Effective data pipeline management ensures that your data remains accurate, consistent, and reliable throughout its journey. By implementing robust processes, you can address common challenges like duplicate records, missing information, or outdated entries. This improves the overall quality of your data, making it more valuable for analysis and decision-making.

Key metrics help you evaluate these improvements. For example:

| Metric | Description | Importance |

|---|---|---|

| Throughput | Amount of data processed per unit of time. | Indicates capacity for large volumes, crucial for real-time analytics. |

| Latency | Time taken for data to travel through the pipeline. | Critical for operations requiring immediate analysis. |

| Error Rate | Frequency of errors during data processing. | High rates suggest issues with data integrity. |

| Processing Time | Duration of processing individual data units or batches. | Reflects operational efficiency in converting data into insights. |

By focusing on these metrics, you can ensure that your data pipeline management system delivers high-quality data, enabling better insights and outcomes.

A well-managed data pipeline accelerates the flow of information, allowing you to gain insights quickly. Automation plays a key role here, reducing manual errors and speeding up data processing. This agility enables you to respond to market changes and make informed decisions faster.

For instance:

Businesses like McDonald's have leveraged data pipelines to aggregate information from franchise locations. This approach enables them to analyze sales performance, customer preferences, and operational efficiency, leading to actionable insights. By adopting similar strategies, you can enhance your decision-making capabilities and stay ahead in competitive markets.

Data pipeline management tools like FineDataLink offer significant cost-saving benefits. Automation reduces manual tasks, allowing your team to focus on strategic initiatives. This improves productivity and optimizes resource allocation. For example, a manufacturing company reduced its data processing time by 40% through automation, enabling engineers to concentrate on innovation rather than tedious data handling.

FineDataLink enhances scalability and performance, helping you manage data spikes effectively. Its real-time synchronization capabilities ensure seamless data integration, reducing errors and improving reliability. Additionally, the platform simplifies workflow management, breaking down data silos and streamlining complex processes. These features not only save costs but also enhance operational efficiency.

By using FineDataLink, you can build a robust data pipeline management system that supports your business growth while minimizing expenses. Its advanced features make it an ideal choice for organizations looking to optimize their data strategies.

Data pipeline management plays a pivotal role in various industries, enabling organizations to harness the power of data for improved decision-making and operational efficiency. Let’s explore how it transforms key sectors like finance, healthcare, and e-commerce.

In the finance industry, real-time data pipelines are indispensable for fraud detection. They continuously monitor transaction data as it flows through the system, identifying patterns and anomalies that may indicate fraudulent activities. For example, if a credit card transaction occurs in two different locations within minutes, the pipeline flags it for review. This proactive approach helps financial institutions prevent fraud before it impacts customers.

Key Applications:

Data pipeline management also enhances risk analysis by consolidating data from multiple sources. Financial institutions use pipelines to process historical and real-time data, enabling them to assess credit risks, market trends, and investment opportunities. For instance, a bank might analyze customer credit histories alongside economic indicators to make informed lending decisions. This ensures better risk management and improved financial stability.

In healthcare, data pipeline management ensures seamless patient data handling. Pipelines standardize and transform data from various sources, such as electronic health records and diagnostic devices. This data is then visualized through dashboards, helping healthcare providers make informed decisions. For example, a hospital can track patient admissions and equipment availability in real-time, reducing delays in care.

Benefits:

Predictive analytics in healthcare relies heavily on robust data pipelines. By analyzing historical patient data, pipelines help predict potential health issues, enabling early intervention. For instance, a healthcare provider might use predictive models to identify patients at risk of chronic diseases. This approach not only improves patient care but also optimizes resource allocation.

E-commerce platforms use data pipeline management to deliver personalized shopping experiences. Pipelines process customer data, such as browsing history and purchase behavior, to generate tailored recommendations. For example, if you frequently buy fitness gear, the platform might suggest related products like yoga mats or protein supplements. This personalization enhances customer satisfaction and boosts sales.

Efficient inventory management is another critical application in e-commerce. Data pipelines provide real-time insights into stock levels, helping businesses avoid overstocking or stockouts. For instance, during peak shopping seasons, pipelines adapt to varying data loads, ensuring timely restocking. This flexibility ensures smooth operations and customer satisfaction.

| Benefit | Description |

|---|---|

| Easy access to business insights | Facilitates quick retrieval of important data for analysis. |

| Faster decision-making | Enables timely responses to market changes and customer needs. |

| Flexible and agile | Adapts to varying data loads, especially during peak times. |

Data pipeline management empowers e-commerce businesses to stay competitive by streamlining operations and enhancing customer experiences.

Data pipeline management plays a crucial role in optimizing supply chain processes. By automating data movement and ensuring quality, you can streamline operations and make informed decisions. Optimized pipelines improve the speed and accuracy of data analysis, enabling you to respond quickly to market demands. For example, you can track inventory levels in real-time and adjust procurement strategies to avoid stockouts or overstocking.

Efficient data pipelines reduce redundancy and enhance data quality. This leads to better data management and more reliable insights. Automating these pipelines also minimizes mistakes, as built-in error detection mechanisms ensure consistency in data flows. With these improvements, you can process and analyze large data volumes efficiently, transforming raw data into actionable insights.

Benefits of supply chain optimization through data pipelines:

By leveraging data pipeline management, you can create a seamless flow of information across your supply chain, improving efficiency and reducing costs.

Real-time production monitoring relies heavily on robust data pipelines. These pipelines collect and process data from sensors, machines, and other sources on the factory floor. This allows you to monitor production metrics, such as equipment performance and output rates, in real-time. For instance, if a machine shows signs of malfunction, the pipeline can alert you immediately, preventing costly downtime.

Data pipeline management ensures that production data remains accurate and up-to-date. This accuracy helps you identify inefficiencies and optimize workflows. Additionally, real-time monitoring enables you to maintain consistent product quality by quickly addressing deviations in the production process.

Key advantages of real-time production monitoring:

With data pipeline management, you can transform your production processes into a well-oiled machine, ensuring reliability and efficiency.

In the public sector, data pipeline management supports data-driven governance initiatives. By integrating and processing data from various sources, you can enhance transparency and improve decision-making. For example, the USDA secured funding for its Enterprise Data Governance program by demonstrating improved data quality for agricultural research. Similarly, the CDC created a shared dashboard to track COVID-19 infection rates, streamlining decision-making and enhancing public trust.

| Agency | Initiative Description |

|---|---|

| USDA | Improved agricultural research through better data quality. |

| CDC | Developed a COVID-19 dashboard for real-time tracking. |

| EPA | Published open data plans to enhance transparency. |

| DOT | Linked accountability to mission outcomes for better collaboration. |

These examples highlight how data pipeline management can drive impactful governance initiatives, ensuring data consistency and accessibility.

In retail, data pipeline management enhances customer experiences by providing actionable insights. Tools like FineBI enable you to analyze customer behavior and preferences, helping you deliver personalized recommendations. For instance, if a customer frequently purchases fitness products, your system can suggest related items like yoga mats or protein supplements.

You can try it out in the demo model below:

FineBI also supports real-time data analysis, allowing you to monitor sales trends and adjust strategies promptly. This agility ensures that you meet customer demands effectively, even during peak shopping seasons. By integrating data from various sources, FineBI creates a unified view of your operations, helping you make data-driven decisions.

Benefits of using FineBI in retail:

With FineBI and effective data pipeline management, you can stay ahead in the competitive retail landscape, delivering exceptional value to your customers.

Data pipeline management is essential for modern businesses aiming to thrive in a data-driven world. It ensures timely and accurate data flow, enabling you to make informed decisions that drive growth and customer satisfaction. By automating processes, it improves operational efficiency, freeing up resources for strategic initiatives. Moreover, effective data management mitigates risks by ensuring data accuracy and compliance, protecting your business and maintaining trust.

Consider adopting solutions like FineDataLink and FineBI to streamline your data processes. These tools help you capitalize on real-time data, scale with agility, and consolidate information for strategic decisions. With robust data pipeline management, your organization can unlock new opportunities and achieve sustainable success.

Click the banner below to also experience FineDataLink for free and empower your enterprise to convert data into productivity!

Mastering Data Pipeline: Your Comprehensive Guide

How to Build a Spark Data Pipeline from Scratch

Data Pipeline Automation: Strategies for Success

Understanding AWS Data Pipeline and Its Advantages

Designing Data Pipeline Architecture: A Step-by-Step Guide

How to Build a Python Data Pipeline: Steps and Key Points

What is the purpose of data pipeline management?

Data pipeline management ensures your data flows efficiently from source to destination. It helps you process, transform, and store data accurately, enabling better decision-making and operational efficiency.

How does data pipeline management improve data quality?

It improves data quality by automating processes like cleaning, validation, and transformation. This ensures your data remains accurate, consistent, and ready for analysis.

Can data pipeline management handle real-time data?

Yes, it can. Real-time data pipelines process information as it arrives, enabling you to respond instantly to changes, such as detecting fraud or monitoring production.

What tools are commonly used for data pipeline management?

Popular tools include FineDataLink, Apache Airflow, and Talend. FineDataLink stands out for its real-time synchronization, low-code interface, and support for over 100 data sources.

Why is scalability important in data pipeline management?

Scalability ensures your pipeline can handle growing data volumes as your business expands. It prevents bottlenecks and maintains performance during data spikes.

How does automation benefit data pipeline management?

Automation reduces manual errors, speeds up processes, and ensures consistency. It allows you to focus on analyzing data rather than managing it.

What industries benefit most from data pipeline management?

Industries like finance, healthcare, e-commerce, and manufacturing rely heavily on data pipeline management. It helps them optimize operations, improve decision-making, and enhance customer experiences.

How can FineDataLink enhance your data pipeline management?

FineDataLink simplifies complex tasks with its low-code platform. It supports real-time synchronization, advanced ETL/ELT capabilities, and seamless integration with diverse data sources, making your pipeline efficient and scalable.

The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan

Related Articles

Best Software for Creating ETL Pipelines This Year

Discover the top ETL pipelines tools for 2025, offering scalability, user-friendly interfaces, and seamless integration to streamline your data pipelines.

Howard

Apr 29, 2025

What is a Data Pipeline and Why Does It Matter

A data pipeline automates collecting, cleaning, and delivering data, ensuring accurate, timely insights for analysis and business decisions.

Howard

Mar 07, 2025

2025 Data Pipeline Examples: Learn & Master with Ease!

Unlock 2025’s Data Pipeline Examples! Discover how they automate data flow, boost quality, and deliver real-time insights for smarter business decisions.

Howard

Feb 24, 2025