Data formats play a crucial role in how information is structured and utilized across various applications. They encompass a wide range of types, including text, numeric, multimedia, and more. Understanding these formats is essential for effective data management and integration. For instance, FineDataLink simplifies complex data integration tasks by supporting diverse data formats. Similarly, FineReport and FineBI enhance data visualization and analysis by seamlessly handling different data structures. Recognizing the importance of data formats ensures that businesses can efficiently process and leverage data for informed decision-making.

Understanding Data Format

Definition and Importance of Data Format

What are Data Formats?

Data formats define the structure and organization of data within a file or database. They determine how information is stored, accessed, and interpreted. Depending on context, the term may refer to data types, file formats, or content formats. Each serves a distinct purpose in representing data, whether it be text, numeric, multimedia, or other types. For example, a CSV file organizes data in a tabular format, while JSON structures data in a hierarchical manner. Understanding these formats is crucial for efficient data management and processing.

Why Data Formats Matter in Technology

Data formats play a pivotal role in technology by influencing how data is stored, retrieved, and utilized. The choice of format can significantly impact the speed and performance of data operations. For instance, a format that matches the access pattern (row-by-row writes versus column-wise analytical reads) can reduce storage costs and query time, saving valuable engineering time and resources. As data volumes have grown, file formats have evolved to meet the demands of modern technology.

Key Reasons for Choosing Appropriate Data Formats:

- Performance Optimization: Matching the file format to specific needs minimizes the time required to access and analyze data.

- Data Integrity: Proper formats ensure that data remains consistent and accurate across different systems.

- Interoperability: Standardized formats facilitate seamless data exchange between diverse applications and platforms.

Text-Based Data Format

Text-based data formats serve as a fundamental means of organizing and exchanging information. They are widely used due to their simplicity and human readability. This section explores three prominent text-based data formats: CSV, JSON, and XML.

CSV (Comma-Separated Values)

Characteristics of CSV

CSV files represent data in a tabular format, where each line corresponds to a row, and fields within a row are separated by commas. This format is straightforward and easy to generate, making it a popular choice for exporting data from spreadsheets and databases. CSV files do not support complex data structures or metadata, which limits their use to simple datasets.
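As a minimal sketch of the row-and-comma layout described above, the following uses Python's standard `csv` module. The column names and sample values are hypothetical, and an in-memory buffer stands in for a real file.

```python
import csv
import io

# Hypothetical sample rows; the column names are illustrative only.
rows = [
    {"id": "1", "name": "Ada", "city": "London"},
    {"id": "2", "name": "Grace", "city": "New York, NY"},  # value contains a comma
]

# Write to an in-memory buffer to keep the sketch self-contained.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["id", "name", "city"])
writer.writeheader()
writer.writerows(rows)
csv_text = buffer.getvalue()

# Read the text back. Every field comes back as a plain string,
# because CSV carries no type information or metadata.
parsed = list(csv.DictReader(io.StringIO(csv_text)))

print(csv_text)
print(parsed[1]["city"])
```

Note that the field containing a comma is wrapped in quotes on output, which is how CSV writers typically keep such values in a single column.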

Common Uses of CSV

CSV files are commonly used for data exchange between applications that handle tabular data. They are ideal for importing and exporting data in spreadsheet programs like Microsoft Excel or Google Sheets. Many databases also support CSV for data import and export operations. Due to their simplicity, CSV files are often used in data analysis and reporting tasks where complex data structures are unnecessary.

JSON (JavaScript Object Notation)

Characteristics of JSON

JSON is a lightweight data format that uses a text-based syntax to represent structured data. It organizes data in key-value pairs, allowing for hierarchical data representation. JSON's syntax is easy to read and write, making it a preferred choice for web applications and APIs. Unlike CSV, JSON supports complex data structures, including nested objects and arrays.
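The nested structure described above can be sketched with Python's standard `json` module. The order record below is a made-up example; its keys and values are assumptions for illustration.

```python
import json

# Hypothetical order record with a nested object and an array.
order = {
    "order_id": 1001,
    "customer": {"name": "Ada", "email": "ada@example.com"},
    "items": [
        {"sku": "A-1", "qty": 2},
        {"sku": "B-7", "qty": 1},
    ],
}

text = json.dumps(order, indent=2)  # serialize to JSON text
restored = json.loads(text)         # parse back into Python objects

print(text)
print(restored["items"][0]["sku"])
```

Unlike the CSV case, numbers, nested objects, and arrays survive the round trip with their structure intact.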

Common Uses of JSON

JSON is extensively used in web development for data interchange between clients and servers. It is the de facto standard format for most RESTful APIs, enabling seamless communication between different systems. JSON's ability to represent complex data structures makes it suitable for configuration files, data serialization, and data storage in NoSQL databases like MongoDB.

XML (eXtensible Markup Language)

Characteristics of XML

XML is a markup language that defines a set of rules for encoding documents in a format that is both human-readable and machine-readable. It uses tags to define elements and attributes, allowing for a flexible and extensible data structure. XML supports metadata and can represent complex data hierarchies, making it versatile for various applications.
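To illustrate elements and attributes, here is a small sketch using Python's standard `xml.etree.ElementTree` module. The document content, element names, and attribute names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical document; element and attribute names are illustrative only.
xml_text = """
<catalog>
  <book id="b1" lang="en">
    <title>Data Formats</title>
    <price currency="USD">29.50</price>
  </book>
</catalog>
""".strip()

root = ET.fromstring(xml_text)
book = root.find("book")

book_id = book.get("id")                       # attribute on the element
title = book.find("title").text                # text inside a child element
currency = book.find("price").get("currency")  # metadata carried as an attribute
price = float(book.find("price").text)         # element text is always a string

print(book_id, title, currency, price)
```

Attributes such as `currency` show how XML can attach metadata to a value without changing the value itself.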

Common Uses of XML

XML is widely used in web services, configuration files, and document storage. It serves as a standard format for data exchange in enterprise applications and is often used in conjunction with other technologies like SOAP for web services. XML's ability to represent complex data structures makes it suitable for applications that require detailed data descriptions, such as databases and document management systems.

Binary Data Format

Binary data formats offer a more efficient way to store and transmit data compared to text-based formats. They encode data in a compact binary form, which reduces file size and enhances processing speed. This section delves into two prominent binary data formats: Protocol Buffers and Avro.
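To make the size difference concrete, the sketch below encodes the same made-up sensor reading as JSON text and as packed bytes with Python's standard `struct` module. This is neither Protocol Buffers nor Avro, only an illustration of the general idea; the field names and layout are assumptions.

```python
import json
import struct

# Hypothetical sensor reading: (sensor id, Unix timestamp, temperature).
reading = (42, 1700000000, 21.5)

# Text encoding: field names and digits are spelled out as characters.
as_json = json.dumps(
    {"sensor_id": reading[0], "timestamp": reading[1], "temperature": reading[2]}
).encode("utf-8")

# Binary encoding: "<HId" means little-endian, unsigned 16-bit int,
# unsigned 32-bit int, 64-bit float, with no padding (14 bytes total).
as_binary = struct.pack("<HId", *reading)

# Decoding requires knowing the same layout string used for encoding.
decoded = struct.unpack("<HId", as_binary)

print(len(as_json), len(as_binary))
print(decoded)
```

The binary form is smaller, but it is unreadable without the layout string. That dependency on an agreed structure is the role a schema plays in the formats discussed in this section.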

Protocol Buffers

Characteristics of Protocol Buffers

Protocol Buffers, often referred to as Protobufs, provide a method for serializing structured data. Developed by Google, Protobufs are smaller, faster, and simpler than XML. They use a binary format that minimizes data size, making them ideal for network communication and data storage. Protobufs require a schema definition, which specifies the structure of the data. This schema ensures that data remains consistent and interpretable across different systems.

Common Uses of Protocol Buffers

Many projects leverage Protocol Buffers for their efficiency and speed. Google uses Protobufs extensively in its internal systems. Other notable projects include ActiveMQ and Netty, which benefit from the reduced data size and increased processing speed. Protobufs excel in scenarios where data needs to be transmitted quickly and efficiently, such as in real-time applications and microservices architectures.

Avro

Characteristics of Avro

Apache Avro is a row-based, language-neutral, schema-based serialization format. It uses a compact binary encoding that supports efficient data storage and retrieval. Avro files are self-describing, meaning they contain the schema along with the data. Because the schema used to write the data travels with it, a reader with a newer or older schema can resolve the differences between the two. This supports schema evolution, enabling changes to the data structure without breaking compatibility with existing data.

Common Uses of Avro

Avro is widely used in big data environments, particularly with Apache Hadoop. Its ability to handle large volumes of data efficiently makes it a popular choice for data storage and processing. Avro's schema evolution capability is particularly beneficial in dynamic environments where data structures frequently change. It is also used in data serialization tasks, where maintaining compatibility across different versions of data is crucial.

Image Data Format

Image data formats play a vital role in how digital images are stored, shared, and displayed. They determine the quality, size, and compatibility of images across different platforms and devices. Two of the most widely used image data formats are JPEG and PNG.

JPEG (Joint Photographic Experts Group)

Characteristics of JPEG

JPEG stands as one of the most popular image data formats due to its ability to compress images significantly. This compression reduces file size while maintaining acceptable image quality. JPEG uses a lossy compression technique, which means some image data gets discarded to achieve smaller file sizes. This format supports millions of colors, making it ideal for photographs and complex images. However, repeated editing and saving can degrade image quality over time.

Common Uses of JPEG

JPEG finds extensive use in digital photography, web graphics, and social media. Its small file size makes it perfect for sharing images online and storing large photo collections without consuming excessive storage space. Photographers and graphic designers often choose JPEG for its balance between image quality and file size. Websites also prefer JPEG images to ensure faster loading times and better user experience.

PNG (Portable Network Graphics)

Characteristics of PNG

PNG offers a lossless compression format, preserving all image data without sacrificing quality. This format supports transparency, allowing images to have clear backgrounds, which is essential for web design and graphic creation. PNG handles detailed images and text with sharp edges effectively, making it suitable for logos and illustrations. Because no image data is discarded, PNG files tend to be larger than comparable JPEG files.
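PNG's compression is based on DEFLATE, which Python exposes through the standard `zlib` module. The sketch below applies it to made-up pixel bytes to show what "lossless" means in practice. It does not produce a real PNG file, which also involves filtering and a chunk structure.

```python
import zlib

# Hypothetical 64x64 RGB image: a flat red area with one white pixel.
WIDTH, HEIGHT = 64, 64
pixels = bytearray(b"\xff\x00\x00" * (WIDTH * HEIGHT))
pixels[0:3] = b"\xff\xff\xff"
pixels = bytes(pixels)

compressed = zlib.compress(pixels)
restored = zlib.decompress(compressed)

print(len(pixels), len(compressed))
print(restored == pixels)  # every byte is recovered exactly
```

Flat areas of repeated color compress very well under this scheme, which is one reason the format suits logos and illustrations.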

Common Uses of PNG

PNG is widely used in web design, digital art, and graphic design. Designers favor PNG for its ability to maintain image quality and support transparency. This format is ideal for images that require frequent editing or need to be layered over other graphics. Websites often use PNG for logos, icons, and images that demand high quality and transparency.

Audio Data Format

Audio data formats determine how sound is stored and transmitted. They play a crucial role in the quality and compatibility of audio files. Two of the most common audio data formats are MP3 and WAV.

MP3 (MPEG-1 Audio Layer III)

Characteristics of MP3

MP3 files use a lossy compression algorithm to reduce file size. This compression removes some audio data, which can affect sound quality. However, the reduction in size makes MP3 files highly portable and easy to share. Users can adjust the bit rate of MP3 files, allowing for a balance between file size and audio quality. This flexibility makes MP3 a popular choice for music streaming and storage.
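The trade-off between bit rate and file size is simple arithmetic: size is roughly bit rate times duration, divided by eight to convert bits to bytes. The helper below is a hypothetical sketch that assumes a constant bit rate and ignores headers and tags.

```python
def estimated_size_bytes(bit_rate_kbps: int, duration_seconds: float) -> int:
    """Approximate audio payload size for a constant bit rate stream."""
    return int(bit_rate_kbps * 1000 * duration_seconds / 8)


# Compare common bit rates for a four-minute track (240 seconds).
for rate in (128, 192, 320):
    size_mb = estimated_size_bytes(rate, 240) / 1_000_000
    print(f"{rate} kbps, 4 min: about {size_mb:.2f} MB")
```

Under these assumptions, raising the bit rate from 128 kbps to 320 kbps makes the file 2.5 times larger.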

Common Uses of MP3

MP3 files dominate the music industry due to their small size and compatibility with various devices. They are ideal for digital music players, smartphones, and online streaming services. Many users prefer MP3 for its convenience in storing large music libraries without consuming excessive storage space. The format's widespread support across platforms ensures that users can play MP3 files on almost any device.

WAV (Waveform Audio File Format)

Characteristics of WAV

WAV files typically store uncompressed PCM audio, so nothing is discarded once the sound has been digitized. This results in much larger file sizes compared to MP3. For a given sample rate, bit depth, and channel count, uncompressed WAV audio has a fixed bit rate, so file size grows in direct proportion to duration. Because no lossy compression is applied, a WAV file reproduces the recording exactly as it was captured, with fidelity limited only by the sample rate and bit depth.
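As a rough illustration, the sketch below writes one second of a generated tone with Python's standard `wave` module and reads it back. The sample rate, tone frequency, and amplitude are arbitrary values chosen for the example.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 8000  # samples per second (illustrative value)
SAMPLE_WIDTH = 2    # bytes per sample, i.e. 16-bit PCM
CHANNELS = 1
DURATION = 1        # seconds

# Generate a 440 Hz sine tone as 16-bit signed samples at half amplitude.
samples = [
    int(32767 * 0.5 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE))
    for n in range(SAMPLE_RATE * DURATION)
]
pcm = struct.pack(f"<{len(samples)}h", *samples)

# Write the samples into an in-memory WAV container.
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(CHANNELS)
    wav.setsampwidth(SAMPLE_WIDTH)
    wav.setframerate(SAMPLE_RATE)
    wav.writeframes(pcm)

# Read it back: the frames are byte-for-byte what was written.
buffer.seek(0)
with wave.open(buffer, "rb") as wav:
    frames = wav.readframes(wav.getnframes())
    bit_rate = wav.getframerate() * wav.getsampwidth() * 8 * wav.getnchannels()

print(frames == pcm)
print(bit_rate)  # bits per second, fixed by the three parameters above
```

The bit rate here follows directly from sample rate, sample width, and channel count, which is why uncompressed audio size is so predictable.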

Common Uses of WAV

WAV files are the preferred choice for professional audio production and editing. Musicians, sound engineers, and producers use WAV for recording and mastering due to its superior sound quality. The format is also common in applications where audio quality is paramount, such as radio broadcasting and film production. Despite their large size, WAV files ensure that the audio remains pristine and unaltered.

Video Data Format

Video data formats determine how video content is stored, played, and shared across different platforms. They influence the quality, compatibility, and file size of video files. Two of the most widely used video data formats are MP4 and AVI.

MP4 (MPEG-4 Part 14)

Characteristics of MP4

MP4, or MPEG-4 Part 14, stands as one of the most popular video formats due to its versatility and efficiency. It is a container format that supports a wide range of multimedia content, including video, audio, subtitles, and images. The streams inside an MP4 file are compressed by codecs such as H.264 for video and AAC for audio, which maintain high quality while reducing file size. This makes it ideal for streaming and sharing videos online. The format's compatibility with various devices and platforms further enhances its appeal.

Common Uses of MP4

MP4 is extensively used in digital media for its ability to deliver high-quality video content with relatively small file sizes. Many streaming and video-sharing services accept or deliver MP4 content. The format's compatibility with smartphones, tablets, and computers makes it a preferred choice for video playback and sharing. Additionally, MP4's support for metadata allows for features like chapter markers, enhancing the viewing experience.

AVI (Audio Video Interleave)

Characteristics of AVI

AVI, or Audio Video Interleave, is a multimedia container format introduced by Microsoft. It interleaves video and audio data so the two stay synchronized during playback. AVI supports a variety of codecs, which allows for flexibility in video compression and quality. Because AVI is only a container, file size depends on the codec used. In practice, AVI files are often encoded with older or less efficient codecs, so they tend to be larger than comparable MP4 files. This can result in higher storage requirements and longer download times.

Common Uses of AVI

AVI remains a popular choice for video editing and production due to its high-quality output. Professionals in the film and television industry often use AVI for capturing and editing raw footage. The format's ability to store uncompressed video makes it suitable for applications where quality is paramount. Despite its larger file size, AVI's compatibility with various editing tools and software ensures its continued relevance in multimedia production.

Specialized Data Format

Specialized data formats cater to specific fields and applications, offering tailored solutions for unique data requirements. These formats ensure that data remains accurate, accessible, and useful within their respective domains.

GIS Data Formats

Geographic Information Systems (GIS) rely on specialized data formats to manage spatial data effectively. These formats facilitate the representation, storage, and analysis of geographic information.

Characteristics of GIS Data Formats

GIS data formats possess unique characteristics that enable them to handle spatial data efficiently. They often include both vector and raster data types. Vector data represents geographic features using points, lines, and polygons, while raster data uses grid cells to depict continuous surfaces. GIS formats also support metadata, which provides additional context about the data, such as coordinate systems and projection information. This metadata ensures that spatial data aligns correctly with real-world locations.
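The vector and raster models can be sketched in plain Python. The vector features below follow the geometry layout used by GeoJSON (longitude first, then latitude), which is one common choice and an assumption here, since the article does not name a specific format. The raster structure, its metadata keys, and the `cell_value` helper are hypothetical.

```python
# Vector data: features described by points, lines, and polygons.
point = {"type": "Point", "coordinates": [-0.1276, 51.5072]}
line = {"type": "LineString", "coordinates": [[-0.13, 51.50], [-0.12, 51.51]]}
polygon = {
    "type": "Polygon",
    # One ring; the first and last positions are identical to close it.
    "coordinates": [[
        [-0.2, 51.4], [0.0, 51.4], [0.0, 51.6], [-0.2, 51.6], [-0.2, 51.4],
    ]],
}

# Raster data: a grid of cells plus the metadata needed to place it on Earth.
raster = {
    "crs": "WGS 84",         # coordinate reference system (metadata)
    "origin": (-0.2, 51.6),  # top-left corner as (lon, lat)
    "cell_size": 0.1,        # degrees per cell
    "cells": [
        [12.0, 15.5],
        [9.0, 11.0],
    ],
}


def cell_value(raster, lon, lat):
    """Look up the grid cell that covers a given coordinate."""
    col = int((lon - raster["origin"][0]) / raster["cell_size"])
    row = int((raster["origin"][1] - lat) / raster["cell_size"])
    return raster["cells"][row][col]


print(cell_value(raster, -0.05, 51.45))
```

Without the origin, cell size, and coordinate system, the grid values could not be tied to real-world locations, which is why this metadata matters.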

Common Uses of GIS Data Formats

GIS data formats find widespread use in various applications, including urban planning, environmental monitoring, and disaster management. Urban planners utilize these formats to design infrastructure and assess land use patterns. Environmental scientists employ GIS data to track changes in ecosystems and monitor natural resources. Disaster management teams rely on GIS data to map hazard zones and coordinate emergency response efforts. These formats enable professionals to visualize and analyze spatial data, leading to informed decision-making and effective resource management.

Scientific Data Formats

Scientific research often involves complex data sets that require specialized formats for accurate representation and analysis. These formats support diverse scientific disciplines, ensuring that data remains consistent and interpretable.

Characteristics of Scientific Data Formats

Scientific data formats exhibit characteristics that accommodate the intricate nature of research data. They often include support for multidimensional arrays, which allow researchers to store and analyze data across multiple variables. These formats also provide mechanisms for data compression, reducing file size without sacrificing accuracy. Additionally, scientific formats often incorporate metadata, detailing experimental conditions, measurement units, and data provenance. This metadata ensures that researchers can replicate studies and validate findings.

Common Uses of Scientific Data Formats

Scientific data formats serve a crucial role in various research fields, including physics, biology, and chemistry. Physicists use these formats to model complex systems and simulate experiments. Biologists rely on scientific data formats to analyze genetic sequences and study biological processes. Chemists employ these formats to store molecular structures and conduct computational analyses. By facilitating the storage and analysis of research data, scientific formats enable researchers to advance knowledge and drive innovation across disciplines.

Emerging Data Format

Emerging data formats like Parquet and ORC (Optimized Row Columnar) have gained prominence in the realm of big data analytics. These formats offer advanced features that cater to the needs of modern data processing and storage.

Parquet

Characteristics of Parquet

Parquet is a columnar storage file format designed for efficient data processing. It excels in scenarios where write-once, read-many operations are common. Parquet's columnar layout allows for efficient compression and encoding, which reduces storage space and enhances read performance. This format supports complex data structures and schema evolution, making it adaptable to changing data requirements.

- Columnar Storage: Parquet stores data in columns rather than rows, optimizing read performance.

- Efficient Compression: The format uses advanced compression techniques to minimize storage needs.

- Schema Evolution: Parquet allows for changes in data structure without breaking compatibility.
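The difference between row and column layouts can be sketched in plain Python. This is an illustration of the idea only, not the Parquet file format itself; the records and the `run_length_encode` helper are hypothetical.

```python
# Hypothetical records; field names are illustrative only.
rows = [
    {"user": "ada", "country": "UK", "amount": 10.0},
    {"user": "grace", "country": "US", "amount": 25.5},
    {"user": "linus", "country": "US", "amount": 7.25},
]

# Column-oriented layout: one list per field instead of one dict per record.
columns = {key: [row[key] for row in rows] for key in rows[0]}

# An aggregate over one field touches only that column's values.
total = sum(columns["amount"])


def run_length_encode(values):
    """Collapse consecutive repeats into [value, count] pairs."""
    encoded = []
    for value in values:
        if encoded and encoded[-1][0] == value:
            encoded[-1][1] += 1
        else:
            encoded.append([value, 1])
    return encoded


# Values within a column share a type and often repeat, so they encode well.
country_runs = run_length_encode(columns["country"])

print(total)
print(country_runs)
```

Grouping similar values together is what makes encodings like run-length encoding effective, and reading a single column avoids scanning the fields a query does not need.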

Common Uses of Parquet

Parquet is widely used in analytics and data warehousing environments. Its ability to handle large datasets efficiently makes it a preferred choice for big data platforms like Apache Hadoop and Apache Spark. Organizations leverage Parquet for tasks that require high data throughput and performance, such as data analysis and reporting.

- Analytics: Ideal for OLAP (Online Analytical Processing) use cases.

- Data Warehousing: Supports efficient querying and data retrieval.

- Big Data Platforms: Commonly used with Hadoop and Spark for large-scale data processing.

ORC (Optimized Row Columnar)

Characteristics of ORC

ORC is another columnar storage format optimized for read-heavy operations. It stores collections of rows in a single file, allowing for parallel processing across clusters. ORC's columnar layout enables efficient compression and data skipping, reducing the load on read and decompression processes.

- Row Collections: ORC organizes data in collections of rows for parallel processing.

- Data Skipping: The format allows skipping of unnecessary data and columns, enhancing read efficiency.

- Compression: ORC files are highly compressed, reducing storage requirements.
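Data skipping can be sketched as follows: each group of rows keeps minimum and maximum statistics, and a reader consults them before deciding whether to read the group at all. The code is a simplified illustration in plain Python, not the ORC library or file layout; the group size and the `scan_greater_than` helper are hypothetical.

```python
STRIPE_SIZE = 100  # rows per group (illustrative value)

# Build groups of rows, each with min/max statistics for its values.
values = list(range(1, 301))
stripes = []
for start in range(0, len(values), STRIPE_SIZE):
    chunk = values[start:start + STRIPE_SIZE]
    stripes.append({"min": min(chunk), "max": max(chunk), "rows": chunk})


def scan_greater_than(stripes, threshold):
    """Return matching values and how many groups were actually read."""
    matches, stripes_read = [], 0
    for stripe in stripes:
        if stripe["max"] <= threshold:
            continue  # statistics prove no row can match, so skip the group
        stripes_read += 1
        matches.extend(v for v in stripe["rows"] if v > threshold)
    return matches, stripes_read


matches, stripes_read = scan_greater_than(stripes, 250)
print(len(matches), stripes_read)
```

In this sketch only one of the three groups is read for the query, which is the effect data skipping aims for on large, sorted or clustered datasets.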

Common Uses of ORC

ORC is particularly suited for environments that require frequent data reads and updates. It is optimized for Hive data, making it a popular choice for data warehousing solutions. ORC's ability to handle complex data structures and provide fast query performance makes it valuable for business intelligence and analytics applications.

- Data Warehousing: Optimized for Hive and other data warehousing solutions.

- Business Intelligence: Supports fast query performance for analytics.

- Read-Heavy Operations: Ideal for scenarios with frequent data access and updates.

FanRuan's Role in Data Format

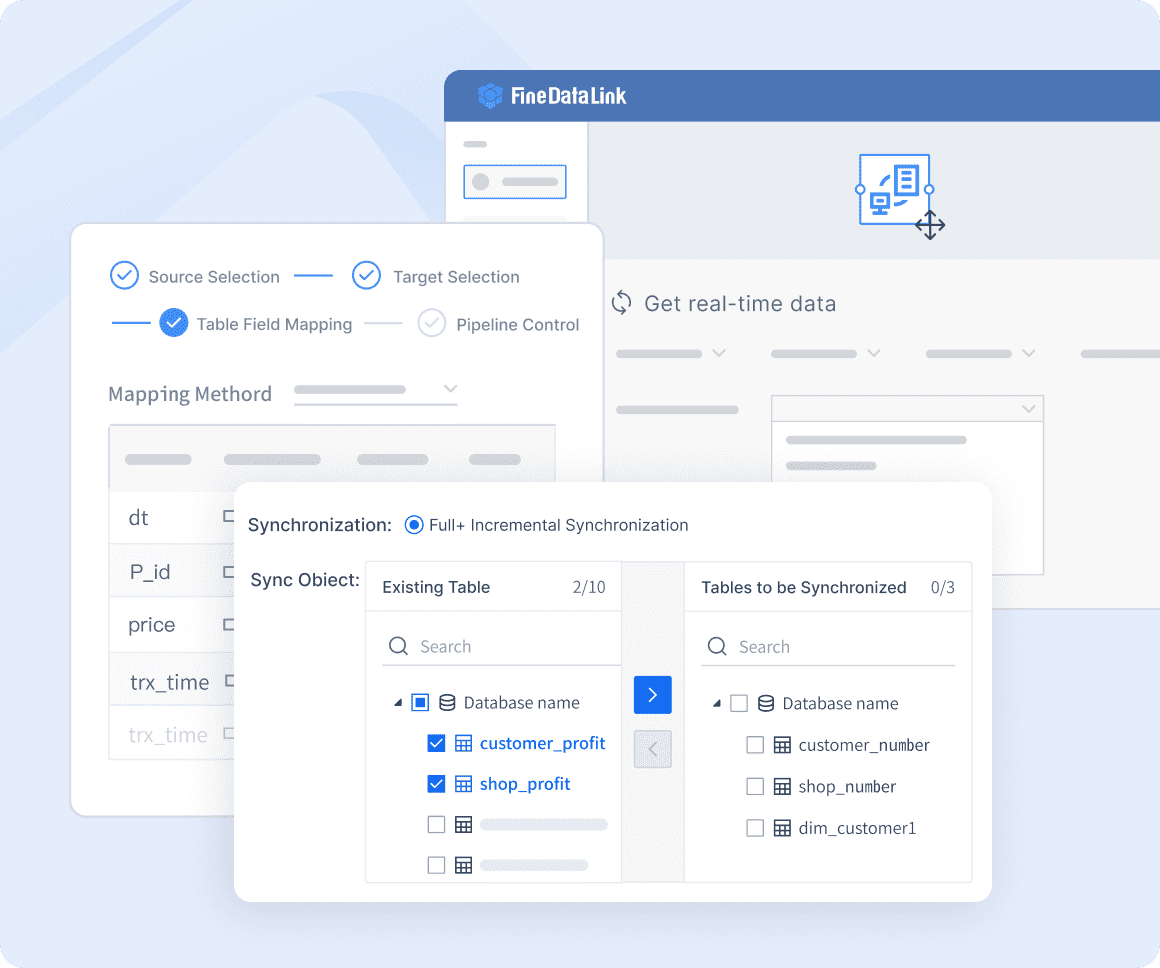

FineDataLink and Data Integration

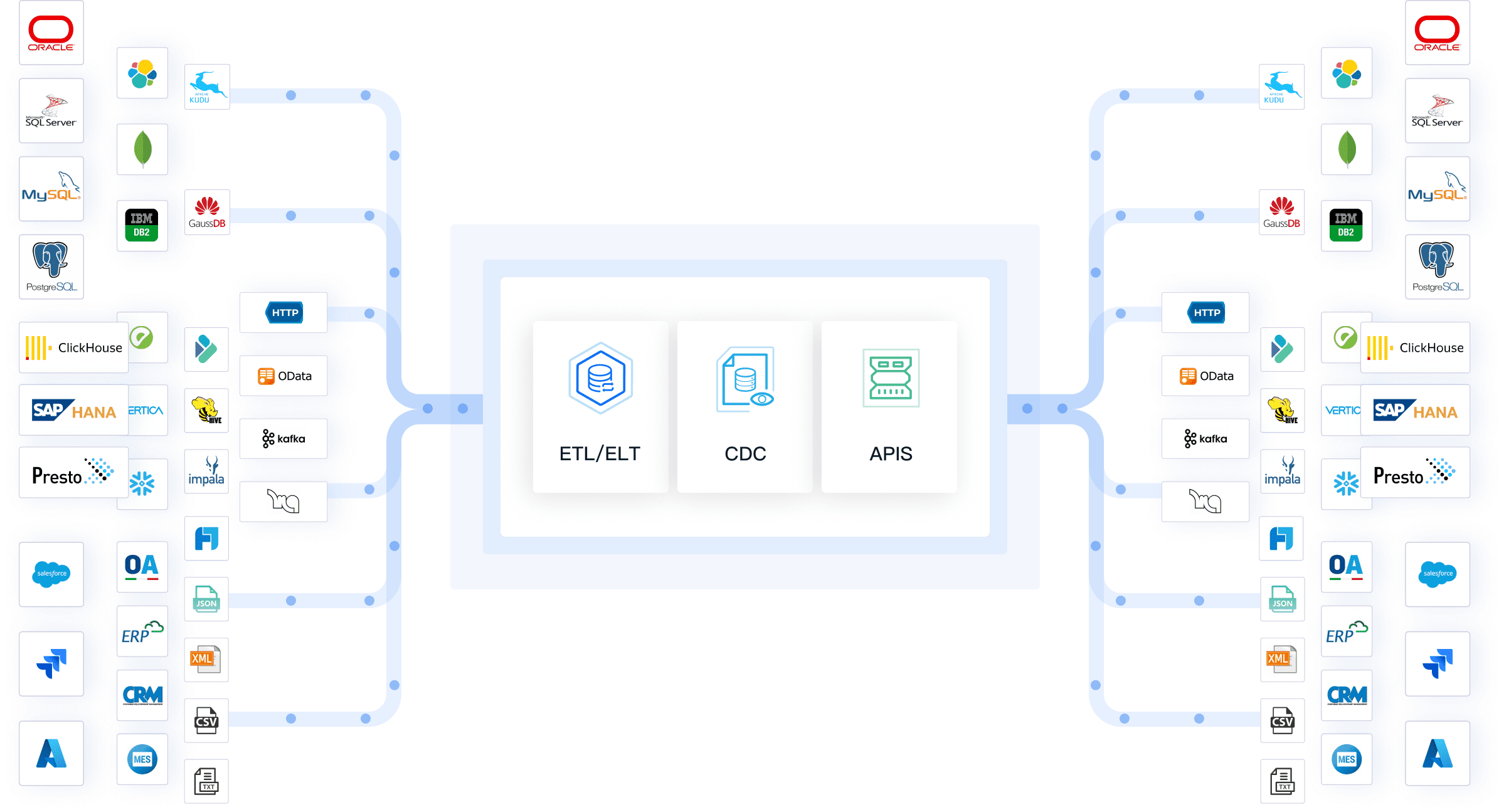

How FineDataLink Handles Various Data Formats

FineDataLink excels in managing diverse data formats, providing seamless integration across multiple platforms. It supports over 100 common data sources, including CSV, XML, JSON, and proprietary formats. This capability allows businesses to integrate data from various systems without manual transformation efforts. By automating data mapping and synchronization, FineDataLink reduces errors and enhances efficiency. Its low-code platform simplifies complex data integration tasks, making it accessible to users with varying technical expertise.

Benefits of Using FineDataLink for Data Integration

Using FineDataLink offers several advantages for data integration. It ensures real-time data synchronization, which is crucial for businesses that rely on up-to-date information. The platform's drag-and-drop interface streamlines the integration process, saving time and resources. FineDataLink also enhances data quality by maintaining consistency and accuracy across different systems. This leads to better decision-making and improved operational efficiency. Additionally, its support for a wide range of data formats ensures compatibility with existing infrastructure, making it a versatile solution for enterprises.

FineReport and Data Visualization

Leveraging FineReport for Reporting Across Data Formats

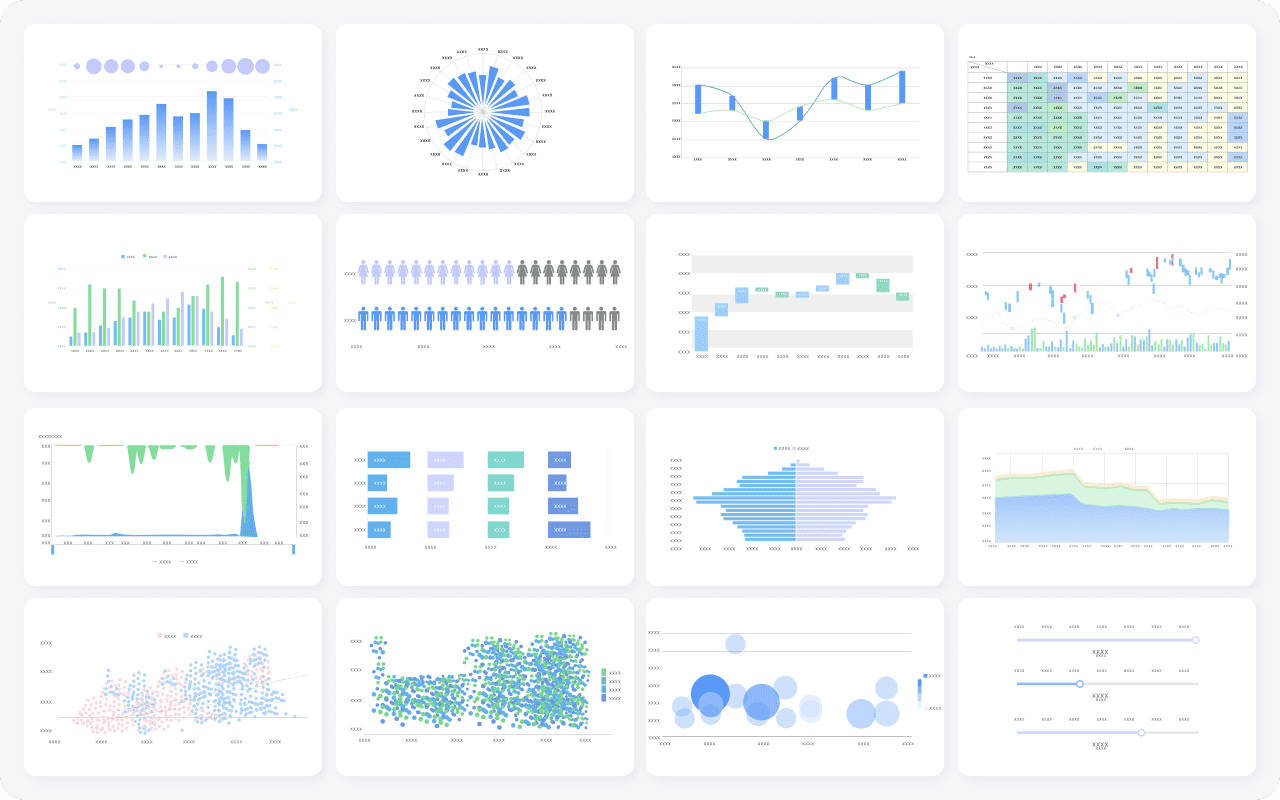

FineReport provides a robust solution for reporting across various data formats. It connects to multiple data sources, allowing users to create dynamic reports and dashboards. FineReport's flexible report designer enables rapid generation of reports through a user-friendly interface. This feature supports diverse visualization types, making it easier to uncover insights from complex data sets. By integrating data from different formats, FineReport helps businesses gain a comprehensive view of their operations.

Enhancing Data Insights with FineReport

FineReport enhances data insights by offering interactive analysis and visualization capabilities. Users can explore data through various chart styles and drill-down features, facilitating deeper understanding. The software's ability to handle large datasets ensures that businesses can analyze data at scale. FineReport's mobile BI capabilities allow users to access insights on the go, ensuring that decision-makers have the information they need, whenever they need it. This empowers organizations to make informed decisions based on real-time data analysis.

FineBI and Business Intelligence

FineBI's Approach to Data Formats in Analytics

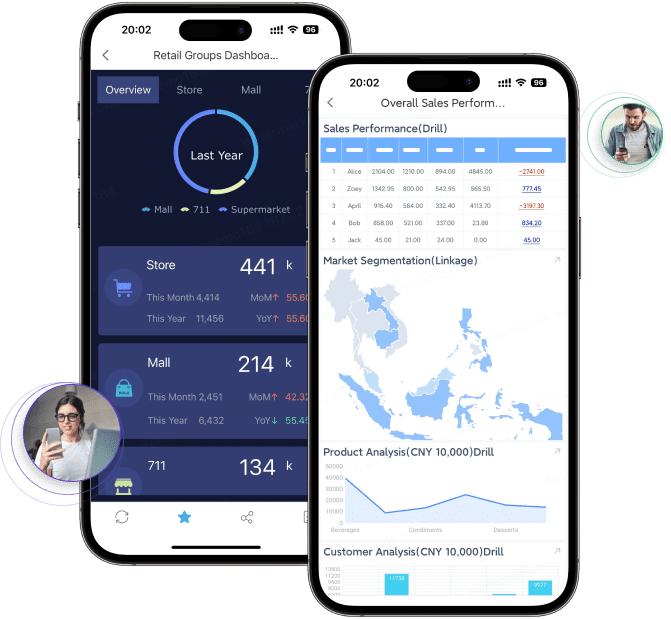

FineBI adopts a comprehensive approach to handling data formats in analytics. It connects to a wide range of data sources, including big data platforms and relational databases. FineBI's high-performance computing engine processes massive datasets efficiently, supporting real-time analysis. This capability allows businesses to conduct in-depth analysis across various data formats, uncovering trends and patterns that drive strategic decisions. FineBI's flexible ETL and ELT data processing modes further enhance its ability to manage diverse data structures.

Improving Decision-Making with FineBI

FineBI improves decision-making by transforming raw data into actionable insights. Its augmented analytics feature provides automatic analysis generation, simplifying the data interpretation process. Users can track key performance indicators (KPIs) and identify trends through intuitive visualizations. FineBI's role-based access control ensures data security, allowing organizations to manage permissions effectively. By enabling self-service analytics, FineBI empowers business users to explore data independently, fostering a data-driven culture within the organization.

Understanding various data formats is essential for efficient data handling and analysis. Each format influences how information is stored, accessed, and utilized. Choosing the right format can significantly impact performance and efficiency. Businesses must match file formats to their specific needs to optimize data analysis. As technology evolves, new formats will emerge, offering enhanced capabilities for data sharing, reuse, and preservation. Staying informed about these developments ensures that organizations can leverage data effectively, driving innovation and growth.

FAQ

Textual Data: XML, TXT, HTML, and PDF/A are popular choices for preserving textual information.

Tabular Data: CSV is widely used for spreadsheets and databases due to its simplicity and compatibility.

Images: TIFF, PNG, and JPEG are common, with TIFF and PNG offering lossless quality, while JPEG provides lossy compression.

Audio: FLAC, WAV, and MP3 are prevalent, with WAV offering uncompressed quality and MP3 providing compressed files for easy sharing.

Data formats influence how quickly and efficiently data can be accessed and analyzed. Choosing the right format minimizes processing time and enhances performance. For instance, file-based formats like CSV are straightforward and easy to use, while directory-based formats and database connections offer more complex structures suitable for larger datasets.

The choice of data format affects everything from data storage to analysis. A suitable format ensures data integrity, facilitates interoperability, and optimizes performance. For example, using a columnar format like Parquet in big data environments can significantly reduce storage space and improve read performance.

Most data formats can be converted into others. This flexibility allows users to adapt data to different applications and requirements. However, it is crucial to understand the characteristics of each format to avoid potential pitfalls during transformation, such as losing type information, nesting, or metadata that the target format cannot represent.
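As a small sketch of one such conversion and one of its pitfalls, the code below turns CSV text into JSON using Python's standard library. The sample data and the choice of which columns to convert are assumptions for illustration.

```python
import csv
import io
import json

# Hypothetical CSV input.
csv_text = "id,name,active\n1,Ada,true\n2,Grace,false\n"

records = list(csv.DictReader(io.StringIO(csv_text)))

# Pitfall: CSV has no types, so every value arrives as a string.
# The conversion has to restore types explicitly, based on outside knowledge.
for record in records:
    record["id"] = int(record["id"])
    record["active"] = record["active"] == "true"

json_text = json.dumps(records)
print(json_text)
```

Skipping the type-restoring step would still produce valid JSON, but numbers and booleans would be stored as strings, which can cause subtle errors downstream.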

When choosing a data format, consider the data's characteristics, size, and intended use. Evaluate the infrastructure of the project and the speed of writing and reading data files. Simplicity and complexity should be balanced according to the project's needs, following the principle of Occam's razor: keep it as simple as possible, but as complex as necessary.
