You want to know which data lake tools stand out. FineDataLink, Databricks, Snowflake, Microsoft Azure, Amazon Web Services, Google Cloud, IBM, and Cloudera all lead the pack. Data lake tools help you unify, organize, and analyze large volumes of data from different sources. The best data lake tools support a strong data lake architecture, letting you extract valuable insights and build a reliable data management solution. When you compare cloud-based data lake platforms, weigh scalability, ease of integration, security, governance, and cost.

| Tool | Market Share (%) |
|---|---|
| Databricks | 11 |
| Snowflake | N/A |
| Microsoft Azure | N/A |
| Amazon Web Services | N/A |
| Google Cloud | N/A |
| IBM | N/A |
| Cloudera | N/A |

When you start exploring data lake tools, you probably wonder what makes one solution better than another. A data lake is a centralized repository that lets you store, manage, and analyze large volumes of structured and unstructured data. The best data lake tools help you break down data silos, unify information from different sources, and make your data accessible for business intelligence and analytics.

You need to look at several factors when comparing data lake platforms. Scalability stands out because your data lake must handle growing data volumes as your business expands. Integration matters since you want your data lake to connect smoothly with existing systems and applications. Security is critical for protecting sensitive information and meeting compliance requirements. Governance ensures your data lake maintains high data quality and consistency. Cost determines whether you can sustain and scale your data lake over time.

Here’s a quick overview of the most critical factors enterprises consider when choosing data lake tools:

| Factor | Description |
|---|---|
| Ability to perform complex stateful transformations | You need to analyze data from multiple sources, so your data lake should handle joins, aggregations, and other advanced operations without extra databases. |
| Support for evolving schema-on-read | Your data lake must adapt to new data structures, especially when working with semi-structured data. |
| Optimizing object storage for improved query performance | Fast access to business-critical data depends on columnar formats and ongoing storage optimization. |
| Integration with metadata catalogs | Searching and understanding datasets in your data lake gets easier with strong metadata management. |

You should also consider technology options, security and access control, data ingestion capabilities, metadata management, performance, management tools, governance, analysis features, and total cost of ownership. Make sure your data lake platforms offer a strong UI for monitoring and administration.
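To make the schema-on-read factor from the table above more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the event records, the field names, and the `read_with_schema` helper are hypothetical and do not come from any particular data lake product. The idea it shows is that raw records are stored untouched, and the schema (including defaults for fields that older records lack) is applied only when the data is read.

```python
import json

# Hypothetical raw events, stored exactly as they arrived.
# The second record carries a field the first one never had.
RAW_EVENTS = [
    '{"user_id": 1, "amount": 20.0}',
    '{"user_id": 2, "amount": 35.5, "currency": "EUR"}',
]

# The schema lives with the reader, not with the storage layer:
# field name -> (type to cast to, default when the field is missing).
READ_SCHEMA = {
    "user_id": (int, None),
    "amount": (float, 0.0),
    "currency": (str, "USD"),
}


def read_with_schema(raw_lines, schema):
    """Parse raw JSON lines and apply the schema only at read time."""
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for field, (cast, default) in schema.items():
            value = record.get(field, default)
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows


rows = read_with_schema(RAW_EVENTS, READ_SCHEMA)
```

Because the stored records are never rewritten, adding a new field later only requires extending `READ_SCHEMA`; older records simply pick up the default.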

Data lakes help you solve many common enterprise challenges. You often face issues like fragmented data, inconsistent quality, and integration headaches. Data lake tools address these problems by providing centralized governance, automated data quality checks, and robust APIs for interoperability.

Here’s how data lake platforms tackle typical enterprise data challenges:

| Challenge | Solution |
|---|---|
| Data Governance and Quality | Set clear policies, assign data stewards, use automated quality tools, and adopt centralized governance. |
| Data Security and Privacy | Use strong access controls, encryption, and comply with privacy regulations. |
| Performance Issues | Optimize storage, use efficient indexing, and invest in query optimization. |
| Scalability and Storage Costs | Choose cloud-based storage, use compression, and manage data lifecycle. |
| Integration with Existing Systems | Implement robust APIs and standardized formats. |
| Lack of Skilled Resources | Train employees and use managed services. |

Scalability lets you manage large data volumes. Integration ensures smooth data flow. Security protects sensitive information. Governance maintains compliance and data quality. Cost determines how far you can grow your data lake over time. When you choose the right data lake tools, you set your business up for success in managing and analyzing your data.

When you look for the best data lake tools, you want solutions that help you store, manage, and analyze massive amounts of data from different sources. Data lake tools give you a way to break down data silos and make all your information available for business intelligence, analytics, and reporting. The best data lake tools let you handle structured, semi-structured, and unstructured data in one place. You can scale your storage as your business grows, keep your data secure, and make sure your team can access the information they need.

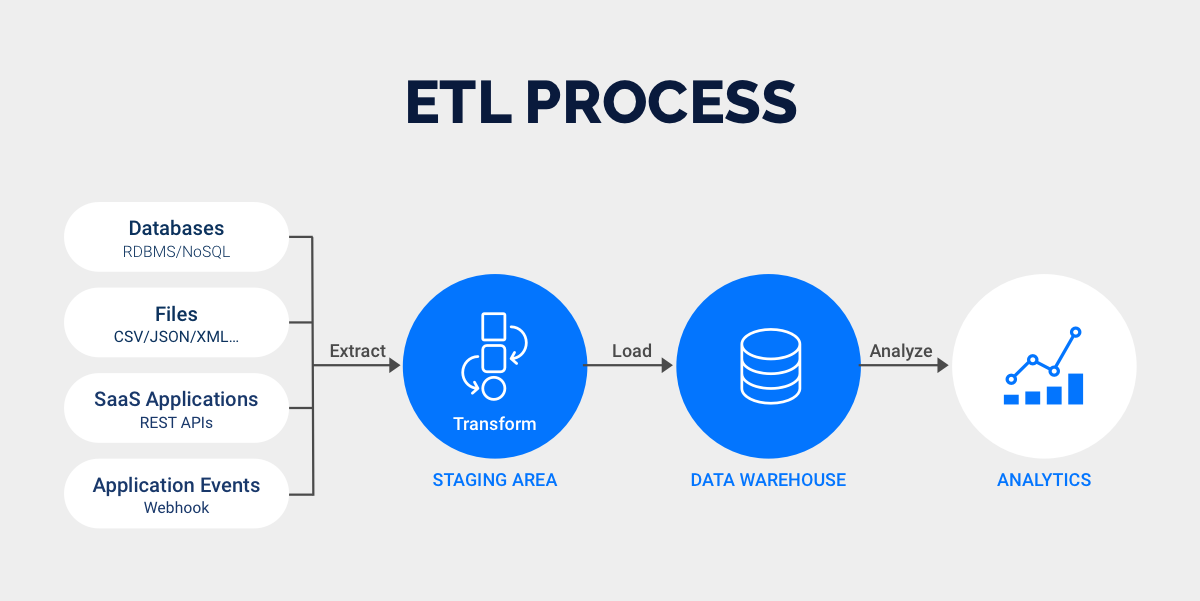

A data lake is a centralized platform that stores raw data in its native format until you need it. Unlike traditional databases, a data lake can handle huge volumes of data from many sources, including databases, files, APIs, and streaming services. You can use data lake tools to collect, process, and analyze this data, making it easier to find insights and make better decisions. The best data lake tools also offer features like real-time data synchronization, advanced ETL/ELT capabilities, and strong security controls.

Let’s dive into the top data lake tools and see how each one stands out in the data lake market.

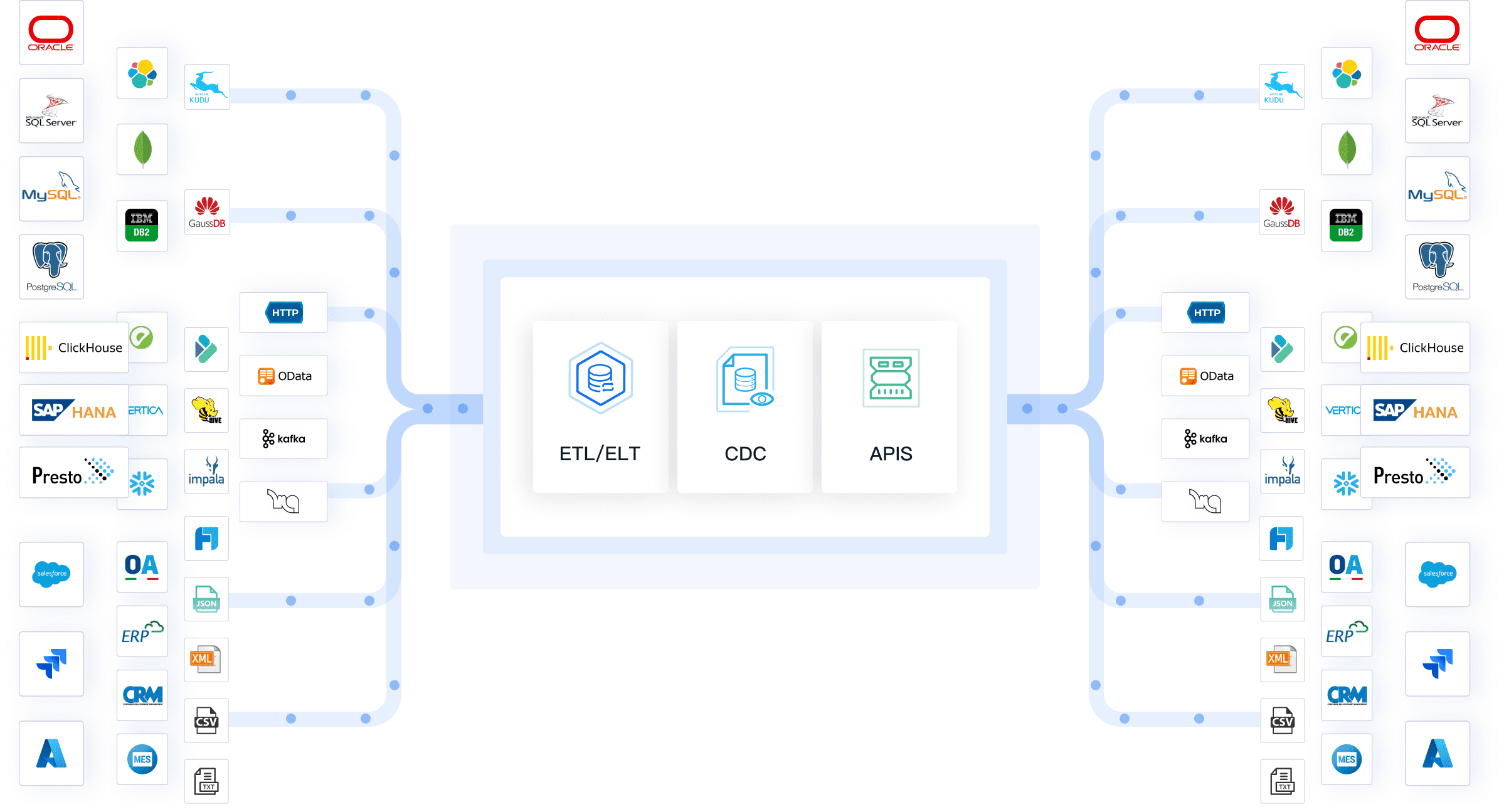

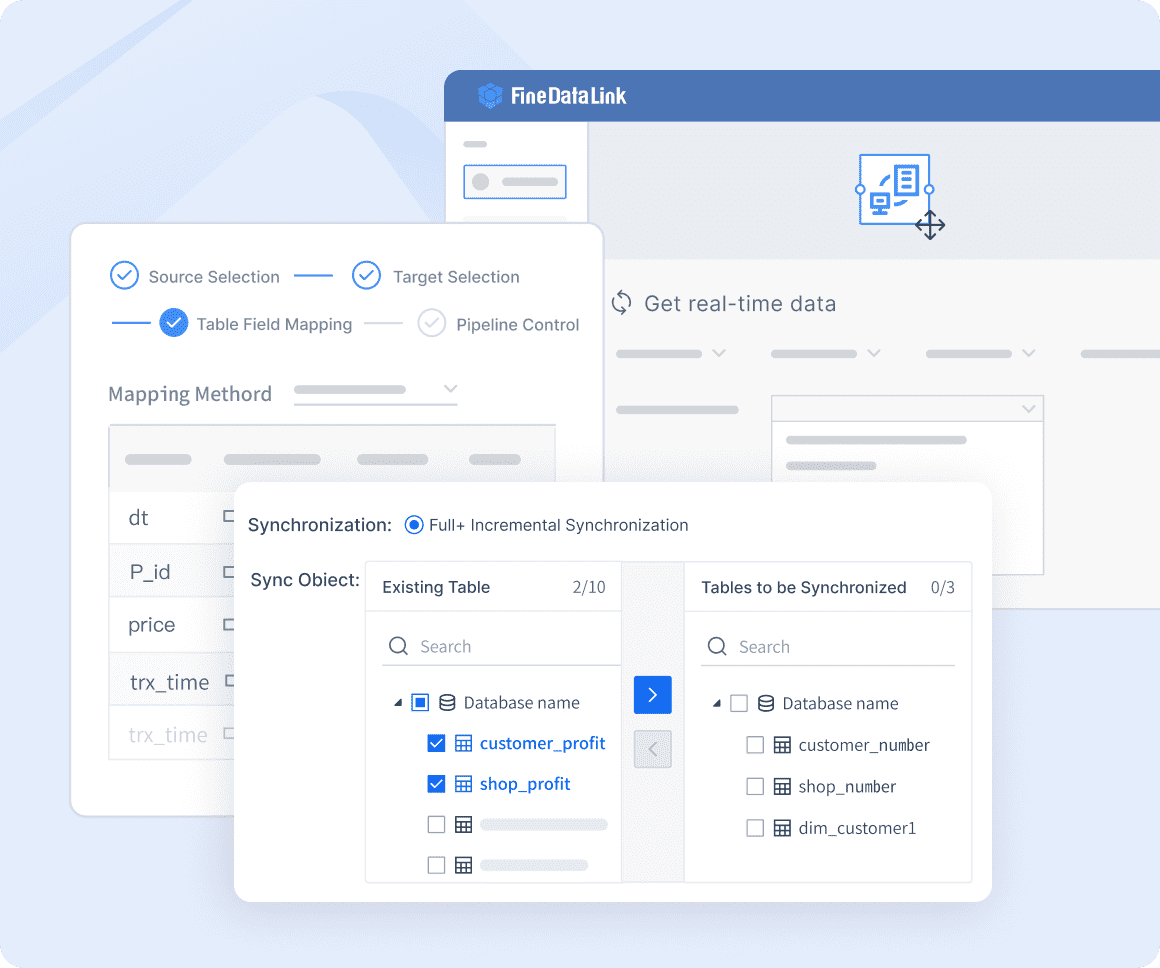

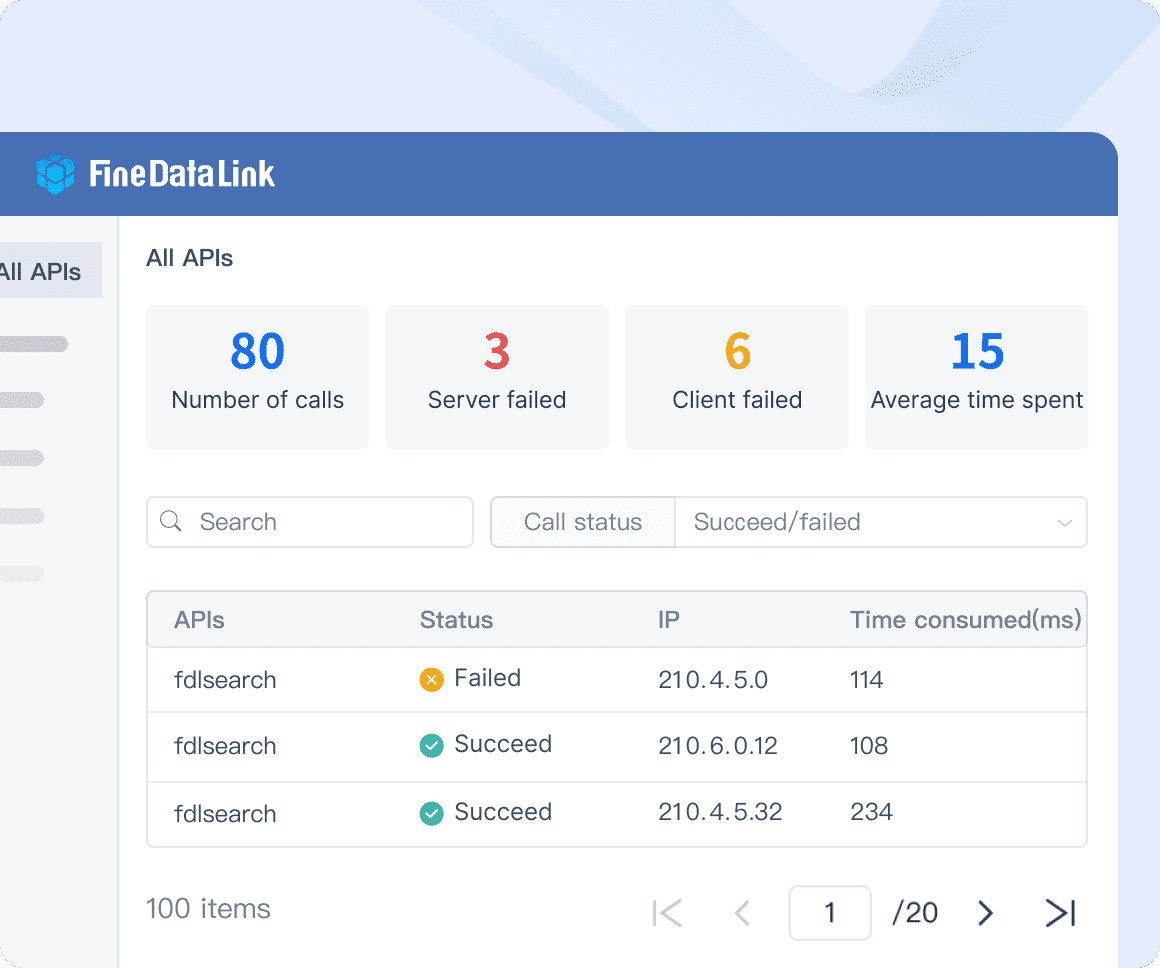

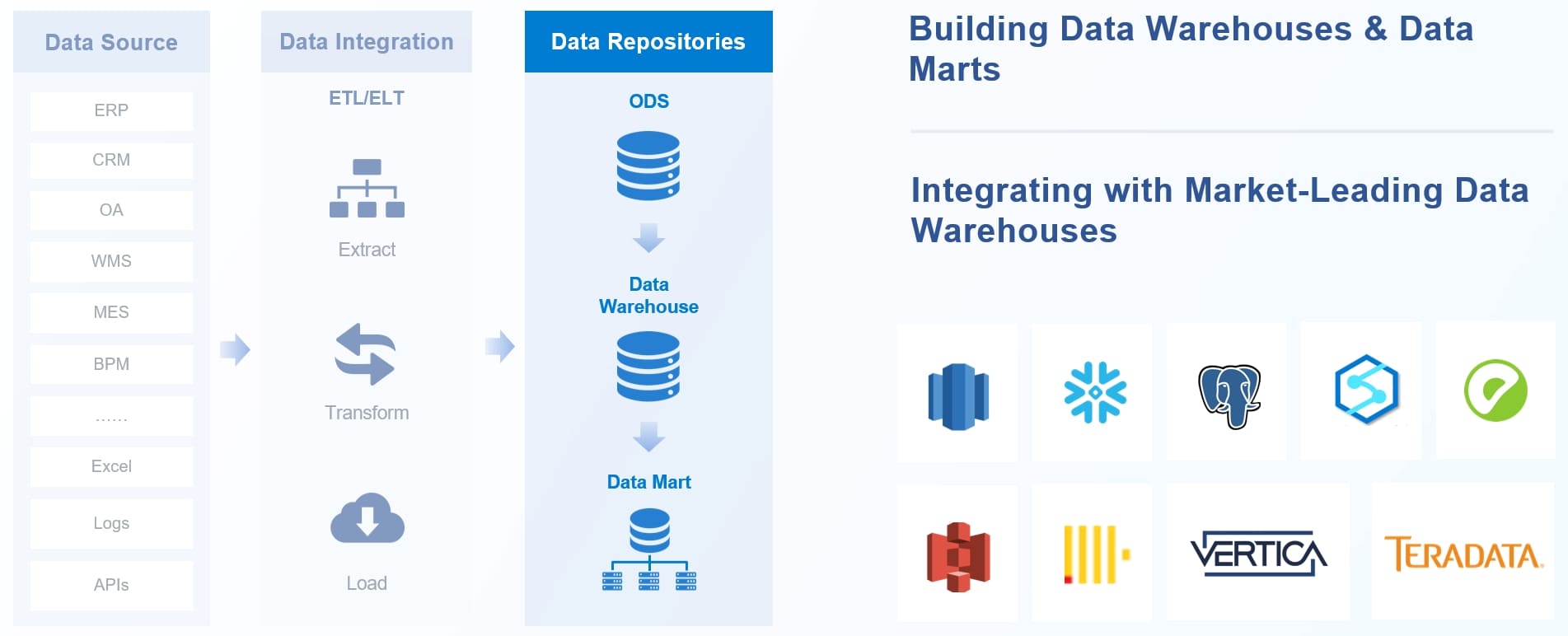

FineDataLink stands out among the best data lake tools for its low-code integration, real-time data sync, and user-friendly interface. You can connect over 100 data sources, including relational, non-relational, interface, and file databases. FineDataLink lets you synchronize data in real time across multiple tables or entire databases, so your business always has up-to-date information. You can build enterprise-level data assets using APIs, making it easy to share and connect data across your organization.

You get a visual, drag-and-drop interface that makes data integration simple, even if you don’t have a technical background. FineDataLink supports both ETL and ELT, giving you flexibility for different business scenarios. You can schedule tasks, monitor operations in real time, and reduce your operational workload. Security features like data encryption and SQL injection prevention keep your data safe.

Here’s a quick look at what FineDataLink offers:

| Feature/Capability | Description |
|---|---|
| Multi-source data collection | Supports various data sources including relational, non-relational, interface, and file databases. |
| Non-intrusive real-time synchronization | Synchronizes data in multiple tables or entire databases, ensuring timely business data. |
| Low-cost data service construction | Constructs enterprise-level data assets using APIs for interconnection and sharing. |
| Efficient operation and maintenance | Allows flexible task scheduling and real-time monitoring, reducing operational workload. |
| High extensibility | Built-in Spark SQL enables calling of scripts like Shell scripts. |
| Efficient data development | Dual-core engine (ELT and ETL) provides customized solutions for various business scenarios. |
| Five data synchronization methods | Offers methods based on timestamp, trigger, full-table comparison, full-table increment, and log parsing. |
| Security | Supports data encryption and SQL injection prevention rules. |
| Process-oriented low-code platform | User-friendly design enhances development efficiency. |

FineDataLink works well for companies that need to integrate data from many sources, build real-time or offline data warehouses, and improve data management and governance. Many organizations report a 50-70% reduction in data storage costs and a 30% decrease in time-to-insight after implementing FineDataLink data lake services.
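Of the five synchronization methods listed in the table above, the timestamp-based one is the easiest to picture. The Python sketch below shows the general technique using the standard-library `sqlite3` module; it is not FineDataLink's implementation, and the `customers` table, its columns, and the `incremental_sync` helper are all hypothetical. Each run copies only the rows whose `updated_at` is newer than the last recorded watermark, then advances the watermark.

```python
import sqlite3

DDL = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"


def incremental_sync(source, target, last_synced_at):
    """Copy rows changed after last_synced_at and return the new watermark."""
    changed = source.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_synced_at,),
    ).fetchall()
    # INSERT OR REPLACE overwrites the target row that has the same primary key.
    target.executemany(
        "INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)",
        changed,
    )
    target.commit()
    # ISO-8601 strings sort chronologically, so the last row holds the newest timestamp.
    return changed[-1][2] if changed else last_synced_at


# Two in-memory databases stand in for a source system and the data lake side.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(DDL)
target.execute(DDL)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2025-01-01T10:00:00"), (2, "Grace", "2025-01-02T09:30:00")],
)

# First run copies everything; the second run copies only the row that changed.
watermark = incremental_sync(source, target, "1970-01-01T00:00:00")
source.execute(
    "UPDATE customers SET name = 'Grace H.', updated_at = '2025-01-03T08:00:00' "
    "WHERE id = 2"
)
watermark = incremental_sync(source, target, watermark)
```

The trade-off of this approach is that it depends on a reliable `updated_at` column and does not notice deleted rows, which is one reason trigger-based and log-parsing methods exist alongside it.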

AWS Lake Formation is one of the best data lake tools for enterprises that use AWS. You can quickly set up a secure data lake, manage permissions, and share data with internal and external users. Lake Formation centralizes access control, making it easy to manage fine-grained permissions at scale. You can audit data access and monitor compliance, which is important for regulated industries.

| Advantage | Description |
|---|---|
| Manage permissions | Centralized management of fine-grained data lake access permissions using familiar features. |
| Scaled management | Simplifies security management and governance for users at scale. |
| Deeper insights | Enables quick insights from securely shared data with internal and external users. |
| Data auditing | Comprehensive monitoring of data access to help achieve compliance. |
| Govern and secure data at scale | Scales permissions by setting attributes on data and applying attribute permissions. |
| Simplify data sharing | Facilitates innovation by allowing users to find, access, and share data confidently. |
| Monitor access and improve compliance | Proactively addresses data challenges with comprehensive data-access auditing. |

AWS Lake Formation is ideal for organizations that already use AWS and want to simplify data governance, security, and sharing in their data lake.
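The attribute-based permissions described above ("setting attributes on data and applying attribute permissions") can be sketched in plain Python. This is a conceptual illustration only, not the AWS API: the `Grant` and `Table` classes, the `can_read` function, and the tag names are all hypothetical. Instead of granting access table by table, each grant names the tags a table must carry, so newly tagged tables are covered automatically.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Grant:
    principal: str
    required_tags: frozenset  # set of (key, value) pairs the table must carry


@dataclass
class Table:
    name: str
    tags: dict = field(default_factory=dict)


def can_read(principal, table, grants):
    """Allow access when any grant for the principal is fully matched by the table's tags."""
    table_tags = set(table.tags.items())
    return any(
        grant.principal == principal and grant.required_tags <= table_tags
        for grant in grants
    )


# Hypothetical grants: analysts see public sales data, auditors see all sales data.
grants = [
    Grant("analyst", frozenset({("domain", "sales"), ("sensitivity", "public")})),
    Grant("auditor", frozenset({("domain", "sales")})),
]
orders = Table("orders", {"domain": "sales", "sensitivity": "public"})
salaries = Table("salaries", {"domain": "hr", "sensitivity": "restricted"})

analyst_reads_orders = can_read("analyst", orders, grants)
analyst_reads_salaries = can_read("analyst", salaries, grants)
```

The design choice worth noting is that permissions scale with the number of tag combinations rather than the number of tables, which is what makes this style manageable at large scale.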

Microsoft Azure Data Lake is a top choice for large organizations that need scalability, integration, and security. You can store petabytes of structured, semi-structured, and unstructured data without performance issues. Azure Data Lake uses frameworks like Apache Spark and Hadoop for fast, parallel data processing. You get enterprise-grade security, including encryption and role-based access control, and compliance with global standards like GDPR and HIPAA.

| Feature | Description |
|---|---|
| Scalability | Handles petabytes of data without performance degradation, allowing seamless scaling as data grows. |
| Massive Data Storage | Supports structured, semi-structured, and unstructured data in a unified platform. |
| Parallel Processing | Utilizes frameworks like Apache Spark and Hadoop for fast data processing. |
| Strong Security | Built with enterprise-grade security, including data encryption and role-based access control. |
| Compliance | Meets global standards such as GDPR, HIPAA, and PCI DSS for data protection. |
| Seamless Integration | Integrates with Azure services like Azure Synapse Analytics and Power BI for enhanced analytics. |

Azure Data Lake works best for enterprises that need to scale quickly, integrate with other Azure services, and meet strict security and compliance requirements.

Google BigLake is one of the best data lake tools for organizations that want multi-cloud flexibility and advanced analytics. You can analyze data stored in different formats across Google Cloud, AWS, and Azure without duplicating data. BigLake integrates with BigQuery, giving you a unified access point for all your data. You get fine-grained security controls and performance enhancements for fast queries.

Google BigLake is a great fit for companies that need to manage data across multiple clouds and want to avoid data duplication.

Snowflake is a leader in the data lake market because of its innovative architecture and ease of use. You can separate storage and compute, which helps you scale efficiently and control costs. Snowflake supports structured, semi-structured, and unstructured data, making it flexible for many use cases. You get powerful data sharing features and robust security.

| Strengths of Snowflake Data Lake Platform | Limitations of Snowflake Data Lake Platform |
|---|---|
| Innovative architecture that separates storage and compute | Complexity of cost management |
| Scalability and cost efficiency | Performance issues for highly complex stored procedures |
| Supports structured, semi-structured, and unstructured data | Advanced security features only in higher-cost editions |

Snowflake is ideal for organizations that want a cloud-native, easy-to-use data lake platform with strong sharing and security features.

Snowflake's success is built upon several core strengths that differentiate it in the market: Innovative Architecture, Multi-Cloud and Cross-Cloud Capabilities, Ease of Use and Near-Zero Management, Powerful and Secure Data Sharing, Versatile Data Handling, and a Rich and Evolving Feature Set.

Databricks Delta Lake is one of the best data lake tools for real-time analytics and machine learning. You can ingest high-velocity data streams from sources like Apache Kafka and Amazon Kinesis. Delta Lake ensures data accuracy and reliability with ACID transactions and schema enforcement. You can run end-to-end analytics and AI projects in a single workspace.

Databricks Delta Lake is perfect for teams that need real-time analytics, machine learning, and unified data management.
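Schema enforcement combined with all-or-nothing writes is easier to grasp with a small example. The Python sketch below illustrates the idea only; it does not use the Delta Lake API, and `EXPECTED_SCHEMA`, `SchemaMismatch`, `validate`, and `append_batch` are hypothetical names. A batch is checked in full before anything is appended, so one bad record rejects the whole batch and the table is never left half-written.

```python
# Hypothetical table schema: column name -> required Python type.
EXPECTED_SCHEMA = {"event_id": int, "user": str, "amount": float}


class SchemaMismatch(ValueError):
    """Raised when a record does not match the table schema."""


def validate(record, schema):
    """Reject records with missing, extra, or wrongly typed columns."""
    if set(record) != set(schema):
        raise SchemaMismatch(
            f"columns {sorted(record)} do not match {sorted(schema)}"
        )
    for column, expected_type in schema.items():
        if not isinstance(record[column], expected_type):
            raise SchemaMismatch(
                f"column {column!r} expected {expected_type.__name__}"
            )


def append_batch(table, batch, schema):
    """Validate the whole batch first so the table never sees a partial write."""
    for record in batch:
        validate(record, schema)
    table.extend(batch)


table = []
append_batch(table, [{"event_id": 1, "user": "ada", "amount": 9.5}], EXPECTED_SCHEMA)

rejected = None
try:
    # The second record has a string where a float is required.
    append_batch(
        table,
        [
            {"event_id": 2, "user": "bob", "amount": 3.0},
            {"event_id": 3, "user": "eve", "amount": "oops"},
        ],
        EXPECTED_SCHEMA,
    )
except SchemaMismatch as err:
    rejected = str(err)
```

After this runs, `table` still holds only the first valid record: the valid row in the rejected batch was not written either, which is the atomicity half of the guarantee.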

Cloudera offers a fully integrated, open-source-aligned data-in-motion stack. You can use Apache NiFi for data ingestion, Apache Kafka for event streaming, and Apache Flink for real-time processing. Cloudera stores data in the Apache Iceberg open table format on low-cost, scalable storage, making it well suited to keeping streaming data for years.

Cloudera Data Lake Tools are best for organizations that need open-source flexibility, cost efficiency, and long-term data storage.

IBM Data Lake Services focus on security and compliance, making them a strong choice for regulated industries. You can use decentralized data management, so each domain manages its own regulatory needs. IBM supports data-driven compliance practices, standardized governance protocols, and domain-level privacy control.

IBM Data Lake Services are ideal for enterprises in healthcare, finance, and other regulated sectors.

Oracle Data Lake Platform is designed for large-scale enterprise deployments. You can integrate data and run ETL processes using Oracle Cloud Infrastructure Data Integration. Oracle supports real-time analytics with its streaming service and accelerates machine learning model development with AutoML.

Oracle Data Lake Platform works best for enterprises that need robust integration, real-time analytics, and industry-specific solutions.

Dremio Lakehouse Platform is one of the best data lake tools for business intelligence and low-latency data access. You can connect to disparate data sources without moving data, thanks to zero-ETL query federation. Dremio uses autonomous performance management and learns from each query to optimize performance automatically.

Dremio Lakehouse Platform is a great choice for organizations that need fast, reliable data access for analytics and reporting.
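Query federation, meaning one query that spans separate data sources without first copying the data into a single store, can be illustrated with Python's standard-library `sqlite3` module. This is a toy sketch of the concept, not Dremio's engine: the `crm` database, the `orders` and `customers` tables, and the sample rows are all hypothetical, and two in-memory SQLite databases stand in for two independent systems.

```python
import sqlite3

# "main" stands in for a sales system; "crm" is a second, separate database.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS crm")

conn.execute("CREATE TABLE main.orders (customer_id INTEGER, total REAL)")
conn.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
conn.executemany(
    "INSERT INTO main.orders VALUES (?, ?)",
    [(1, 40.0), (1, 10.0), (2, 25.0)],
)
conn.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace")],
)

# One query joins across both databases; no rows are moved into a shared table first.
revenue = conn.execute(
    """
    SELECT c.name, SUM(o.total)
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.id = o.customer_id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
```

A production federation engine adds much more (pushing filters down to each source, caching, cost-based planning), but the user-facing idea is the same: the join is expressed once, and the engine reaches into each source.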

By exploring these best data lake tools, you can find the right solution for your business needs. Each platform offers unique strengths, whether you need real-time analytics, multi-cloud support, strong security, or cost efficiency. The data lake market continues to evolve, and these data lake platforms help you stay ahead by making your data more accessible, secure, and valuable.

You might wonder what a data lake is and why it matters for your business. A data lake is a central place where you store all your raw data, whether it’s structured, semi-structured, or unstructured. You can use a data lake to collect information from many sources, making it easier to analyze and find insights.

When you compare data lake tools, you want to see how each platform stands out. Some data lake solutions focus on scalability, while others make integration simple or offer strong security. You need to pick a data lake that fits your needs, whether you want real-time data sync, cost efficiency, or advanced analytics.

Here’s a table that shows the main differences, strengths, and unique features of the top data lake tools for modern enterprises. You’ll see how FineDataLink, Amazon Lake Formation, Microsoft Azure Data Lake, Google BigLake, Snowflake, Databricks, Cloudera, IBM, Oracle, and Dremio compare:

| Platform | Scalability | Integration & AI/ML Support | Security & Governance | Cost Model | Unique Features | Best For |
|---|---|---|---|---|---|---|
| FineDataLink | High | Low-code, 100+ sources, API, ETL/ELT | Data encryption, governance | Affordable | Real-time sync, drag-and-drop, easy API | Multi-source, real-time needs |
| Amazon Lake Formation | Massive | AWS ecosystem, AI (SageMaker) | Robust, encryption | Pay-as-you-go | Simplified setup, strong security | Large AWS-based enterprises |
| Microsoft Azure Data Lake | Effortless | Azure ML, seamless integration | Enterprise-grade, compliance | Cost-effective | Parallel processing, role-based access | Enterprises needing compliance |
| Google BigLake | Massive | Multi-cloud, BigQuery, AI/ML | Fine-grained, unified | Pay-as-you-go | Unified warehouse/lake, multi-cloud | Multi-cloud, analytics focus |
| Snowflake | Elastic | ACID, cross-cloud | Secure sharing | Usage-based | Storage-compute separation | Cloud-native, sharing |
| Databricks Delta Lake | High | Streaming, ML, unified analytics | ACID, schema enforcement | Flexible | Real-time analytics, ML integration | AI, real-time analytics |
| Cloudera | Petabyte-scale | Open-source, Apache stack | Centralized governance | Flexible | Iceberg storage, streaming data | Open-source, long-term storage |
| IBM Data Lake Services | High | Decentralized, compliance | Domain-level privacy | Enterprise | Data-driven compliance, privacy control | Regulated industries |
| Oracle Data Lake | Large-scale | OCI, AutoML, streaming | Secure, industry-specific | Enterprise | Real-time analytics, AutoML | Finance, healthcare |
| Dremio Lakehouse | High | BI integration, zero-ETL | Autonomous management | Flexible | Sub-second queries, query federation | Fast BI, low-latency access |

Tip: If you want a data lake that’s easy to use and connects to many sources, FineDataLink gives you a low-code platform with real-time sync and simple API creation. You can build a data lake for business intelligence without heavy coding or complex setup.

Data lakes help you break down silos and make your data accessible for analysis. Each data lake tool offers something unique, so you can choose the one that matches your business goals.

Choosing the right data lake platform can transform how you manage and analyze your business data. A data lake is a centralized repository that stores raw data from multiple sources, making it easy for you to access, process, and use information for data analytics and reporting. When you select a data lake, you want a solution that fits your business needs, scales with your growth, and supports your team’s workflow.

You should start by understanding what a data lake does. It collects structured, semi-structured, and unstructured data in one place. This flexibility lets you break down data silos and unify information across your organization. With a strong data lake, you can onboard new data sources quickly, control access, and maintain high data quality.

Scalability is key when you choose a data lake platform. You want a solution that grows with your business and handles large volumes of data without slowing down. The table below summarizes the key strengths of several platforms and who they suit best:

| Platform | Key strengths | Ideal for |
|---|---|---|
| Databricks Lakehouse Platform | Multi-cloud execution, unified analytics, strong AI integration | Enterprises focused on data engineering and machine learning |
| Snowflake | Decoupled compute-storage, simple scaling | Teams seeking elasticity and low operational overhead |
| Microsoft Azure Synapse Analytics | Combines big data and warehousing, native governance | Organizations in the Microsoft ecosystem |
| AWS Lake Formation / Athena | Centralized access control, serverless querying | Cloud-first enterprises using AWS |

You should look for a data lake that ingests vast quantities of data, transforms it efficiently, and enables real-time analytics.

Integration and connectivity set the foundation for a successful data lake. FineDataLink excels here, offering low-code integration and support for over 100 data sources. You can connect relational, non-relational, interface, and file databases. Real-time synchronization keeps your data fresh and accessible. API-based services make it easy to share data across systems.

| Feature | Description |
|---|---|
| Multi-source data collection | Supports various data sources including relational, non-relational, interface, and file databases. |
| Real-time synchronization | Enables non-intrusive synchronization of data across multiple tables or entire databases. |
| API-based data service | Facilitates low-cost construction of enterprise-level data assets through APIs for interconnection and sharing. |

When you evaluate a data lake, check how well it integrates with your existing systems and supports future growth.

Security and compliance protect your data lake from threats and ensure you meet industry standards. You need strong access controls, encryption, and auditing features. Platforms like AWS Lake Formation and Azure Data Lake offer centralized permissions management and scalable access control. FineDataLink provides data encryption and SQL injection prevention, helping you safeguard sensitive information.

Data governance keeps your data lake organized and trustworthy. You should control who loads data, document sources, and set clear policies. Centralized permissions, scalable access control, and easy data sharing are important features. FineDataLink supports governance with automated quality checks and flexible scheduling.

| Feature | AWS Lake Formation | Azure Data Lake |
|---|---|---|
| Centralized permissions management | Utilizes AWS Glue Data Catalog for centralized management of data permissions. | N/A |
| Scalable access control | Implements attribute-based permissions that can be adjusted as user needs grow. | N/A |
| Simplified data sharing | Enables easy data sharing both within and outside the organization. | N/A |
| Data security and compliance | Offers auditing features to monitor data access and improve compliance. | Ensures data protection with enterprise-grade security features, including encryption and auditing. |
| Large-scale storage | N/A | Allows storage and analysis of petabyte-size files without artificial constraints. |
| Optimized data processing | N/A | Supports development and scaling of data transformation programs with no infrastructure management. |
| Integration with existing systems | N/A | Works with Azure Synapse Analytics, Power BI, and Data Factory. |

Cost plays a big role in your data lake decision. You should look at expenses for compute, storage, integration, streaming, analytics, business intelligence, machine learning, identity management, data catalog, and labor. FineDataLink offers affordable pricing and reduces operational workload with its user-friendly design.

| Component | Description |
|---|---|
| Dedicated compute | Costs associated with the computing resources required for data processing. |
| Storage | Expenses related to data storage solutions. |
| Data integration | Costs for integrating various data sources into the data lake. |
| Streaming | Expenses for real-time data processing capabilities. |
| Spark analytics | Costs for utilizing Spark for data processing and analytics. |
| Data lake | Direct costs associated with the data lake infrastructure. |
| Business intelligence | Costs for BI tools and analytics platforms. |
| Machine learning | Expenses related to implementing machine learning capabilities. |
| Identity management | Costs for managing user identities and access controls. |
| Data catalog | Expenses for maintaining a data catalog for data governance. |
| Labor costs | Costs for data migration, ETL integration, analytics migration, and ongoing support. |

Tip: Start by assessing your current data centers and future needs. Balance migration complexity and evaluate compatibility with your existing systems. Choose a data lake platform that matches your business goals and supports efficient data analytics.
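The cost components listed above can be rolled into a rough total cost of ownership estimate. The Python sketch below shows one simple way to do that arithmetic. Every figure in it is a made-up placeholder, not a real price from any vendor, and the component grouping and the `total_cost_of_ownership` helper are hypothetical; substitute your own quotes and time horizon.

```python
# Placeholder monthly costs in USD; replace with figures from your own quotes.
MONTHLY_COSTS_USD = {
    "dedicated_compute": 4000.0,
    "storage": 1200.0,
    "data_integration": 800.0,
    "streaming": 500.0,
    "business_intelligence": 600.0,
    "identity_and_catalog": 300.0,
}

# Placeholder one-time labor cost for migration and ETL integration.
ONE_TIME_LABOR_USD = 50000.0


def total_cost_of_ownership(monthly_costs, one_time_labor, months):
    """Recurring costs over the period plus one-time labor."""
    return sum(monthly_costs.values()) * months + one_time_labor


three_year_tco = total_cost_of_ownership(MONTHLY_COSTS_USD, ONE_TIME_LABOR_USD, 36)

# The largest recurring component is usually the first place to look for savings.
largest_component = max(MONTHLY_COSTS_USD, key=MONTHLY_COSTS_USD.get)
```

With these placeholder inputs, recurring costs are 7,400 per month, so the three-year estimate is 7,400 × 36 + 50,000 = 316,400. Running the same calculation per candidate platform gives you a like-for-like basis for comparison.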

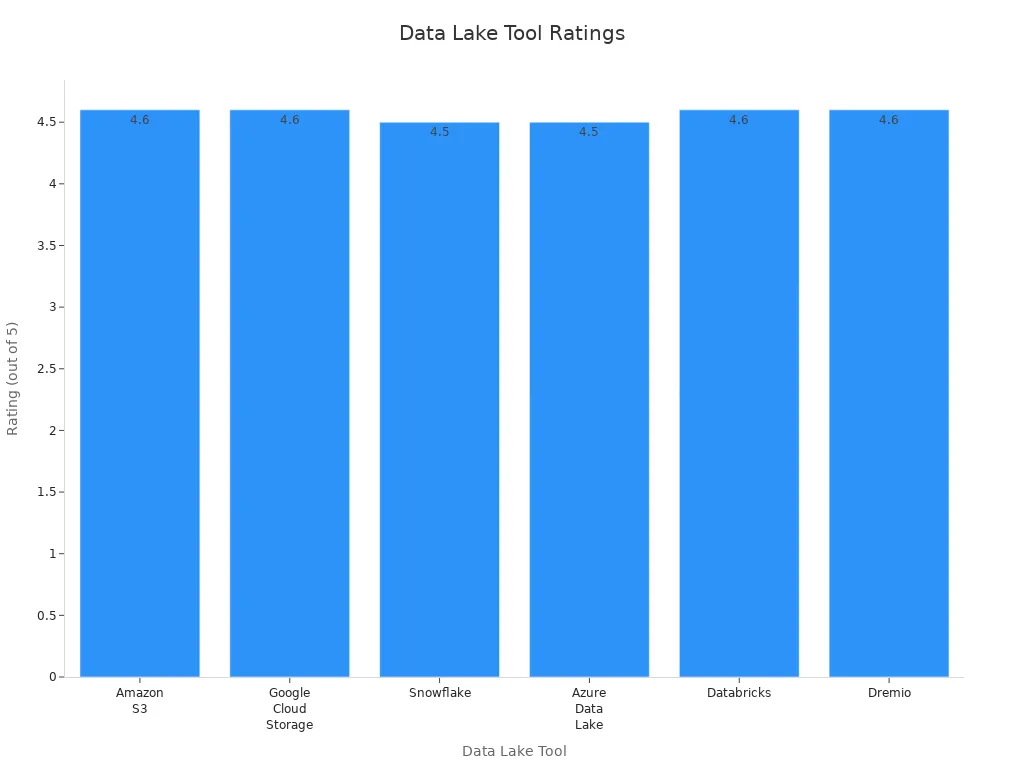

A data lake gives you one place to store all your raw data, making it easy to manage and analyze. You can use a data lake to break down silos and support business intelligence. The right data lake tool depends on your needs. For small teams, Dremio or Google Cloud Storage work well. Medium businesses often choose Snowflake or Azure Data Lake. Large enterprises prefer Databricks or Amazon S3. Check the table below for ratings, pros, and cons:

| Data Lake Tool | Rating | Pros | Cons |
|---|---|---|---|
| Amazon S3 | 4.6 | Security, scalability, ease of use | Confusing billing |
| Google Cloud Storage | 4.6 | Documentation, price, integration | Costly support |
| Snowflake | 4.5 | Architecture, security, integration | Vague pricing |
| Azure Data Lake | 4.5 | Cost, security, integration | Setup complexity |
| Databricks | 4.6 | ACID, fast processing | High cost |
| Dremio | 4.6 | Management, low TCO, SQL engine | No legacy source support |

Use the comparison guide to pick the best data lake for your company. Try a demo or free trial, like the one from FineDataLink, to see what fits your workflow.

The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
