Data source setup in enterprise systems involves connecting and configuring various data locations so you can manage, analyze, and migrate information efficiently. You need to approach this process with careful planning to ensure your data remains secure and reliable. Security measures and validation steps protect your valuable data assets and support your data engineering goals.

Low-code platforms like FineDataLink now play a leading role in enterprise environments. According to industry research, 87% of enterprise developers use low-code platforms for at least some of their development work, and Gartner predicted that 70% of new enterprise applications would use no-code or low-code technology by 2025. The table below shows how quickly enterprises are adopting these solutions:

| Evidence | Description |
|---|---|
| Forrester's Market Projection | The low-code market could reach $50 billion by 2028. |
| Enterprise Developer Adoption | 87% of enterprise developers use low-code platforms for some development work. |
| Gartner's Application Prediction | 70% of new enterprise applications will utilize no-code/low-code by 2025. |
| Large Enterprise Tool Usage | 75% of large enterprises are expected to use at least four low-code tools by 2024. |

You can simplify your data source setup by choosing a modern tool that supports your data integration and migration needs.

You need to start your planning and preparation by defining clear objectives for your data source setup. Setting specific goals helps you align your enterprise data architecture with business needs. Many enterprises aim to achieve several outcomes when integrating new data sources. These objectives often include:

You should consider how each objective supports your overall data engineering strategy. Planning and preparation at this stage ensures your data acquisition process will deliver value and support a scalable data architecture.

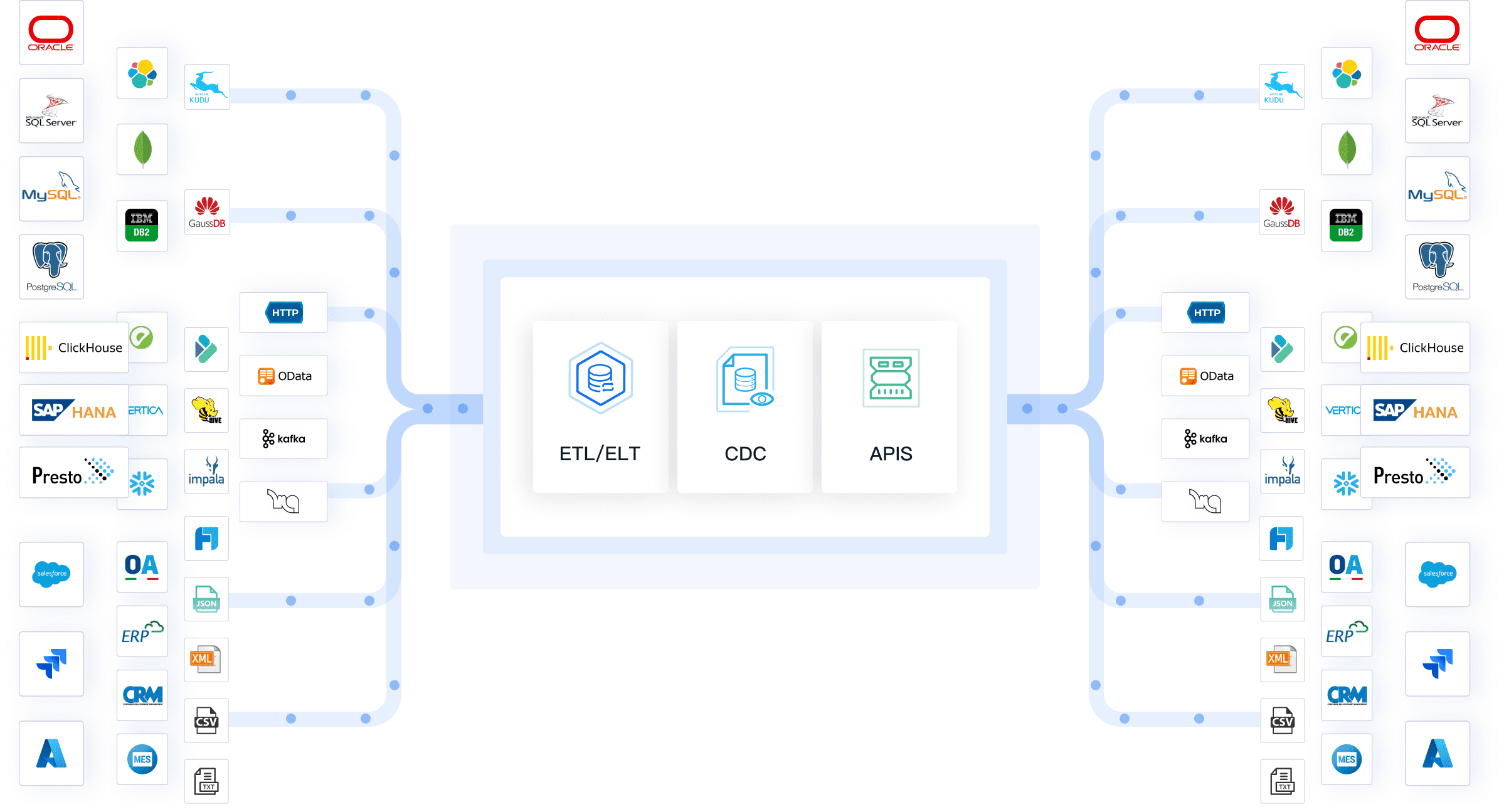

After you define your objectives, you need to select the right data sources for your enterprise. The most frequently integrated data sources in large organizations include:

When you identify which data sources to integrate, you should evaluate several key factors. The table below outlines what enterprises consider most important during planning and preparation:

| Factor | Description |

|---|---|

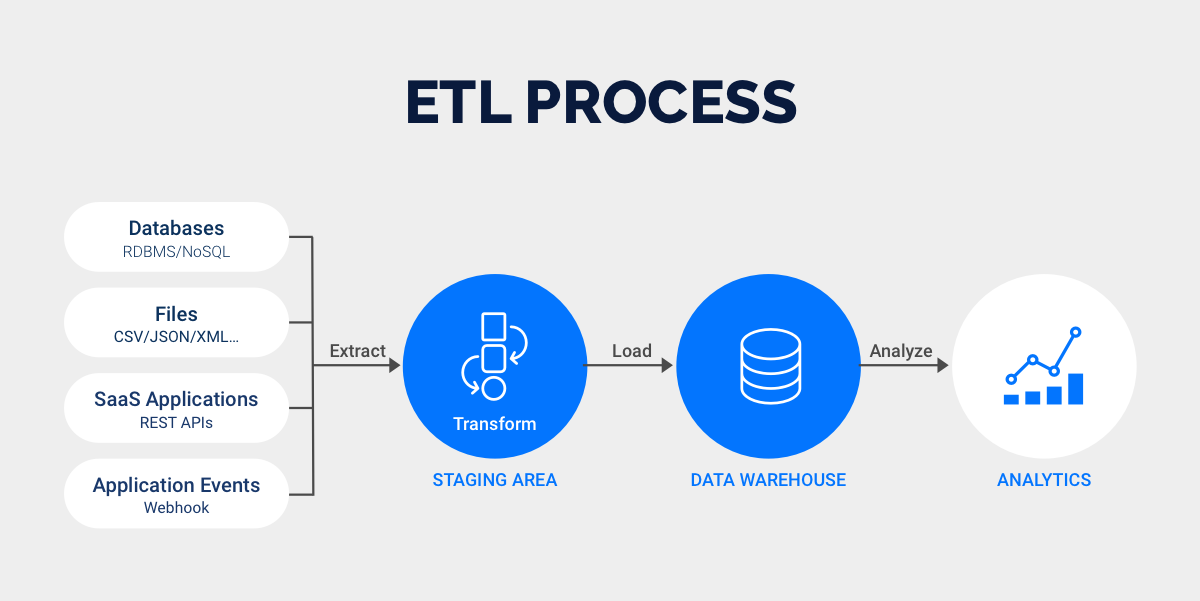

| Integration Methods | Various methods like ETL, ELT, Real-Time Integration, and API-based Integration are considered. |

| Data Formats and Compatibility | Support for diverse data formats such as relational databases, flat files, and unstructured data. |

| Scalability and Performance | Ability to handle growing data volumes with considerations for throughput, latency, and elasticity. |

| Security and Compliance | Features like data encryption, RBAC, audit trails, and compliance with regulations like GDPR/CCPA. |

| Cost and Licensing Models | Total cost of ownership including licensing, hardware, maintenance, and support costs. |

| Vendor Expertise and Support | Importance of vendor reputation, technical support availability, and training resources. |

You should follow a structured approach to planning and preparation for data source setup. The recommended steps are:

By following these steps, you can build a robust foundation for your enterprise data architecture and support future data-driven initiatives.

You need to start your enterprise data migration by checking system requirements. This step ensures your data migration process will run smoothly. Review the hardware and software specifications for both the source and target systems. Confirm that your infrastructure can handle the volume and complexity of your data. Assess network bandwidth and storage capacity to avoid bottlenecks during migration. Evaluate compatibility between your current systems and the new environment. A comprehensive migration plan should include a checklist for all technical prerequisites. This planning step helps you identify potential issues early and reduces the risk of delays.
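
Part of this check is simple arithmetic: will the data volume fit through the network within the cutover window? The sketch below is a back-of-the-envelope estimate, assuming decimal units (1 GB = 10^9 bytes, 1 Mbps = 10^6 bits/s) and a hypothetical `efficiency` factor for protocol overhead and contention; the 0.7 default is a guess, not a measured value.

```python
# Back-of-the-envelope transfer-time check for a migration window.
# Assumptions: decimal units and an "efficiency" factor (fraction of nominal
# bandwidth actually achieved). Replace the inputs with measured values.


def estimate_transfer_hours(data_gb: float, bandwidth_mbps: float,
                            efficiency: float = 0.7) -> float:
    """Return the estimated hours needed to move data_gb over the link."""
    if data_gb < 0 or bandwidth_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("need data_gb >= 0, bandwidth_mbps > 0, 0 < efficiency <= 1")
    total_bits = data_gb * 1e9 * 8
    effective_bits_per_s = bandwidth_mbps * 1e6 * efficiency
    return total_bits / effective_bits_per_s / 3600


def fits_window(data_gb: float, bandwidth_mbps: float, window_hours: float,
                efficiency: float = 0.7) -> bool:
    """True if the estimated transfer fits inside the planned cutover window."""
    return estimate_transfer_hours(data_gb, bandwidth_mbps, efficiency) <= window_hours


if __name__ == "__main__":
    hours = estimate_transfer_hours(900, 1000, efficiency=0.5)
    print(f"Estimated transfer time: {hours:.1f} h")
    print("Fits a 3-hour window:", fits_window(900, 1000, 3, efficiency=0.5))
```

With 900 GB over a 1 Gbps link at 50% efficiency, the estimate is 4.0 hours, so a 3-hour window would not be enough; a dry run should confirm the real throughput.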

Involve business stakeholders in this phase. Their insights help you understand how data supports daily operations. Excluding them can lead to errors and inefficiencies. You should also use this opportunity to address messy or outdated data. Many organizations hesitate to clean their data, but enterprise data migration offers a chance to improve data quality and streamline management.

Gathering credentials is a critical part of enterprise data migration. You need secure access to all data sources involved in the migration. Collect usernames, passwords, API keys, and any other authentication details required for both source and destination systems. Store these credentials securely and limit access to authorized personnel only.
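
One common way to keep credentials out of scripts and configuration files is to read them from environment variables (populated by a secrets manager or the job scheduler) and fail early if any are missing. The sketch below assumes a hypothetical naming convention, `<PREFIX>_USER` and `<PREFIX>_PASSWORD`; it is an illustration, not how FineDataLink stores credentials.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping, Optional


@dataclass(frozen=True)
class Credentials:
    username: str
    # repr=False keeps the secret out of log lines and tracebacks that print the object.
    password: str = field(repr=False)


def load_credentials(prefix: str, env: Optional[Mapping[str, str]] = None) -> Credentials:
    """Read <PREFIX>_USER and <PREFIX>_PASSWORD from the environment (or a given mapping)."""
    env = os.environ if env is None else env
    names = (f"{prefix}_USER", f"{prefix}_PASSWORD")
    missing = [name for name in names if not env.get(name)]
    if missing:
        # Fail before any migration job starts; report names only, never values.
        raise KeyError(f"missing credential variables: {', '.join(missing)}")
    return Credentials(username=env[names[0]], password=env[names[1]])
```

Passing an explicit mapping instead of `os.environ` makes the loader easy to test without touching the real environment.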

Common challenges at this stage include inaccurate or incomplete data transfer and data quality issues. Inaccurate transfers can cause reporting errors and data gaps, and low-quality data, such as outdated or duplicate entries, can degrade system performance and accuracy. Credentials play a part here: an expired password or a revoked API key can cause a job to skip a source or stop partway through, so verify every credential before the migration starts. Develop a clear credential-management strategy that includes evaluating secrets-management tools and training stakeholders. When you sunset old systems, manage their legacy credentials responsibly as well.

By following these steps, you set a strong foundation for successful enterprise data migration. Careful planning and attention to detail support your data engineering goals and ensure a smooth transition.

Setting up a data source for enterprise data migration requires careful planning and attention to detail. You need to ensure that your integration architecture supports both current and future business needs. FineDataLink offers a streamlined approach to configuring data sources, making the migration process efficient and reliable.

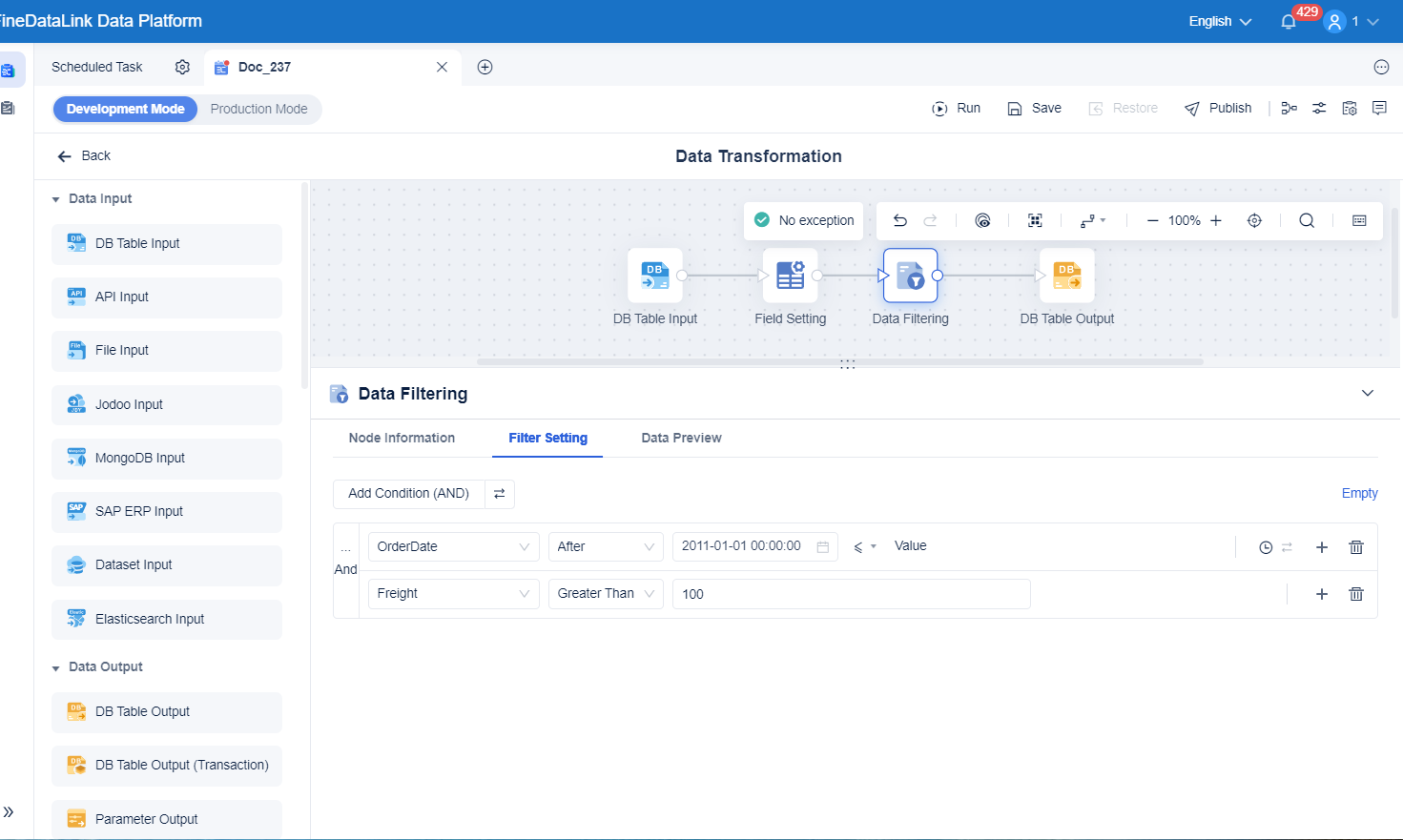

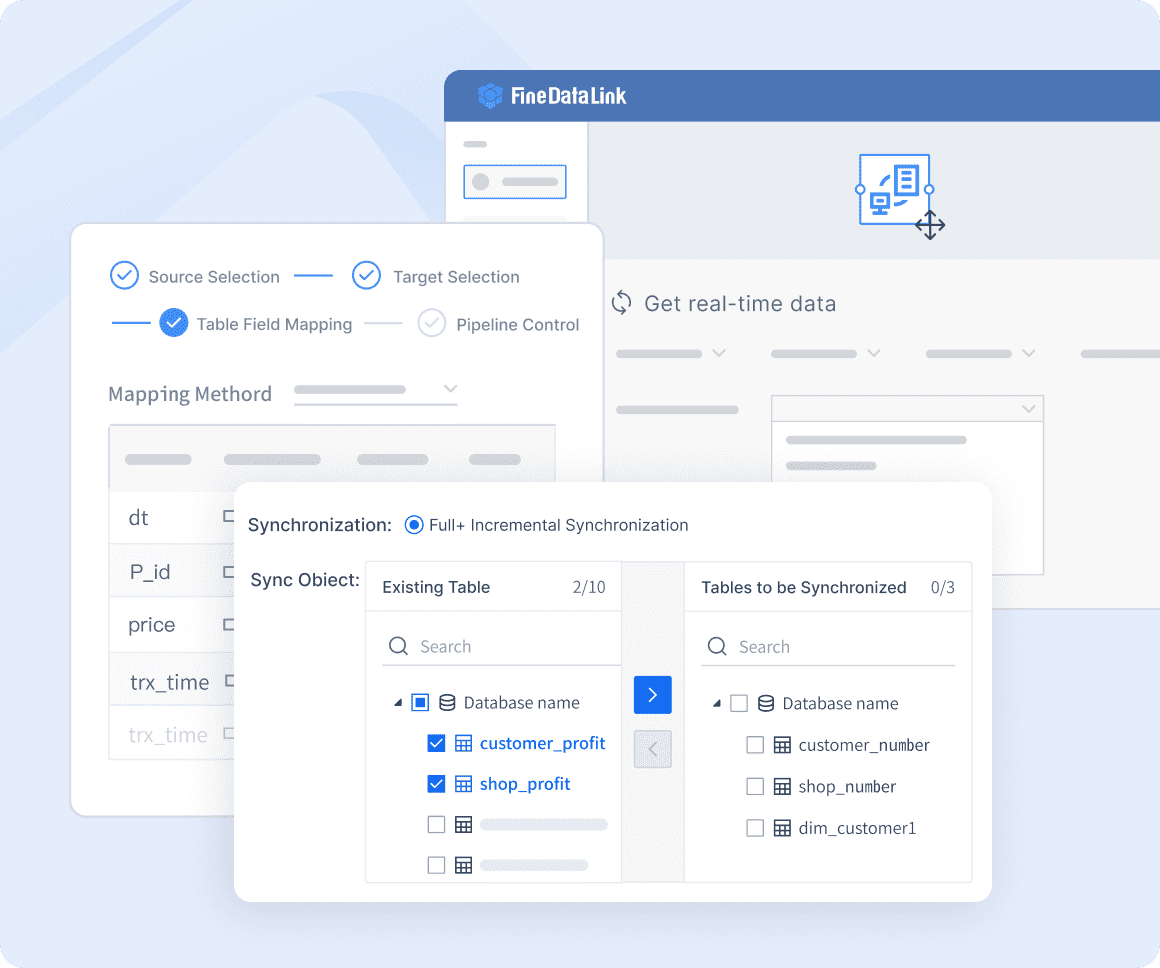

You begin by selecting the appropriate connector for your data source. FineDataLink provides a visual, low-code interface that simplifies this step. You see a list of available connectors, which cover a wide range of databases, SaaS applications, and cloud environments. This flexibility allows you to connect to almost any system in your enterprise.

Tip: Review your planning documents before selecting a connector. Confirm that the connector matches your data engineering requirements and supports seamless data migration.

After you select the connector, you enter the connection details for your data source. You provide information such as server address, database name, authentication credentials, and port number. FineDataLink’s interface guides you through each field, ensuring that you supply all necessary data for a successful connection.

You need to verify the accuracy of each entry. Incorrect details can cause migration failures or data loss. FineDataLink includes validation checks that alert you to missing or incorrect information. This step supports data integrity and reduces the risk of errors during enterprise data migration.
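
FineDataLink runs these checks inside its own interface. To illustrate the kind of pre-flight validation involved, here is a minimal sketch with a hypothetical `ConnectionConfig` type whose fields mirror the details listed above; real connectors usually require more fields and stricter rules.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConnectionConfig:
    # Hypothetical field set mirroring the connection details described above.
    host: str
    port: int
    database: str
    username: str


def validate_config(cfg: ConnectionConfig) -> List[str]:
    """Return human-readable problems; an empty list means the form looks complete."""
    problems: List[str] = []
    if not cfg.host.strip():
        problems.append("host is required")
    # bool is a subclass of int, so exclude it explicitly.
    if isinstance(cfg.port, bool) or not isinstance(cfg.port, int) or not 1 <= cfg.port <= 65535:
        problems.append("port must be an integer between 1 and 65535")
    if not cfg.database.strip():
        problems.append("database name is required")
    if not cfg.username.strip():
        problems.append("username is required")
    return problems
```

Collecting every problem instead of stopping at the first lets the user fix the whole form in one pass.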

Note: Store your credentials securely and restrict access to authorized users. This practice protects sensitive data and supports compliance with enterprise security policies.

Testing the data source is a critical step in the migration process. FineDataLink allows you to run connection tests directly from the interface. You receive immediate feedback on the status of your connection. If the test passes, you can proceed with migration. If the test fails, you review the error messages and adjust your settings.
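
Outside a platform UI, the most basic connection test is checking whether the host and port accept a TCP connection at all. The sketch below uses only Python's standard `socket` module; the host name in the usage line is a placeholder. It checks network reachability only and does not authenticate or run a query, so a driver-level test is still needed afterwards.

```python
import socket
from typing import Tuple


def check_reachable(host: str, port: int, timeout: float = 3.0) -> Tuple[bool, str]:
    """Try to open a TCP connection; return (ok, message).

    Proves only that host:port accepts connections. It does not log in or
    query anything, so credentials and permissions remain untested.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, f"{host}:{port} is reachable"
    except OSError as exc:  # covers refused connections, DNS failures and timeouts
        return False, f"{host}:{port} unreachable ({type(exc).__name__}: {exc})"


if __name__ == "__main__":
    ok, message = check_reachable("db.internal.example", 5432)  # placeholder host
    print(message)
```

A "connection refused" error usually points at the port or a stopped service, while a timeout more often points at a firewall or routing problem.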

Testing confirms that your integration architecture is ready for data migration. You verify that data flows correctly between systems, which reduces the risk of unexpected issues during the enterprise data migration. FineDataLink's real-time synchronization capabilities let you preview data movement and check that records transfer accurately.

Testing before migration helps you avoid downtime and data inconsistencies. You maintain control over the process and ensure that your data engineering goals are met.

FineDataLink stands out among enterprise data integration platforms. The following table highlights key features that support efficient data source setup and migration:

| Feature | FineDataLink Description |
|---|---|
| Low-Code Interface | Provides a user-friendly low-code interface for ease of use by data engineers and developers. |
| Real-Time ETL/ELT | Supports real-time data integration with both ETL and ELT capabilities. |
| Over 100 Connectors | Offers connectivity to over 100 data sources, enhancing integration flexibility. |
| Real-Time Sync | Enables real-time data synchronization across multiple tables with minimal delay. |
| Hybrid and Multi-Cloud Support | Facilitates integration across various cloud services, providing control and automation. |
| Dual-Core Engine | Allows users to choose between ETL and ELT processes for different integration tasks. |
| Automation and Scheduling | Automates tasks and allows for scheduling of data workflows, improving efficiency. |
| Strong Governance and Quality | Ensures high data quality and governance standards, crucial for enterprise applications. |
| Scalability and Performance | Designed to scale effectively, handling large volumes of data with high performance. |

FineDataLink streamlines seamless data migration by automating complex tasks and supporting real-time data synchronization. You benefit from a robust integration architecture that adapts to changing business needs. The platform’s low-code approach makes it accessible to both technical and non-technical users, reducing the barriers to successful enterprise data migration.

You complete your data source setup with confidence, knowing that FineDataLink supports your planning, data engineering, and migration objectives. The platform’s advanced features help you maintain data quality, improve operational efficiency, and achieve reliable integration across your enterprise.

Securing and monitoring your data source setup in enterprise systems is essential for maintaining data integrity and supporting successful migration. You need to protect your data from external attacks, insider threats, and application vulnerabilities. Careful planning and ongoing monitoring help you prevent data loss and ensure compliance with industry regulations.

You must set permissions to control who can access your data sources. Involving your security team during planning helps you identify risks and integrate protection measures from the start. The most effective strategies for permission-setting include:

| Strategy | Description |
|---|---|
| Unified Permissions Platform | Establishes a single entry point for all clients, enforcing robust permissions. |
| Pre-filtering | Applies access controls during queries to retrieve only relevant data. |
| Post-filtering | Refines access after retrieval using finer-grained checks such as relationship-based access control (ReBAC) and attribute-based access control (ABAC). |
| Real-time Auditing | Maintains compliance with regulations through detailed audit logs. |
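
The difference between pre-filtering and post-filtering can be shown with a small in-memory SQLite example. This is a sketch under hypothetical assumptions: an `orders` table, a user restricted to one region (enforced in the query), and an attribute-based approval-limit rule (enforced after retrieval). It does not represent any specific permissions product.

```python
import sqlite3
from typing import List, Tuple

Row = Tuple[int, str, float]


def fetch_orders_prefiltered(conn: sqlite3.Connection, user_region: str) -> List[Row]:
    # Pre-filtering: the access rule is part of the query itself, so rows the
    # user may not see are never read from the database.
    return conn.execute(
        "SELECT id, region, amount FROM orders WHERE region = ? ORDER BY id",
        (user_region,),
    ).fetchall()


def post_filter(rows: List[Row], approval_limit: float) -> List[Row]:
    # Post-filtering: an attribute-based check applied after retrieval.
    # Hypothetical rule: hide orders above the user's approval limit.
    return [row for row in rows if row[2] <= approval_limit]


def build_demo_db() -> sqlite3.Connection:
    """Throwaway in-memory database with three sample orders."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "EU", 100.0), (2, "US", 250.0), (3, "EU", 5000.0)],
    )
    return conn


if __name__ == "__main__":
    conn = build_demo_db()
    rows = fetch_orders_prefiltered(conn, "EU")        # orders 1 and 3
    print(post_filter(rows, approval_limit=1000.0))    # order 1 only
```

Pre-filtering is cheaper because the database does the work, while post-filtering can express rules that are hard to write in SQL; many systems combine both.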

You should also conduct regular reviews of access controls and educate employees on security best practices. These steps help prevent data breaches and support compliance.

Encryption protects your data during migration and in storage. Encrypt data in transit (for example, with TLS) and at rest, and manage keys and cryptographic policies from a centralized console so that only authorized users can access sensitive information. Regulatory requirements vary by sector and region: US healthcare organizations must comply with HIPAA, financial services firms must follow banking regulations, and organizations that handle the personal data of people in the EU must meet GDPR requirements. Ongoing monitoring and regular reviews help you maintain compliance and improve your data protection strategies.

Monitoring data flow is vital for maintaining data integrity throughout migration. Data integration, observability, and monitoring tools such as DataBuck, Integrate.io, Fivetran, Hevo, Stitch, Gravity Data, Splunk, Mozart Data, Monte Carlo, and Datadog offer capabilities such as real-time visibility, automated alerts, and dashboards. Alongside monitoring, conduct regular vulnerability assessments and deploy threat detection tools. Continuous monitoring lets you troubleshoot issues quickly and keep your data engineering goals on track.
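
Whatever tool you choose, automated alerts usually boil down to comparing pipeline metrics against thresholds. The sketch below is tool-agnostic and assumes a hypothetical `BatchMetrics` record per batch and a 60-second latency threshold; it is not the API of any product named above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BatchMetrics:
    # Hypothetical per-batch metrics a pipeline might emit.
    pipeline: str
    rows_read: int
    rows_written: int
    latency_s: float


def check_batch(m: BatchMetrics, max_latency_s: float = 60.0) -> List[str]:
    """Return alert messages for one batch; an empty list means no alert."""
    alerts: List[str] = []
    if m.rows_written != m.rows_read:
        # Rows were dropped or duplicated between source and target.
        alerts.append(f"{m.pipeline}: wrote {m.rows_written} of {m.rows_read} rows")
    if m.latency_s > max_latency_s:
        alerts.append(f"{m.pipeline}: latency {m.latency_s:.1f}s exceeds {max_latency_s:.1f}s")
    return alerts


if __name__ == "__main__":
    for alert in check_batch(BatchMetrics("crm_to_warehouse", 1000, 990, 75.0)):
        print("ALERT:", alert)
```

In practice, the returned messages would be routed to email, chat, or an incident tool rather than printed.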

By securing and monitoring your data source setup in enterprise systems, you protect your data, maintain compliance, and support reliable migration. These practices help you build a strong foundation for future data-driven initiatives.

You may encounter challenges during the data source setup in enterprise systems, especially when you begin the enterprise data migration. Connection issues can disrupt the migration process and affect the reliability of your integration architecture. You need to identify and resolve these problems quickly to maintain a comprehensive migration plan and achieve successful data migration.

The most common integration challenges in enterprise environments include data silos, compatibility problems, security breaches, performance bottlenecks, and organizational resistance to change. You can address these challenges using proven strategies. The table below summarizes typical issues and their resolutions:

| Connection Issue | Resolution |
|---|---|
| Data Silos | Implement a unified data management strategy and invest in integration platforms for data sharing. |
| Compatibility Problems | Adopt standardization practices and use middleware solutions for better integration. |
| Security Breaches | Implement robust security measures like encryption and regular security audits. |
| Performance Bottlenecks | Design scalable integration strategies and utilize cloud-based platforms. |
| Resistance to Change | Develop a change management strategy with training and communication for employees. |

You should also watch for the most frequent causes of migration failure: poor data quality, regulatory compliance risks, downtime during cutover, and cost overruns. Following a comprehensive migration plan and paying close attention to detail helps you prevent them.

FineDataLink helps you resolve connection issues by providing real-time monitoring and alert features. You receive instant notifications about errors or disruptions in your integration architecture. You can use these tools to troubleshoot problems and maintain control over the migration process.
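
Alerts tell you that a connection failed; for transient failures such as dropped connections or timeouts, a widely used complementary pattern is retrying with exponential backoff and jitter. The sketch below is a generic illustration, not a FineDataLink feature. It retries Python's built-in `ConnectionError` and `TimeoutError` by default; real database drivers raise their own exception types, so `retry_on` would need adjusting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    operation: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(), retrying transient failures with exponential backoff and jitter."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == attempts:
                raise  # give up and surface the last error to monitoring/alerting
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Randomizing the delay keeps many clients from retrying in lockstep.
            sleep(delay * random.uniform(0.5, 1.0))
    raise ValueError("attempts must be at least 1")
```

Retries only help with transient faults; wrong credentials or a misconfigured host will fail on every attempt and should surface as an alert immediately.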

Tip: Always test your data source connections before starting enterprise data migration. Use FineDataLink's built-in validation tools to identify and fix issues early.

Validating seamless data migration is essential for ensuring that your data remains accurate, complete, and secure after integration. You need to confirm that every record from the source appears in the target system and that relationships between data elements remain intact. A comprehensive migration plan should include clear validation steps to support successful data migration.

You can use several key metrics to validate the migration process. The table below outlines important metrics and how you can test them:

| Metric | Definition | Example | Testing Method |
|---|---|---|---|
| Data Completeness | Every record from the source must exist in the target after migration. | If the source has 1,000,000 records, the target must have the same. | Count checks, reconciliation scripts, automated tools. |
| Data Integrity | Relationships and dependencies between data must remain intact. | An invoice linked to a customer ID must maintain that link post-migration. | Referential integrity checks, constraint validation. |
| Data Accuracy | Data values should remain consistent after transformation. | Dates must convert correctly from DD/MM/YYYY to YYYY-MM-DD. | Field-to-field mapping validation, sampling comparisons. |
| Performance | Migration must complete within a defined time without resource overload. | A retail company must migrate before holiday sales without extended outages. | Load testing, stress testing, dry-run simulations. |
| Security | Sensitive data must be protected during migration. | Patient data must remain encrypted to comply with HIPAA. | Security audits, encryption verification, penetration testing. |
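
The completeness and integrity checks in the table can be scripted in a few lines of SQL. The sketch below uses in-memory SQLite databases with hypothetical `customers` and `invoices` tables to stand in for the source and target; against real systems you would run the same queries through each system's own driver.

```python
import sqlite3
from typing import Dict, List, Sequence, Tuple


def build_db(customers: Sequence[int], invoices: Sequence[Tuple[int, int]]) -> sqlite3.Connection:
    """Create a throwaway in-memory database with customers and invoices."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY)")
    conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, customer_id INTEGER)")
    conn.executemany("INSERT INTO customers VALUES (?)", [(c,) for c in customers])
    conn.executemany("INSERT INTO invoices VALUES (?, ?)", invoices)
    return conn


def reconcile(source: sqlite3.Connection, target: sqlite3.Connection,
              table: str, key: str) -> Dict[str, object]:
    """Completeness check: compare row counts and primary keys.

    table and key are interpolated into SQL, so they must come from trusted
    configuration, never from user input.
    """
    count_sql = f"SELECT COUNT(*) FROM {table}"
    key_sql = f"SELECT {key} FROM {table}"
    src_keys = {row[0] for row in source.execute(key_sql)}
    tgt_keys = {row[0] for row in target.execute(key_sql)}
    return {
        "source_count": source.execute(count_sql).fetchone()[0],
        "target_count": target.execute(count_sql).fetchone()[0],
        "missing_in_target": sorted(src_keys - tgt_keys),
        "unexpected_in_target": sorted(tgt_keys - src_keys),
    }


def orphaned_invoices(conn: sqlite3.Connection) -> List[int]:
    """Integrity check: invoices whose customer_id has no matching customer."""
    rows = conn.execute(
        "SELECT i.id FROM invoices AS i "
        "LEFT JOIN customers AS c ON c.id = i.customer_id "
        "WHERE c.id IS NULL ORDER BY i.id"
    )
    return [row[0] for row in rows]


if __name__ == "__main__":
    source = build_db([1, 2], [(10, 1), (11, 2), (12, 2)])
    target = build_db([1], [(10, 1), (11, 2)])  # customer 2 and invoice 12 were dropped
    print(reconcile(source, target, "invoices", "id"))
    print("Orphaned invoices in target:", orphaned_invoices(target))
```

In this sample, reconciliation reports invoice 12 as missing, and the integrity check flags invoice 11, whose customer no longer exists in the target. Matching counts alone would not have caught the second problem.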

You can validate the integrity of migrated data using several methods:

Establishing a quality assurance process after migration calls for rigorous testing to validate the migrated data and confirm its accuracy.

Begin by developing a comprehensive QA plan that outlines the methods and tools you will use to assess the migrated data against the original source.
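
For the accuracy checks in such a plan, one approach is field-to-field mapping validation: apply the expected transformation to each source value and compare the result with what landed in the target, optionally on a reproducible random sample. The sketch below assumes records are plain dictionaries and uses the DD/MM/YYYY to YYYY-MM-DD date conversion from the metrics table as the transformation under test; the field names are hypothetical.

```python
import random
from datetime import datetime
from typing import Any, Callable, Dict, List, Optional, Tuple

Record = Dict[str, Any]


def ddmmyyyy_to_iso(value: str) -> str:
    """The transformation under test: DD/MM/YYYY -> YYYY-MM-DD."""
    return datetime.strptime(value, "%d/%m/%Y").strftime("%Y-%m-%d")


def compare_fields(source_rows: List[Record], target_rows: List[Record], key: str,
                   field: str, transform: Callable[[Any], Any],
                   sample_size: Optional[int] = None,
                   seed: int = 0) -> List[Tuple[Any, Any, Any]]:
    """Return (key, expected, actual) for every mismatched row.

    With sample_size set, only a reproducible random sample of source rows is checked.
    """
    target_by_key = {row[key]: row for row in target_rows}
    rows = list(source_rows)
    if sample_size is not None and sample_size < len(rows):
        rows = random.Random(seed).sample(rows, sample_size)
    mismatches = []
    for row in rows:
        expected = transform(row[field])
        actual = target_by_key.get(row[key], {}).get(field)  # None if the row is missing
        if actual != expected:
            mismatches.append((row[key], expected, actual))
    return mismatches
```

Checking every row is the safest option for small tables; for very large ones, a fixed-seed sample keeps the check fast while remaining repeatable between runs.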

FineDataLink supports ongoing validation with advanced monitoring and alert features. You can track data flow in real time, receive automated notifications about discrepancies, and access detailed dashboards for analysis. These tools help you maintain high data quality and ensure that your integration architecture supports seamless data migration.

A real-world example demonstrates the value of these practices. NTT DATA Taiwan partnered with FanRuan to implement a unified data platform using ETL processes. The company integrated backend systems such as ERP, POS, and CRM, then visualized the data for decision-making. By following a comprehensive migration plan and using FineDataLink's integration architecture, NTT DATA Taiwan achieved successful data migration. The organization validated data completeness, integrity, and accuracy, enabling employees to perform self-service analysis and make informed decisions. This approach improved operational efficiency and supported sustainable growth.

You can achieve similar results by following best practices for troubleshooting and validation. Use FineDataLink’s features to monitor your migration process, validate your data, and support successful data migration in your enterprise. Careful planning and attention to detail help you build a reliable data source setup in enterprise systems and support future data-driven initiatives.

You can achieve seamless data migration by following a clear process: planning, selecting the right tools, and validating each step. FineDataLink helps you save costs, reduce maintenance, and improve data security, as shown below:

| Benefit | Description |
|---|---|
| Cost Savings | Data migration can lead to significant cost savings by eliminating the need to maintain outdated systems. |
| Enhanced Data Security | Migrating data to secure platforms enhances data security and reduces the risk of breaches. |
| Better Data Integration | Facilitates the integration of data from various sources into a unified system. |

To ensure ongoing success, you should establish data governance, monitor data quality, and invest in scalable solutions. Modern tools like FineDataLink boost productivity and support your enterprise as data needs grow.


The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
