Enterprise data architecture serves as the blueprint for managing organizational data assets in a data-driven world. This architecture enables enterprises to simplify, standardize, and optimize data management. Effective data architecture supports fact-based decision-making and aligns the organization for better business outcomes. With a solid foundation, organizations can unlock the full value of data, improve data analytics, and ensure scalability as their needs evolve. A formal data architecture plan helps guide informed decisions and enhances overall performance.

Enterprise data architecture consists of several foundational elements that enable organizations to manage, secure, and leverage their data assets effectively. These components work together to create a robust framework for data management, supporting business intelligence, analytics, and operational efficiency.

Data governance forms the backbone of any successful data architecture. Organizations establish clear policies, rules, and standards to ensure consistency, compliance, and accountability in data management. Data governance frameworks typically include a centralized Data Governance Office, data stewards, and a cross-functional Data Council. These groups oversee the creation and enforcement of policies, manage data quality, and set the strategic direction for data initiatives.

| Component | Description |
|---|---|
| Data Governance Office (DGO) | Centralized team responsible for creating policies, standards, and managing program execution. |
| Data Stewards | Individuals managing and ensuring data quality in their specific areas. |
| Data Council | Cross-functional team determining the strategic direction of the data governance program. |

Organizations focus on accountability, transparency, consistency, and integrity to build trust and reliability in their data assets. Security and compliance remain critical, especially when handling sensitive information. Data stewardship and metadata management help maintain high data quality and support continuous improvement. Common challenges include balancing data access with security controls, optimizing data quality, managing compliance, and overcoming cultural resistance to change.

Tip: Regular reviews and enhancements of governance processes help organizations adapt to evolving regulatory requirements and business needs.

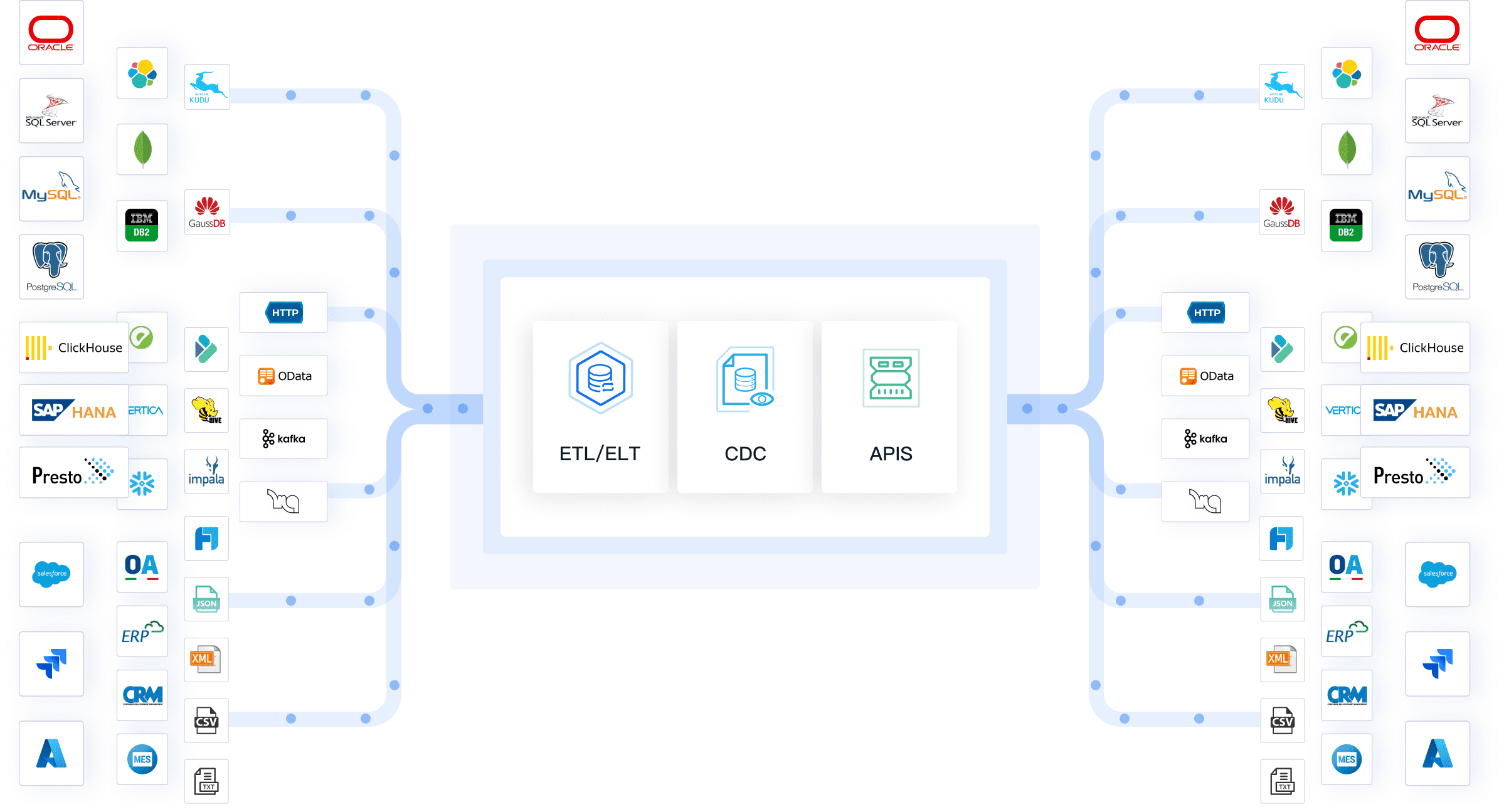

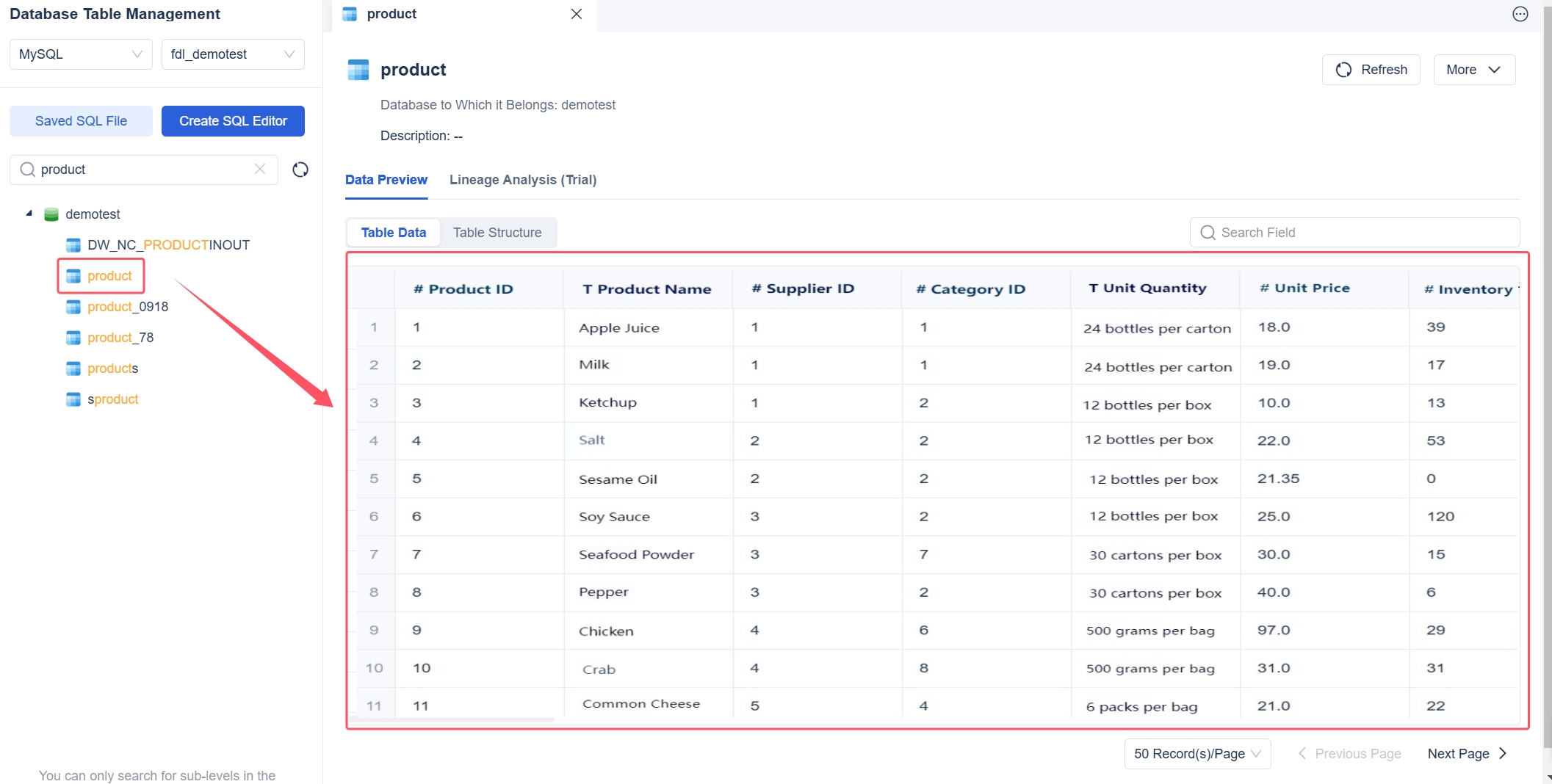

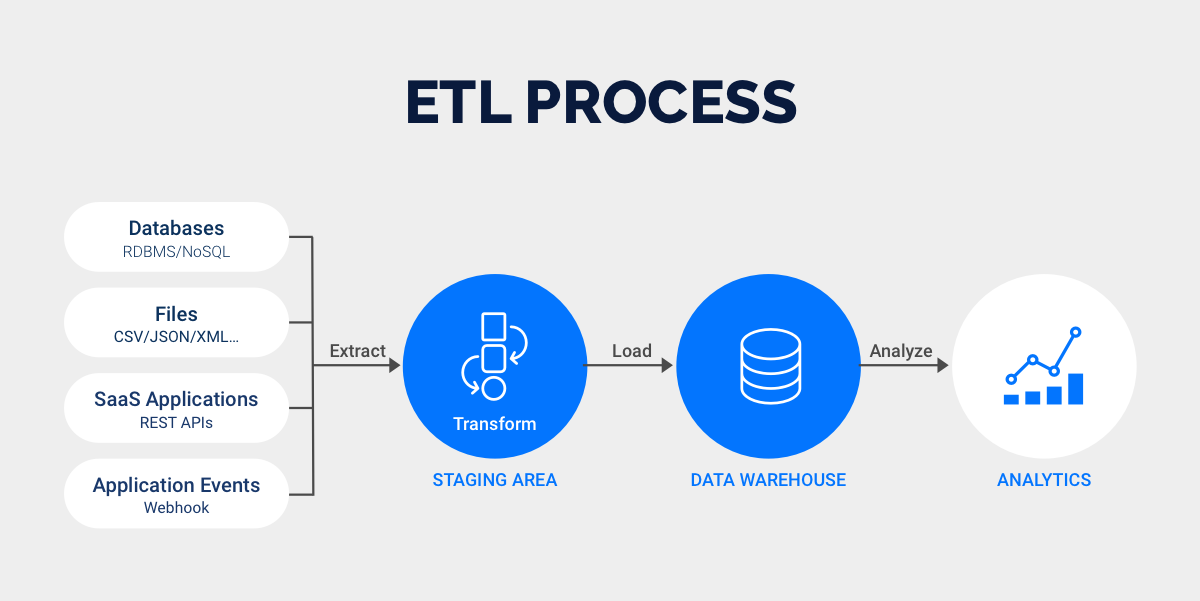

Data integration is a core pillar of enterprise data architecture. It enables organizations to combine data from multiple sources, creating a unified view for analysis and decision-making. Traditional data integration methods often involve manual processes, which can be slow and error-prone. Modern platforms like FineDataLink address these challenges by providing efficient, scalable, and automated solutions.

FineDataLink offers a low-code platform that simplifies complex data integration tasks. Its visual interface allows users to design data pipelines using drag-and-drop functionality. The platform supports real-time data synchronization, advanced ETL and ELT operations, and seamless connectivity to over 100 data sources, including databases, APIs, and files. FineDataLink’s scheduled task module enables efficient data extraction, transformation, and loading, while its flexible source and target selection feature allows integration across heterogeneous environments.

| Benefit | FineDataLink | Traditional Methods |
|---|---|---|
| Efficiency | Enhanced efficiency in integration | Often slower and more manual |
| Real-time Synchronization | Seamless real-time data synchronization | Delayed data updates |
| Data Quality | Improved data quality through ETL/ELT | Prone to errors and inconsistencies |

Organizations using FineDataLink experience measurable improvements in efficiency, data quality, and real-time synchronization. The platform’s data cleaning and conversion capabilities ensure that integrated data is accurate and ready for analysis. By reducing manual intervention and automating workflows, FineDataLink helps enterprises build a high-quality data layer for business intelligence and analytics.
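To make the extract, transform, and load pattern concrete, the following is a minimal, generic sketch in plain Python. It does not use FineDataLink's interface or API; the sample data, the `customers` table, and the function names are all assumptions chosen for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this could be a file, an API response, or a query result.
RAW_CSV = """customer_id,email,signup_date
1, Alice@Example.com ,2024-01-05
2,bob@example.com,2024-02-10
2,bob@example.com,2024-02-10
3,,2024-03-01
"""


def extract(text):
    """Extract: read CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize emails, drop rows without an email, skip repeated ids."""
    seen = set()
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        customer_id = int(row["customer_id"])
        if not email or customer_id in seen:
            continue
        seen.add(customer_id)
        cleaned.append((customer_id, email, row["signup_date"]))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into a target table (illustrative schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT customer_id, email FROM customers ORDER BY customer_id"
).fetchall()
print(loaded)  # [(1, 'alice@example.com'), (2, 'bob@example.com')]
```

The duplicate row and the row with a missing email never reach the target table, which is the kind of cleaning step an integration platform typically automates.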

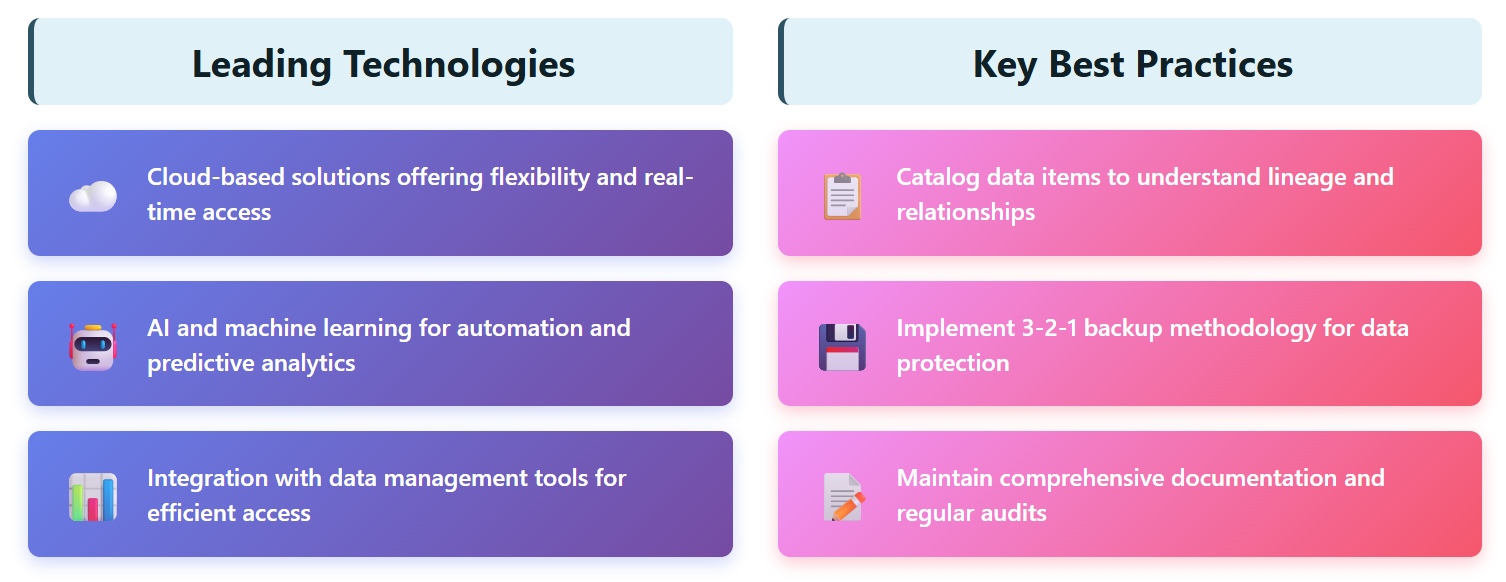

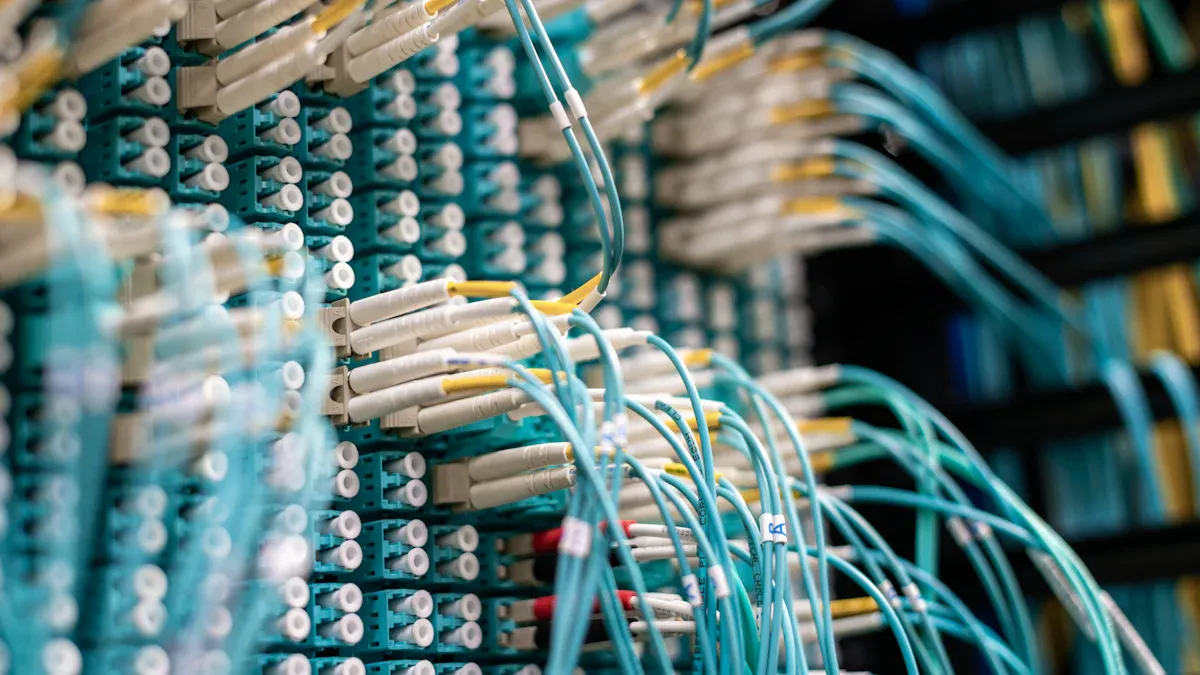

Data storage and management are essential components of enterprise data architecture. Organizations must handle diverse data types and volumes, ensuring scalability, reliability, and security. Leading technologies include cloud-based solutions, which offer flexibility and real-time access, as well as AI and machine learning for automation and predictive analytics.

Key practices in data storage and management include cataloging data items to understand lineage, implementing the 3-2-1 backup methodology, and maintaining comprehensive documentation. Enterprises foster a data-driven culture by conducting regular audits and enforcing robust management processes. Scalability is achieved through horizontal and vertical scaling, allowing organizations to expand capacity and performance as data demands grow.
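The 3-2-1 rule is commonly stated as keeping at least three copies of the data, on at least two different types of media, with at least one copy offsite. A backup plan can be checked against that rule with a few lines of Python; the `BackupCopy` type and the example plan below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    name: str
    media: str      # e.g. "disk", "tape", "object-storage" (illustrative labels)
    offsite: bool


def satisfies_3_2_1(copies):
    """True if there are >= 3 copies, on >= 2 media types, with >= 1 copy offsite."""
    media_types = {c.media for c in copies}
    return (
        len(copies) >= 3
        and len(media_types) >= 2
        and any(c.offsite for c in copies)
    )


# Hypothetical plan: the primary copy counts as one of the three.
plan = [
    BackupCopy("primary", "disk", offsite=False),
    BackupCopy("local-nas", "disk", offsite=False),
    BackupCopy("cloud-archive", "object-storage", offsite=True),
]
print(satisfies_3_2_1(plan))      # True
print(satisfies_3_2_1(plan[:2]))  # False: only two copies, one media type, none offsite
```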

Enterprise data storage solutions integrate with data management tools to provide efficient access and manipulation of both structured and unstructured data. These systems ensure data integrity, support compliance requirements, and enable organizations to leverage their data assets for strategic advantage.

Data security and privacy stand as critical pillars in enterprise data architecture. Organizations must protect sensitive information and comply with a growing list of regulations. Security teams play a vital role in the early stages of application development, ensuring that protection measures become part of the architecture from the start. This proactive approach helps identify risks before they escalate.

| Strategy | Description |
|---|---|
| Early Security Involvement | Security teams participate in development to integrate protection measures from the beginning. |
| Compliance with Regulations | Regular risk assessments align data management with laws such as GDPR and HIPAA. |
| Data Lifecycle Management | Security measures cover every stage, from creation to deletion. |
| Access Controls | Multi-factor authentication and strict access controls prevent unauthorized access. |
| Encryption Solutions | Encryption and regular software updates protect stored data. |
| Employee Training | Ongoing training ensures staff understand best practices in data security. |

Organizations must also comply with a range of regulatory requirements. These include GDPR for personal data protection in the EU, CCPA for consumer privacy in California, HIPAA for healthcare data in the U.S., PCI DSS for payment card security, SOX for financial reporting, FISMA for U.S. federal information systems, PIPEDA for private-sector privacy in Canada, and ISO/IEC standards such as ISO/IEC 27001 for information security management. Failure to comply can result in significant penalties: in 2023, the Irish Data Protection Commission fined Meta €1.2 billion (about $1.3 billion) for unlawful transfers of EU personal data to the United States.

Note: Regular employee training and updated access controls help maintain a secure environment and reduce the risk of breaches.

Data architecture must integrate these strategies and regulations to ensure robust data privacy and security. Effective architecture not only protects data but also builds trust with customers and stakeholders.
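The access-control strategy described above can be sketched as a role-based check that additionally requires multi-factor authentication for sensitive actions. This is only an illustration: the roles, permission strings, and the `is_allowed` helper are assumptions, not part of any specific product.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "steward": {"read:sales", "write:sales"},
}

# Hypothetical set of permissions that also require a verified MFA session.
SENSITIVE_PERMISSIONS = {"write:sales"}


@dataclass
class User:
    name: str
    role: str
    mfa_verified: bool


def is_allowed(user, permission):
    """Grant access only if the role holds the permission and, for sensitive ones, MFA is verified."""
    granted = permission in ROLE_PERMISSIONS.get(user.role, set())
    if permission in SENSITIVE_PERMISSIONS:
        return granted and user.mfa_verified
    return granted


analyst = User("ana", "analyst", mfa_verified=True)
steward_no_mfa = User("sam", "steward", mfa_verified=False)
steward_mfa = User("sam", "steward", mfa_verified=True)

print(is_allowed(analyst, "read:sales"))          # True
print(is_allowed(analyst, "write:sales"))         # False: role lacks the permission
print(is_allowed(steward_no_mfa, "write:sales"))  # False: MFA not verified
print(is_allowed(steward_mfa, "write:sales"))     # True
```

Unknown roles fall through to an empty permission set, so access is denied by default.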

High data quality forms the foundation of effective data architecture. Accurate, complete, and timely data supports reliable analytics and informed decision-making. Organizations use several metrics to assess and improve data quality:

| Metric | Description |
|---|---|
| Data accuracy | Data reflects real-world events and values correctly. |
| Data completeness | All necessary records are present, with no missing values. |
| Data consistency | Data remains standardized and coherent across the organization. |
| Data timeliness | Data stays current, preventing decisions based on outdated information. |
| Data uniqueness | Data contains no duplicates or redundancies. |
| Data validity | Data meets business rules and format standards. |

Organizations track these metrics to monitor data quality over time. High-quality data enables better business outcomes and supports advanced use cases such as artificial intelligence. When data quality falls short, organizations face serious consequences. Poor data quality can cause revenue loss, reduce operational efficiency, and increase compliance risks. Employees may spend valuable time correcting errors, which leads to higher costs and lost productivity. Inconsistent data can also damage a company’s reputation and erode customer trust.
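Several of these metrics reduce to simple ratios that can be tracked over time. The sketch below computes completeness, uniqueness, validity, and timeliness for a small in-memory dataset; the records, field names, and the deliberately simplistic email rule are illustrative assumptions, and accuracy and consistency are omitted because they need an external reference to compare against.

```python
from datetime import date

# Hypothetical records; field names are illustrative only.
records = [
    {"id": 1, "email": "a@example.com", "updated": date(2025, 1, 10)},
    {"id": 2, "email": None,            "updated": date(2025, 1, 12)},
    {"id": 2, "email": "b@example.com", "updated": date(2024, 6, 1)},
    {"id": 3, "email": "not-an-email",  "updated": date(2025, 1, 14)},
]


def completeness(rows, field):
    """Share of rows where the field is populated."""
    return sum(r[field] is not None for r in rows) / len(rows)


def uniqueness(rows, key):
    """Share of rows that carry a distinct key value."""
    return len({r[key] for r in rows}) / len(rows)


def validity(rows, field, is_valid):
    """Share of populated values that pass a business rule."""
    present = [r[field] for r in rows if r[field] is not None]
    if not present:
        return 1.0  # nothing to validate
    return sum(is_valid(v) for v in present) / len(present)


def timeliness(rows, field, as_of, max_age_days):
    """Share of rows updated within the allowed age."""
    return sum((as_of - r[field]).days <= max_age_days for r in rows) / len(rows)


as_of = date(2025, 1, 15)
print(completeness(records, "email"))                # 0.75
print(uniqueness(records, "id"))                     # 0.75
print(validity(records, "email", lambda v: "@" in v))
print(timeliness(records, "updated", as_of, 30))     # 0.75
```

In this sample, two of the three populated emails pass the rule, so validity comes out at two thirds.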

Data quality management becomes essential for maintaining the integrity of enterprise data architecture. By investing in strong data quality practices, organizations can avoid costly mistakes and unlock the full value of their data assets.

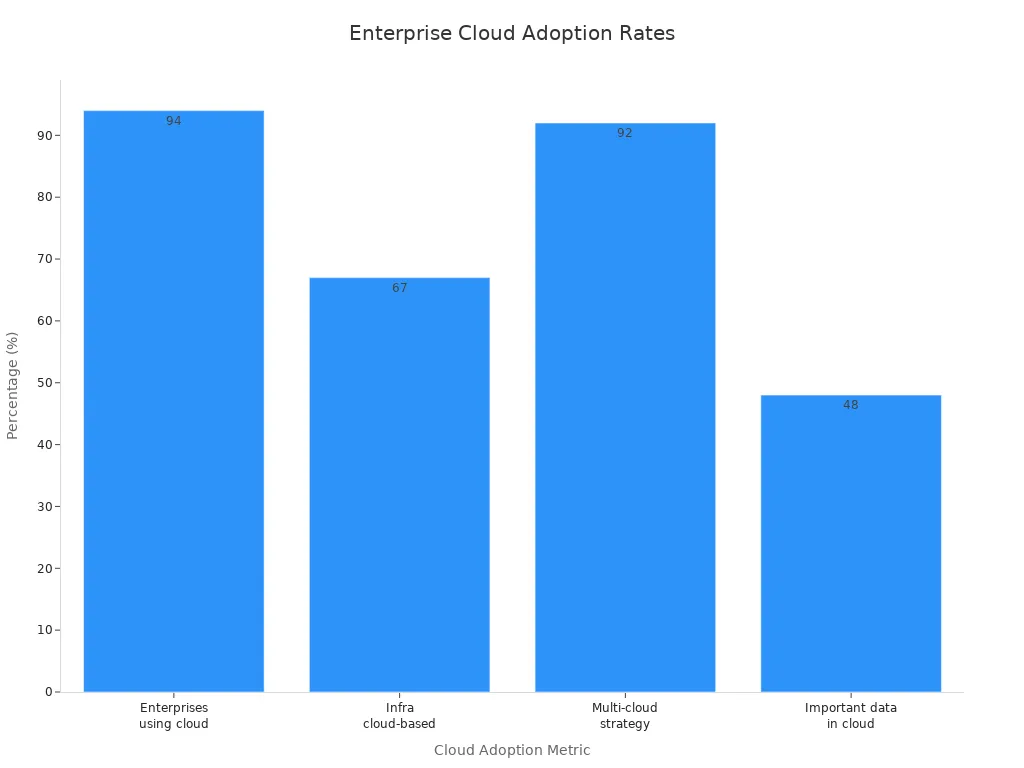

Cloud-based data architecture has become the standard for organizations seeking flexibility and scalability. Today, 94% of enterprises use cloud services, and 67% of their infrastructure is cloud-based. Multi-cloud strategies are common, with 92% of businesses adopting them, while 48% store important data in the cloud.

The main drivers for this shift include cost savings, operational efficiency, enhanced security, and the ability to leverage advanced technologies. Cloud-based data architecture offers several advantages, such as scalability, cost efficiency, and improved disaster recovery. However, organizations face challenges like security concerns, vendor lock-in, and compliance hurdles.

| Advantages | Challenges |
|---|---|
| Scalability | Security and privacy concerns |
| Cost efficiency | Vendor lock-in and lack of portability |
| Enhanced accessibility and collaboration | Compliance and regulatory hurdles |
| Access to cutting-edge technology | Managing complexity and controlling costs |
| Improved security and disaster recovery | Skills gaps and organizational change |

Data mesh and data fabric represent two innovative approaches to data architecture. Data mesh decentralizes data management, giving domain teams ownership of their data products. This approach increases accountability and speeds up access. In contrast, data fabric centralizes integration, connecting data across silos and ensuring consistent governance.

| Feature | Data Mesh | Data Fabric |
|---|---|---|
| Core Principle | Decentralized, domain-oriented data management | Centralized approach, focuses on integrating data across silos |
| Ownership | Domain teams own and manage their own data products | Centralized data team or service manages connections and integration |
| Governance Model | Domain-oriented governance | Centralized governance with policy-based controls |
| Data Access | Direct access to domain-specific data through APIs | Provides a virtualized data access layer across systems |

A multinational bank unified risk management data using data fabric, reducing data retrieval time by 40% and improving fraud detection by 25%. Organizations like JPMorgan Chase have adopted data mesh to speed up access and reduce bottlenecks. Both architectures aim to improve data quality and decision-making.

AI and automation are transforming data architecture by creating dynamic, efficient, and data-driven systems. These technologies optimize processes and enhance decision-making. Key applications include predictive analytics, process automation, and real-time decision support.

| Benefit | Description |
|---|---|
| Cost Reduction | Companies using AI in their data stack reduced engineering labor costs by up to 25% through automation. |
| Improved Efficiency | AI speeds up data pipeline development, allowing teams to move from prototype to production faster. |
| Enhanced Data Quality | AI automatically flags inconsistencies and ensures compliance, leading to cleaner datasets. |
| Real-Time Decision-Making | AI enables near-instant responses based on fresh data, improving operational efficiency. |

AI-driven workflows handle repetitive tasks, increase productivity, and allow employees to focus on strategic activities. In supply chain management, AI forecasts inventory needs, preventing overstock or stockouts. As organizations adopt AI and automation, they build architectures that support real-time data streaming and distributed development, including data lakehouse environments.

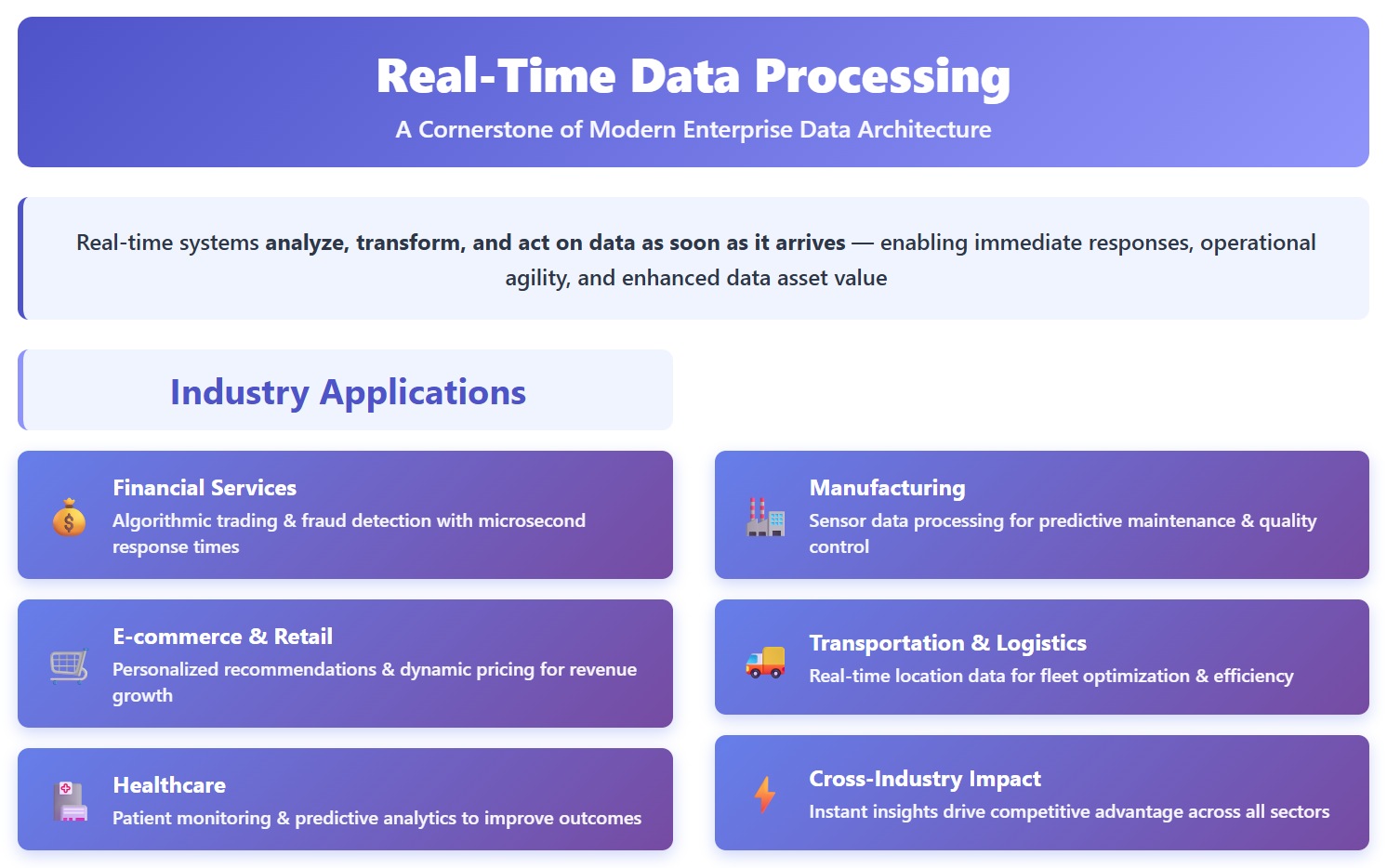

Real-time data processing has become a cornerstone of modern enterprise data architecture. Organizations rely on real-time systems to analyze, transform, and act on data as soon as it arrives. This approach enables immediate responses to business events, supports operational agility, and enhances the value of data assets.

Many industries have adopted real-time data processing to address specific challenges and opportunities:

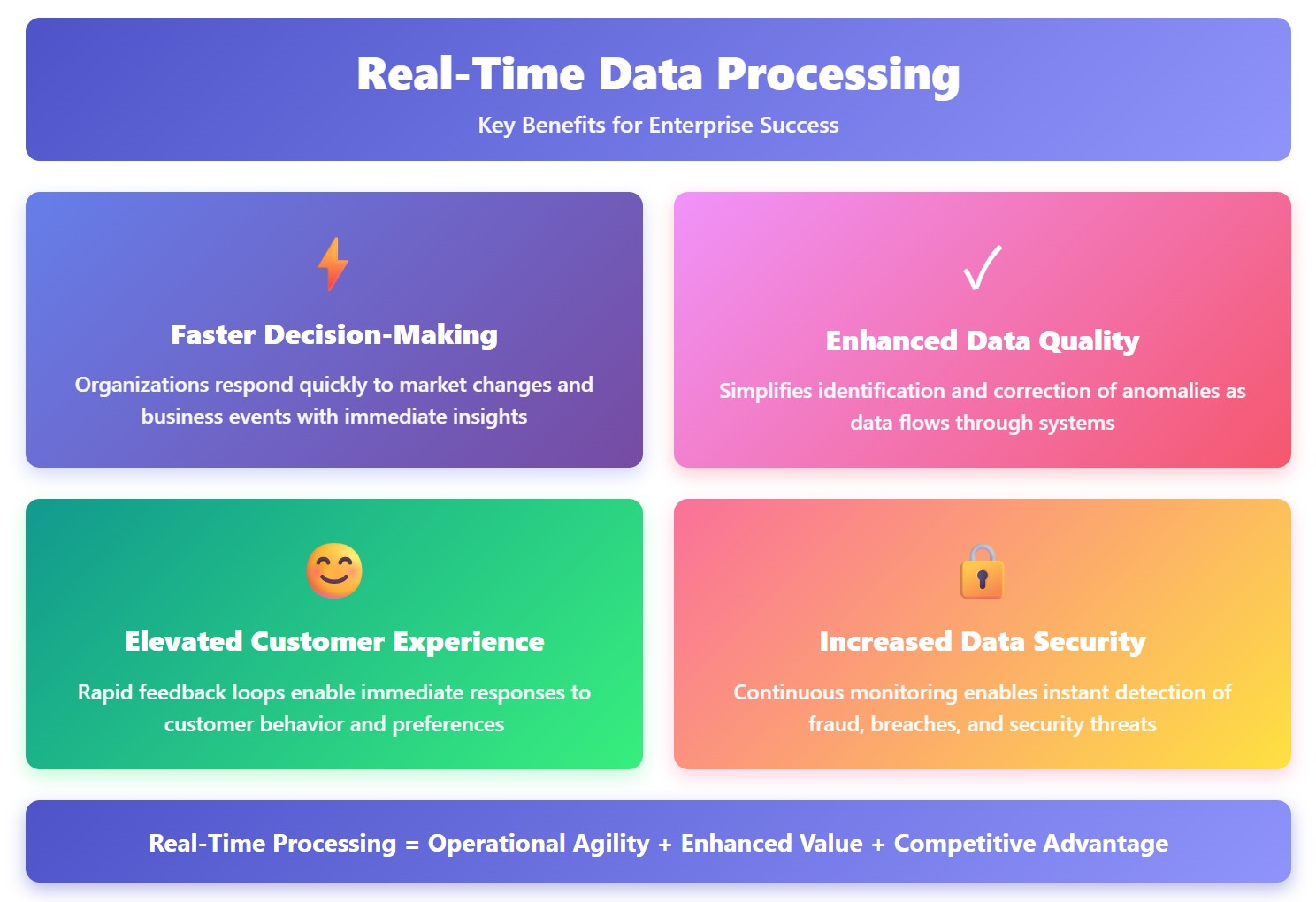

Real-time data processing offers several key benefits:

Organizations measure the return on investment for real-time data processing by evaluating speed to insight, decision quality, and business outcomes.

| Dimension | Description |
|---|---|
| Speed to Insight | Measures how quickly business users can obtain answers to critical questions. |
| Decision Quality | Assesses the accuracy, completeness, and trustworthiness of the answers provided. |
| Business Outcomes | Evaluates whether improved insights lead to measurable revenue growth, cost reduction, or competitive advantage. |

Successful implementations focus on revenue enablement and decision improvement, not just cost reduction. Tracking both technical and business metrics ensures that data solutions deliver meaningful results. Establishing baselines, monitoring indicators, and comparing alternatives help organizations accurately assess the impact of real-time data processing.

Tip: Real-time data processing strengthens enterprise data architecture by enabling immediate action, improving data quality, and supporting strategic goals.

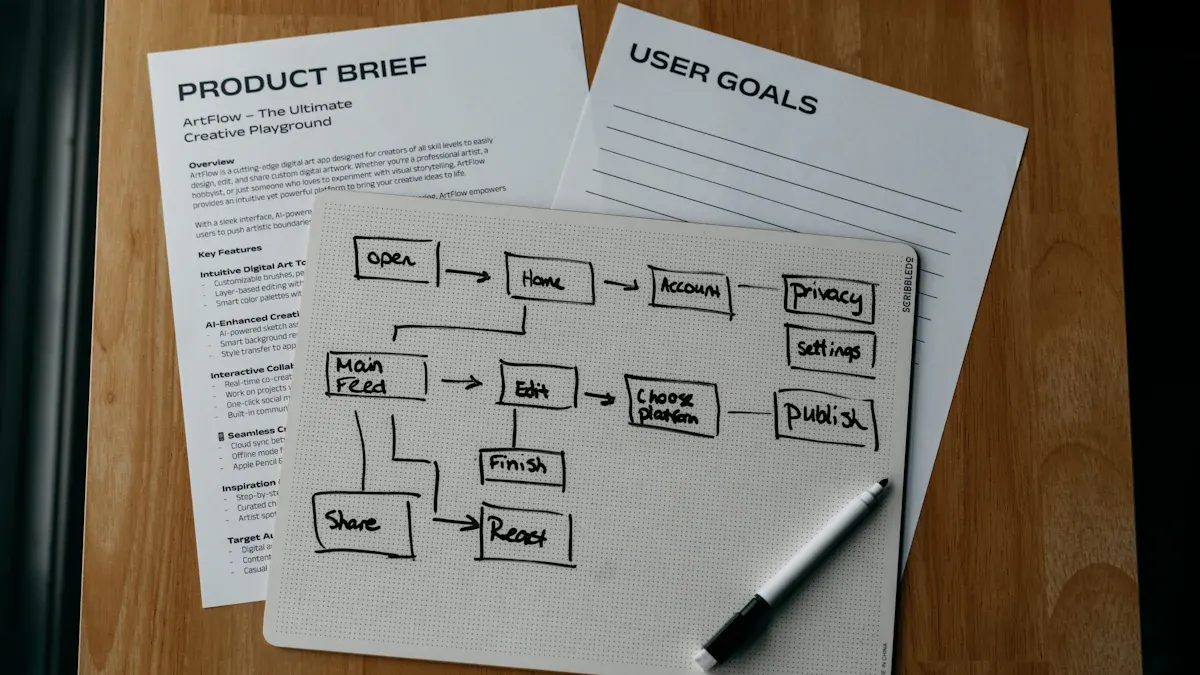

Organizations must begin their data architecture strategy by evaluating business needs and defining clear data goals. This process ensures that the architecture aligns with enterprise objectives and supports ongoing data initiatives. Teams analyze the current state of data management, identifying gaps in data quality, governance, and security. They look for opportunities to improve processes through new technologies and review existing data governance policies.

Stakeholders play a crucial role in this assessment. Leaders conduct initial stakeholder assessments to identify key individuals who influence data decisions. They prioritize assessment plans based on objectives and capabilities, maintaining flexibility to address unexpected requirements. A centralized site for documents and notes streamlines collaboration. A dedicated core team, balanced between business and IT resources, drives the assessment forward.

Organizations evaluate their data culture and employees’ access to tools and training. They determine if existing data answers all business questions, check accessibility for all users, and assess the level of detail in available data. Teams also evaluate the frequency of data updates and identify any restrictions related to data usage.

Tip: Regular reviews of data governance and data literacy help organizations adapt their data architecture to changing business needs.

Checklist for Assessing Data Needs:

After assessing business needs, organizations design a data architecture blueprint that supports enterprise goals. This blueprint provides a structured approach to managing data assets and enables scalable, flexible architecture. Teams align the blueprint with business objectives, ensuring that data architecture supports strategic priorities and ongoing data initiatives.

Industry frameworks guide the blueprint design process. DAMA-DMBOK offers best practices for data management, covering governance, quality, architecture, and security. TOGAF provides a comprehensive methodology for enterprise architecture, emphasizing collaboration and governance.

| Framework | Description |
|---|---|
| DAMA-DMBOK | Guidelines and best practices for data management, including governance, quality, architecture, and security. |
| TOGAF | Comprehensive enterprise architecture framework with methodologies for designing and governing data architectures. |

Organizations follow a structured process to align data architecture with business objectives:

Note: Using established frameworks like DAMA-DMBOK and TOGAF helps organizations create robust data architecture blueprints that support enterprise growth.

Selecting the right data integration platform is critical for successful enterprise data architecture. Organizations must choose solutions that support seamless data integration, automation, and scalability. FineDataLink stands out as a modern platform designed to address complex data integration challenges.

Key factors in selecting a data integration platform include compatibility, automation capabilities, ease of use, security, and data quality. FineDataLink offers compatibility with over 100 data sources, enabling integration across diverse systems. Its automation features streamline repetitive tasks such as data mapping and cleansing, enhancing efficiency. The platform’s user-friendly interface allows teams to manage integration without extensive technical skills.

Security remains a top priority. FineDataLink provides robust security features to protect sensitive data during integration. Data quality practices, including audits and validation rules, maintain high standards throughout the integration process.

Organizations benefit from FineDataLink’s real-time data synchronization, advanced ETL and ELT capabilities, and visual drag-and-drop interface. These features support efficient data warehouse construction, application integration, and enhanced connectivity. FineDataLink empowers enterprises to build a high-quality data layer for business intelligence and analytics, driving successful data initiatives.

Tip: Choosing a data integration platform with strong automation and security features ensures that enterprise data architecture remains scalable, efficient, and secure.

Organizations recognize that strong governance and compliance form the foundation of a reliable data architecture strategy. They create clear data policies and standards to guide consistent management practices. Data governance teams assign stewardship roles, empowering employees to protect and manage data assets. These roles ensure accountability and foster a culture of responsibility across departments.

Data governance establishes a structured approach to managing data. By integrating compliance requirements into governance policies, organizations ensure that data aligns with legal and industry standards. Regular audits help maintain compliance and uncover gaps in data management practices. Risk assessments identify vulnerabilities in systems, allowing teams to address issues before they escalate.

Tip: Integration of governance and compliance ensures that data is handled according to legal standards and builds trust with stakeholders.

Organizations evaluate the effectiveness of governance and compliance measures through several steps:

They also conduct regular audits and risk assessments to monitor compliance. These activities help organizations adapt to changing regulations and maintain robust data governance. Integration of governance and compliance supports enterprise goals and protects sensitive data throughout its lifecycle.

Continuous monitoring and optimization play a vital role in maintaining high-performing data architecture. Organizations collect data-specific metrics such as query response times and data throughput. They set up data alerts for performance metrics, receiving notifications when abnormal behavior occurs. Teams diagnose performance issues by reviewing collected metrics and identifying bottlenecks.
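A data alert of this kind can be as simple as comparing new measurements against a baseline. The following sketch flags query response times that sit more than three standard deviations above the mean of an initial baseline window; the function name, the threshold, and the sample values are assumptions for illustration, and production monitoring tools typically use more robust methods.

```python
from statistics import mean, stdev


def find_anomalies(samples_ms, threshold_sigmas=3.0, baseline_size=5):
    """Return (index, value) pairs that exceed baseline mean + threshold_sigmas * stdev.

    The first `baseline_size` samples define normal behavior and are never flagged.
    """
    baseline = samples_ms[:baseline_size]
    limit = mean(baseline) + threshold_sigmas * stdev(baseline)
    return [
        (i, value)
        for i, value in enumerate(samples_ms)
        if i >= baseline_size and value > limit
    ]


# Hypothetical query response times in milliseconds.
response_times_ms = [120, 118, 125, 122, 119, 121, 480, 123]
alerts = find_anomalies(response_times_ms)
print(alerts)  # [(6, 480)]
```

Each flagged pair gives the position and value of the slow query, which is the starting point for diagnosing the bottleneck.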

A variety of tools support monitoring and optimization efforts. Data quality tools measure error rates and completeness. Database monitoring tools track query response times and downtime. Data security tools monitor user permissions and detect unauthorized access. Business intelligence tools provide metrics on query performance and data access. Open-source solutions offer governance and monitoring metrics at no cost.

| Tool category | Description |
|---|---|
| Data quality tools | Tools like Informatica Axon and SAP Data Services measure metrics like error rates and completeness. |
| Database monitoring tools | Tools such as SolarWinds Database Performance Analyzer monitor query response times and downtime. |
| Data security tools | Varonis DatAdvantage and Netwrix Auditor track user permissions and detect unauthorized access. |
| BI tools | Tableau, Qlik, and Microsoft Power BI provide metrics on query performance and data access. |
| Open-source tools | Apache Atlas, Apache Ranger, and Grafana offer governance and monitoring metrics at no cost. |

Teams monitor several key metrics to optimize data architecture:

| Metric | Description |
|---|---|
| Data quality | Measures accuracy, completeness, consistency, validity, and timeliness of data. |
| Data accessibility | Tracks ease and speed of user access to data, including query response times and user satisfaction. |
| Data security | Monitors access to sensitive data and unauthorized access attempts. |
| Data storage | Keeps track of storage capacity usage for current and future needs. |

Organizations also track total IT cost savings and the total cost of ownership (TCO) of the IT portfolio, since both indicate whether the architecture is reducing complexity and cost. Annual IT project costs offer a further view of how well the data architecture strategy is working.

Note: Setting up data alerts and regularly reviewing performance metrics enables teams to respond quickly to issues and optimize data architecture for enterprise growth.

Enterprise data architecture transforms how organizations approach decision-making. By providing a strategic framework, architecture ensures that data is accessible, accurate, and secure. Teams break down data silos, gaining a holistic view of operations. Strong data governance policies increase confidence in data-driven decisions. Organizations that implement data-driven decision-making within an architecture framework anticipate market trends and operational challenges more effectively.

Enterprise architects must improve how they provide operational data to colleagues. When organizations democratize data accessibility, they unlock actionable insights. Data architecture supports business intelligence, enabling leaders to respond quickly to changes in the market.

| Benefit | Description |
|---|---|
| Data accessibility | Ensures users can access relevant data easily |
| Data quality | Provides accurate and timely information |
| Data management | Supports consistent and reliable decision-making |

Data architecture plays a vital role in protecting sensitive information and maintaining compliance. Organizations integrate security measures into every stage of the data lifecycle. Architecture teams establish robust access controls, encryption protocols, and regular risk assessments. These practices safeguard data privacy and ensure compliance with regulations such as GDPR and HIPAA.

Security teams participate early in development, embedding protection into the architecture. Regular audits and employee training reinforce best practices. Data management policies align with legal standards, reducing the risk of breaches and penalties. Architecture supports ongoing compliance, building trust with customers and stakeholders.

Note: Effective data architecture not only protects data but also strengthens the organization’s reputation.

Organizations with mature enterprise architecture practices are 33% more likely to achieve their business goals. Data architecture streamlines processes, optimizes data management, and improves data quality. Teams measure operational efficiency through process optimization, compliance management, and data governance.

Much of the evidence for these benefits is anecdotal, particularly for advisory services that drive business change. Strategic guidance delivered through architecture tends to create indirect value, which is harder to measure than direct cost savings.

| Efficiency Factor | Impact on Organization |
|---|---|
| Process optimization | Faster, more reliable operations |
| Data quality | Fewer errors and improved outcomes |
| Compliance management | Reduced risk and lower costs |

Scalability and flexibility define the strength of modern data architecture. Organizations must adapt quickly to changing business needs and increasing data volumes. A robust architecture supports seamless expansion, allowing systems to handle more data without performance loss. Teams use modular design to add new data sources or applications with minimal disruption. Cloud-based solutions provide elastic resources, enabling enterprises to scale storage and processing power on demand.

Flexible data architecture empowers organizations to integrate emerging technologies. Data engineers deploy microservices and containerization to support rapid development and deployment. These approaches reduce downtime and simplify maintenance. Real-time data integration platforms, such as FineDataLink, synchronize data across multiple environments, ensuring consistent access and reliability.

Key benefits of scalable and flexible data architecture include:

Tip: Investing in scalable and flexible data architecture prepares organizations for growth and technological change.

A well-designed data architecture delivers a significant competitive advantage. Organizations leverage data to drive innovation, improve decision-making, and respond swiftly to market shifts. Centralized management and integration eliminate data silos, fostering collaboration across departments. Advanced analytics uncover trends and opportunities, supporting strategic planning.

The following table highlights real-world examples of competitive advantage gained through enterprise data architecture:

| Case Study | Background | Solution | Outcome |
|---|---|---|---|
| Company XYZ | Multinational manufacturing company facing operational challenges. | Implemented a centralized data management system and integrated legacy systems for improved efficiency. | Achieved streamlined processes, enhanced data integrity, and fostered innovation through cross-functional collaboration. |
| Organization ABC | Large financial services company with regulatory compliance challenges. | Focused on regulatory compliance and data security with advanced encryption and a robust governance structure. | Maintained high regulatory compliance, zero downtime during disasters, and grew the business by 25% year over year. |
| Company PQR | Fast-growing AI startup needing scalability and agility. | Adopted Kubernetes and microservices for modular scaling and agile methodologies for rapid feature delivery. | Scaled to support over 1000 customers, launched new products annually, and achieved an IPO within five years. |

Organizations that invest in advanced data architecture outperform competitors. They respond faster to customer demands, maintain high data quality, and ensure compliance. Data-driven strategies enable continuous improvement and long-term success.

Note: Competitive advantage stems from the ability to harness data effectively through robust architecture and innovative practices.

Enterprise data architecture empowers organizations to manage data assets with precision. Teams gain reliable access to data, which supports analytics and drives informed decisions. Strong architecture improves data quality, security, and scalability. Companies that invest in robust strategies see measurable business growth. FineDataLink offers a modern solution for integrating and transforming data from multiple sources. Leaders should evaluate current data practices and consider platforms like FineDataLink to enhance enterprise data architecture.

The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
